Fast neutrons from cosmic-ray showers can cause significant errors in supercomputers. But by measuring the scale of the problem, physicists hope not only to make such devices less prone to cosmic corruption but also to protect everything from self-driving cars to quantum computers, as Rachel Brazil finds out

In 2013 a gamer by the name “DOTA_Teabag” was playing Nintendo’s Super Mario 64 and suddenly encountered an “impossible” glitch – Mario was teleported into the air, saving crucial time and providing an advantage in the game. The incident – which was recorded on the livestreaming platform Twitch – caught the attention of another prominent gamer “pannenkoek12”, who was determined to explain what had happened, even offering a $1000 reward to anyone who could replicate the glitch. Users tried in vain to recreate the scenario, but no-one was able to emulate that particular cosmic leap. Eight years later, “pannenkoek12” concluded that the boost likely occurred due to a flip of one specific bit in the byte that defines the player’s height at a precise moment in the game – and the source of that flipping was most likely an ionizing particle from outer space.
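To see why a single flipped bit can produce such a large jump, here is a minimal Python sketch. It assumes, purely for illustration, that the height is stored as a 32-bit IEEE 754 floating-point number; the helper `flip_bit` and the value `100.0` are hypothetical and not taken from the game.

```python
import struct

def flip_bit(value: float, bit: int) -> float:
    """Return `value` with one bit of its 32-bit float encoding inverted.

    Bit 0 is the least significant mantissa bit; bits 23-30 hold the exponent.
    """
    (raw,) = struct.unpack(">I", struct.pack(">f", value))
    (flipped,) = struct.unpack(">f", struct.pack(">I", raw ^ (1 << bit)))
    return flipped

height = 100.0  # hypothetical stored height
for bit in (0, 22, 24):
    print(f"bit {bit:2d} flipped: {height} -> {flip_bit(height, bit)}")
```

A flip in a low mantissa bit changes the value imperceptibly, while a flip in an exponent bit multiplies or divides it by a power of two, which is why the same physical event can be invisible or dramatic.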

The impact of cosmic radiation is not always as trivial as determining who wins a Super Mario game, or as positive in its outcome. On 7 October 2008 a Qantas flight en route from Singapore to Australia, travelling at 11,300 m, suddenly pitched down, with 12 passengers seriously injured as a result. Investigators determined that the problem was due to a “single-event upset” (SEU) causing incorrect data to reach the electronic flight instrument system. The culprit, again, was most likely cosmic radiation. An SEU bit flip was also held responsible for errors in an electronic voting machine in Belgium in 2003 that added 4096 extra votes to one candidate.

Cosmic rays can also alter data in supercomputers, which often causes them to crash. It’s a growing concern, especially as this year could see the first “exascale” computer – able to calculate more than 10¹⁸ operations per second. How such machines will hold up to the increased threat of data corruption from cosmic rays is far from clear. As transistors get smaller, the energy needed to flip a bit decreases; and as the overall surface area of the computer increases, the chance of data corruption also goes up.

Fortunately, those who work in the small but crucial field of computer resilience take these threats seriously. “We are like the canary in the coal mine, we’re out in front, studying what is happening,” says Nathan DeBardeleben, senior research scientist at Los Alamos National Laboratory in the US. At the lab’s Neutron Science Centre, he carries out “cosmic stress-tests” on electronic components, exposing them to a beam of neutrons to simulate the effect of cosmic rays.

While not all computer errors are caused by cosmic rays (temperature, age and manufacturing errors can all cause problems too), the role they play has been apparent since the first supercomputers in the 1970s. The Cray-1, designed by Seymour Roger Cray, was tested at Los Alamos (perhaps a mistake given that its high altitude, 2300 m above sea level, makes it even more vulnerable to cosmic rays).

Cray was initially reluctant to include error-detecting mechanisms, but eventually did so, adding what became known as parity memory – where an additional “parity” bit is added to a given set of bits. This records whether the sum of all the bits is odd or even. Any single bit corruption will therefore show up as a mismatch. Cray-1 recorded some 152 parity errors in its first six months (IEEE Trans. Nucl. Sci. 10.1109/TNS.2010.2083687). As supercomputers developed, problems caused by cosmic rays did not disappear. Indeed, in 2002 when Los Alamos installed ASCI Q, then the second fastest supercomputer in the world, initially it couldn’t run for more than an hour without crashing due to errors. The problem only eased when staff added metal side panels to the servers, allowing it to run for six hours.
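The parity scheme described above can be sketched in a few lines of Python. This is an illustrative toy rather than the Cray-1 circuitry; the function names and the four-bit word are assumptions made for the example.

```python
def parity_bit(bits):
    """Return 0 if the number of 1s is even, 1 if it is odd."""
    return sum(bits) % 2

def store(bits):
    """Append a parity bit to the data bits before 'writing' them."""
    return list(bits) + [parity_bit(bits)]

def check(word):
    """Return True if the stored parity bit still matches the data bits."""
    *data, stored_parity = word
    return parity_bit(data) == stored_parity

word = store([1, 0, 1, 1])
print(check(word))   # True: nothing has been corrupted

word[2] ^= 1         # simulate a single bit flip
print(check(word))   # False: the mismatch exposes the error

word[0] ^= 1         # a second flip restores the parity
print(check(word))   # True: a double flip goes unnoticed
```

The last line shows the limitation of simple parity: it detects any odd number of flips but cannot locate them, and an even number of flips passes the check.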

Cosmic chaos

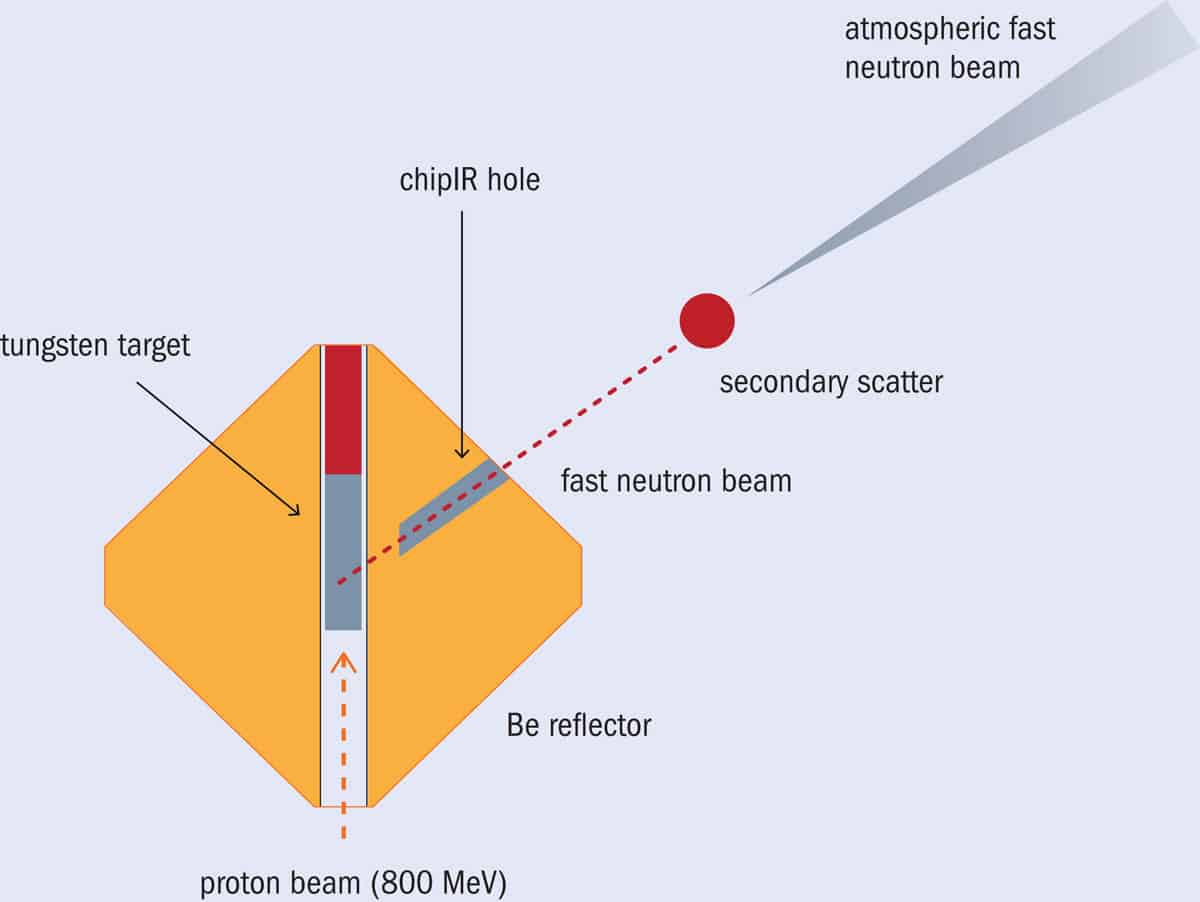

Cosmic rays originate from the Sun or cataclysmic events such as supernovae in our galaxy or beyond. They are largely made up of high-energy protons and helium nuclei, which move through space at nearly the speed of light. When they strike the Earth’s atmosphere they create a secondary shower of particles, including neutrons, muons, pions and alpha particles. “The ones that survive down to ground level are the neutrons, and largely they are fast neutrons,” explains instrument scientist Christopher Frost, who runs the ChipIR beamline at the Rutherford Appleton Laboratory in the UK. It was set up in 2009 to specifically study the effects of irradiating microelectronics with atmospheric-like neutrons.

Millions of these neutrons strike us each second, but only occasionally do they flip a computer memory bit. When a neutron interacts with the semiconductor material, it deposits charge, which can change the binary state of the bit. “It doesn’t cause any physical damage, your hardware is not broken; it’s transient in nature, just like a blip,” explains DeBardeleben. When this happens, the results can be completely unobserved or can be catastrophic – the outcome is purely coincidental.

Computer scientist Leonardo Bautista-Gomez, from the Barcelona Supercomputing Center in Spain, compares these errors to the mutations radiation causes to human DNA. “Depending on where the mutation happens, these can create cancer or not, and it’s very similar in computer code.” Back at the Rutherford lab, Frost – working with computer scientist Paolo Rech from the Institute of Informatics of the Federal University of Rio Grande do Sul, Brazil – has also been studying an additional source of complications, in the form of lower energy neutrons. Known as thermal neutrons, these have nine orders of magnitude less energy than those coming directly from cosmic rays. Thermal neutrons can be particularly problematic when they collide with boron-10, which is found in many semiconductor chips. The boron-10 nucleus captures a neutron, decaying to lithium and emitting an alpha particle.

Frost and Rech tested six commercially available devices, run under normal operating conditions and found they were all impacted by thermal neutrons (J. Supercomput. 77 1612). “In principle, you can use extremely pure boron-11” to be rid of the problem, says Rech, but he adds that this increases the cost of production. Today, even supercomputers use commercial off-the-shelf components, which are likely to suffer from thermal neutron damage. Although cosmic rays are everywhere, thermal neutron formation is sensitive to the environment of the device. “Things containing hydrogen [like water], or things made from concrete, slow down fast neutrons to thermal ones,” explains Frost. The researchers even found the weather affected thermal neutron production, with levels doubling on rainy days.

Preventative measures

While the probability of errors is still relatively low, certain critical systems employ redundancy measures – essentially doubling or tripling each bit, so errors can be immediately detected. “You see this particularly in spacecraft and satellites, which are not allowed to fail,” says DeBardeleben. But these failsafes would be prohibitively expensive to replicate for supercomputers, which often run programmes lasting for months. The option of stopping the neutrons reaching these machines altogether is also impractical – it takes three metres of concrete to block cosmic rays – though DeBardeleben adds that “we have looked at putting data centres deep underground”.
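The tripling approach is usually paired with a majority vote, so that a flip in any one copy is outvoted by the other two. The Python sketch below illustrates the idea on integer words; `majority_vote` is a hypothetical helper, not code from any flight system.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority of three copies: each output bit takes the value
    held by at least two of the three inputs."""
    return (a & b) | (a & c) | (b & c)

original = 0b1011_0010
copy_a = original
copy_b = original ^ 0b0000_1000   # one copy suffers a bit flip
copy_c = original

recovered = majority_vote(copy_a, copy_b, copy_c)
print(recovered == original)      # the corrupted copy is outvoted
```

The cost is plain from the sketch: three times the storage and extra logic on every read, which is why the approach is reserved for systems that cannot be allowed to fail.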

Today’s supercomputers do run more sophisticated versions of parity memory, known as error-correcting code (ECC). “About 12% of the size of the data [being written] is used for error-correcting codes,” adds Bautista-Gomez. Another important innovation for supercomputers has been “checkpointing” – the process of regularly saving data mid-calculation, so that if errors cause a crash, the calculation can be picked up from the last checkpoint. The question is how often to do this? Checkpointing too frequently costs a lot in terms of time and energy; but not often enough and you risk losing months of work, when it comes to larger applications. “There is a sweet spot where you find the optimal frequency,” says Bautista-Gomez.
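One way to picture the “sweet spot” is a simple cost model. The sketch below uses Young’s first-order approximation, a textbook estimate that is not attributed to anyone quoted here: the fraction of time lost is roughly the checkpoint cost divided by the interval, plus the interval divided by twice the mean time between failures. The five-minute checkpoint cost and 15-hour failure interval are hypothetical inputs.

```python
import math

def expected_overhead(interval_h, checkpoint_cost_h, mtbf_h):
    """Approximate fraction of run time lost to writing checkpoints
    plus redoing work after a failure (first-order model)."""
    return checkpoint_cost_h / interval_h + interval_h / (2 * mtbf_h)

def young_interval(checkpoint_cost_h, mtbf_h):
    """Interval that minimizes the overhead model above."""
    return math.sqrt(2 * checkpoint_cost_h * mtbf_h)

cost_h = 5 / 60   # hypothetical: five minutes to write one checkpoint
mtbf_h = 15.0     # hypothetical: one failure every 15 hours

best = young_interval(cost_h, mtbf_h)
for interval in (best / 4, best, best * 4):
    loss = expected_overhead(interval, cost_h, mtbf_h)
    print(f"checkpoint every {interval:5.2f} h -> about {loss:.1%} of time lost")
```

Checkpointing four times too often or four times too rarely both cost noticeably more than the optimum, which is the trade-off Bautista-Gomez describes.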

The fear of the system crashing and a loss of data is only half the problem. What has started to concern Bautista-Gomez and others is the risk of undetected or silent errors – ones that do not cause a crash, and so are not caught. The ECC can generally detect single or double bit flips, says Bautista-Gomez, but “beyond that, if you have a cosmic ray that changes three bits in the memory cell, then the codes that we use today will most likely be unable to detect it”.
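The behaviour Bautista-Gomez describes can be reproduced with a toy code. The sketch below assumes an extended Hamming (8,4) code, which corrects single flips and detects double flips; real memory modules use much wider codes, and the function names here are hypothetical.

```python
def encode(data):
    """Encode 4 data bits as an 8-bit extended Hamming codeword.
    Positions 1, 2 and 4 hold check bits; position 0 is an overall parity bit."""
    c = [0] * 8
    c[3], c[5], c[6], c[7] = data
    c[1] = c[3] ^ c[5] ^ c[7]
    c[2] = c[3] ^ c[6] ^ c[7]
    c[4] = c[5] ^ c[6] ^ c[7]
    c[0] = sum(c[1:]) % 2
    return c

def diagnose(word):
    """Classify a word as 'clean', 'single' (correctable) or 'double' (detected)."""
    syndrome = 0
    for position in range(1, 8):
        if word[position]:
            syndrome ^= position
    overall_odd = sum(word) % 2 == 1
    if syndrome == 0 and not overall_odd:
        return "clean"
    return "single" if overall_odd else "double"

def flip(word, *positions):
    """Return a copy of the word with the given bit positions inverted."""
    out = list(word)
    for p in positions:
        out[p] ^= 1
    return out

word = encode([1, 0, 1, 1])
print(diagnose(word))                 # clean
print(diagnose(flip(word, 5)))        # single: located and correctable
print(diagnose(flip(word, 5, 6)))     # double: detected, not correctable
print(diagnose(flip(word, 3, 5, 6)))  # single: three flips are misread
```

In the last case the decoder believes it is looking at one harmless flip, so the three corrupted data bits pass through unflagged. That is a silent error.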

Until recently, there was little direct evidence of such silent data-corruption in supercomputers, except what Bautista-Gomez describes as “weird things that we don’t know how to explain”. In 2016, together with computer scientist Simon McIntosh-Smith from the University of Bristol, UK, he decided to hunt for these errors using specially designed memory-scanning software to analyse a cluster of 1000 computer nodes (data points) without any ECC. Over a year they detected 55,000 memory errors. “We observed many single-bit errors, which was expected. We also observed multiple double-bit errors, as well as several multi-bit errors that, even if we had ECC, we wouldn’t have seen,” recalls Bautista-Gomez (SC ’16: Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis 10.1109/SC.2016.54).

Accelerated testing

The increasing use of commercial graphics processing units (GPUs) in high-performance computing is another problem that worries Rech. These specialized electronic circuits have been designed to rapidly process and create images. As recently as 10 years ago they were only used for gaming, and so weren’t considered for testing, says Rech. But now these same low-power, high-efficiency devices are being used in supercomputers and in self-driving cars, so “you’re moving into areas where its failure actually becomes critical”, adds Frost.

Rech, using Frost’s ChipIR beamline, has devised a method to test the failure rate of GPUs produced by companies like Nvidia and AMD that are used in driverless cars. Over the last decade the pair have exposed devices to high levels of neutron irradiation while running an application with an expected outcome. In the case of driverless car systems, they would essentially show the device pre-recorded videos to see how well it responded to what they call “pedestrian incidents” – whether or not it could recognize a person.

Of course, in these experiments the neutron exposure is much higher than that produced by cosmic rays. In fact, it’s roughly 1.5 billion times what you would get at ground level, which is about 13 neutrons cm⁻² hr⁻¹. “So that enables us to do this accelerated testing, as if the device is in a real environment for hundreds of thousands of years,” explains Frost. Their experiments try to replicate 100 errors per hour and, from the known neutron flux, can calculate what error rate this would represent in the real world. Their conclusion: an average GPU would experience one error every 3.2 years.
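The “hundreds of thousands of years” figure follows directly from the acceleration factor. The short Python sketch below uses only the two numbers quoted above; the function names are hypothetical and the calculation assumes the beam flux is constant.

```python
GROUND_FLUX = 13.0       # neutrons per square centimetre per hour, from the text
ACCELERATION = 1.5e9     # beam flux relative to ground level, from the text
HOURS_PER_YEAR = 24 * 365.25

def beam_flux():
    """Neutron flux in the beam, in neutrons per square centimetre per hour."""
    return GROUND_FLUX * ACCELERATION

def equivalent_field_years(beam_hours):
    """Years at ground level that deliver the same neutron dose as
    `beam_hours` spent in the beam."""
    return beam_hours * ACCELERATION / HOURS_PER_YEAR

print(f"beam flux: {beam_flux():.3g} neutrons per cm^2 per hour")
print(f"one beam hour is about {equivalent_field_years(1):,.0f} years in the field")
```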

This seems low, but as Frost points out, “If you deploy them in large numbers, for example in supercomputers, there may be several thousand, or if you deploy them in a safety-critical system, then they’re effectively not good enough.” At this error rate a supercomputer with 1800 devices would experience an error every 15 hours. When it comes to cars, with roughly 268 million cars in the EU and about 4% – or 10 million cars – on the road at any given time, there would be 380 errors per hour, which is a concern.
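These fleet figures can be checked with a few lines of arithmetic. The sketch assumes that errors on different devices are independent, that each car carries one GPU and that the rate of one error per 3.2 years applies uniformly; those simplifications are assumptions of the example.

```python
HOURS_PER_YEAR = 24 * 365.25
HOURS_BETWEEN_ERRORS = 3.2 * HOURS_PER_YEAR   # one GPU: an error every 3.2 years

def fleet_hours_between_errors(devices):
    """Average time between errors somewhere in a fleet of identical devices."""
    return HOURS_BETWEEN_ERRORS / devices

def fleet_errors_per_hour(devices):
    """Average number of errors per hour across the fleet."""
    return devices / HOURS_BETWEEN_ERRORS

print(f"1800 GPUs: one error every {fleet_hours_between_errors(1800):.1f} hours")

cars_on_road = 268e6 * 0.04   # 4% of the EU fleet
print(f"{cars_on_road:,.0f} cars: about {fleet_errors_per_hour(cars_on_road):.0f} errors per hour")
```

The results land close to the quoted 15 hours and 380 errors per hour, which shows how a rate that looks negligible for one device becomes routine at scale.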

Large scale

The continued increase in the scale of supercomputers is likely to exacerbate the problem in the next decade. “It’s all an issue of scale,” says DeBardeleben, adding that while the first supercomputer Cray-1 “was as big as a couple of rooms…our server computers today are the size of a football field”. Rech, Bautista-Gomez and many others are working on additional error-checking methods that can be deployed as supercomputers grow. For self-driving cars, Rech has started to analyse where the critical faults arise within GPU chips that could cause accidents, with a view to error correcting only these elements.

Another method used to check the accuracy of supercomputer simulations is to use physics itself. “In most scientific applications you have some constants, for example, the total energy [of a system] should be constant,” explains Bautista-Gomez. “So every now and then, we check the application to see whether the system is losing energy or gaining energy. And if that happens, then there is a clear indication that something is going wrong.”
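A toy version of this check is sketched below in Python. It simulates a frictionless oscillator, whose total energy should stay constant, and deliberately flips one bit of the stored position to mimic a cosmic-ray upset. The integrator, tolerance and choice of bit are all assumptions made for the example.

```python
import struct

def flip_bit(x: float, bit: int) -> float:
    """Invert one bit of a 64-bit float (bits 52-62 hold the exponent)."""
    (raw,) = struct.unpack(">Q", struct.pack(">d", x))
    (out,) = struct.unpack(">d", struct.pack(">Q", raw ^ (1 << bit)))
    return out

def energy(x, v):
    """Total energy of a unit-mass oscillator with unit spring constant."""
    return 0.5 * v * v + 0.5 * x * x

def step(x, v, dt):
    """Advance one time step with the velocity Verlet scheme."""
    v_half = v - 0.5 * dt * x
    x_new = x + dt * v_half
    return x_new, v_half - 0.5 * dt * x_new

def run(steps, corrupt_at=None, check_every=10, tol=1e-3, dt=0.01):
    """Return the step at which the energy check fails, or None if it never does."""
    x, v = 1.0, 0.0
    e0 = energy(x, v)
    for i in range(1, steps + 1):
        x, v = step(x, v, dt)
        if i == corrupt_at:
            x = flip_bit(x, 61)   # simulated upset in the stored position
        # Written as "not <=" so that a NaN energy also counts as a failure.
        if i % check_every == 0 and not abs(energy(x, v) - e0) <= tol * e0:
            return i
    return None

print(run(1000))                  # None: energy stays within tolerance
print(run(1000, corrupt_at=55))   # 60: caught at the next periodic check
```

The check costs almost nothing compared with the simulation itself, but it only catches corruption that changes the conserved quantity by more than the tolerance.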

Both Rech and Bautista-Gomez are making use of artificial intelligence (AI), creating systems that can learn to detect errors. Rech has been working with hardware companies to redesign the software used in object detection in autonomous vehicles, so that it can compare consecutive images and do its own “sense check”. So far, this method has picked up 90% of errors (IEEE 25th International Symposium on On-Line Testing and Robust System Design 10.1109/IOLTS.2019.8854431). Bautista-Gomez is also developing machine-learning strategies to constantly analyse data outputs in real-time. “For example, if you’re doing a climate simulation, this machine-learning [system] could be analysing the pressure and temperature of the simulation all the time. By looking at this data it will learn the normal variations, and when you have a corruption of data that causes a big change, it can signal something is wrong.” Such systems are not yet commonly used, but Bautista-Gomez expects they will be needed in the future.
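The idea of learning the “normal variations” of a simulation output can be illustrated with something far simpler than the machine-learning systems described above. The Python sketch below substitutes a running mean and standard deviation for a trained model; the class name, threshold and synthetic temperature series are all hypothetical.

```python
import math

class DriftDetector:
    """Flags values that sit far outside the spread seen so far."""

    def __init__(self, threshold=6.0, warmup=20):
        self.threshold = threshold   # how many standard deviations count as odd
        self.warmup = warmup         # samples to learn from before judging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                # running sum of squared deviations

    def observe(self, value):
        """Return True if the value looks anomalous; otherwise learn from it."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if abs(value - self.mean) > self.threshold * max(std, 1e-12):
                return True
        # Welford's online update of the mean and spread.
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)
        return False

detector = DriftDetector()
for i in range(100):
    temperature = 15.0 + 0.5 * math.sin(0.3 * i)   # synthetic, well-behaved output
    assert not detector.observe(temperature)

print(detector.observe(15.0 * 2 ** 10))   # True: an exponent-style corruption stands out
```

A detector like this only catches corruption that produces a large jump; subtle changes inside the normal range would need the richer models the researchers are developing.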

Quantum conundrum

Looking even further into the future, where computing is likely to be quantum, cosmic rays may pose an even bigger challenge. The basic unit of quantum information – the qubit – can exist not only as 0 or 1 but also in a superposition of the two, which enables parallel computation and the ability to handle calculations too complex for even today’s supercomputers. It’s still early days in their development, but IBM announced it plans to launch the 127-qubit IBM Quantum Eagle processor sometime this year.

For quantum computers to function, the qubits must be coherent – that means they act together with other bits in a quantum state. Today the longest period of coherence for a quantum computer is around 200 microseconds. But, says neutrino physicist Joe Formaggio at the Massachusetts Institute of Technology (MIT), “No matter where you are in the world, or how you construct your qubit [and] how careful you are in your set up, everybody seems to be petering out in terms of how long they can last.” William Oliver, part of the Engineering Quantum Systems Group at MIT, believes that radiation from cosmic rays is one of the problems, and with Formaggio’s help he decided to test their impact.

Formaggio and Oliver designed an experiment using radioactive copper foil, producing the isotope copper-64, which decays with a half-life of just over 12 hours. They placed it in the low-temperature ³He/⁴He dilution refrigerator with Oliver’s superconducting qubits. “At first he would turn on his apparatus and nothing worked,” describes Formaggio, “but then after a few days, they started to be able to lock in [to quantum coherence] because the radioactivity was going down. We did this for several weeks and we could watch the qubit slowly get back to baseline.” The researchers also demonstrated the effect by creating a massive two-tonne wall of lead bricks, which they raised and lowered to shield the qubits every 10 minutes, and saw the cycling of the qubits’ stability.
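The timescale Formaggio describes follows from the decay law. The sketch below assumes a half-life of 12.7 hours for copper-64, a standard value consistent with the “just over 12 hours” quoted above; the function name is hypothetical.

```python
CU64_HALF_LIFE_H = 12.7   # assumed half-life of copper-64, in hours

def remaining_fraction(hours, half_life_h=CU64_HALF_LIFE_H):
    """Fraction of the original activity left after `hours`."""
    return 0.5 ** (hours / half_life_h)

for days in (1, 3, 7, 14):
    print(f"after {days:2d} days: {remaining_fraction(24 * days):.2e} of the initial activity")
```

After three days only a few per cent of the activity remains, and after a couple of weeks it has dropped by many orders of magnitude, which matches the slow return to baseline seen in the experiment.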

From these experiments they have predicted that without interventions, cosmic and other ambient radiation will limit qubit coherence to a maximum of 4 milliseconds (Nature 584 551). As current coherence times are still lower than this limit, the issue is not yet a major problem. But Formaggio says as coherence times increase, radiation effects will become more significant. “We are maybe two years away from hitting this obstacle.”

Of course, as with supercomputers, the quantum-computing community is working to find a way around this problem. Google has suggested adding aluminium film islands to its 53-qubit Sycamore quantum processor. The qubits are made from granular aluminium, a superconducting material containing a mixture of nanoscale aluminium grains and amorphous aluminium oxide. They sit on a silicon substrate and when this is hit by radiation, phonons exchange between the qubit and substrate, leading to decoherence. The hope is that aluminium islands would preferentially trap any phonons produced (Appl. Phys. Lett. 115 212601).

Another solution Google has proposed is a specific quantum error-correction code called “surface code”. Google has developed a chessboard arrangement of qubits, with “white squares” representing data qubits that perform operations and “black squares” detecting errors in neighbouring qubits. The arrangement avoids decoherence by relying on the quantum entanglement of the squares.

In the next few years, the challenge is to further improve the resilience of our current supercomputer technologies. It’s possible that errors caused by cosmic rays could become an impediment to faster supercomputers, even if the size of components continues to drop. “If technologies don’t improve, there certainly are limits,” says DeBardeleben. But he thinks it’s likely new error-correcting methods will provide solutions: “I wouldn’t bet against the community finding ways out of this.” Frost agrees: “We’re not pessimistic at all; we can find solutions to these problems.”