Faulty components are usually rejected in the manufacture of computers and other high-tech devices. However, Damien Challet and Neil Johnson of Oxford University say that this need not be the case. They have used statistical physics to show that the errors from defective electronic components or other imperfect objects can be combined to create near-perfect devices (D Challet and N Johnson 2002 Phys. Rev. Lett. 89 028701).

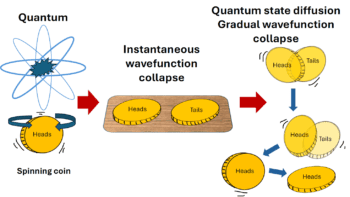

Most computers are built to withstand the faults that develop in some of their components over the course of the computer’s lifetime, although these components initially contain no defects. However, many emerging nano- and microscale technologies will be inherently susceptible to defects. For example, no two quantum dots manufactured by self-assembly will be identical. Each will contain a time-independent systematic defect compared to the original design.

Historically, sailors have had to cope with a similar problem – the inaccuracy of their clocks. To get round this, they often averaged the readings of several clocks so that the individual errors would more or less cancel out.

Similarly, Challet and Johnson consider a set of N components, each with a certain systematic error – for example, the difference between the actual and registered current in a nanoscale transistor at a given applied voltage. They calculated the effect of combining the components and found that the best way to minimize the error is not to use all N components but to select a well-chosen subset of them. They worked out that, when the number of components is large, the optimum size of this subset is N/2.
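The idea of picking a well-chosen subset can be illustrated with a minimal sketch. It assumes, by analogy with the sailors' averaged clocks, that the aggregate error of a combination is the mean of its components' errors; the function names `aggregate_error` and `best_subset` are hypothetical, and the exhaustive search is an illustration only, not necessarily the method used in the paper.

```python
from itertools import combinations


def aggregate_error(errors):
    """Magnitude of the mean error of a group of components (assumed model)."""
    return abs(sum(errors) / len(errors))


def best_subset(errors):
    """Try every non-empty subset and return (indices, aggregate error)
    for the one whose mean error is closest to zero."""
    best_idx, best_err = (), float("inf")
    n = len(errors)
    for size in range(1, n + 1):
        for idx in combinations(range(n), size):
            err = aggregate_error([errors[i] for i in idx])
            if err < best_err:  # strict: keep the first subset found on ties
                best_idx, best_err = idx, err
    return best_idx, best_err


# Four components with systematic errors in arbitrary units.
component_errors = [3, -1, -2, 5]
chosen, error = best_subset(component_errors)
print(chosen, error)
```

In this toy batch no single component is error-free, yet the first three components together cancel exactly, while using all four leaves a residual error. The search visits every subset, so its cost doubles with each extra component and it is practical only for small batches.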

On this basis, the researchers say that it should be possible to generate a continuous output of useful devices using only defective components. To find the optimum subset from each batch of defective devices, all of the defects can be measured individually and the minimum-error combination calculated with a computer. Alternatively, components can be combined through trial and error until the aggregate error is minimized. Once the optimum subset has been selected, fresh components can be added to replenish the original batch and the cycle started over again.
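The select-and-replenish cycle described above can be sketched as a small simulation. This follows the "measure every defect and compute" route rather than trial and error. Several things here are assumptions made purely for illustration: component errors are drawn from a Gaussian distribution, the aggregate error of a combination is the mean of its components' errors, and the names `select_subset` and `produce_devices` are hypothetical.

```python
import random
from itertools import combinations


def select_subset(errors):
    """Exhaustively find the subset whose mean error is closest to zero.
    Returns (indices, magnitude of the mean error)."""
    best_idx, best_err = (), float("inf")
    n = len(errors)
    for size in range(1, n + 1):
        for idx in combinations(range(n), size):
            err = abs(sum(errors[i] for i in idx) / size)
            if err < best_err:
                best_idx, best_err = idx, err
    return best_idx, best_err


def produce_devices(batch_size, cycles, rng):
    """Build one device per cycle from a batch of defective components,
    topping the batch back up with fresh components after each cycle."""
    # Assumed error model: zero-mean Gaussian defects with unit spread.
    batch = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
    device_errors = []
    for _ in range(cycles):
        idx, err = select_subset(batch)
        device_errors.append(err)
        used = set(idx)
        # Remove the components that went into the device...
        batch = [e for i, e in enumerate(batch) if i not in used]
        # ...and replenish the batch to its original size.
        batch += [rng.gauss(0.0, 1.0) for _ in range(batch_size - len(batch))]
    return device_errors


rng = random.Random(0)  # fixed seed so the run is repeatable
for cycle, err in enumerate(produce_devices(batch_size=10, cycles=5, rng=rng), 1):
    print(f"device {cycle}: aggregate error {err:.6f}")
```

Because single components count as subsets, each device built this way is never worse than the best individual component in its batch, and it is usually much better.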

Challet and Johnson point out that this selection process, together with the wiring of the components, will add to the overall cost of making the device. But they believe that these extra costs are likely to be outweighed by the fact that defective components can be produced cheaply en masse. Hewlett-Packard, for example, has already built a supercomputer – known as Teramac – from partially defective conventional components using adaptive wiring.

“Our scheme implies that the ‘quality’ of a component is not determined solely by its own intrinsic error,” write the researchers. “Instead, error becomes a collective property, which is determined by the ‘environment’ corresponding to the other defective components.”