As Sydney gets ready to host the World Congress on Medical Physics and Biomedical Engineering later this month, Paula Gould reports on the state of the art in imaging technology

Medical physics has come a long way since Wilhelm Conrad Röntgen first described a “new kind of ray” back in 1895. The discovery that X-rays could be used to display the innermost workings of the human body on a photographic plate was of immediate interest to the medical community at the time. Today, over a century later, the phrase “going for a scan” can refer to any one of a multitude of different medical-imaging techniques that are used for diagnosis and treatment.

The transmission and detection of X-rays still lie at the heart of radiography, angiography, fluoroscopy and conventional mammography examinations. However, traditional film-based scanners are gradually being replaced by digital systems that are based primarily on caesium-iodide scintillators coupled to flat-panel detectors. Some systems rely on charge-coupled devices (CCDs) rather than flat panels, but the end result is the same: the data can be viewed, moved and stored without a single piece of film ever being exposed.

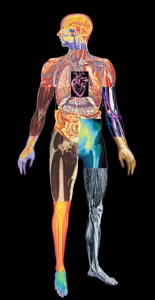

The humble X-ray also forms the heart of modern computed tomography (CT) systems, which can obtain a series of 2D “slices” through the body. A whole host of other physics-based techniques or “modalities” are also routinely used to look inside the body without the need for a scalpel. Single photon emission CT (SPECT) and positron emission tomography (PET) rely on the properties of radioactive isotopes, while magnetic resonance imaging (MRI) exploits the well-known principles of nuclear magnetic resonance (NMR), and is the starting point for functional MRI (fMRI). Last but by no means least, ultrasound uses high-frequency sound waves in a similar manner to submarine sonar to produce images of tissue and blood vessels.

Data explosion

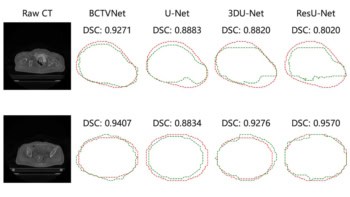

Whatever the modality, a typical scan now yields more information than ever before. This is particularly true for “multislice” CT scanners in which the X-ray source and the detectors are both rotated around the body of the patient. The multislice approach has improved the spatial resolution of CT scans from about 6 mm to better than 1 mm, which is making it increasingly attractive for a wider range of diagnostic and screening tests.

“Lots of new clinical applications have opened up due to the fast-scan capability of state-of-the-art CT,” says John Boone, professor of radiology and biomedical engineering at the University of California Davis Medical Center in Sacramento. “Multislice CT has given rise to the realistic prospect of CT coronary angiography, for instance, and there is also a large clinical trial in the US looking at the feasibility of using it to screen for lung cancer.”

However, the rise in data per CT scan, and the greater use of the machines, mean that many hospitals are experiencing a dramatic – often exponential – increase in the amount of data they generate. Computer networks in hospitals – and existing software for the manipulation of images – are struggling to cope with the sheer volume. The end result is that there is often a delay before a doctor can view the results of a scan.

The problem of data overload is only going to get worse according to Sébastien Ourselin, project leader for medical imaging and a member of the e-Health team at CSIRO Telecommunications and Industrial Physics in Epping, Australia. “The issue will not be with the quality of data,” says Ourselin. “Rather, the question will be ‘How can I extract the information that is relevant to me from these hundreds of megabytes of data in just one or two minutes so that I can make a clinical diagnosis?’. Can you expect a radiologist to wait two hours to get a good segmentation of a heart?”
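A rough back-of-the-envelope calculation shows where those hundreds of megabytes come from. The sketch below assumes uncompressed 512 × 512 slices stored at two bytes per pixel and an idealized 100 Mbit/s hospital link; these figures, and the function names, are illustrative assumptions rather than numbers from any particular scanner or network.

```python
def ct_study_size_bytes(n_slices, rows=512, cols=512, bytes_per_pixel=2):
    """Uncompressed size of a CT study. The default matrix size and
    pixel depth are assumed, illustrative values."""
    return n_slices * rows * cols * bytes_per_pixel


def transfer_time_seconds(size_bytes, link_megabits_per_s):
    """Ideal transfer time over a network link, ignoring protocol
    overhead and competing traffic."""
    return size_bytes * 8 / (link_megabits_per_s * 1e6)


# A hypothetical 600-slice multislice study
size = ct_study_size_bytes(n_slices=600)
print(f"Study size: {size / 1e6:.0f} MB")
print(f"Transfer time at 100 Mbit/s: {transfer_time_seconds(size, 100):.0f} s")
```

Under these assumptions a single 600-slice study comes to roughly 315 MB and takes about 25 s to move across the network, before any segmentation or rendering has even begun.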

Andrew Todd-Pokropek, head of the medical-physics department at University College London, believes that time would be saved if medical physicists could ensure that all the data leaving the scanner were usable. “There should be ways of controlling data acquisition during the scan to optimize the quality of the data produced,” he says. Todd-Pokropek would like to see “intelligent acquisition” systems that allow for the effects of patient motion. Indeed, even if patients remain absolutely still while being scanned, a beating heart or the movements associated with breathing can sometimes distort the final images.

Combining strengths

Another trend in medical imaging involves combining the power of different imaging techniques. For instance, there is considerable interest in using a combination of multislice CT and PET in cancer imaging. PET is especially adept at mapping areas of metabolic hyperactivity, which is a strong indication that cancer is present, while the sub-millimetre resolution of multislice CT offers a clear picture of anatomical detail. Put the two together and you have an accurate means of locating a tumour and assessing its size and shape. This could prove particularly valuable when characterizing or “staging” tumours when they are first diagnosed, and then monitoring their response to treatment.

There are three options for generating a combined PET/CT scan according to Todd-Pokropek. The first is to collect the PET and CT images independently and then morph them together with powerful image-registration software. This is relatively straightforward for brain scans, but more difficult for whole-body scans. The second option involves fixing the patient to a bed and then wheeling them into the scanners in quick succession. However, it is quite a challenge to design a bed that will restrict the motion of patients to within a millimetre. The third option is to literally bolt the two scanners together in a single system. This last option has found favour with the major medical-imaging manufacturers, all of whom now market such systems.
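The first of these options, software registration, can be illustrated with a deliberately simple toy. The sketch below performs translation-only registration by exhaustively searching integer shifts and keeping the one that minimizes the sum of squared differences between two small images. Real PET/CT registration is far harder: it typically has to cope with rotation, deformation and the fact that the two modalities produce very different contrast. The function names and the tiny test image are hypothetical and chosen purely for illustration.

```python
def shifted(image, dr, dc):
    """Return a copy of `image` circularly shifted by dr rows and dc columns."""
    rows, cols = len(image), len(image[0])
    return [[image[(r - dr) % rows][(c - dc) % cols] for c in range(cols)]
            for r in range(rows)]


def ssd(a, b):
    """Sum of squared differences between two equally sized images."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def register_translation(fixed, moving, max_shift=3):
    """Find the integer (row, col) shift that, applied to `moving`,
    best matches `fixed` within +/- max_shift pixels."""
    candidates = [(dr, dc)
                  for dr in range(-max_shift, max_shift + 1)
                  for dc in range(-max_shift, max_shift + 1)]
    return min(candidates, key=lambda s: ssd(fixed, shifted(moving, *s)))


# Toy "anatomy": a small asymmetric bright blob on an 8 x 8 grid
fixed = [[0.0] * 8 for _ in range(8)]
fixed[2][3], fixed[3][3], fixed[3][4] = 1.0, 2.0, 1.0

# Simulate a patient who has moved 2 pixels down and 1 pixel left
moving = shifted(fixed, 2, -1)

best = register_translation(fixed, moving)
aligned = shifted(moving, *best)
print("Correcting shift:", best)
```

The search recovers the shift that undoes the simulated motion. An exhaustive search like this scales poorly with image size and search range, which is one reason why practical registration software relies on optimization and more robust similarity measures.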

“Multimodality image fusion is the future of nuclear medicine,” says Todd-Pokropek. “It is absolutely critical that imaging data from nuclear medicine is combined with that from other modalities to extract the most information.” Indeed, Todd-Pokropek predicts that PET/CT scanners will eventually replace single PET systems. This would be part of a general move towards blending physiological information with the anatomical information provided by traditional, diagnostic images.

David Townsend, senior PET physicist at the University of Pittsburgh Medical Center and co-inventor of the first working PET/CT scanner, agrees. “Three years ago we were taking an hour to do a PET study,” says Townsend. “Now we see that the promise of five-minute whole-body scans for PET/CT is within reach.”

So what other combinations of techniques might prove useful? The jury is out on the merits of combined PET/MRI – even if the challenges of fitting a PET scanner within the small bore of an MRI unit and then getting it to work in a fairly strong magnetic field could be overcome.

A more promising approach is the merger of fMRI with electroencephalogram (EEG) studies to examine brain activity. This marriage would benefit from MRI’s high spatial resolution and the excellent temporal resolution of EEG studies. “There is a great potential for combined imaging using fMRI and EEG,” says Karl Friston, head of the functional imaging laboratory at University College London. Friston cites studies of epilepsy as a good example. “fMRI is a vital tool in understanding the genesis and nature of epileptic disorders,” he says, “and the complementary spatial and temporal properties of fMRI and EEG should finesse many of our observations about brain responses.”

Animal magic

While many medical physicists are working on ways to combine different imaging modalities, others are busy shrinking their scanners to study an entirely new class of subjects – laboratory mice. This growth of interest in rodent imaging has been triggered by a shift in interest (and research funds) towards gene therapy and other targeted treatments in clinical medicine (see “Watching biology in action”).

Most of the approaches being tested involve attaching a “normal” gene to a virus carrier and injecting it into an infected region in which the cells contain “abnormal” genes that carry disease. The general idea is that the normal genes replace the abnormal ones. The success or otherwise of the therapy can be assessed by attaching another agent to the carrier virus that will show up when a particular imaging technique is used, which allows researchers to monitor the physiological activity at the target location after therapy.

“There is a tremendous amount of activity in relation to small-animal scanners as a result of interest in gene therapy and genetic manipulation,” says Todd-Pokropek. “There is small-animal MRI, small-animal CT, small-animal PET and small-animal PET/CT, as well as systems based on optical fluorescence. Just about everybody is jumping onto this and trying to get small-animal imaging systems because they need them to do their genetic experiments.”

However, designing a scanner for a mouse is not as straightforward as it may seem, according to Ron Price, director of radiological sciences and professor of physics at Vanderbilt University Medical Center in Nashville. For example, miniaturizing a CT scanner means that the individual volume elements used to build up each image become smaller, which tends to reduce the resolution of the scan. This means that the only way to achieve adequate resolution is to increase the duration of the scan and/or the X-ray dose.

“Unlike human studies, where we can now produce a CT image in a fraction of a second, it will take several minutes for an animal,” says Price. “That is a problem for us right now.”
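The trade-off Price describes can be illustrated with simple counting statistics. The sketch below assumes that X-ray detection follows Poisson statistics, so that relative noise falls as one over the square root of the number of detected photons. It also adopts a commonly quoted rule of thumb that, for isotropic voxels at fixed image noise, the required dose grows as the inverse fourth power of the voxel side. The exponent and the function names are assumptions made for illustration, not figures from Price's group.

```python
import math


def relative_noise(detected_photons):
    """Relative statistical noise for a Poisson-distributed photon count."""
    return 1 / math.sqrt(detected_photons)


def dose_multiplier(voxel_shrink_factor, exponent=4):
    """Factor by which dose (or scan time at fixed tube output) must rise
    to keep noise constant when the voxel side shrinks by the given factor.
    The default exponent of 4 is an assumed rule of thumb."""
    return voxel_shrink_factor ** exponent


# Noise for 10,000 detected photons versus 100 detected photons
print(relative_noise(10_000), relative_noise(100))

# Halving the voxel side, under the assumed fourth-power scaling
print(dose_multiplier(2))
```

Under this assumed scaling, merely halving the voxel side calls for a sixteen-fold increase in dose or scan time, which gives a sense of why imaging a mouse at proportionally finer resolution takes minutes rather than a fraction of a second.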

Surgical solutions

Biologists are not the only group pressing for a new generation of tailor-made medical scanners. Physicists and engineers are also trying to modify the design of traditional, fixed imaging systems so they can be used during certain surgical procedures. The acquisition of clinical images in the operating theatre allows surgeons to operate via thin wire catheters, which is less invasive than other approaches. Surgeons use the images obtained during the operation to ensure that the catheters and other devices are in the correct place, and also to check that the treatment has been successful.

Ultrasound and X-ray fluoroscopy have long been used in this role, though both have certain drawbacks. The accuracy of the former is highly dependent on the skills of the person who is performing the scan, while the latter exposes patients to ionizing radiation. Now attention is focusing on the potential of MRI-guided procedures, particularly in neurosurgery and cardiac operations where accuracy and the risks from X-ray exposure are of prime importance. “Interventional MRI is going to be an expanding area,” says Price.

The MRI scanners used for surgical applications must have an open magnet structure to give the doctors access to their patient. However, commercially available open MRI scanners tend to have magnetic field strengths of between about 0.2 and 0.6 T, compared with 1-3 T for most closed-bore MRI scanners. This leads to a lower signal-to-noise ratio and correspondingly poor spatial resolution.
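To get a feel for the penalty, the sketch below assumes the widely used approximation that MRI signal-to-noise ratio scales roughly linearly with field strength, and that averaging N repeated acquisitions improves it by the square root of N. Under those assumptions, which ignore coil design, pulse sequence and tissue relaxation effects, it estimates how much longer a low-field open scanner would need to scan to match a closed-bore system. The function names are hypothetical.

```python
def relative_snr(field_tesla, reference_tesla):
    """SNR relative to a reference scanner, assuming SNR is proportional
    to field strength (an approximation)."""
    return field_tesla / reference_tesla


def scan_time_factor(field_tesla, reference_tesla):
    """Extra averaging time needed to match the reference SNR, assuming
    SNR grows as the square root of the number of averages."""
    return (reference_tesla / field_tesla) ** 2


# A 0.5 T open scanner compared with a 1.5 T closed-bore scanner
print(f"Relative SNR: {relative_snr(0.5, 1.5):.2f}")
print(f"Scan-time factor: {scan_time_factor(0.5, 1.5):.0f}x")
```

With these simplifications a 0.5 T open magnet delivers about a third of the signal-to-noise ratio of a 1.5 T system, and would need roughly nine times the acquisition time to make up the difference by averaging alone.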

One way to improve the image quality would be to increase the field strength, but this is difficult with an open-bore set-up, says Price. “In open magnets you are forced to spread things out,” he says. “Think of a typical magnet that you might make at home by wrapping wires around an iron rod or a nail – the field is highest when the windings are close together. But if you spread the wires out to give the surgeon access to the patient, you are going to have to compensate by increasing the current.”

The problem is that the current can become too high for the wires to carry – even if superconducting wires are used. Price is optimistic that clever engineering will eventually solve the problem, but adds that the scanners are going to be “pretty expensive”.
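Price's home-made magnet analogy can be put into numbers using the textbook formula for an ideal, long, air-core solenoid, B = μ0 n I, where n is the number of turns per metre and I is the current. The sketch below is only that idealization: real open MRI magnets use split coils or iron yokes and do not follow this formula exactly, and the winding densities shown are made-up values.

```python
import math

MU_0 = 4 * math.pi * 1e-7  # vacuum permeability in T m/A


def solenoid_field(turns_per_metre, current_amps):
    """Field inside an ideal long air-core solenoid, B = mu0 * n * I."""
    return MU_0 * turns_per_metre * current_amps


def current_for_field(target_tesla, turns_per_metre):
    """Current needed to reach a target field in the same ideal solenoid."""
    return target_tesla / (MU_0 * turns_per_metre)


# Hypothetical winding densities: tightly wound versus spread out
tight = current_for_field(0.5, turns_per_metre=1000)
spread = current_for_field(0.5, turns_per_metre=500)
print(f"Tight windings:  {tight:.0f} A")
print(f"Spread windings: {spread:.0f} A")
```

In this idealized picture, halving the winding density doubles the current needed to hold the same field, which is the compensation Price refers to.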

As hospitals wait for the arrival of truly high-field open MRI scanners, so-called XMR scanners might provide a compromise. An XMR device consists of an X-ray scanner and a closed-bore MRI machine. The scanners are configured so that patients can be moved quickly from one to the other, allowing high-field MRI to be used in the middle of surgical procedures. XMR systems are already undergoing trials for intricate heart operations.

Surgeons can also benefit from interactive software tools that allow them to manipulate information from previously acquired images. “It is becoming fairly routine for surgeons to have access to a whole series of volumetric images that he or she is able to co-register with the patient while they are on the operating table,” says Price, who is currently involved in a project to develop a “smart image recall device” that will be able to handle multiple sets of data from different scans.

Good vibrations

Ultrasound is another technique that could be used to provide functional data for many clinical applications, according to Price. It is already widely used to monitor changes to blood flow, courtesy of the Doppler effect, and could prove valuable in monitoring so-called anti-angiogenesis cancer therapies, which attack the growth of new blood vessels in tumours.

“Blood flow is a very important parameter in cancer imaging,” says Price. “Ultrasound is very sensitive to blood flow, so it has a lot of potential in this area. Unfortunately it is mostly used as a qualitative modality, and a lot of effort needs to be put into making it more quantitative.” Price adds that the potential of ultrasound is sometimes overlooked as attention focuses on the seemingly more hi-tech – and certainly more expensive – approaches such as MRI, PET and CT.
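The quantitative core of Doppler ultrasound is the standard relation between the frequency shift and the blood velocity, Δf = 2 f0 v cos θ / c, where f0 is the transmitted frequency, θ is the angle between the beam and the flow, and c is the speed of sound. The sketch below assumes the conventional soft-tissue value of 1540 m/s for c; the probe frequency, shift and angle in the example are illustrative, not measured, values.

```python
import math

SPEED_OF_SOUND_TISSUE = 1540.0  # m/s, conventional soft-tissue value (assumed)


def doppler_shift(f0_hz, velocity_m_s, angle_deg, c=SPEED_OF_SOUND_TISSUE):
    """Doppler frequency shift for blood moving at the given velocity."""
    return 2 * f0_hz * velocity_m_s * math.cos(math.radians(angle_deg)) / c


def blood_velocity(f0_hz, shift_hz, angle_deg, c=SPEED_OF_SOUND_TISSUE):
    """Invert the Doppler relation to recover velocity from a measured shift."""
    return c * shift_hz / (2 * f0_hz * math.cos(math.radians(angle_deg)))


# Hypothetical measurement: 5 MHz probe, 1.3 kHz shift, 60 degree beam angle
v = blood_velocity(5e6, 1300.0, 60.0)
print(f"Estimated blood velocity: {v:.2f} m/s")
```

The cosine term hints at why the technique is hard to make quantitative: as the beam angle approaches 90°, small errors in the assumed angle produce large errors in the estimated velocity.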

Paul Carson, director of ultrasound research at the University of Michigan, agrees: “Ultrasound lends itself to targeted therapy and imaging.” Carson is also keen to improve the performance of ultrasound by using “contrast agents” to enhance the quality of images. Ultrasound contrast agents are suspensions of tiny gas bubbles that are just a few microns in diameter. Exposure to ultrasonic frequencies causes the microbubbles to resonate, which increases the strength of the reflected signal.

Very intense pulses can cause the bubbles to burst, boosting the signal still further. Data from advanced ultrasound techniques like these could also be fused with CT data in yet another variation on the multimodality imaging theme, says Todd-Pokropek.
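The reason micron-sized bubbles respond so strongly to diagnostic ultrasound can be seen from the classic Minnaert formula for the resonance of a free gas bubble in a liquid, f = (1 / 2πR) √(3γP/ρ). The sketch below evaluates it for an air-filled bubble in water at atmospheric pressure. It neglects surface tension, viscosity and the stabilizing shell of real contrast agents, all of which shift the resonance at these sizes, so the numbers are order-of-magnitude estimates under stated assumptions.

```python
import math


def minnaert_frequency(radius_m, gamma=1.4, pressure_pa=101_325.0,
                       density_kg_m3=1000.0):
    """Resonance frequency (Hz) of a free gas bubble in a liquid.
    Defaults assume air (gamma = 1.4) in water at 1 atm."""
    return math.sqrt(3 * gamma * pressure_pa / density_kg_m3) / (
        2 * math.pi * radius_m)


# Bubbles a few microns in diameter (radii of 1 to 3 microns)
for radius_um in (1.0, 1.5, 3.0):
    f = minnaert_frequency(radius_um * 1e-6)
    print(f"R = {radius_um} um -> {f / 1e6:.2f} MHz")
```

For these radii the estimate falls in the low-megahertz range, the same range used by clinical ultrasound scanners, and the resonance frequency is inversely proportional to the bubble radius.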

Other researchers are working on ways to glean more information on tissue behaviour from ultrasound scans, again with an emphasis on measurement rather than qualitative mapping. “Medical physicists and others have developed new methods of quantifying tissue characteristics, which at the very least have led to improvements in image quality and resolution,” says Carson. The bubble-bursting mechanism is also being investigated as a possible method for targeted drug delivery.

Outlook

The past century has seen medical imaging emerge as a powerful method for diagnosing disease, and also for monitoring treatment. Researchers are now striving to enhance the clarity of images, collect more quantitative data, monitor physiological processes, merge existing techniques and find more intelligent ways of displaying and using the information generated. But the greatest challenge could be ensuring that their hi-tech solutions have some real benefit to patients.

“The goal is to make the quality of medical images clinically acceptable,” says David Townsend. “If scanning for three times as long means you get a beautiful image for marketing, it does not mean it has any more diagnostic significance.”

Further information

Wanted: multi-talented physicists

The growing interest in ever-more-sophisticated imaging systems has fuelled job prospects for medically minded physicists, many of whom are now tailoring their training accordingly. David Townsend of the University of Pittsburgh Medical Center notes the growing trend for graduates to enrol on dedicated medical-imaging courses, prior to applying for research posts.

Townsend contrasts this route with his own path to a successful career in scanner design. Having trained as a nuclear physicist in the UK, he spent 10 years at the CERN particle-physics lab before deciding to apply his knowledge of particle detectors to medical scenarios. However, postdoctoral researchers with similar experience now face tougher competition for places in medical-imaging research. “I give talks to high-energy physics groups, and then a line of people ask me how I got into the field,” he says. “But nowadays, if I am looking to recruit a physicist, I can find someone with specific experience in medical imaging. It is harder for high-energy or nuclear physicists to get into the field.”

Entrants to the field should brush up on computer programming, according to John Boone of the University of California Davis Medical Center. Commercially available software tools are not yet sufficiently sophisticated to analyse the quality of digital images, he says, so medical physicists must be prepared to pitch in themselves. “In my opinion, having good computer coding skills is an absolute necessity to becoming a relevant medical physicist in radiology,” he says.

A thorough grounding in biology is also important, says Ron Price of Vanderbilt University Medical Center, who used to find it difficult to communicate effectively with colleagues on some collaborative projects before he took a course in molecular biology. “In the main, we lack the vocabulary and background to communicate with some of these investigators right now,” he says.

But prospective candidates should not be put off, because the supply of multi-talented physicists has not yet matched demand and there are jobs aplenty. “There is even more work at the moment than ever before,” adds Andrew Todd-Pokropek, head of the medical-physics department at University College London. “We have trouble recruiting enough people.”