As seven Italian experts stand trial on manslaughter charges for underplaying the risk of a major earthquake, Edwin Cartlidge investigates the latest in earthquake forecasting

In March 2009 a “swarm” of more than 50 small earthquakes struck within a few kilometres of the southern end of the San Andreas fault in California. Several hours after the largest of these, a magnitude-4.8 tremor that occurred on 24 March, the state’s earthquake experts held a teleconference to assess the risk of an even bigger quake striking in the following days, given the extra stress exerted on the fault. They concluded that the chances of this happening had risen sharply, to between 1 and 5%, and therefore issued an alert to the civil authorities. Thankfully, as expected, no major quake actually took place.

What happened a week later in the medieval town of L’Aquila in central Italy was very different. On 31 March a group of seven Italian scientists and engineers met up as full or acting members of the country’s National Commission for the Forecast and Prevention of Major Risks to assess the dangers posed by a swarm that had been ongoing for about four months and which had seen a magnitude-4.1 tremor shake the town the day before. The experts considered that the chances of a more powerful quake striking in the coming days or weeks were not significantly increased by the swarm, and following the meeting local politicians reassured townspeople that there were no grounds for alarm. Tragically, in the early hours of 6 April a magnitude-6.3 earthquake struck very close to L’Aquila and left 308 people dead. The seven commission members are now on trial for manslaughter, and the then head of Italy’s Civil Protection Department, who set up but was not present at the 31 March meeting, is also being investigated for the same offence.

In the wake of the L’Aquila earthquake, the Civil Protection Department appointed a group of experts known as the International Commission on Earthquake Forecasting (ICEF) to review the potential of the type of forecasting used in California. Known as short-term probabilistic forecasting, it involves calculating the odds that an earthquake above a certain size will occur within a given area and (short) time period. The technique relies on the fact that quakes tend to cluster in space and time – the occurrence of one or more tremors tending to increase the chance that other tremors, including more powerful ones, will take place nearby within the coming days or weeks.

In a report explaining its findings and recommendations, published last August, the ICEF points out that while such forecasting can yield probabilities up to several hundred times background levels, the absolute probabilities very rarely exceed a few per cent. Nevertheless, the commission believes that short-term forecasting can provide valuable information to civil authorities, and it urges Italy and all other countries in seismically active regions to use short-term forecasting models for civil protection.

Scientists have developed many such models, each of which makes slightly different assumptions about the statistical behaviour of earthquake clustering. They are now trying to work out which of these models is the most accurate, and ultimately hope to enhance the predictive power of these models as we gain a better understanding of basic earthquake physics.

“In the past there hasn’t been a lot of motivation for governments to take this short-term forecasting seriously,” says the ICEF’s chairman, Thomas Jordan of the University of Southern California, Los Angeles. “But that is changing, partly because of what happened at L’Aquila.” Jordan argues that the tragedy at L’Aquila highlights how vital it is for us to understand what the most reliable types of forecasting are so that we have the best possible information at our fingertips. But he also believes it underlines the need for governments to work out exactly how to respond to such forecasts and in particular under what conditions they should issue alarms.

Faulty matters

The development of probabilistic forecasting marks a change in strategy for earthquake scientists. Previously, seismologists had pursued deterministic prediction, which involved trying to work out with near certainty when, where and with what magnitude particular earthquakes would strike. Researchers came to realize, however, just how complex earthquakes are and how difficult it is to predict them.

Most earthquakes occur on faults separating two adjacent pieces of the Earth’s crust that move relative to each other. Normally, the faults are locked together by friction, and stresses steadily accumulate over time. But when the stress reaches breaking point and the two rock faces suddenly slide past each other, a huge amount of energy is released in the form of heat, rock fracture and earthquake-causing seismic waves. Scientists have tried to predict earthquakes on the basis that the slow build-up and sudden release of stress on any given fault occurs cyclically, with tremors of nearly identical size recurring at regular intervals. A number of factors complicate this simple picture, including the fact that a single fault can slip at different stress levels and that interactions between neighbouring faults are highly complex.

An alternative route to predicting earthquakes is to try to identify precursors – physical, chemical or biological changes triggered in the build-up to a fault rupture. Perhaps the earliest example, often heard in folklore, is the idea that animals flee an area after somehow sensing an impending quake. Other possible precursors include changes in the rates of strain or conductivity within rocks, fluctuations in groundwater levels, electromagnetic signals near or above the Earth’s surface, and characteristic foreshocks (a distinctive pattern of smaller quakes that would precede a larger quake). However, the ICEF reported that it is “not optimistic” that such precursors can be identified in the near future, and it is “not convinced” by the claims of Gioacchino Giuliani, a technician at the Gran Sasso National Laboratory near L’Aquila, who hit the headlines after claiming to have predicted the L’Aquila quake using a system based on variations in local emissions of radon gas. The commission’s scepticism rests on Giuliani’s treatment of background radon emissions and on the fact that he has yet to publish his results in a peer-reviewed journal.

A different approach altogether is to send out a warning once a quake has started, giving people a few seconds’ notice of impending ground-shaking by exploiting the fact that electronic signals travel at close to the speed of light, whereas seismic waves travel through rock at only a few kilometres per second. Japan makes use of such warning systems, but unfortunately they cannot provide accurate information on an earthquake’s magnitude, and they cannot alert people close to the epicentre because the shaking there arrives almost immediately.
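The arithmetic behind an early warning can be sketched in a few lines. This is a minimal illustration, not a model of Japan’s system: the S-wave speed of 3.5 km/s and the 5 s detection-and-broadcast latency are assumed round numbers, depth is ignored, and the function names are hypothetical.

```python
# Illustrative numbers only: assumed values, not those of any real system.
S_WAVE_SPEED_KM_S = 3.5   # assumed speed of the damaging S waves in the crust
ALERT_LATENCY_S = 5.0     # assumed time to detect, locate and broadcast an alert


def warning_time_s(distance_km: float,
                   vs: float = S_WAVE_SPEED_KM_S,
                   latency: float = ALERT_LATENCY_S) -> float:
    """Seconds of notice before strong shaking reaches a site.

    The alert is treated as arriving instantly once issued (it travels at
    close to the speed of light), so only the processing latency counts
    against it. Depth is ignored: distance_km is measured from the epicentre.
    Zero means the site is in the "blind zone" and gets no useful warning.
    """
    return max(0.0, distance_km / vs - latency)


def blind_zone_radius_km(vs: float = S_WAVE_SPEED_KM_S,
                         latency: float = ALERT_LATENCY_S) -> float:
    """Epicentral distance inside which shaking arrives before the alert."""
    return vs * latency


if __name__ == "__main__":
    for d in (10, 50, 100):
        print(f"{d:>3} km from epicentre: {warning_time_s(d):4.1f} s warning")
    print(f"blind zone radius: {blind_zone_radius_km():.1f} km")
```

With these assumed values a town 100 km away gets roughly 24 s of warning, while anywhere within about 17.5 km of the epicentre gets none, which is the limitation described above.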

Uncertain times

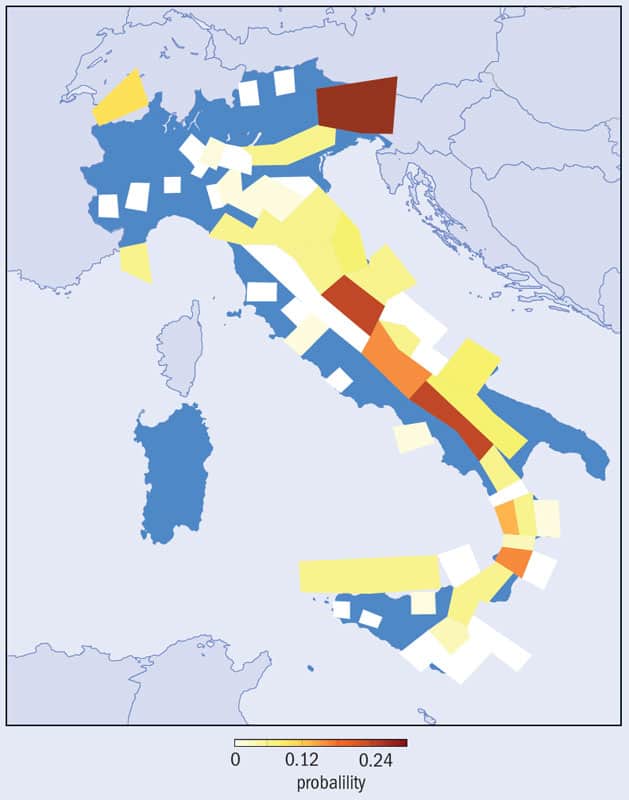

Given the difficulty of earthquake prediction and the limitations of early warnings, forecasting is the main defence against earthquakes. And the key forecasting tool is the seismic-hazard map (figure 1). These maps are based on long-term time-independent models, which reveal how often, but not when, an earthquake of a certain size is likely to occur. The models, and therefore the maps, do not tell us how the probabilities of major earthquakes change over time as a result of other quakes taking place; instead they reveal the expected spatial distribution of quakes of a certain size over a certain time period (usually on the scale of decades). The distribution in space relies on seismographic data and historical records, while the distribution by size uses a statistical relationship known as Gutenberg–Richter scaling, which says that the frequency of earthquakes falls off exponentially with their magnitude.
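Gutenberg–Richter scaling is usually written as log10 N = a − bM, where N is the annual number of quakes of magnitude M or larger. The sketch below turns that into a return period and a time-independent probability. The a value is hypothetical, b = 1 is an assumed typical value, and treating quakes as a Poisson process is our assumption about how time-independent rates become probabilities.

```python
import math

# Gutenberg-Richter scaling: log10 N(>=M) = a - b*M, where N is the annual
# number of quakes of magnitude M or larger in a region. The a and b values
# used below are hypothetical; b is typically close to 1.


def gr_annual_rate(magnitude: float, a: float, b: float = 1.0) -> float:
    """Expected number of quakes per year with magnitude >= `magnitude`."""
    return 10.0 ** (a - b * magnitude)


def poisson_exceedance(rate_per_year: float, years: float) -> float:
    """Probability of at least one such quake in `years`, assuming a
    time-independent (Poisson) process."""
    return 1.0 - math.exp(-rate_per_year * years)


A_VALUE = 4.0  # hypothetical: one M>=4 quake per year in this region

rate_m6 = gr_annual_rate(6.0, A_VALUE)           # 0.01 per year
print(f"M>=6 mean return period: {1 / rate_m6:.0f} years")
print(f"P(M>=6 within 50 years): {poisson_exceedance(rate_m6, 50):.2f}")
```

With b = 1, each step of one magnitude unit makes quakes ten times rarer, so a region with one M ≥ 4 event per year expects an M ≥ 6 event only about once a century.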

Seismic-hazard maps allow governments to tune the severity of building regulations according to an area’s seismicity (as well as other factors such as the susceptibility of the local terrain to seismic waves) and also enable insurance companies to set premiums. However, the underlying models are only as good as the data used to calibrate them. And unfortunately, seismographic and historical records generally cover only a fraction of the many hundreds of years that typically separate major quakes on most active faults.

This limitation lay behind the complete failure to anticipate the magnitude-9.0 earthquake that struck the Tohoku region in Japan in March last year, which unleashed a devastating tsunami and caused the meltdown of several reactors at the Fukushima Daiichi nuclear plant. The country’s current seismic-hazard maps provide very detailed information about earthquake probabilities across the whole country but, according to ICEF chairman Jordan, they indicated a “very low, if not zero” probability for such a powerful quake because no such quake had occurred in the Tohoku region within the past 1000 years. “They had a magnitude cut-off in that region of Japan,” Jordan points out. In other words, such a high-magnitude earthquake was never expected to strike there.

Jim Mori, an earthquake scientist at the University of Kyoto, says that Japan’s hazard maps are now being re-evaluated to “consider the possibility of magnitude-9 or larger earthquakes”. However, he believes that there are unlikely to be “drastic changes” to Japanese earthquake research, adding that the inclusion of a one-in-a-thousand-year event like that in Tohoku would probably not change the maps a great deal.

Short-term solutions

To calculate how the probability of a major earthquake changes in time by accounting for the occurrence of other quakes, researchers have developed different kinds of time-dependent forecasting models. Some of these models make forecasts for the long term, i.e. over periods of several decades. The simplest form of these models assumes that the time of the next earthquake on a particular fault segment depends only on the time of the most recent quake on that segment, with a repeating cycle of quakes made slightly aperiodic (to try to match the models with observations) by introducing a “coefficient of variation” into the cycle. More sophisticated versions of these models make the time to the next quake also dependent on the past occurrence of major earthquakes nearby.
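A renewal model of this kind can be sketched as follows. The article does not name the recurrence-time distribution, so we assume the Brownian passage-time (inverse Gaussian) distribution, one common choice whose aperiodicity parameter equals the coefficient of variation. The segment’s mean recurrence time, elapsed time and forecast window are made up.

```python
import math

# A renewal ("time since last quake") model. The article does not say which
# distribution is used; here we assume the Brownian passage-time (inverse
# Gaussian) distribution, one common choice, whose aperiodicity parameter is
# the coefficient of variation of the recurrence interval.


def normal_cdf(z: float) -> float:
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def bpt_cdf(t: float, mean: float, cv: float) -> float:
    """P(recurrence interval <= t) for a BPT distribution."""
    if t <= 0.0:
        return 0.0
    scale = cv * math.sqrt(mean * t)
    return (normal_cdf((t - mean) / scale)
            + math.exp(2.0 / cv ** 2) * normal_cdf(-(t + mean) / scale))


def renewal_probability(elapsed: float, window: float,
                        mean: float, cv: float) -> float:
    """P(rupture in the next `window` years | none in the `elapsed` years
    since the last one)."""
    survived = 1.0 - bpt_cdf(elapsed, mean, cv)
    return (bpt_cdf(elapsed + window, mean, cv)
            - bpt_cdf(elapsed, mean, cv)) / survived


def poisson_probability(window: float, mean: float) -> float:
    """Time-independent probability for the same mean recurrence time."""
    return 1.0 - math.exp(-window / mean)


# Hypothetical segment: mean recurrence 150 years, last rupture 150 years ago.
p_renewal = renewal_probability(elapsed=150, window=30, mean=150, cv=0.5)
p_poisson = poisson_probability(window=30, mean=150)
print(f"renewal: {p_renewal:.2f}, Poisson: {p_poisson:.2f}, "
      f"ratio: {p_renewal / p_poisson:.1f}")
```

For a segment that has gone its full mean recurrence time without rupturing, the conditional renewal probability here comes out a little under twice the Poisson value, consistent with the factor of about two that such models can give.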

For a fault segment that has not ruptured for something approaching its mean recurrence time inferred from historical data, these models can yield probabilities roughly twice those obtained with the time-independent models for the occurrence of major quakes. However, such long-term time-dependent models have not fared well when put to the test. In 1984, for example, the US Geological Survey estimated with 95% confidence that a roughly magnitude-6 earthquake would rupture the Parkfield segment of the San Andreas fault in central California before January 1993. This prediction was made on the basis that similar-sized earthquakes had occurred on that segment six times since 1857, the last of which took place in 1966. In the end, however, the next magnitude-6 event did not take place until 2004. Similar failures have occurred when trying to predict earthquakes in Japan and Turkey.
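A quick calculation shows why the Parkfield forecast pointed to the late 1980s. The sketch assumes the six dates usually cited for the Parkfield sequence (they are not listed in this article) and uses a naive mean-interval extrapolation, which is our simplification rather than the USGS’s actual analysis.

```python
from statistics import mean, stdev

# Years of the six Parkfield M~6 earthquakes from 1857 to 1966, as commonly
# listed in the literature. This naive mean-interval extrapolation is only an
# illustration, not the method the US Geological Survey actually used.
PARKFIELD_YEARS = [1857, 1881, 1901, 1922, 1934, 1966]

intervals = [later - earlier
             for earlier, later in zip(PARKFIELD_YEARS, PARKFIELD_YEARS[1:])]
mean_interval = mean(intervals)
expected_next = PARKFIELD_YEARS[-1] + mean_interval
cv = stdev(intervals) / mean_interval

print(f"intervals: {intervals}")
print(f"mean interval: {mean_interval:.1f} years, CV: {cv:.2f}")
print(f"naively expected next event: ~{expected_next:.0f}")
print(f"actual wait after 1966: {2004 - PARKFIELD_YEARS[-1]} years")
```

The mean interval of about 22 years points to roughly 1988, but the 38-year wait that actually followed was longer than any earlier interval in the sequence.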

The approach taken with short-term forecasting, which provides probabilities of earthquakes occurring over a matter of days or weeks, is fundamentally different. Once an earthquake has taken place and the stress on that particular fault segment has been relieved, the chances of another comparable quake on the same segment in the short term tend to be lower. But the probability of a quake on a neighbouring fault increases, thanks to the extra stress transferred to it by the original tremor.

Short-term models come in a number of different guises. In single-generation versions, such as the Short-Term Earthquake Probability (STEP) model used by the US Geological Survey to make forecasts in California, a single mainshock is assumed to trigger all aftershocks. This contrasts with multiple-generation models, such as Epidemic-Type Aftershock Sequence (ETAS) models, in which each new daughter earthquake itself spawns aftershocks.
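The difference between the two families can be sketched with an Omori–Utsu decay kernel whose productivity grows with magnitude. This is a temporal-only toy: the parameter values, the 10^(α(M − Mc)) productivity form and all names are our assumptions, and the single-generation function is merely “STEP-like”, not the USGS STEP model itself.

```python
from dataclasses import dataclass

# Hypothetical parameter values, chosen only to illustrate the two ideas.
MU = 0.1      # background rate of M >= M_C events per day
K = 0.02      # aftershock productivity constant
ALPHA = 1.0   # how fast productivity grows with parent magnitude
C = 0.01      # Omori-Utsu time offset, in days
P = 1.1       # Omori-Utsu decay exponent
M_C = 3.0     # magnitude of completeness


@dataclass
class Quake:
    time_days: float
    magnitude: float


def triggered_rate(t: float, parent: Quake) -> float:
    """Rate at time t of events triggered directly by `parent`:
    productivity grows with magnitude and decays per the Omori-Utsu law."""
    if t <= parent.time_days:
        return 0.0
    productivity = K * 10 ** (ALPHA * (parent.magnitude - M_C))
    return productivity / (t - parent.time_days + C) ** P


def etas_rate(t: float, catalogue: list[Quake]) -> float:
    """Multiple-generation (ETAS-style): every earlier quake, aftershocks
    included, adds its own triggering."""
    return MU + sum(triggered_rate(t, q) for q in catalogue)


def single_generation_rate(t: float, catalogue: list[Quake]) -> float:
    """Single-generation simplification: only the largest earlier quake
    (the mainshock) triggers; its aftershocks trigger nothing."""
    earlier = [q for q in catalogue if q.time_days < t]
    if not earlier:
        return MU
    mainshock = max(earlier, key=lambda q: q.magnitude)
    return MU + triggered_rate(t, mainshock)


sequence = [Quake(0.0, 6.0), Quake(1.0, 4.5), Quake(1.5, 4.0)]
for t in (2.0, 10.0):
    print(f"day {t:>4}: ETAS {etas_rate(t, sequence):.2f}/day, "
          f"single-generation {single_generation_rate(t, sequence):.2f}/day")
```

Because the ETAS-style rate adds a term for every aftershock, it is always at least as high as the single-generation rate for the same sequence, and the gap grows when large aftershocks occur.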

When seismic activity is high, short-term time-dependent models can yield probability values that are tens or even hundreds of times higher than those calculated using time-independent models. However, scientists do not yet know which of the many different types of short-term model is the most reliable. Jordan says that even the California Earthquake Prediction Evaluation Council, of which he is a member, does not use properly tested models but instead often relies on “back of the envelope calculations” to generate its forecasts.

Testing the data

To improve confidence in the models, in 2007 Jordan set up a programme known as the Collaboratory for the Study of Earthquake Predictability (CSEP). This provides common software and standardized procedures to test models against prospective seismic data, using independent testers, rather than the authors, to put the models through their paces. Starting from a single test centre in California, it now features centres in other parts of the world, including Italy and Japan, where faulting behaviour, and hence models, are different.

In Italy, Warner Marzocchi and Anna Maria Lombardi of the National Institute of Geophysics and Vulcanology tested an ETAS model against real aftershock data following the L’Aquila earthquake in 2009. Using all of the seismic data recorded from the 6 April mainshock onwards, the researchers updated their model daily and made aftershock forecasts until the end of September 2009. They found that the calculated distributions of aftershocks broadly tallied with those actually observed. Marzocchi has since teamed up with Jiancang Zhuang of the Institute of Statistical Mathematics in Tachikawa, Japan, to see whether the model can in principle be used to forecast mainshocks as well as aftershocks, on the basis that mainshocks are simply aftershocks that are more powerful than their parent tremors, which are then labelled as foreshocks. After comparing real data with the model, Marzocchi concluded: “I am reasonably confident that we can use this kind of model to forecast mainshocks.”

In fact, a few months after the L’Aquila quake Marzocchi and Lombardi used the same model retrospectively to see what kind of forecast could have been made of the 6 April mainshock. They found that a few hours before the quake the model would have given odds of about 1 in 1000 that a powerful tremor would strike within 10 km of L’Aquila in the following three days, up from the long-term time-independent probability of 1 in 200,000.
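Writing G for the probability gain (our notation), the ratio of the retrospective short-term probability to the long-term time-independent one works out as:

```latex
G = \frac{P_{\mathrm{short}}}{P_{\mathrm{long}}}
  = \frac{1/1000}{1/200\,000}
  = 200
```

This is the kind of gain of up to several hundred that the ICEF describes, even though the absolute short-term probability is still only 0.1%.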

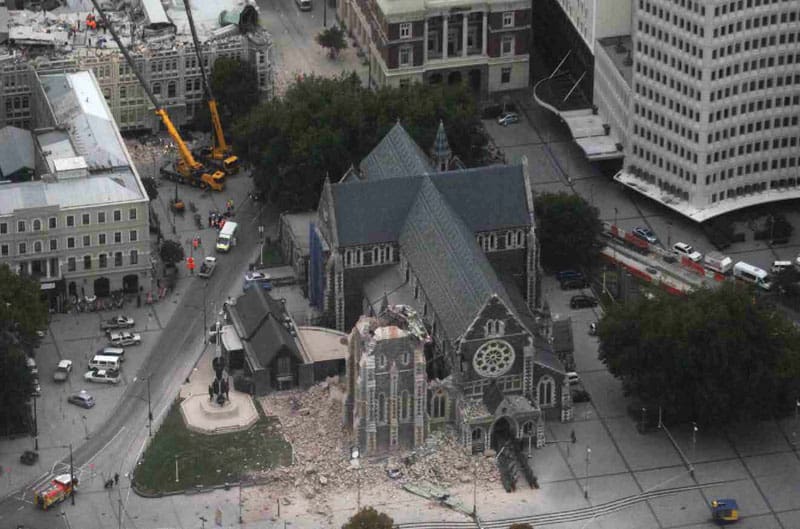

Researchers in New Zealand, meanwhile, have been using probabilistic forecasting to calculate the changing rates of aftershocks in the Canterbury region, following the magnitude-7.1 mainshock near the town of Darfield in September 2010 and the more lethal magnitude-6.2 aftershock that struck close to Christchurch in February last year. Matthew Gerstenberger and colleagues at GNS Science, a geophysics research institute in New Zealand, have used an ensemble of short-, medium- and long-term models to keep the public up to date and to revise building codes in the region. Gerstenberger, who developed the STEP model, points out that time-independent forecasting on its own would have been inadequate. “Christchurch was a moderate-to-low hazard region in the national seismic-hazard model prior to these earthquakes,” he says. “But the ongoing sequence has increased its estimated hazard.”

Dramatic changes to earthquake probabilities have also been calculated in Japan, following the Tohoku earthquake last year. Shinichi Sakai and colleagues at the University of Tokyo have worked out that the chances of a magnitude-7 or greater earthquake striking the Tokyo region have skyrocketed to 70% over the next four years. This contrasts with the Japanese government’s estimate of a 70% chance over the next 30 years. The researchers have stated that they obtain a much higher probability because they take into account the effects of a fivefold increase in tremors in Tokyo since the March 2011 event.
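One way to compare the two Tokyo figures is to convert each into an equivalent constant annual rate. The sketch assumes a Poisson process over each window; that is our simplification for comparison only, not the method used by the University of Tokyo team or the Japanese government.

```python
import math

# Assumes earthquakes follow a Poisson process with a constant rate over each
# window. This is an illustration, not the method used by either the
# University of Tokyo team or the Japanese government.


def annual_rate(prob: float, years: float) -> float:
    """Constant annual rate giving probability `prob` of >= 1 event in `years`."""
    return -math.log(1.0 - prob) / years


def window_probability(rate: float, years: float) -> float:
    """Probability of >= 1 event in `years` at a constant annual `rate`."""
    return 1.0 - math.exp(-rate * years)


tokyo_team = annual_rate(0.70, 4)    # 70% in 4 years
government = annual_rate(0.70, 30)   # 70% in 30 years
print(f"implied rates: {tokyo_team:.3f}/yr vs {government:.3f}/yr "
      f"(ratio {tokyo_team / government:.1f})")
print(f"government rate over 4 years: {window_probability(government, 4):.0%}")
```

Under this assumption the two headline figures differ by a factor of 7.5 in annual rate, and the government’s rate corresponds to roughly a 15% chance over four years.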

The limits of modelling

While ETAS- and STEP-like models can improve on the information available from time-independent forecasts, they are no panacea. In particular, they oversimplify the spatial properties of triggering by representing earthquakes as point, rather than finite-length, sources, and they ignore how close earthquakes are to major active faults. According to ICEF member Ian Main of the University of Edinburgh, incorporating fault-based information into these models might provide additional probability gain compared with time-independent calculations, given adequate fault and seismicity data. But significant improvements will come only from a better understanding of the physics of fault interactions. One particular challenge is to understand the extent to which one earthquake triggers another through the bulk movement of the Earth’s crust and how much it does so via the seismic waves it generates. “We know roughly how the statistics of earthquakes scale, and that is why we use statistical models,” says Main. “But the precise physical mechanism that leads to this scaling is underdetermined.”

Even if models can be significantly improved, they will, for the foreseeable future at least, only ever provide quite low probabilities of impending major quakes. That leaves the civil authorities responsible for mitigation actions in a difficult position. The ICEF recommends that governments try to establish a series of predefined responses, based on cost–benefit analyses, that local or national authorities could automatically enact once certain probability thresholds have been exceeded, ranging from placing emergency services on higher alert to mass evacuation. But Marzocchi points out that this will not be easy. “I can say from a scientific point of view that such and such is the probability of a certain earthquake occurring,” he says. “But acting on these low probabilities would very likely mean creating false alarms. This raises the problem of crying wolf.”
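The idea of predefined responses tied to probability thresholds can be illustrated with the classic cost–loss decision rule: take an action when the forecast probability times the loss it would avoid exceeds its cost. This rule, the list of actions and every cost and loss figure below are our assumptions for illustration, not a procedure taken from the ICEF report.

```python
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    cost: float          # cost of taking the action (arbitrary units)
    avoided_loss: float  # loss avoided if the quake strikes and the action was taken

    @property
    def threshold(self) -> float:
        """Probability above which expected benefit exceeds cost."""
        return self.cost / self.avoided_loss


def actions_to_trigger(probability: float, actions: list[Action]) -> list[str]:
    """Simple cost-loss rule: act when probability * avoided_loss > cost."""
    return [a.name for a in actions if probability * a.avoided_loss > a.cost]


# Hypothetical menu of predefined responses with made-up costs and losses.
RESPONSES = [
    Action("put emergency services on higher alert", 1, 10_000),
    Action("inspect and reinforce critical facilities", 100, 10_000),
    Action("mass evacuation", 50_000, 100_000),
]

for p in (0.001, 0.05):
    print(f"P = {p}: {actions_to_trigger(p, RESPONSES)}")
```

At a probability of 0.1%, close to the retrospective L’Aquila figure, only the cheapest response is triggered. If the forecast is well calibrated, no quake will follow 999 times in 1000 when it is triggered at that level, which is exactly the crying-wolf problem Marzocchi describes.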

Some scientists continue to believe, on the other hand, that precursors will be found. Friedemann Freund, a physicist at NASA’s Ames Research Center near San Francisco, is investigating a number of potential precursors, including electromagnetic ones, and he maintains that the combination of such precursors, even if individually they are “fraught with uncertainty”, will lead “in the not-too-distant future to a robust earthquake forecasting system” (see “Breaking new ground”). He contends that seismologists are “too proud to admit that other scientific disciplines could help them out”.

Danijel Schorlemmer of the University of Southern California, who is joint leader of the CSEP model-testing project with Jordan, disagrees. He insists that deterministic earthquake prediction will not be possible “in my lifetime” and adds that, even though he hopes precursors will be identified, “the search has been unsuccessful so far”.

For Jordan, as for many other seismologists, ensuring that buildings are made as resistant as possible remains the most important strategy for combating the destructive power of earthquakes. But he believes that short-term probabilistic forecasting, if carried out properly, has an important role to play. “This approach is tricky,” he concedes, “because no-one can quite agree on which are the best models. So we have uncertainty on uncertainty. But can we ignore the information that they give us? The earthquakes in L’Aquila and New Zealand taught us we don’t have that luxury.”