Rebecca Holmes describes groundbreaking experiments that finally closed the long-standing loopholes in Bell tests, suggesting the end of the road for local realism. But could local realism yet live on?

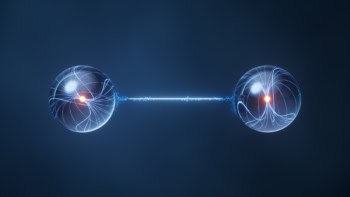

In the early 1960s the physicist John Bell dreamt up one of the most profound experimental tests ever imagined. While on sabbatical in the US from CERN, he had been contemplating the weirdness of quantum mechanics, which predicts some especially strange outcomes in experiments with entangled particles. In an intuitive world, faraway events can’t influence each other faster than the speed of light (what is known as “locality”) and properties of objects have a definite value even if we don’t measure them (what is known as “realism”). However, quantum theory makes different predictions from those one would expect from this “local realism”, and Bell devised a form of experiment, now known as a Bell test, to check whether these theoretical implications translate to the real world.


For half a century, Bell tests showed that local realism doesn’t hold up in the real world – something even the most senior of quantum physicists still struggle to grasp. But there remained two well-known loopholes in the tests that allowed us to hang on to the idea that the tests were flawed, and that the world does, after all, “make sense”. Now, thanks to work by three separate research groups published in 2015, those loopholes have been closed, and the death of local realism is generally accepted.

However, some physicists are suggesting that there could be some even more obscure loopholes at play. The question therefore is: might local realism still be alive and kicking?

The quantum cake factory

Quantum mechanics is famed among students, the public and academics alike for concepts that are difficult to get one’s head around. Locality and realism are some of the worst offenders, as is the related concept of entanglement. Explaining entanglement to students and non-physicists usually needs quantum equations, knowledge of things such as photon polarizations, and abstract proofs that even graduate students find boring. So it was that at a conference one summer in the late 1990s, physicists Paul Kwiat and Lucien Hardy came up with a real-world analogy to explain the weirdness of entanglement without any maths, calling it “the mystery of the quantum cakes”.

1 The mystery of the quantum cakes

Lucy and Ricardo explore nonlocal correlations through quantum mechanically (non-maximally) entangled cakes. Because Ricardo’s first cake (far right) rose early, Lucy’s cake (far left) tastes good. Redrawn from American Journal of Physics 68 33 with the permission of the American Association of Physics Teachers.

Here’s the story as Kwiat, who is now my graduate adviser, told it to me. Imagine a bakery producing cakes for sale, and Lucy and Ricardo are inspectors testing the finished product. The bakery, shown in figure 1, is unusual because it has a kitchen with two doors, one on the left and one on the right, from which emerge conveyor belts (like the moving sidewalks at an airport). Cakes are sent out on the conveyor belts in little ovens, and they finish baking as they travel to Lucy (on the left) and Ricardo (on the right). The cakes are sent out in pairs, so Lucy and Ricardo always get one at the same time.

There are two tests that Lucy and Ricardo can do on the cakes. They can open the oven while the cake is still baking to see if it has risen early or not. Or they can wait until it finishes baking and sample it to see if it tastes good. They can only do one of these tests on each cake – if they wait until it finishes baking to taste it, they lose the chance to check whether it rose early, and if they check partway through baking to see if it has risen early, they disturb the cake (maybe it’s a soufflé) and they can’t test whether it tastes good later. (These two mutually exclusive tests are an example of “non-commuting measurements”, an important concept in quantum mechanics.)

Lucy and Ricardo each flip coins to randomly choose which test to do on each of their cakes. After testing cakes all morning, they get together to compare their results. Because of the coin flips, sometimes they did the same test on a pair of cakes and sometimes different tests. When they did different tests, they notice a correlation: if one cake rose early, the other always tasted good. This isn’t so strange – maybe the cakes are made from the same batter, and maybe batter that rises early always tastes good. Now, in the cases where they both happened to check their cakes early, Lucy and Ricardo find that in 9% of those tests, both cakes had risen early. So how often should both cakes taste good, when they both waited to taste them? (Go on, try to work it out.)

The answer is at least 9% of the time, right? We know that when one cake rises early, the other always tastes good, so as they both rise early 9% of the time, both cakes should taste good at least as often as they both rise early. However, Lucy and Ricardo are surprised to find that both cakes never taste good. This seems impossible – and it is, for normal cakes – but if the pairs of cakes were in a particular entangled quantum state, it could happen! Of course, physicists can’t really make entangled cakes (well, not yet), but they can make entangled photons and other particles with the same strange behaviour.

So why did we make the wrong prediction about how often both cakes must taste good? We assumed that random choices and outcomes on Lucy’s side shouldn’t affect what happens on Ricardo’s side, and vice versa, and that whether the cakes will taste good or rise early was already determined when they were put in the ovens. These seemingly obvious assumptions are together called local realism: the idea that all properties of a cake or a photon have a definite value even if we don’t measure them (realism), and the assumption that faraway events can’t influence each other, at least not faster than the speed of light (locality). In a local realistic world, both cakes have to taste good at least 9% of the time – nothing else makes sense. Observing fewer than 9% (or none at all) is evidence that at least one of the assumptions of local realism must be false.
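The local realistic bound can be checked by brute force. In the minimal Python sketch below (an illustration, not code from Kwiat and Hardy’s paper), each pair of cakes carries a hypothetical pre-set “recipe” that fixes the result of all four possible tests, and only recipes that obey the observed rule are kept. It assumes, as the argument above does, that the coin flips are independent of the recipes, so the pairs tested in each combination are a fair sample of all pairs. The names `allowed`, `strategies` and `bound_holds` are made up for this example.

```python
import random
from itertools import product

# A hypothetical pre-determined "recipe" fixes all four possible test results:
# (Lucy rises early, Lucy tastes good, Ricardo rises early, Ricardo tastes good).
def allowed(l_early, l_good, r_early, r_good):
    # Observed rule: whenever one cake rose early, the other cake tasted good.
    return (not l_early or r_good) and (not r_early or l_good)

strategies = [s for s in product([False, True], repeat=4) if allowed(*s)]

# Allowed recipes in which both cakes rise early but do not both taste good.
violations = [s for s in strategies if s[0] and s[2] and not (s[1] and s[3])]

def bound_holds(weights):
    """For a mixture of allowed recipes, check that P(both good) >= P(both early)."""
    p_both_early = sum(w for w, s in zip(weights, strategies) if s[0] and s[2])
    p_both_good = sum(w for w, s in zip(weights, strategies) if s[1] and s[3])
    return p_both_good >= p_both_early

print(len(strategies), len(violations))  # 9 0

# Try one random mixture of recipes (weights normalized to sum to 1).
rng = random.Random(1)
weights = [rng.random() for _ in strategies]
total = sum(weights)
print(bound_holds([w / total for w in weights]))  # True
```

Every allowed recipe in which both cakes rise early also has both cakes tasting good, so no mixture of recipes, whatever the weights, can make “both taste good” rarer than “both rise early”.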


This imaginary quantum bakery is a version of a Bell test – an experiment that can check whether or not we live in a local realistic world. (Some physicists, notably Einstein, had already realized that entanglement seemed to defy local realism, but it was long thought to be a philosophical question about the interpretation of quantum theory rather than something to be tested in the lab.) In the half-century since Bell’s discovery that local realism can be tested, the experiment he proposed has been carried out in dozens of labs around the world using entangled particles, most commonly photons.

Photons don’t taste good or rise early, so instead physicists usually measure some other property, such as their polarization in two different measurement bases (horizontal/vertical and diagonal/anti-diagonal, for example). Like the two cake tests, these polarization measurements are “non-commuting”. Using a particular entangled quantum state and measurement directions, the “quantum cakes” experiment has actually been performed in the lab, with results closely matching the percentages in the story. Bell tests can use other entangled states, and there are many different mathematical conditions for violating local realism, but the idea is the same. With some relatively simple optics equipment, undergraduates at the University of Illinois, US, can even do a Bell test in one afternoon for their modern physics lab.
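A small numerical model shows that quantum mechanics does allow the story’s numbers. The sketch below is an illustrative choice, not necessarily the state or settings used in the lab. Each cake is treated as a qubit. “Tastes good” is one outcome of a measurement in the {|0⟩, |1⟩} basis, and “rose early” is one outcome of a measurement in a basis rotated by an angle theta, much as two polarization directions would be. For each angle the code builds the two-qubit state that can never give the forbidden outcomes, then scans theta to make “both rise early” as likely as possible. The function name `hardy_probabilities` and the basis labels are assumptions made for this example.

```python
import numpy as np

GOOD, NOT_GOOD = np.array([1.0, 0.0]), np.array([0.0, 1.0])

def hardy_probabilities(theta):
    """Outcome probabilities in a hypothetical two-qubit model of the quantum cakes.

    'Taste' is measured in the {|0>, |1>} basis (|0> = tastes good); 'rise early'
    corresponds to the outcome cos(theta)|0> + sin(theta)|1> of a rotated basis.
    Qubit order in the Kronecker products is (Lucy, Ricardo).
    """
    c, s = np.cos(theta), np.sin(theta)
    early = np.array([c, s])
    # The (normalized) state orthogonal to |good,good>, |early,not good> and
    # |not good,early>: "never both good" and "if one rose early, the other is good".
    psi = np.array([0.0, -s, -s, c]) / np.sqrt(2 * s**2 + c**2)

    def prob(lucy, ricardo):
        # Born rule: squared overlap of the joint outcome with the state.
        return abs(np.kron(lucy, ricardo) @ psi) ** 2

    return {
        "both_good": prob(GOOD, GOOD),
        "lucy_early_ricardo_bad": prob(early, NOT_GOOD),
        "ricardo_early_lucy_bad": prob(NOT_GOOD, early),
        "both_early": prob(early, early),
    }

# Scan the rotation angle and keep the one that makes "both rise early" most likely.
thetas = np.linspace(0.0, np.pi / 2, 10_001)
best = max(thetas, key=lambda t: hardy_probabilities(t)["both_early"])
for name, p in hardy_probabilities(best).items():
    print(f"{name}: {p:.4f}")
# both_early prints as 0.0902; the other three print as 0.0000
```

The best angle gives a “both rise early” probability of about 0.0902, which agrees with the analytic value (5√5 − 11)/2, roughly 9%. The three probabilities that must vanish for the story to work come out at zero, up to rounding error. The state that does this is not maximally entangled, in line with the figure caption.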

Closing loopholes

Prior to 2015, every Bell test ever carried out was imperfect. Physicists weren’t able to rule out every “loophole” that could allow local realism to still be true even though the experimental results seem to violate it.

The first loophole can appear if not every photon or cake is measured. In the quantum cakes story, we implied that every single pair of cakes was tested. In an experiment with photons, this is never true, because there are no perfect single-photon detectors, and some fraction of the photons is always lost. This can open a loophole for local realism: if enough photons are not tested, then maybe the ones we missed would have changed the outcome of the experiment. (In the quantum cakes analogy, maybe the cakes come down the conveyor belt too fast to test all of them, so some of the pairs that were not tested might both taste good and Lucy and Ricardo wouldn’t know.) This is called the “detection loophole”, and to close it the experimenters must ensure they collect both entangled photons most of the time. In a common version of a Bell test, the minimum detection efficiency is two thirds.


A second important loophole appears if some kind of signal could travel between different parts of the experiment to create the measured correlations, without transmitting information faster than the speed of light. Long distances and quick measurements are the keys to closing this “timing” loophole. In the quantum cakes example, imagine that vibrations are transmitted down the conveyor belt, so that whoever tastes their cake first (which might taste good) always causes the other cake to collapse and taste bad. Then both cakes would never be found to taste good, without actually violating local realism. To avoid this, Lucy and Ricardo should be far enough apart that no signal could travel between them and influence their measurements, even at the speed of light. In special relativity this condition is called “space-like” separation. To rule out the possibility that the chef making the cakes could somehow influence the measurements, the two testers and the bakery itself should be space-like separated as well.
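The space-like condition comes down to simple arithmetic. Two events are space-like separated if the distance between them is greater than the distance light could cover in the time between them. The minimal sketch below treats each event as a single point in space and time. The function names are made up, and the timing values in the example are hypothetical, chosen only for illustration rather than taken from any experiment’s actual timing budget.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def light_travel_time(distance_m):
    """Time in seconds that a light signal needs to cover distance_m metres."""
    return distance_m / C

def spacelike(distance_m, time_between_s):
    """True if two events distance_m apart and time_between_s apart are space-like
    separated, i.e. not even a signal at light speed could connect them."""
    return distance_m > C * abs(time_between_s)

# Two labs 1.3 km apart (the separation of the Delft diamonds).
print(f"{light_travel_time(1300) * 1e6:.2f} microseconds")  # 4.34 microseconds
print(spacelike(1300, 3e-6))   # True: light covers only about 900 m in 3 us
print(spacelike(30, 200e-9))   # False: light covers about 60 m in 200 ns
```

In a real Bell test the comparison has to be made for specific pairs of events, such as Lucy’s coin flip and the moment Ricardo’s result is recorded. Each side’s random choice and measurement therefore has to fit inside the light travel time between the sites.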

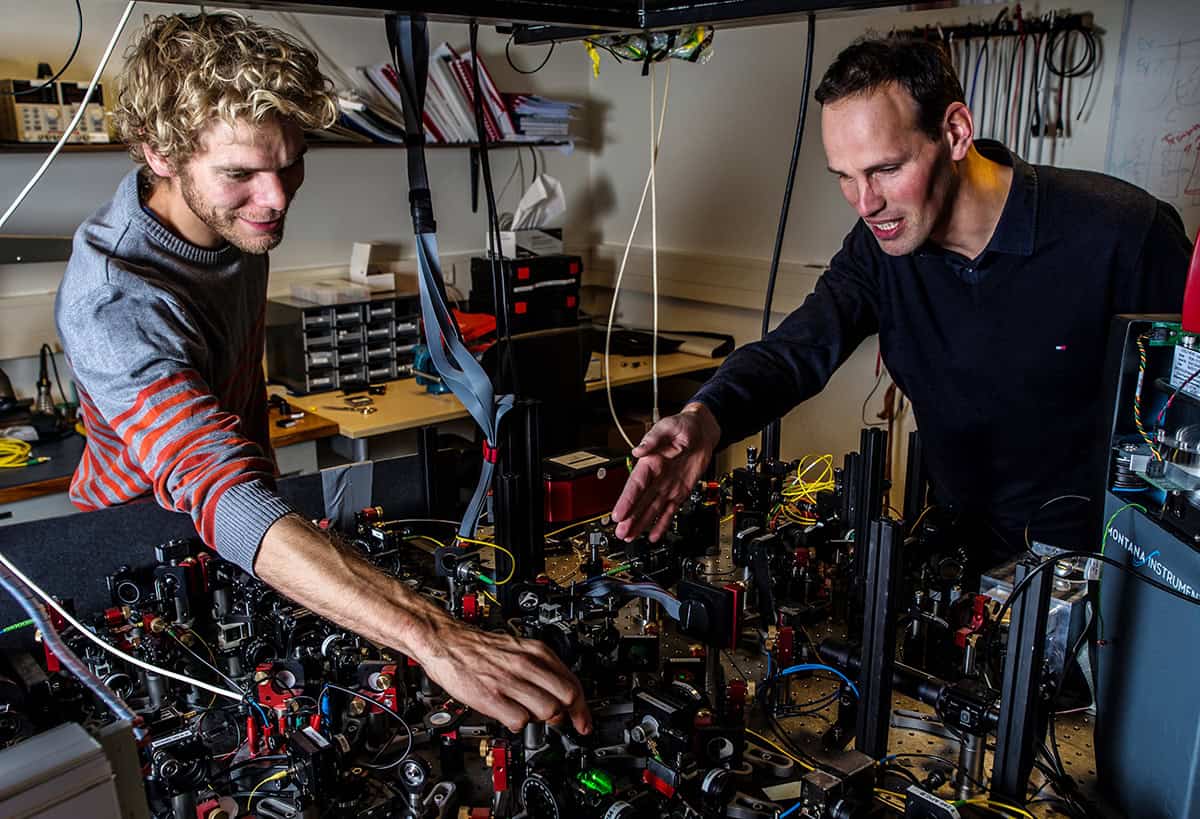

While both of these loopholes had been closed in separate experiments, closing them both in the same experiment was a challenge that remained unresolved for many decades. To successfully close the loopholes, experimentalists would need innovative experimental designs and equipment – including optical components with very low loss, fast random number generators and measurement switches, and high-efficiency single-photon detectors – and careful arrangement of the experiment in space and time. In 2015 three different groups in three countries successfully carried out loophole-free Bell tests for the first time: a team led by Ronald Hanson at Delft University of Technology in the Netherlands was first (Nature 526 682), followed by teams led by Krister Shalm at the National Institute of Standards and Technology (NIST) in Boulder, Colorado, US (Phys. Rev. Lett. 115 250402), and Anton Zeilinger at the University of Vienna, Austria (Phys. Rev. Lett. 115 250401).

The Delft team’s experiment used nitrogen vacancy centres, which are defects in diamond crystals that contain an isolated electron. The electron has spin, a quantum property that can point up, down or in a quantum superposition of the two. The electron can be made to emit a photon that is entangled with its spin direction. Using two of these nitrogen vacancy centres and combining the emitted photons with a beam splitter, the Delft team transferred this photon–spin entanglement to spin–spin entanglement between the two electrons. The spins of the two electrons could then be measured along two different directions (analogous to the two different types of cake tests or two different polarization measurements), and this process was repeated many times to carry out a Bell test. The two diamond crystals were placed in different buildings on the Delft campus, separated by about 1.3 km, so the entanglement creation and the spin measurements could be space-like separated. One advantage of this design is that the researchers were able to successfully measure the electron spins each time they were entangled, eliminating the detection loophole altogether. However, successfully entangling the two spins was difficult, and this made the experiment slow – over 18 days the researchers recorded only 245 spin measurements, still enough to violate the limit of local realism by two standard deviations.

The NIST and Vienna teams took a different approach, both using entangled photons, and a cake-factory-like design in which entangled pairs are produced and sent to two different measurement devices. To close the timing loophole, the measurements had to be far from the entangled pair source – more than 100 m at NIST and about 30 m in Vienna. (Finding suitable lab space was challenging – the Vienna experiment took place in the empty basement of a 13th-century palace.) The measurements also had to be chosen and carried out quickly, using ultrafast random number generators and polarization switches.

Advanced superconducting single-photon detectors were also critical to both experiments. The Vienna team used transition edge sensors, which use a thin piece of tungsten cooled to about 100 mK to detect photons. At this temperature, tungsten sits on the edge of its transition to superconductivity, hovering between normal resistance and the drop to zero resistance as it becomes superconducting. Even the tiny amount of energy deposited by a single photon causes a sudden and relatively large change in the metal’s resistance. The resulting change in the electrical current through the detector is measured with a superconducting quantum interference device (SQUID) amplifier. Transition-edge-sensor detectors can be up to 98% efficient, a big improvement over other detectors such as single-photon avalanche diodes, but the low temperatures and special electronics required make them large, expensive and sensitive to noise (one researcher found he could only use them at night, because they picked up interference from cell phones in the busy classrooms below the lab). The NIST team used superconducting nanowire single-photon detectors, which are slightly less efficient than transition-edge-sensor detectors, but can be used at higher temperatures and are faster and less noisy. Both the NIST and Vienna loophole-free Bell tests found solid violations of local realism, with results 7–11 standard deviations from the expected limit.

Is local realism dead?


Most physicists agree that these three experiments eliminated the most important loopholes, providing solid proof that local realism is dead. Since the first (imperfect) Bell tests in the 1970s, few people ever expected that a loophole-free test would give any other result, but the experiments of 2015 overcame remarkable technical challenges to put any doubts to rest. Loophole-free Bell tests also have some possible applications, including certifying the security of quantum cryptography systems even if the two parties can’t trust their own equipment, and verifying the independence of quantum random numbers. (NIST has plans to generate secure random numbers live and make them freely available online.)

But there is one possibility that, however unlikely, may be impossible to truly eliminate: what if the outcomes of all the measurements were determined before the entangled particles were created, or before the experiment even began, or before the experimenters were even born? If that were the case, local realism could still hold even though we seem to observe violations in Bell tests. At some point in the lifetime of the universe all the atoms and particles that make up the entangled photon sources, random number generators and measurement devices would have had a chance to “communicate”, no matter how far apart they are placed during the experiment (and indeed, according to the Big Bang model, all the matter in the universe was once in the same place at the same time). No-one has proposed exactly how this “cosmic conspiracy” would work, but it would not be forbidden by physics as we know it, as long as no information were transmitted faster than light.

One approach to this challenge is to try to narrow down how recently the parts of a Bell test experiment could have interacted. An experiment carried out earlier this year by the same Vienna group tried to do this by using light from two distant stars to choose the type of measurement on each photon in a Bell test (Phys. Rev. Lett. 118 060401). The idea is that the two stars, which are separated by hundreds of light-years, could not have exchanged information any more recently than the time it would take light to travel between them, placing a limit on how far any cosmic conspiracy must extend backwards in time. (Random fluctuations in the colour of the starlight were used as “coin flips” to decide which measurements to do on each pair of entangled photons.) In a Bell test using these random settings, the team did find a violation of local realism, and concluded that any pre-determined correlations must have been generated more than 600 years in the past. In principle, future experiments could use light from distant quasars to push this limit back millions or billions of years. These “cosmic” Bell tests are impressive experimental achievements, but they are still unable to eliminate the possibility that the local electronics used to measure the stellar photons – which could have communicated in the much more recent past – could produce correlations, which may limit their usefulness. Ultimately, these conspiracy-minded loopholes may have to be abandoned as fundamentally untestable.

Does the world look different, post-loopholes? Physicists have had decades to come to terms with the probable death of local realism, but it still seems like an obvious truth in daily life. That even unasked questions should have answers, and unmade measurements should have outcomes, is an unconscious assumption we make all the time. We do it whenever we talk about what would have happened – like “When both cakes were found to rise early, they would have tasted good,” which was key to our flawed reasoning about the quantum bakery – or even “If it didn’t rain today I would have been on time for work.” Local realistic thinking leads to wrong answers in quantum experiments. But entangled particles don’t often appear in everyday life, so outside the lab – if we choose – we’re probably OK to keep up the illusion of local realism.