Researchers from four universities in Shanghai, China, are developing a practical visual assistance system to help blind and partially sighted people navigate. The prototype system combines lightweight camera headgear, rapid-response AI-facilitated software and artificial “skins” worn on the wrist and finger that provide physiological sensing. Functionality testing suggests that integrating visual, audio and haptic senses can create a wearable navigation system that overcomes the adoption and usability concerns of current designs.

Worldwide, 43 million people are blind, according to 2021 estimates by the International Agency for the Prevention of Blindness. Millions more are so severely visually impaired that they require the use of a cane to navigate.

Visual assistance systems offer huge potential as navigation tools, but current designs present many drawbacks and challenges for potential users. These include functionality that is limited relative to the size and weight of the headgear, battery life and charging issues, slow real-time processing speeds, audio command overload, high system latency that can create safety concerns, and extensive and sometimes complex learning requirements.

Innovations in miniaturized computer hardware, battery charge longevity, AI-trained software to decrease latency in auditory commands, and the addition of lightweight wearable sensory augmentation material providing near-real-time haptic feedback are expected to make visual navigation assistance viable.

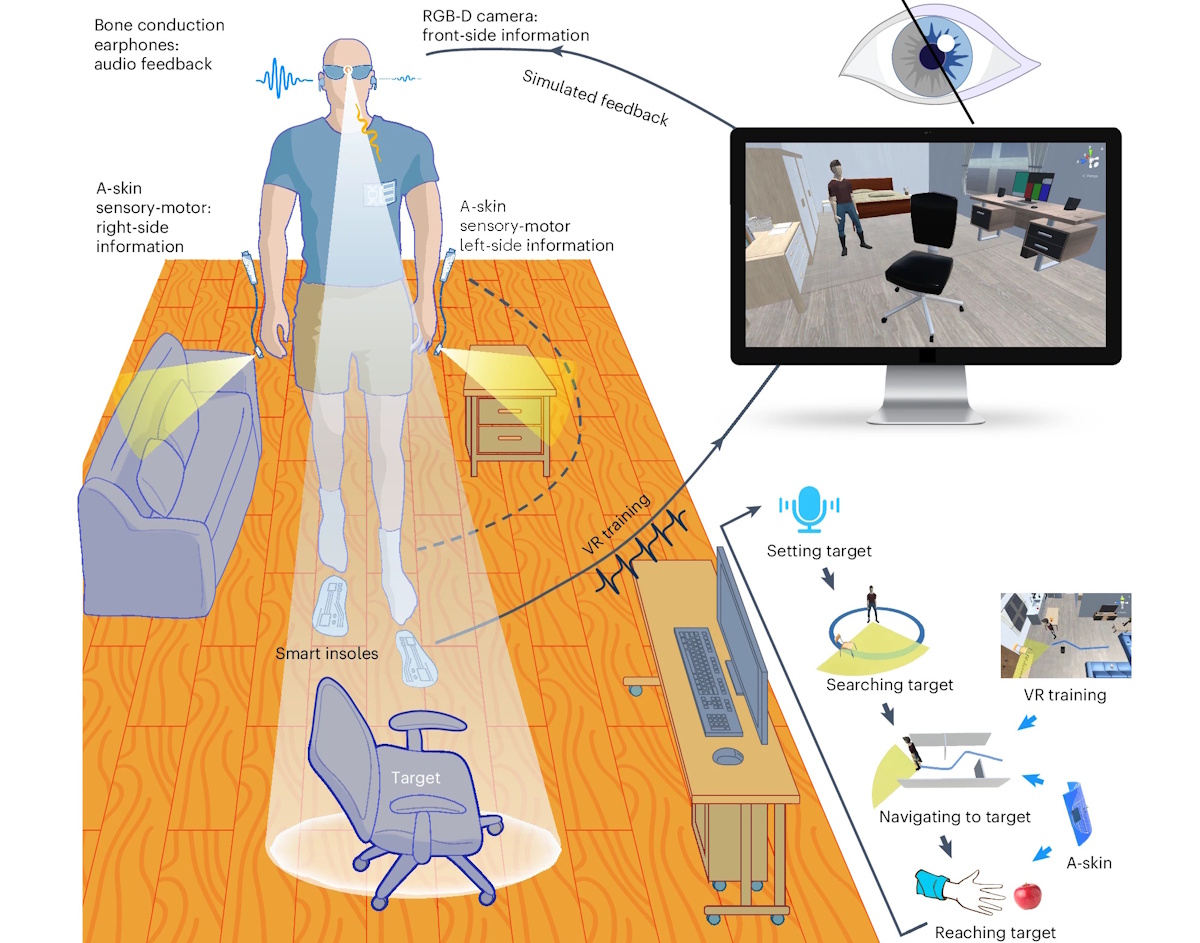

The team’s prototype visual assistance system, described in Nature Machine Intelligence, incorporates an RGB-D (red, green, blue, depth) camera mounted on a 3D-printed glasses frame, ultrathin artificial skins, a commercial lithium-ion battery, a wireless bone-conducting earphone and a virtual reality training platform interfaced via triboelectric smart insoles. The camera is connected to a microcontroller via USB, enabling all computations to be performed locally without the need for a remote server.

When a user sets a target using a voice command, AI algorithms process the RGB-D data to estimate the target’s orientation and determine an obstacle-free direction in real time. As the user begins to walk to the target, bone conduction earphones deliver spatialized cues to guide them, and the system updates the 3D scene in real time.
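
The step of choosing an obstacle-free direction can be illustrated with a minimal sketch. It is not the researchers' algorithm: it assumes the depth image has already been reduced to a few angular sectors, each with a nearest-obstacle distance, and the function name `pick_heading`, its parameters and the 1.5 m clearance value are all hypothetical.

```python
from typing import Optional, Sequence


def pick_heading(
    sector_clearance_m: Sequence[float],
    sector_bearings_deg: Sequence[float],
    target_bearing_deg: float,
    min_clearance_m: float = 1.5,  # hypothetical safety margin, not from the paper
) -> Optional[float]:
    """Return the bearing of the free sector closest to the target.

    sector_clearance_m[i] is the nearest obstacle distance seen in the
    sector centred on sector_bearings_deg[i]. Returns None when every
    sector is blocked.
    """
    # Keep only sectors whose nearest obstacle is far enough away.
    free = [
        bearing
        for bearing, clearance in zip(sector_bearings_deg, sector_clearance_m)
        if clearance >= min_clearance_m
    ]
    if not free:
        return None
    # Among the free sectors, steer as close to the target as possible.
    return min(free, key=lambda bearing: abs(bearing - target_bearing_deg))


if __name__ == "__main__":
    bearings = [-40.0, -20.0, 0.0, 20.0, 40.0]
    clearances = [0.8, 2.5, 0.6, 3.0, 3.0]  # metres, made-up readings
    print(pick_heading(clearances, bearings, target_bearing_deg=5.0))
```

In this toy example the sector straight ahead is blocked, so the sketch steers to the nearest free sector on the target's side. A real system would recompute this on every frame as the 3D scene updates.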

The system’s real-time visual recognition incorporates changes in distance and perspective, and can compensate for low ambient light and motion blur. To provide robust obstacle avoidance, it combines a global threshold method with a ground interval approach to accurately detect overhead hanging, ground-level and sunken obstacles, as well as sloping or irregular ground surfaces.
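
The article does not spell out how the global threshold and ground interval methods work, so the following is only a simplified illustration of the obstacle categories named above. It assumes each detected object has already been reduced to the heights of its lowest and highest points relative to an estimated ground plane; the function `classify_obstacle`, the 5 cm ground tolerance and the 1.9 m user height are hypothetical.

```python
def classify_obstacle(
    bottom_m: float,
    top_m: float,
    ground_tol_m: float = 0.05,  # hypothetical tolerance around the ground plane
    user_height_m: float = 1.9,  # hypothetical clearance the user needs
) -> str:
    """Label an object by its vertical extent relative to the ground plane.

    Heights are in metres; 0.0 is the estimated ground, negative is below it.
    """
    if bottom_m < -ground_tol_m:
        return "sunken"        # a step down, pit or kerb edge
    if top_m <= ground_tol_m:
        return "walkable"      # indistinguishable from the ground itself
    if bottom_m >= user_height_m:
        return "clear"         # entirely above the user's head
    if bottom_m > ground_tol_m:
        return "overhead"      # hangs in the air at body or head height
    return "ground-level"      # rises from the ground into the user's path


if __name__ == "__main__":
    for extent in [(-0.3, 0.0), (0.0, 0.03), (2.2, 2.5), (1.5, 2.0), (0.0, 0.8)]:
        print(extent, classify_obstacle(*extent))
```

Handling sloping or irregular ground would additionally require re-estimating the ground plane as the user moves, which this sketch leaves out.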

First author Jian Tang of Shanghai Jiao Tong University and colleagues tested three audio feedback approaches: spatialized cues, 3D sounds and verbal instructions. They determined that spatialized cues are the quickest to convey and to understand, and that they provide precise direction perception.

Real-world testing: A visually impaired person navigates through a cluttered conference room. (Courtesy: Tang et al. Nature Machine Intelligence)

To complement the audio feedback, the researchers developed stretchable artificial skin, an integrated sensory-motor device that provides near-distance alerting. The core component is a compact time-of-flight sensor, paired with an actuator that vibrates to stimulate the skin when the distance to an obstacle or object is smaller than a predefined threshold. The actuator is designed as a slim, lightweight polyethylene terephthalate cantilever. A gap between the driving circuit and the skin promotes air circulation to improve skin comfort, breathability and long-term wearability, and also leaves room for the actuator to vibrate.

Users wear the sensor on the back of an index or middle finger, while the actuator and driving circuit are worn on the wrist. When the artificial skin detects a lateral obstacle, it provides haptic feedback in just 18 ms.
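
The near-distance alert amounts to a threshold test on each time-of-flight reading. A minimal sketch of that logic follows; the function name `should_vibrate` and the 0.4 m threshold are hypothetical, since the article says only that the threshold is predefined.

```python
from typing import Iterable, List


def should_vibrate(distance_m: float, threshold_m: float = 0.4) -> bool:
    """Return True when a valid reading is closer than the alert threshold.

    threshold_m is a hypothetical value chosen for illustration.
    Negative readings are treated as invalid and never trigger an alert.
    """
    return 0.0 <= distance_m < threshold_m


def alert_sequence(readings_m: Iterable[float], threshold_m: float = 0.4) -> List[bool]:
    """Map a stream of distance readings to actuator on/off states."""
    return [should_vibrate(reading, threshold_m) for reading in readings_m]


if __name__ == "__main__":
    # Made-up readings of a hand approaching and then passing a wall.
    print(alert_sequence([1.2, 0.7, 0.35, 0.2, 0.6]))
```

A practical device would probably add some hysteresis or debouncing so that the actuator does not flicker when a reading hovers around the threshold.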


The researchers tested the trained system in virtual and real-world environments, with both humanoid robots and 20 visually impaired individuals who had no prior experience of using visual assistance systems. Testing scenarios included walking to a target while avoiding a variety of obstacles and navigating through a maze. Participants’ navigation speed increased with training and proved comparable to walking with a cane. Users were also able to turn more smoothly and were more efficient at pathfinding when using the navigation system than when using a cane.

“The proficient completion of tasks mirroring real-world challenges underscores the system’s effectiveness in meeting real-life challenges,” the researchers write. “Overall, the system stands as a promising research prototype, setting the stage for the future advancement of wearable visual assistance.”