Peer review has been the backbone of scientific publishing for centuries, but many feel that the time has come for the system to be reformed. Michael Banks weighs up the options

“Peer review is similar to what Winston Churchill once noted about democracy,” says David Crotty, a senior editor at Oxford University Press, who writes for the Scholarly Kitchen – a leading blog about the scientific publishing industry. “It’s the worst system apart from all the others.” Since its introduction in the 1700s, peer review has formed the backbone of scientific publishing. Used to judge whether manuscripts submitted to a journal are suitable for publication, it has, like democracy, so far stood the test of time.

That is largely because peer review is valued and trusted. A survey of 18,000 researchers, carried out last year by Nature Publishing Group (NPG) and Palgrave Macmillan, found that authors ranked the quality of a journal’s peer review third – behind journal reputation and relevance – among the factors they consider when deciding where to submit their research. When asked about the value that publishers bring, the top response to the 2015 Authors Insight Survey was “improving papers through constructive peer review”, ahead of rapid acceptance of papers and their discoverability.

Such findings are backed up by a study published late last year – Peer Review in 2015 – by the publisher Taylor and Francis, which found that the vast majority of academics support peer review and believe that it improves their manuscripts. With responses from more than 7400 academics worldwide, 68% noted that they have confidence in the academic rigour of published articles because of peer review.

While both reports suggest that the peer-review system is not broken, researchers’ views about how peer review should operate in the 21st century are changing in the era of digital publishing. Some complain about a proliferation of low-quality journals – most of which are open access – that seek to boost publishers’ revenues by cutting corners on rigorous refereeing. Other researchers, meanwhile, object that refereeing is an unpaid and largely unrewarded service to the community.

Another issue is the difficulty of finding enough researchers who are both enthusiastic about and skilled at peer review. Those who are good at the job often end up overburdened with requests – increasing the danger that journals promote a “clique” of trusted referees. Some also claim that peer review takes far too long. The final version of a paper can appear in a journal months after it was first uploaded to the arXiv preprint server, where many physicists deposit their papers before, or at the same time as, submitting them for peer review.

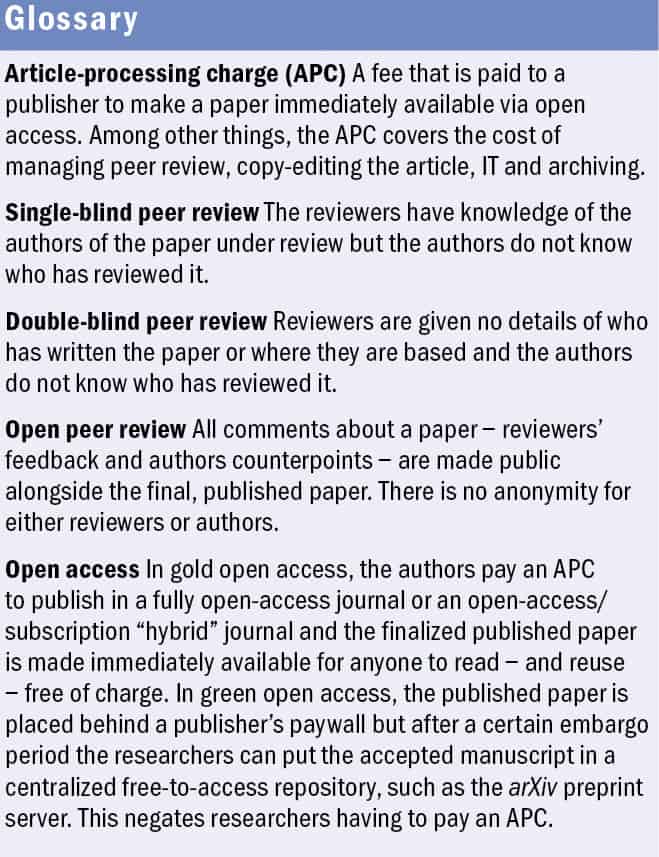

Crotty believes that peer review generally works well but could be “greatly improved” by refining the current system. “Peer review isn’t perfect,” agrees medical physicist Penny Gowland of the University of Nottingham in the UK. “The public needs to recognize that and publishers need to recognize that.” She claims that gold open access, in which an author pays an “article-processing charge” (APC) so that the paper is freely available to read upon acceptance, has weakened the peer-review process by fuelling the growth of publishers that exploit the system, accepting as many papers as possible to maximize their income. “It acts to undermine the scientific process and in the long term may undermine how the public views science,” she says.

Tweaks not reforms

When peer review was introduced as a means of testing whether a paper was fit for publication, it was initially performed by the editor of the journal. That changed in the mid-20th century, when papers began to be sent to external referees who were not involved in the work but were active in the field. These referees judge whether an article is scientifically credible and appropriate for the journal to which it has been submitted.

But one major criticism of peer review is that this refereeing process is opaque. “Peer review is a gatekeeper, but it’s also a bit of a black box, and lots of it is hidden,” says Daniel Ucko, an associate editor at the American Physical Society who is also doing a PhD on the philosophy of peer review at Stony Brook University. “The public is curious about the process and is interested to know why certain science is getting published, especially in fields such as medicine.”

Traditionally, peer review has been performed on a “single-blind” basis, in which the reviewers know who has written the paper but the authors are not told who has reviewed it. But because reviewers know who the authors are and where they work, they may form a judgement of the paper before even reading it. That could lead to bias against women, researchers in developing nations, early-career scientists and those at smaller, less-established institutions.

Ironically, one way of improving peer review is to make it less transparent. Crotty advocates double-blind peer review, in which, as in single-blind review, the authors do not know who is reviewing their paper, but the reviewers also do not know who has written it. Although this added anonymity has advantages, such as reducing bias (particularly gender bias), it is not foolproof. Even when the authors’ names are hidden during peer review, reviewers could easily use arXiv or an Internet search to track down their identities or institutions, which is not hard at all in small communities. Stripping a manuscript of references to the authors’ own work would also be time-consuming.

Yet Gowland, who is a proponent of the double-blind process, disagrees that arXiv makes effective blinding impossible. “A properly motivated reviewer would not simply search arXiv to find the author,” she says. Indeed, Crotty says that studies of double-blind peer review have shown that reviewers actually fail to guess the authors most of the time. “Even if you manage to guess the lab, blinding the authors is good for gender bias,” he adds.

One way to counter the lack of transparency in the review process is “open” peer review, in which the reviewers’ comments – and the authors’ responses – are made public online together with the final accepted version of the paper. In January Nature Communications announced a year-long trial in which it will publish all reviewer comments and author rebuttal letters for papers in the journal, unless the authors opt out. This “peer-review file” will be published along with the accepted version of the manuscript. The success of the trial will be judged by opt-out rates and other “monitoring parameters”.

Tim Smith, an associate director at IOP Publishing, which publishes Physics World, says that it would be relatively simple to publish such a peer-review file, but there would be practical issues to address, such as how much editing referee comments would need to meet the journal’s language standards for publication.

In 2014 the European Union set up PEERE, the first government-funded, multinational effort “to improve efficiency, transparency and accountability of peer review”. Chaired by Flaminio Squazzoni, a sociologist at the University of Brescia, Italy, PEERE will analyse peer review in science, evaluate different models of peer review and explore new incentive structures, rules and measures to improve the peer-review process.

Squazzoni, who is a fan of double-blind peer review, says that open peer review nevertheless has many advantages. It would solve the transparency issue, increase the quality of reviews and discourage reviewers from pressuring authors to cite the reviewers’ own work. But Squazzoni admits it would be tricky to implement. “Imagine a junior scientist reviewing a manuscript from an established scientist. If the review is open, it might be difficult for them to reject it,” he says. “Then there is the issue of retaliation, and peer review must protect reviewers from that.”

Ucko agrees, adding that much of the burden of peer review falls on junior scientists, who have much to lose if their identities are revealed. Indeed, Ucko believes that referees must remain anonymous. “Anonymous referees provide candid critique,” he says. “They may have bias, but that is where the editor’s job comes in to safeguard the peer-review process.” That view is backed by Smith, who points out that IOP Publishing journals are run by in-house “peer-review experts” responsible for preserving the integrity of the referee-selection and decision-making processes.

Credit where credit’s due

Another possible reform of the system is to incentivize reviewers to do a good job in the first place. “Peer review doesn’t have any kind of reward for scientists – except for making the authors cite your paper – so reviewers rationalize the time they spend on peer review,” says Squazzoni. “And the more people who think peer review is not the top of their agenda, the more difficult it will be to get people to do it.” While reviewers get to influence the community through peer review, as Crotty points out, “nobody would get tenure for being good at peer review”.

One way of crediting researchers for their efforts could be to pay them for peer review. But Crotty says that would create problems. Apart from the question of who should pay, he fears a tiered system, with the best reviewers demanding more money. “That would end up being more trouble than it’s worth,” says Crotty, who adds that in March NPG’s Scientific Reports started a trial in which authors could fast-track peer review for a fee of $750, around $100 of which went to each referee. Resignations from the journal’s editorial board over what some members saw as a two-tiered system quickly forced the journal to backtrack.

Squazzoni agrees that financial reward is not the way forward, claiming that it would have a “crowding-out effect on intrinsic motivations of scientists”, while Ucko says anything more than a nominal financial reward would be a particular drain on journals published by learned societies, which often rely on journal income to fund their activities. Paying referees could end up increasing APCs and journal subscription costs.

Smith says that referees for IOP Publishing journals receive discounts on APCs, but admits more could be done by publishers to give individual credit to researchers for their overall refereeing activity. “Serving as a referee, editorial board member or guest editor has become an integral part of a researcher’s career and should be recognized as such,” he adds.

Another way of crediting researchers for peer review could be through the Open Researcher and Contributor ID (ORCID) system. This provides a “digital identifier” to distinguish scientists from one another and creates a record linking scientists to their research outputs, such as papers and grant submissions. Some 1.8 million researchers have already registered for a unique identifier, and Crotty says that the system could be expanded to include peer-review data, for example by introducing “peer-review citations”. Indeed, last month seven science publishers announced that from 1 January they would require researchers to identify themselves using the ORCID system when submitting papers.

For all the talk about reforming peer review, Squazzoni warns that a greater threat is the lack of data about the peer-review process itself. “We don’t have data about peer review and as a result it is poorly understood,” he says. Squazzoni says this is starting to change, with publishers offering their data to scientists to study (as in PEERE), but he says that more needs to be done. “Peer review is essential for how science works,” he continues. “And the more we know about the process, the better.”