Neuroscience has come a long way, from single-electrode electrophysiological recording of one neuron at a time to simultaneous recording of multiple neurons’ activity using tetrodes implanted in the brains of (typically) mice and monkeys. Today, advances in fluorescence microscopy and fluorescent protein engineering, combined with multielectrode recording, make it possible to capture the spatiotemporal details of neurons’ electrical activity in vivo and in real time.

In a recent study published in Nature, a team led by researchers at the Allen Institute went even further, recording the activity of hundreds of neurons at once to create the largest dataset of neurons’ electrical activity in the world.

Towards comprehensive mapping of brain activity

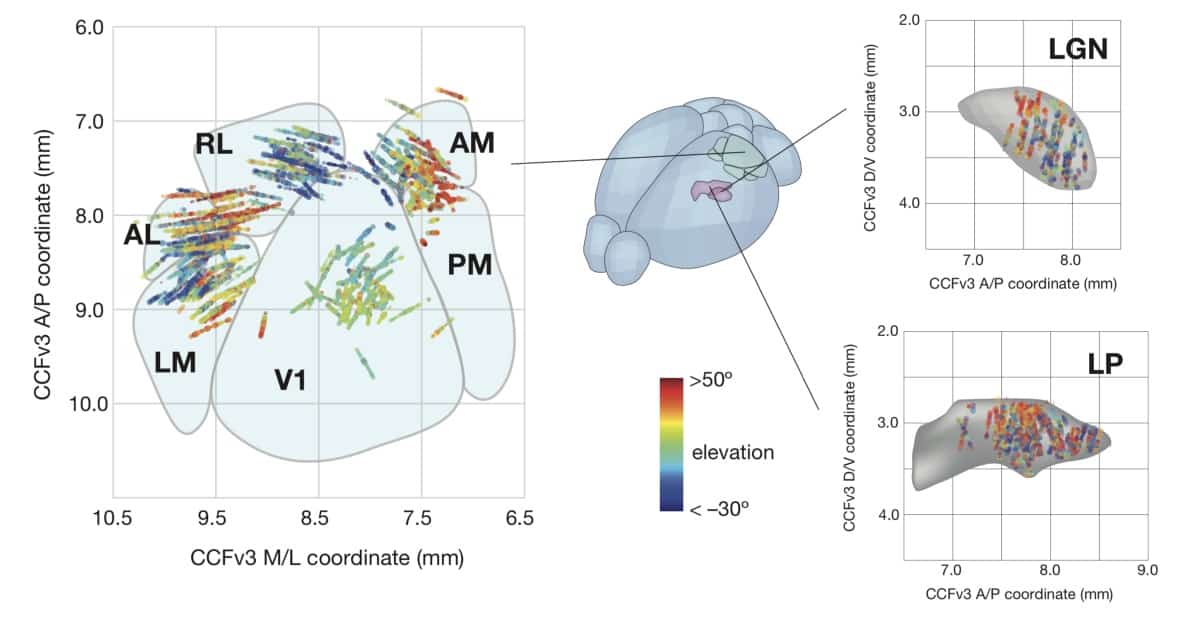

Joshua Siegle, Xiaoxuan Jia and colleagues took advantage of a previously developed technology called Neuropixels to achieve multichannel, large-volume, high-resolution spatiotemporal coverage of neuronal activity. Neuropixels is a silicon probe containing 384 recording channels; using only two probes, more than 700 well-isolated single neurons can be recorded simultaneously across different regions in the (mouse) brain.

The Allen Institute team, led by Shawn Olsen and Christof Koch, used Neuropixels to record activity from hundreds of neurons in up to eight different visual regions of the brain in awake, head-fixed mice viewing diverse visual stimuli. In contrast to sparse multichannel recording, localized large-volume coverage of several brain regions at once can reveal the information flow through the brain. In addition, capturing information from different areas in the brain simultaneously helps reveal how the brain operates through the interaction of these different areas.

Finding hierarchy during information processing

The team implanted Neuropixels probes up to 3.5 mm into the animals’ brains to measure responses from visual cortical areas (primary visual cortex and five higher cortical areas) and thalamic areas. During recording sessions, mice passively viewed a range of natural and artificial visual stimuli, including drifting gratings and full-field flashes. By measuring time delays in neuronal activity between different brain regions, as well as the size of the region of the visual field that each neuron responds to (its receptive field), the team observed that the information flow follows a hierarchical organization.
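To give a feel for what "measuring time delays" between two areas can mean in practice, here is a minimal Python sketch that estimates the lag between two binned firing-rate traces by finding the peak of their cross-correlation. It is an illustration only, not the analysis used in the study: the function name `estimate_lag_ms`, the 1 ms bin size, the 50-bin search window and the synthetic data are all assumptions made for this example.

```python
import numpy as np


def estimate_lag_ms(rate_a, rate_b, bin_ms=1.0, max_lag_bins=50):
    """Estimate how much rate_b lags rate_a, in milliseconds.

    Hypothetical helper for illustration. A positive result means
    activity in b tends to follow activity in a.
    """
    # Remove the mean so the correlation reflects fluctuations, not baseline rate.
    a = np.asarray(rate_a, dtype=float) - np.mean(rate_a)
    b = np.asarray(rate_b, dtype=float) - np.mean(rate_b)
    n = len(a)

    lags = np.arange(-max_lag_bins, max_lag_bins + 1)
    xcorr = np.empty(len(lags))
    for i, k in enumerate(lags):
        # Correlation at lag k: sum over t of a[t] * b[t + k],
        # restricted to the samples where both indices are valid.
        if k >= 0:
            xcorr[i] = np.dot(a[: n - k], b[k:])
        else:
            xcorr[i] = np.dot(a[-k:], b[: n + k])

    # The lag with the largest correlation is the estimated delay.
    return lags[np.argmax(xcorr)] * bin_ms


# Synthetic example (assumed data, not from the study): a "downstream"
# trace that is a noisy copy of the "upstream" trace, delayed by 8 bins.
rng = np.random.default_rng(0)
upstream = rng.poisson(5, size=2000).astype(float)
downstream = np.roll(upstream, 8) + rng.normal(0.0, 0.5, size=2000)

print(estimate_lag_ms(upstream, downstream))
```

Repeating this kind of estimate for every pair of recorded areas would yield a table of pairwise delays, and areas that consistently respond later can be placed higher in an ordering. Real spike data would need extra care (for example, correcting for stimulus-locked responses) that this sketch leaves out.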

The researchers also carried out Neuropixels recordings in another set of mice that were trained to respond to a visual change. They found a similar hierarchical structure in activity during this behavioural task: neurons in visual areas higher in the hierarchy responded more strongly when the stimulus changed. The recordings enabled the researchers to infer the animal’s success in detecting a change in visual stimuli by just looking at the neuronal electrical activity. Interestingly, observing activity in the higher-order areas allowed the researchers to predict these successes with greater accuracy, suggesting that these areas are more likely to be involved in guiding behaviour.

In the absence of background light (removing the visual input from the mice), the same neurons still fired; however, the order of information flow was lost. This could mean that some sort of hierarchy is needed to process information and understand aspects of the world around us. Although mouse vision is not the same as human vision, neuroscientists can still learn many working principles of sensory processing that generalize, at some level, to how humans perceive and process information.

“At a very high level, we want to understand why we need to have multiple visual areas in our brain in the first place,” says Siegle. “How are each of these areas specialized, and then how do they communicate with each other and synchronize their activity to effectively guide your interactions with the world?”