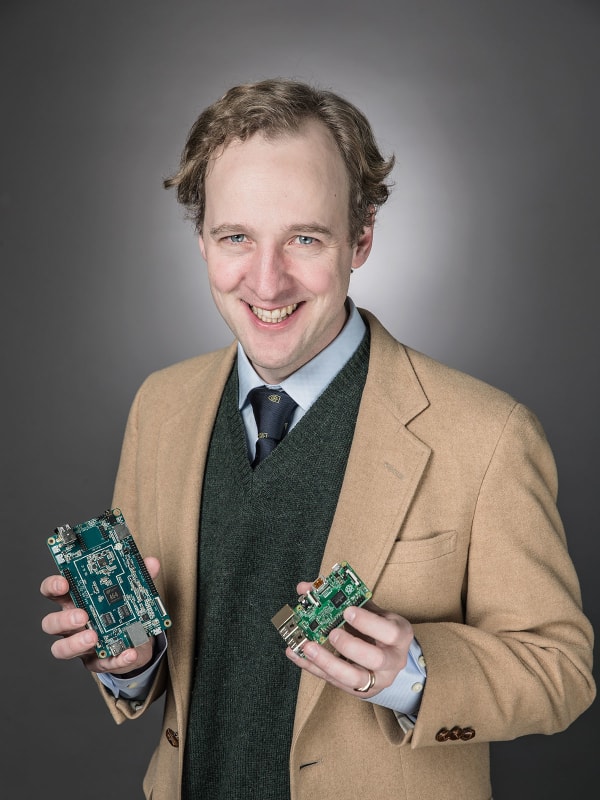

Anatole von Lilienfeld is a professor of physical chemistry at the University of Basel, Switzerland, and a project leader in the Swiss National Center for Computational Design and Discovery of Novel Materials (MARVEL). He is also the editor-in-chief of a new journal called Machine Learning: Science and Technology, which (like Physics World) is published by IOP Publishing. He spoke to Margaret Harris about the role that machine learning plays in science, the purpose of the journal and how he thinks the field will develop.

The term “machine learning” means different things to different people. What’s your definition?

It’s a term used by many communities, but in the context of physics I would stick to a rather technical definition. Machine learning can be roughly divided into two different domains, depending on the problems one wants to attack. One domain is called unsupervised learning, which is basically about categorizing data. This task can be nontrivial when you’re dealing with high-dimensional, heterogeneous data of varying fidelity. What unsupervised learning algorithms do is try to determine whether these data can be grouped into different clusters in a systematic way, without any bias or heuristic, and without introducing spurious artefacts.

Problems of this type are ubiquitous: all quantitative sciences encounter them in one way or another. One example involves proteins, which fold into certain shapes that depend on their amino acid sequences. When you measure the X-ray spectra of protein crystals, you find something interesting: the number of possible folds is large, but finite. So if somebody gave you some sequences and their corresponding folds, a good unsupervised learning algorithm might be able to cluster new sequences to help you determine which of them are associated with which folds.
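As a rough illustration of the clustering idea described above, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. It is not a method named in the interview: the function `kmeans`, the toy two-dimensional points and the choice of two clusters are all assumptions made for the example, and real protein or materials data would need far richer descriptors.

```python
import math

def kmeans(points, k, iterations=20):
    """Group points (tuples of equal length) into k clusters with Lloyd's algorithm."""
    # Deterministic start (an assumption for reproducibility):
    # take k points spread evenly through the list as initial centroids.
    step = len(points) // k
    centroids = [points[i * step] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(x) / len(members) for x in zip(*members))
    return labels, centroids

# Synthetic toy data: two visibly separated groups, no labels supplied.
points = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.3), (5.0, 5.1), (5.2, 4.9), (4.9, 5.3)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

Note that k-means still asks the user to choose the number of clusters, so it only approximates the ideal of grouping data "without any bias or heuristic".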

The second branch of machine learning is called supervised learning. In this case, rather than merely categorizing the data, the algorithms also try to predict values outside the dataset. An example from materials science would be that if you have a bunch of materials for which a property has been measured – the formation energy of some inorganic crystals, say – you can then ask, “I wonder what the formation energy would be of a new crystal?” Supervised learning can give you the statistically most likely estimate, based on the known properties of the existing materials in the dataset.
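The prediction step can be sketched with the simplest supervised model, an ordinary least-squares line, which gives the most likely estimate if the measurement noise is assumed to be Gaussian. Everything here is hypothetical: the single numeric descriptor, the synthetic "formation energy" values and the helper names `fit_line` and `predict` are illustrative assumptions, not data or methods from the interview.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(model, x):
    """Estimate the property for a descriptor value outside the dataset."""
    slope, intercept = model
    return slope * x + intercept

# Synthetic toy dataset (not real measurements): one descriptor per crystal
# and a made-up formation energy for each.
descriptors = [1.0, 2.0, 3.0, 4.0]
energies = [-1.0, -2.1, -2.9, -4.0]

model = fit_line(descriptors, energies)
new_crystal_estimate = predict(model, 5.0)
print(model, new_crystal_estimate)
```

In practice, materials are described by many features and modelled with more flexible regressors, but the pattern is the same: fit on known examples, then query the model for an unseen one.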

These are the two main branches of machine learning, and the thing they have in common is a need for data. There’s no machine learning without data. It’s a statistical approach, and this is sort of implied when you’re talking about machine learning: these techniques are mathematically rigorous ways to arrive at statistical statements in a quantitative manner.

You’ve given a couple of examples of machine-learning applications within materials science. I know this is the subject closest to your heart, but the new journal you’re working on covers the whole of science. What are some applications in other fields?

Of course, I’m biased towards materials science, but other domains face similar problems. Here’s an example. One of the most important equations in materials science is the electronic Schrödinger equation. This differential equation is difficult to solve even with computers, but machine learning enables us to circumvent the need to solve it for new materials. Similarly, many scientific domains require solutions to the Navier-Stokes equations in various approximations. These equations can describe turbulent flow, which matters for combustion, for climate modelling, for engineering aerodynamics or for ship construction (among other areas). These equations are also hard to solve numerically, so this is a place where machine learning can be applied.

Another area of interest is medical imaging. The scanning techniques used to detect tumours and malignant tissues are good applications of unsupervised learning – you want to cluster healthy tissue versus unhealthy tissue. But if you think about it, there is hardly any quantitative domain within the physical sciences where machine learning cannot be applied.

With this journal, we have a unique opportunity to give people from all these different domains a place to discuss developments of machine learning in their fields. So if there’s a major advancement in image recognition of, say, lung tumours, maybe materials scientists will learn something from it that will help them interpret X-ray spectra, or vice versa. Traditionally, people would publish such work within their own disciplines, so it would be hidden from everyone else.

You talked about machine learning as an alternative to computation for finding solutions to equations. In your editorial for the first issue of Machine Learning: Science and Technology, you say that machine learning is emerging as a fourth pillar of science, alongside experimentation, theory and computation. How do you see these approaches fitting together?

Humans began doing experimentation very early. You could view the first tools as being the result of experiments. Theory developed later. Some would say the Greeks started it, but other cultures also developed theories; the Maya, for example, had theories of stellar movement and calendars. All this work culminated in the modern theories of physics, to which many brilliant scientists contributed.

But that wasn’t the end, because many of these brilliant theories had equations that could not be solved using pen and paper. There’s a famous quote from the physicist Paul Dirac where he says that all the equations predicting the behaviour of electrons and nuclei are known. The trouble was that no human could solve those equations. However, with some reasonable approximations, computers could. Because of this, simulation has gained tremendous traction over the last decades, and of course it helps that Moore’s Law has meant that you can buy an exponentially increasing amount of computing power for a constant number of dollars.

I think the next step is to use machine learning to build on theory, experiment and computation, and thus to make even better predictions about the systems we study. When you use computation to find numerical solutions to equations, you need a big computer. However, the outcome of that big computation can then feed into a dataset and be used for machine learning, and you can feed in experimental data alongside it.

Over the next few years, I think we’ll start to see datasets that combine experimental results with simulation results obtained at different levels of accuracy. Some of these datasets may be incredibly heterogeneous, with a lot of “holes” for unknown quantities and different uncertainties. Machine learning offers a way to integrate that knowledge, and to build a unifying model that enables us to identify areas where the holes are the largest or the uncertainties are the greatest. These areas could then be studied in more detail by experiments or by additional simulations.
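One crude way to picture "finding the largest hole" is to ask which candidate point lies farthest from anything already in the dataset. The sketch below uses distance to the nearest known sample as a stand-in for model uncertainty. That proxy, the one-dimensional integer descriptor grid and the function name `largest_gap` are all assumptions for illustration. A real workflow would more likely use a model's own predictive uncertainty.

```python
def largest_gap(known, candidates):
    """Return the candidate farthest from every known sample, and that distance."""
    def nearest_distance(candidate):
        return min(abs(candidate - k) for k in known)

    best = max(candidates, key=nearest_distance)
    return best, nearest_distance(best)

# Hypothetical descriptor values where data (experimental or simulated) exist.
known = [0, 1, 2, 10]
# Hypothetical grid of descriptor values we could study next.
candidates = list(range(11))

next_point, gap = largest_gap(known, candidates)
print(next_point, gap)  # 6 4
```

The point returned is where an extra experiment or simulation would fill the biggest gap in this toy dataset.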

What other developments should we expect to see in machine learning?

I think we’ll see a feedback loop develop, similar to the one we have now between experiment and theory. As experiments progress, they create an incentive for proposing hypotheses, and then you use those hypotheses to make predictions that you can verify experimentally. Historically, some experiments were excluded from that loop because the equations were too difficult to solve. But then computation arrived, and suddenly the scope of experimental design widened tremendously.

I think the same thing is going to happen with machine learning. We’re already seeing it in materials science, where – with the help of supervised learning – we’ve made predictions within milliseconds about how a new material will behave, whereas previously it would have taken hours to simulate on a supercomputer. I believe that will soon be true for all the physical sciences. I’m not saying we will be able to perform all possible experiments, but we’ll be able to design a much broader range of experiments than we could previously.