Physicists in Canada and the US have devised a new and much faster way of measuring how noise degrades the performance of quantum computers. The technique was used to evaluate a device made from three quantum bits and involved measuring only three parameters, rather than the 4096 needed by previous methods. The research could lead to a better understanding of how noise affects the operation of practical quantum computers and ultimately help physicists develop more robust quantum logic devices and algorithms (Science 317 1893).

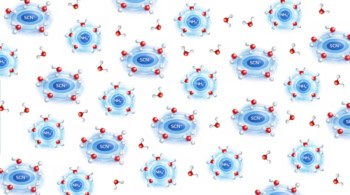

Quantum computers exploit the fact that a quantum system can be in a superposition of two states, say 1 and 0, at the same time. N such quantum bits (qubits) could be combined or “entangled” to represent $2^N$ values simultaneously, which could lead to the parallel processing of information on a massive scale. However, qubits are very fragile, and the residual noise that is present in any practical quantum computer can degrade their quantum nature. This process is called decoherence and, if left unchecked, it will prevent a quantum computer from working.
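To make the $2^N$ scaling concrete, the minimal sketch below builds the state vector of N qubits, each in an equal superposition of 0 and 1, by repeated tensor (Kronecker) products. It assumes a simple unentangled product state, chosen only because it is easy to construct; the point is the bookkeeping: describing N qubits takes $2^N$ amplitudes, one per classical bit string. The function name `uniform_superposition` is illustrative, not from any library.

```python
import math

def uniform_superposition(n_qubits):
    """Return the 2**n amplitudes of n qubits, each in (|0> + |1>)/sqrt(2)."""
    plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # single-qubit equal superposition
    state = [1.0]
    for _ in range(n_qubits):
        # Kronecker product: each existing amplitude pairs with both |0> and |1>,
        # so the length of the state vector doubles with every added qubit.
        state = [a * b for a in state for b in plus]
    return state

for n in (1, 3, 8):
    amps = uniform_superposition(n)
    norm = sum(a * a for a in amps)  # should stay 1 for a valid quantum state
    print(f"{n} qubits -> {len(amps)} amplitudes, norm {norm:.6f}")
```

Note that holding $2^N$ amplitudes is not the same as reading out $2^N$ answers: a measurement returns a single bit string, which is why useful quantum algorithms must be designed carefully.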

Fortunately, decoherence can be kept at an acceptable level using “error-correction” schemes that use several noise-prone qubits to perform the role of one noise-free qubit. These schemes require a good understanding of how noise affects the qubits, which depends on the specific design of the computer. A computer using trapped ions as qubits, for example, could be affected by noise very differently from a computer that uses nuclear spins.
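The idea of trading several noisy qubits for one more reliable one can be illustrated with the simplest textbook case, a three-copy repetition code decoded by majority vote. The sketch below is a classical analogy only: it assumes each copy suffers an independent bit flip with probability `p` and ignores the other kinds of quantum error (real quantum codes cannot simply copy a state and must also handle phase errors). The function names are hypothetical.

```python
import random

def logical_error_exact(p):
    """Majority vote over three copies fails if two or three copies flip."""
    return 3 * p**2 * (1 - p) + p**3

def logical_error_monte_carlo(p, trials, rng):
    """Estimate the same failure rate by simulating independent bit flips."""
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # a majority of the copies is corrupted
            failures += 1
    return failures / trials

rng = random.Random(1)
for p in (0.01, 0.1, 0.3):
    exact = logical_error_exact(p)
    simulated = logical_error_monte_carlo(p, 100_000, rng)
    print(f"p = {p}: encoded error {exact:.5f} (simulated {simulated:.5f})")
```

The gain depends entirely on the noise model: if the flips were strongly correlated, so that copies tended to fail together, majority voting would help far less. This is why error correction needs a good description of how noise actually acts on a given device.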

The effects of noise can be measured using “quantum process tomography”, which involves measuring the output of a quantum computer for all possible input states. For an N-qubit system this involves $2^{4N}$ measurements. As a result, a system with eight qubits would, in principle, require over four billion measurements.
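The arithmetic behind these figures is easy to check. Since $2^{4N} = 16^N$, every added qubit multiplies the measurement count by 16. The short function below (its name is illustrative) simply evaluates the formula quoted above.

```python
def tomography_measurements(n_qubits):
    """Measurements needed by process tomography as quoted: 2**(4N) == 16**N."""
    return 2 ** (4 * n_qubits)

for n in (1, 2, 3, 8):
    print(f"{n} qubit(s): {tomography_measurements(n):,} measurements")
```

For N = 3 the formula gives 4,096, the figure quoted for previous methods on the three-qubit device, and for N = 8 it gives $2^{32}$ = 4,294,967,296.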

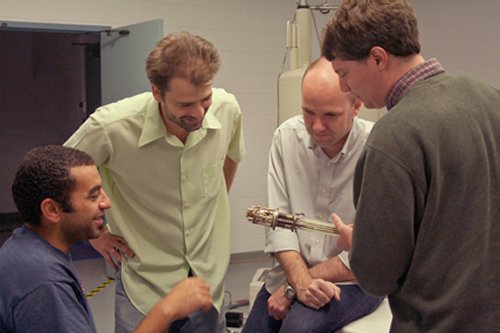

Now, a team at the University of Waterloo led by Joseph Emerson, working with David Cory at the Massachusetts Institute of Technology, has come up with a new way of looking at decoherence. It focuses on the few noise parameters that most strongly affect the performance of a quantum computer, without the need to make large numbers of mostly irrelevant measurements.

When applied to a three-qubit system, the technique involves measuring three probabilities: that no qubits, one qubit or two qubits will fail during a quantum calculation. These values are determined by having the system perform specific sets of operations and watching how it responds. The measurements are repeated until the probabilities are known to the desired level of uncertainty.
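The “repeat until the uncertainty is small enough” step can be sketched generically. The toy example below does not reproduce the team's actual protocol; it assumes a hypothetical `noisy_run()` that reports how many of three qubits failed in one run, with each qubit failing independently with probability 0.05. It tallies every possible outcome (0 to 3 failures, so the estimates sum to one) and stops once each estimated probability has a binomial standard error, $\sqrt{p(1-p)/n}$, below a chosen target.

```python
import math
import random

def estimate_failure_probs(run_once, n_qubits, target_se, batch=100, max_trials=1_000_000):
    """Repeat runs in batches until every estimated probability has a
    binomial standard error <= target_se (or max_trials is reached)."""
    counts = [0] * (n_qubits + 1)  # counts[k] = runs in which exactly k qubits failed
    trials = 0
    while True:
        for _ in range(batch):
            counts[run_once()] += 1
        trials += batch
        probs = [c / trials for c in counts]
        errors = [math.sqrt(p * (1 - p) / trials) for p in probs]
        if max(errors) <= target_se or trials >= max_trials:
            return probs, errors, trials

# Hypothetical noise model: each of 3 qubits fails independently with probability 0.05.
rng = random.Random(2007)

def noisy_run():
    return sum(rng.random() < 0.05 for _ in range(3))

probs, errors, trials = estimate_failure_probs(noisy_run, n_qubits=3, target_se=0.005)
for k, (p, e) in enumerate(zip(probs, errors)):
    print(f"P({k} failed) = {p:.4f} +/- {e:.4f}")
print("runs needed:", trials)
```

The stopping rule uses the simple normal approximation to the binomial error, which is crude for very rare outcomes (here, all three qubits failing); a real analysis would likely use a more careful confidence interval.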

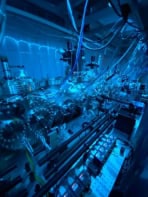

The team applied their technique to an experimental “quantum memory” comprising three nuclear spins as qubits, controlled using nuclear magnetic resonance. With the new technique, the researchers were able to characterize the system in a matter of days, something that would have taken several months using quantum process tomography. The team then used the measured probabilities to develop optimized control techniques for maintaining the quantum nature of the memory.

According to Emerson, the technique can be used on any type of quantum computer and could provide a very efficient way of comparing the performance of different architectures. He also believes that it could help physicists gain a better understanding of the different types of noise that can occur in a quantum computer, leading to better designs. Emerson told physicsweb.org that he is very keen to see the technique used on an eight-qubit quantum computer based on ion traps that has been built at the University of Innsbruck in Austria.