The Earth is warming up, with potentially disastrous consequences. Computer climate models based on physics are our best hope of predicting and managing climate change, as Adam Scaife, Chris Folland and John Mitchell explain

It is official: the Earth is getting hotter, and it is down to us. This month scientists from over 60 nations on the Intergovernmental Panel on Climate Change (IPCC) released the first part of their latest report on global warming. In the report the panel concludes that it is very likely that most of the 0.5 °C increase in global temperature over the last 50 years is due to man-made emissions of greenhouse gases. And the science suggests that much greater changes are in store: by 2100 anthropogenic global warming could be comparable to the warming of about 6 °C since the last ice age.

The consequences of global warming could be catastrophic. As the Earth continues to heat up, the frequency of floods and droughts is likely to increase, water supplies and ecosystems will be placed under threat, agricultural practices will have to be changed and millions of people may be displaced as the sea level rises. The global economy could also be severely affected. The recent Stern Review, which was commissioned by the UK government to assess the economic impact of climate change, warns that 5–20% of the world’s gross domestic product could be lost unless large cuts in greenhouse-gas emissions are made soon. But how do we make predictions of climate change, and why should we trust them?

The climate is an enormously complex system, fuelled by solar energy and involving interactions between the atmosphere, land and oceans. Our best hope of understanding how the climate changes over time and how we may be affecting it lies in computer climate models developed over the past 50 years. Climate models are probably the most complex models in all of science, and they have already proved their worth by simulating the past climate of the Earth with startling success. Although very much a multidisciplinary field, climate modelling is rooted in the physics of fluid mechanics and thermodynamics, and physicists worldwide are collaborating to improve these models by better representing physical processes in the climate system.

Not a new idea

Long before fears of climate change arose, scientists were aware that naturally occurring gases in the atmosphere warm the Earth by trapping the infrared radiation that it emits. Indeed, without this natural “greenhouse effect” – which keeps the Earth about 30 °C warmer than it would otherwise be – life may never have evolved. Mathematician and physicist Joseph Fourier was the first to describe the greenhouse effect in the early 19th century, and a few decades later John Tyndall realized that gases like carbon dioxide and water vapour are the principal causes, rather than the more abundant atmospheric constituents such as nitrogen and oxygen.

Carbon dioxide (CO2) gas is released when we burn fossil fuels, and the first person to quantify the effect that CO2 could have in enhancing the greenhouse effect was 19th-century Swedish chemist Svante Arrhenius. He calculated by hand that a doubling of CO2 in the atmosphere would ultimately lead to a 5–6 °C increase in global temperature – a figure remarkably close to current predictions. More detailed calculations in the late 1930s by British engineer Guy Callendar suggested a less dramatic warming of 2 °C, with a greater effect in the polar regions.

Meanwhile, at the turn of the 20th century, Norwegian meteorologist Vilhelm Bjerknes founded the science of weather forecasting. He noted that given detailed initial conditions and the relevant physical laws it should be possible to predict future weather conditions mathematically. Lewis Fry Richardson took up this challenge in the 1920s by using numerical techniques to solve the differential equations for fluid flow. Richardson’s forecasts were highly inaccurate, but his methodology laid the foundations for the first computer models of the atmosphere developed in the 1950s. By the 1970s these models were more accurate than forecasters who relied on weather charts alone, and continued improvements since then mean that today three-day forecasts are as accurate as one-day forecasts were 20 years ago.

But given that weather forecasts are unreliable for more than a few days ahead, how can we hope to predict climate, say, tens or hundreds of years into the future? Part of the answer lies in climate being the average of weather conditions over time. We do not need to predict the exact sequence of weather in order to predict future climate, just as in thermodynamics we do not need to predict the path of every molecule to quantify the average properties of gases.
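The difference between predicting weather and predicting climate can be illustrated with a toy system. The sketch below uses the Lorenz (1963) equations, a three-variable chaotic model of convection, as a stand-in for "weather"; this is an illustrative assumption, not how climate models work. Two runs that start 10^-8 apart soon follow completely different paths, yet their long-term averages of the variable z agree closely.

```python
# Toy illustration only: the Lorenz-63 system stands in for chaotic "weather".
# It is NOT a climate model; it just shows unpredictable paths with stable averages.

def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivatives of the Lorenz-63 system at a given (x, y, z)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def add(a, b, scale):
        return tuple(ai + scale * bi for ai, bi in zip(a, b))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(add(state, k1, dt / 2))
    k3 = lorenz_rhs(add(state, k2, dt / 2))
    k4 = lorenz_rhs(add(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run(initial, dt=0.01, spin_up=1_000, n_steps=50_000):
    """Return the trajectory (after a spin-up period) and the time-mean of z."""
    state = initial
    for _ in range(spin_up):
        state = rk4_step(state, dt)
    trajectory = []
    for _ in range(n_steps):
        state = rk4_step(state, dt)
        trajectory.append(state)
    mean_z = sum(s[2] for s in trajectory) / len(trajectory)
    return trajectory, mean_z


traj_a, mean_a = run((1.0, 1.0, 1.0))
traj_b, mean_b = run((1.0 + 1e-8, 1.0, 1.0))  # tiny change in initial conditions

# The instantaneous "weather" of the two runs ends up completely different...
max_gap = max(
    sum((p - q) ** 2 for p, q in zip(sa, sb)) ** 0.5
    for sa, sb in zip(traj_a, traj_b)
)
print(f"largest separation between runs: {max_gap:.1f}")
# ...but the long-term average ("climate") is almost the same.
print(f"time-mean z: {mean_a:.2f} vs {mean_b:.2f}")
```

The same reasoning underlies climate prediction: the exact state at any instant cannot be forecast far ahead, but the statistics of the system are set by its physics and can be.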

In the 1960s researchers based at the Geophysical Fluid Dynamics Laboratory in Princeton, US, built on weather-forecasting models to simulate the effect of anthropogenic CO2 emissions on the Earth’s climate. Measurements by Charles Keeling at Mauna Loa, Hawaii, starting in 1958 had shown clear evidence that the concentration of CO2 in the atmosphere was increasing. The Princeton model predicted that doubling the amount of CO2 in the atmosphere would warm the troposphere – the lowest level of the atmosphere – but also cool the much higher stratosphere, while producing the greatest warming towards the poles, in agreement with Callendar’s early calculations.

The nuts and bolts of a climate model

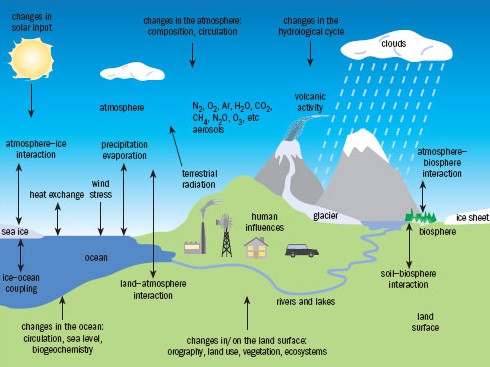

The climate system consists of five elements: the atmosphere, the ocean, the biosphere, the cryosphere (ice and snow) and the geosphere (rock and soil). These components interact on many different scales in both space and time, giving the climate a large natural variability, and human influences such as greenhouse-gas emissions add further complexity (figure 1). Predicting the climate at a certain time in the future thus depends on our ability to include as many of the key processes as possible in our climate models.

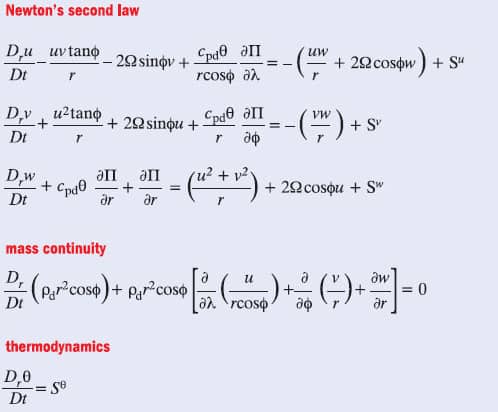

At the heart of climate models and weather forecasts lie the Navier–Stokes equations, a set of differential equations that allows us to model the dynamics of the atmosphere as a continuous, compressible fluid. By writing the equations in spherical coordinates in a frame of reference that rotates with the Earth, we arrive at the basic equations of motion for a “parcel” of air in each of the east–west, north–south and vertical directions. Additional equations describe the thermodynamic properties of the atmosphere (see figure 2).
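For reference, a standard textbook form of this set of equations is given below in vector notation, in a frame rotating with the Earth. Figure 2 shows the model's own version, which may differ in detail (for example, by writing out the spherical-coordinate components or using the hydrostatic approximation). Here v is the wind velocity, ρ the density, p the pressure, Ω the Earth's rotation vector, g the effective gravity (including the centrifugal term), F friction, T temperature, c_p the specific heat at constant pressure, R the gas constant for dry air and Q the heating rate per unit mass from radiation, latent heat and other processes (the "source term").

```latex
\begin{align}
  \frac{D\mathbf{v}}{Dt} &= -\frac{1}{\rho}\nabla p - 2\boldsymbol{\Omega}\times\mathbf{v} + \mathbf{g} + \mathbf{F}
    && \text{(momentum)}\\
  \frac{\partial \rho}{\partial t} + \nabla\cdot(\rho\mathbf{v}) &= 0
    && \text{(conservation of mass)}\\
  c_p\frac{DT}{Dt} - \frac{1}{\rho}\frac{Dp}{Dt} &= Q
    && \text{(thermodynamic equation)}\\
  p &= \rho R T
    && \text{(ideal-gas equation of state)}
\end{align}
```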

Unfortunately, there is no general analytical solution to the Navier–Stokes equations; indeed, even proving that well-behaved solutions always exist in three dimensions is one of the great unsolved problems in mathematics. Instead, the equations are solved numerically on a 3D lattice of grid points that covers the globe. The spacing between these points dictates the resolution of the model, which is currently limited by available computing power to about 200 km in the horizontal direction and 1 km in the vertical, with finer vertical resolution near the Earth’s surface. Much greater vertical than horizontal resolution is needed because most atmospheric and oceanic structures are shallow compared with their width. The equations allow climate modellers to calculate the physical parameters – temperature, humidity, wind speed and so on – at each grid point from their values a short time earlier. The time interval or “timestep” used must be short enough to give solutions that are accurate and numerically stable; but the shorter the timestep, the more computer time is needed to run the model. Current climate models use timesteps of about 30 min, while the same basic models but with shorter timesteps and higher spatial resolution are used for weather forecasting.
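The link between grid spacing, timestep and computing cost can be made concrete with the classic Courant–Friedrichs–Lewy (CFL) condition for explicit schemes: air (or a wave) should not cross more than one grid box per timestep. The sketch assumes a 100 m/s wind (a strong jet stream) on a 200 km grid, and uses first-order upwind advection of a 1D pulse as a stand-in for a full model. Real climate models use semi-implicit or semi-Lagrangian schemes that relax this limit, so the numbers are illustrative only.

```python
# Illustrative sketch: explicit first-order upwind advection in 1D, not the
# numerical scheme of any particular climate model.

def courant_limited_timestep(dx_m, speed_m_s):
    """Largest timestep (s) for which speed * dt / dx <= 1 (CFL condition)."""
    return dx_m / speed_m_s


def advect_upwind(field, courant, n_steps):
    """Advect a periodic 1D field with the first-order upwind scheme.

    courant = wind_speed * dt / dx, with the wind blowing towards higher index.
    """
    u = list(field)
    for _ in range(n_steps):
        # u[i - 1] wraps around to the last element at i = 0 (periodic domain)
        u = [u[i] - courant * (u[i] - u[i - 1]) for i in range(len(u))]
    return u


def relative_cost(refinement):
    """Approximate cost multiplier when horizontal spacing is divided by
    `refinement`: refinement**2 more columns, each needing refinement times
    as many (shorter) timesteps. Vertical levels are assumed unchanged."""
    return refinement ** 3


dt = courant_limited_timestep(200e3, 100.0)  # 200 km grid, assumed 100 m/s jet
print(f"max timestep: {dt:.0f} s = {dt / 60:.0f} min")

pulse = [1.0 if 25 <= i < 50 else 0.0 for i in range(100)]
stable = advect_upwind(pulse, courant=0.5, n_steps=200)
unstable = advect_upwind(pulse, courant=1.5, n_steps=200)
print(f"C=0.5: max {max(stable):.3f}; C=1.5: max {max(unstable):.3g}")
print(f"halving the grid spacing costs about {relative_cost(2)}x more")
```

With a Courant number of 0.5 the pulse is smeared out but stays bounded between 0 and 1; at 1.5 small-scale ripples grow without limit. Because a finer grid forces a shorter timestep as well as more grid points, the cost of extra resolution grows faster than the number of points alone.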

However, some processes that influence our climate occur on smaller spatial or shorter temporal scales than the resolution of these models. For example, clouds can heat the atmosphere by releasing latent heat, and they also interact strongly with infrared and visible radiation. But most clouds are hundreds of times smaller than the typical computer-model resolution. If clouds were modelled incorrectly, climate simulations would be seriously in error.

Climate modellers deal with such sub-resolution processes using a technique called parametrization, in which the average effect of small-scale processes over a grid box is represented by relationships worked out from observations, theory and case studies with high-resolution models. Examples of cloud parametrization include “convective” schemes, which describe the heavy tropical rainfall that dries the atmosphere through condensation and warms it through the release of latent heat, and “cloud” schemes, which use the winds, temperatures and humidity calculated by the model to simulate the formation and decay of clouds and their effect on radiation.
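A minimal diagnostic cloud scheme illustrates the idea of parametrization: a sub-grid quantity (the fraction of the grid box covered by cloud) is estimated from a grid-box-mean variable the model does calculate (relative humidity). The functional form and the critical relative humidity of 0.8 are assumptions chosen for illustration; operational schemes are considerably more elaborate.

```python
import math


def cloud_fraction(relative_humidity, critical_rh=0.8):
    """Diagnostic grid-box cloud cover from grid-box-mean relative humidity.

    Illustrative scheme (an assumption, not a specific model's): no cloud
    below critical_rh, full cover at saturation, and a smooth increase in
    between, because humidity varies within the box and parts of it
    saturate before the box-mean value reaches 100%.
    """
    if relative_humidity <= critical_rh:
        return 0.0
    if relative_humidity >= 1.0:
        return 1.0
    return 1.0 - math.sqrt((1.0 - relative_humidity) / (1.0 - critical_rh))


for rh in (0.7, 0.85, 0.9, 0.95, 1.0):
    print(f"RH = {rh:.2f} -> cloud fraction {cloud_fraction(rh):.2f}")
```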

Parametrizing interactions in the climate system is a major part of climate-modelling research. For instance, the main external input into the Earth’s climate is electromagnetic radiation from the Sun, so the way the radiation interacts with the atmosphere, ocean and land surface must be accurately described. Since this radiation is absorbed, emitted and scattered by non-uniform distributions of atmospheric gases such as water vapour, carbon dioxide and ozone, we need to work out the average concentration of different gases in a grid box and combine this with spectroscopic data for each gas. The overall heating rate calculated adds to the “source term” in the thermodynamic equation (see figure 2).
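The step from absorbed radiation to a heating rate can be sketched under simplifying assumptions: the fraction absorbed in a layer follows the Beer–Lambert law for a single band with an assumed optical depth, and the absorbed flux is converted to a temperature tendency using the layer's mass per unit area, Δp/g. Real radiation schemes sum over many spectral bands using spectroscopic data for each gas; the incoming flux and optical depth here are illustrative values.

```python
import math

G = 9.81             # gravitational acceleration, m s^-2
CP = 1004.0          # specific heat of dry air at constant pressure, J kg^-1 K^-1
SECONDS_PER_DAY = 86400.0


def layer_transmittance(absorption_coeff, absorber_amount):
    """Beer-Lambert transmittance exp(-k * u) for a single spectral band."""
    return math.exp(-absorption_coeff * absorber_amount)


def heating_rate_k_per_day(flux_absorbed_w_m2, layer_dp_pa):
    """Temperature tendency of a layer that absorbs a net radiative flux.

    The layer's mass per unit area is dp / g, so dT/dt = g * dF / (cp * dp).
    """
    return G * flux_absorbed_w_m2 / (CP * layer_dp_pa) * SECONDS_PER_DAY


incoming = 20.0  # W m^-2 entering the layer in one band (assumed value)
absorbed = incoming * (1.0 - layer_transmittance(0.5, 1.0))  # optical depth 0.5
print(f"absorbed {absorbed:.2f} W m^-2 -> "
      f"{heating_rate_k_per_day(absorbed, 1e4):.2f} K/day over a 100 hPa layer")
```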

The topography of the Earth’s surface, its frictional properties and its reflectivity also vary on scales smaller than the resolution of the model. These are important because they control the exchange of momentum, heat and moisture between the atmosphere and the Earth’s surface. In order to calculate these exchanges and feed them into source terms in the momentum and thermodynamic equations, climate modellers have to parametrize atmospheric turbulence. Numerous other parametrization schemes are now being included and improved in state-of-the-art models, including sea ice, soil characteristics, atmospheric aerosols and atmospheric chemistry.
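Surface exchanges of this kind are commonly parametrized with "bulk" formulae, in which a flux is proportional to the wind speed and to the difference between surface and near-surface air values. The sketch assumes fixed transfer coefficients of 1.2 × 10^-3 and a fixed air density; in practice the coefficients depend on surface roughness and atmospheric stability, which is where the sub-grid topography and frictional properties enter.

```python
RHO_AIR = 1.2    # near-surface air density, kg m^-3 (assumed constant)
CP = 1004.0      # specific heat of dry air at constant pressure, J kg^-1 K^-1


def sensible_heat_flux(wind_speed, t_surface, t_air, c_h=1.2e-3):
    """Bulk formula for the upward sensible heat flux (W m^-2).

    c_h is a dimensionless heat-transfer coefficient (assumed value).
    """
    return RHO_AIR * CP * c_h * wind_speed * (t_surface - t_air)


def surface_stress(wind_speed, c_d=1.2e-3):
    """Bulk formula for the magnitude of the surface momentum flux (N m^-2).

    c_d is a dimensionless drag coefficient (assumed value).
    """
    return RHO_AIR * c_d * wind_speed ** 2


# Example: 5 m/s wind over ground 2 K warmer than the air above it
print(f"sensible heat flux: {sensible_heat_flux(5.0, 290.0, 288.0):.1f} W m^-2")
print(f"surface stress: {surface_stress(5.0):.3f} N m^-2")
```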

In addition to improving parametrizations, perhaps the biggest advance in climate modelling in the past 15 years has been to couple atmospheric models to dynamic models of the ocean. The ocean is crucial for climate because it controls the flux of water vapour and latent heat into the atmosphere, as well as storing large amounts of heat and CO2. In a coupled model, the ocean is fully simulated using the same equations that describe the motion of the atmosphere. This is in contrast to older “slab models” that represented the ocean as simply a stationary block of water that can exchange heat with the atmosphere. These models tended to overestimate how quickly the oceans warm as global temperature increases.

Forcings and feedbacks

The most urgent issue facing climate modellers today is the effect humans are having on the climate system. Parametrizing the interactions between the components of the climate system allows the models to simulate the large natural variability of the climate. But external factors or “radiative forcings” – which also include natural factors like the eruption of volcanoes or variations in solar activity – can have a dramatic effect on the radiation balance of the climate system.

The major anthropogenic forcing is the emission of CO2. The concentration of CO2 in the atmosphere has risen from 280 ppm to 380 ppm since the industrial revolution, and because much of it remains in the atmosphere for a century or more, CO2 has a long-term effect on our climate.
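The size of this forcing is often estimated with a simplified logarithmic expression, ΔF = 5.35 ln(C/C0) W m^-2 (Myhre and colleagues, 1998), which is an empirical fit to detailed radiative-transfer calculations rather than something a climate model uses internally. Applied to the concentrations quoted above:

```python
import math


def co2_forcing(concentration_ppm, reference_ppm=280.0):
    """Approximate CO2 radiative forcing (W m^-2) relative to a reference
    concentration, using the simplified fit dF = 5.35 * ln(C / C0)."""
    return 5.35 * math.log(concentration_ppm / reference_ppm)


print(f"280 -> 380 ppm: {co2_forcing(380.0):.2f} W m^-2")
print(f"doubling to 560 ppm: {co2_forcing(560.0):.2f} W m^-2")
```

The logarithm means that each additional ppm has a slightly smaller effect than the last, so forcing is usually discussed in terms of doublings of CO2.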

While earlier models could tell us the eventual “equilibrium” warming due to, say, a doubling in CO2 concentration, they could not predict accurately how the temperature would change as a function of time. However, because coupled ocean–atmosphere models can simulate the slow warming of the oceans, they allow us to predict this “transient climate response”. Crucially, these state-of-the-art models also allow us to input changing emissions over time to predict how the climate will vary as the anthropogenic forcing increases.
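The difference between equilibrium and transient warming, and why slab-ocean models warm too quickly, can be sketched with a two-layer energy-balance model: an upper ocean mixed layer that exchanges heat with a much larger deep ocean. All parameter values below are assumptions of plausible magnitude, and the model is a conceptual stand-in for a coupled ocean–atmosphere model, not a replacement for one.

```python
def two_layer_response(forcing, years, lam=1.2, c_mix=8.0, c_deep=100.0,
                       gamma=0.7, dt=0.1):
    """Surface temperature anomaly (K) after `years` of constant forcing.

    Conceptual two-box model (all parameter values are assumptions):
    an upper-ocean mixed layer with heat capacity c_mix coupled to a deep
    ocean with heat capacity c_deep through exchange coefficient gamma.
    Heat capacities are in W yr m^-2 K^-1; lam and gamma in W m^-2 K^-1.
    Setting gamma = 0 gives a slab-ocean model with no deep-ocean uptake.
    """
    t_surf, t_deep = 0.0, 0.0
    for _ in range(round(years / dt)):       # forward-Euler time stepping
        uptake = gamma * (t_surf - t_deep)   # heat flux into the deep ocean
        t_surf += dt * (forcing - lam * t_surf - uptake) / c_mix
        t_deep += dt * uptake / c_deep
    return t_surf


F_2X = 3.7  # W m^-2, approximate forcing for doubled CO2
slab = two_layer_response(F_2X, 70, gamma=0.0)
coupled = two_layer_response(F_2X, 70)
print(f"equilibrium warming: {F_2X / 1.2:.2f} K")
print(f"after 70 years - slab: {slab:.2f} K, with deep ocean: {coupled:.2f} K")
```

Without deep-ocean exchange the model reaches its equilibrium warming within a couple of decades; with it, the surface warming after 70 years is still well short of equilibrium, which is the transient climate response in miniature.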

Carbon dioxide is not the only anthropogenic forcing. For example, in 1988 Jim Hansen at the Goddard Institute for Space Studies in the US and colleagues used a climate model to demonstrate the importance of other greenhouse gases such as methane, nitrous oxide and chlorofluorocarbons (CFCs), which are also separately implicated in depleting the ozone layer. Furthermore, in the 1980s sulphate aerosol particles in the troposphere produced by sulphur in fossil-fuel emissions were found to scatter visible light back into space and thus significantly cool the climate. This important effect was first included in a climate model in 1995 by one of the authors (JM) and colleagues at the Hadley Centre. Aerosols also have an indirect effect on climate by causing cloud droplets to become smaller, thus increasing the reflectivity and prolonging the lifetime of clouds. The latest models include these indirect effects, as well as those of natural volcanic aerosols, mineral dust particles and non-sulphate aerosols produced by burning fossil fuels and biomass.

To make matters more complex, the effect of climate forcings can be amplified or reduced by a variety of feedback mechanisms. For example, as the ice sheets melt, the cooling effect they produce by reflecting radiation away from the Earth is reduced – a positive-feedback process known as the ice–albedo effect. Another important feedback process that has been included in models in the past few years involves the absorption and emission of greenhouse gases by the biosphere. In 2000 Peter Cox, then at the Hadley Centre, showed that global warming could lead to the death of vegetation in regions such as the Amazonian rainforests through reduced rainfall, as well as to increased respiration by bacteria in the soil. Both would release additional CO2 to the atmosphere, leading, in turn, to further warming.
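The amplification by feedbacks can be sketched with linear feedback analysis: if an initial warming ΔT0 triggers feedbacks that add a further fraction f of any warming, the total is the geometric series ΔT0(1 + f + f² + ...) = ΔT0/(1 − f) for f < 1. The values used below (a no-feedback warming of 1.2 K for doubled CO2, f = 0.5 for net positive feedback and f = −0.2 for net negative feedback) are illustrative assumptions.

```python
def amplified_warming(no_feedback_warming, feedback_factor):
    """Closed-form linear feedback result: dT0 / (1 - f), valid for f < 1."""
    if not feedback_factor < 1.0:
        raise ValueError("feedback_factor must be < 1 for a stable climate")
    return no_feedback_warming / (1.0 - feedback_factor)


def amplified_warming_by_iteration(no_feedback_warming, feedback_factor,
                                   n_terms=200):
    """Sum the feedback series term by term: each round of warming triggers
    a further feedback_factor times as much."""
    total, increment = 0.0, no_feedback_warming
    for _ in range(n_terms):
        total += increment
        increment *= feedback_factor
    return total


print(f"positive feedback (f=0.5): {amplified_warming(1.2, 0.5):.2f} K")
print(f"negative feedback (f=-0.2): {amplified_warming(1.2, -0.2):.2f} K")
```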

Improvements in computing power since the 1970s have been crucial in allowing additional processes to be included. Although current models typically contain a million lines of code, we can still simulate years of model time per day, allowing us to run simulations many times over with slightly different values of physical parameters (see for example www.climateprediction.net). This allows us to assess how sensitive the predictions of climate models are to uncertainties in these values. As computing power and model resolution increase still further, we will be able to resolve more processes explicitly, reducing the need for parametrization.
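The idea behind such perturbed-parameter ensembles can be sketched with a zero-dimensional model in which the equilibrium warming is F/λ, where F ≈ 3.7 W m^-2 is the forcing for doubled CO2 and λ is the climate feedback parameter. The uniform range assumed for λ below is hypothetical; projects such as climateprediction.net instead perturb many parameters inside a full climate model.

```python
import random
import statistics

F_2X = 3.7  # W m^-2, approximate forcing for doubled CO2


def equilibrium_warming(feedback_parameter):
    """Zero-dimensional equilibrium warming (K) for a feedback parameter in
    W m^-2 K^-1."""
    return F_2X / feedback_parameter


rng = random.Random(42)  # fixed seed so the ensemble is reproducible
# Hypothetical uncertainty range for the feedback parameter, W m^-2 K^-1
lambdas = [rng.uniform(0.8, 2.0) for _ in range(1000)]
warmings = [equilibrium_warming(lam) for lam in lambdas]

print(f"range: {min(warmings):.2f}-{max(warmings):.2f} K, "
      f"median {statistics.median(warmings):.2f} K, "
      f"mean {statistics.mean(warmings):.2f} K")
```

Because warming depends on 1/λ, an evenly spread uncertainty in λ produces a skewed spread in warming, with a longer tail towards high values.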

The accuracy of climate models can be assessed in a number of ways. One important test of a climate model is to simulate a stable “current climate” for thousands of years in the absence of forcings. Indeed, models can now produce climates with tiny changes in surface temperature per century but with year-on-year, seasonal and regional changes that mimic those observed. These include jet streams, trade winds, depressions and anticyclones that would be difficult for even the most experienced forecaster to distinguish from real weather, and even major year-on-year variations like the El Niño–Southern Oscillation.
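A drift check on such a control run can be sketched as a least-squares linear trend in annual global-mean temperature, expressed per century. The series below is synthetic and made up for illustration: a small imposed drift plus an assumed 4-year oscillation standing in for natural year-on-year variability.

```python
import math


def trend_per_century(years, temperatures):
    """Ordinary least-squares linear trend of temperature, in K per century."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temperatures) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temperatures))
    return 100.0 * sxy / sxx  # slope is K per year; convert to K per century


# Synthetic 1000-year "control run" (made-up numbers, not model output)
years = list(range(1000))
temps = [14.0 + 0.0002 * y + 0.2 * math.sin(2 * math.pi * y / 4) for y in years]
print(f"drift: {trend_per_century(years, temps):.3f} K per century")
```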

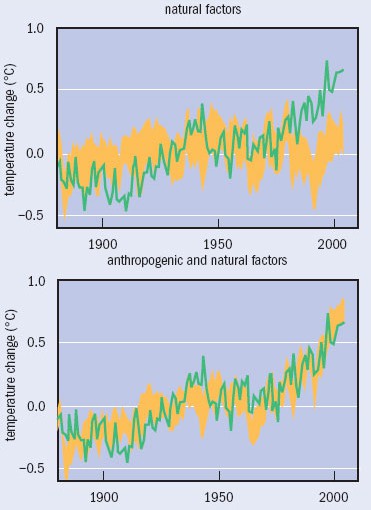

Another crucial test for climate models is that they are able to reproduce observed climate change in the past. In the mid-1990s Ben Santer at the Lawrence Livermore National Laboratory in the US and colleagues strengthened the argument that humans are influencing climate by showing that climate models successfully simulate the spatial pattern of 20th-century climate change only if they include anthropogenic effects. More recently, Peter Stott and co-workers at the Hadley Centre showed that this is also true for the temporal evolution of global temperature (see figure 3). Such results demonstrate the power of climate models in allowing us to add or remove forcings one by one to distinguish the effects humans are having on the climate.
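Separating the effects of different forcings can be sketched as a regression: the observed temperature record is fitted as a weighted sum of the model's responses to anthropogenic and to natural forcings, and the fitted weights ("scaling factors") show whether each response is needed to explain the observations. The sketch uses ordinary least squares on made-up series; published detection-and-attribution studies use more sophisticated "optimal fingerprinting" methods that account for natural variability.

```python
import math


def scaling_factors(observed, anthropogenic, natural):
    """Least-squares fit: observed ~ b_ant * anthropogenic + b_nat * natural.

    Solves the 2x2 normal equations directly with Cramer's rule.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    aa, nn = dot(anthropogenic, anthropogenic), dot(natural, natural)
    an = dot(anthropogenic, natural)
    ao, no = dot(anthropogenic, observed), dot(natural, observed)
    det = aa * nn - an * an
    b_ant = (ao * nn - an * no) / det
    b_nat = (aa * no - an * ao) / det
    return b_ant, b_nat


# Made-up illustrative series (not real model output or observations)
years = range(100)
anthropogenic = [0.01 * t for t in years]           # steadily rising response
natural = [0.1 * math.sin(t / 5.0) for t in years]  # fluctuating response
observed = [1.0 * a + 0.8 * n for a, n in zip(anthropogenic, natural)]

b_ant, b_nat = scaling_factors(observed, anthropogenic, natural)
print(f"anthropogenic factor {b_ant:.2f}, natural factor {b_nat:.2f}")
```

A scaling factor close to 1 means the modelled response matches the observations in size; one consistent with 0 would mean that response is not needed to explain the record.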

Climate models can also be tested against very different climatic conditions further in the past, such as the last ice age, which peaked about 21,000 years ago, and the Holocene warm period that followed it. As no instrumental data are available from this time, the models are tested against “proxy” indicators of temperature change, such as tree rings or ice cores. These data are not as reliable as modern-day measurements, but climate models have successfully reproduced phenomena inferred from the data, such as the southward advance of the Sahara desert over the last 9000 years.

Predicting the future

Having made our models and tested them against current and past climate data, what do they tell us about how the climate might change in years to come? First, we need to input a scenario of future emissions of greenhouse gases. Many different scenarios are used, based on estimates of economic and social factors, and this is one of the major sources of uncertainty in climate prediction. But even if greenhouse-gas emissions are substantially reduced, the long atmospheric lifetime of CO2 means that we cannot avoid further climate change due to CO2 already in the atmosphere.

Predictions vary between the different climate models developed worldwide, largely because of differences in the details of their parametrizations. Cloud parametrizations in particular contribute to the uncertainty because clouds can either cool the climate by reflecting sunlight or warm it by reducing the infrared radiation emitted to space. Such uncertainties, together with the range of possible emissions scenarios, led the third IPCC report in 2001 to project global warming in the range 1.4–5.8 °C by 2100 compared with 1990.

Despite the uncertainties, however, all models show that the Earth will warm in the next century, with a consistent geographical pattern (figure 4). For example, positive feedback from the ice–albedo effect produces greater warming near the poles, particularly in the Arctic. Oceans, on the other hand, will warm more slowly than the land due to their large thermal inertia. Average rainfall is expected to increase because warmer air can hold a greater amount of water before becoming saturated. However, this extra capacity for atmospheric moisture will also allow more evaporation, drying of the soil and soaring temperatures in continental areas in summer.
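The increase in the atmosphere's moisture-holding capacity follows from the Clausius–Clapeyron relation. The sketch below uses Bolton's (1980) empirical fit for the saturation vapour pressure over water to estimate the increase per degree near an assumed surface temperature of 15 °C; the result, close to 7% per kelvin, is the increase in capacity, not a prediction of the change in rainfall.

```python
import math


def saturation_vapour_pressure_hpa(t_celsius):
    """Saturation vapour pressure over liquid water (hPa), Bolton (1980) fit."""
    return 6.112 * math.exp(17.67 * t_celsius / (t_celsius + 243.5))


t = 15.0  # assumed typical near-surface temperature, deg C
increase = saturation_vapour_pressure_hpa(t + 1.0) / saturation_vapour_pressure_hpa(t) - 1.0
print(f"saturation vapour pressure rises {increase * 100:.1f}% per K near {t:.0f} C")
```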

Sea levels are predicted to rise by about 40 cm (with considerable uncertainty) by 2100 due largely to thermal expansion of the oceans and melting of land ice. This may seem like a small rise, but much of the human population lives in coastal zones where people are particularly at risk from enhanced storm flooding – in Bangladesh, for example, many millions of people could be displaced. In the longer term, there are serious concerns over melting of the Greenland and West Antarctic ice sheets that could lead to much greater increases in sea level.
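The thermal-expansion contribution can be estimated to order of magnitude by treating a warmed layer of ocean as a column that expands uniformly, so that the rise is α ΔT H for expansion coefficient α, warming ΔT and layer depth H. The coefficient of 2 × 10^-4 per kelvin and the warmings and depths below are illustrative assumptions; the real coefficient varies strongly with temperature, salinity and pressure, and projected sea-level rise also includes melting land ice.

```python
def thermal_expansion_rise_m(warming_k, layer_depth_m, expansion_coeff_per_k=2e-4):
    """Sea-level rise (m) from uniformly warming a water column.

    Assumes a single constant thermal expansion coefficient (an assumption;
    in seawater it varies strongly with temperature, salinity and pressure).
    """
    return expansion_coeff_per_k * warming_k * layer_depth_m


print(f"0.5 K over the top 700 m: {thermal_expansion_rise_m(0.5, 700.0):.2f} m")
print(f"1 K over the top 1000 m: {thermal_expansion_rise_m(1.0, 1000.0):.2f} m")
```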

We still urgently need to improve the modelling and observation of many processes to refine climate predictions, especially on seasonal and regional scales. For example, hurricanes and typhoons are still not represented in many models and other phenomena such as the Gulf Stream are poorly understood due to lack of observations. We are therefore not confident of how hurricanes and other storms may change as a result of global warming, if at all, or how close we might be to a major slowing of the Gulf Stream.

Though they will be further refined, there are many reasons to trust the predictions of current climate models. Above all, they are based on established laws of physics and embody our best knowledge about the interactions and feedback mechanisms in the climate system. Over a period of a few days, models can forecast the weather skilfully; they also do a remarkable job of reproducing the current worldwide climate as well as the global mean temperature over the last century. They also simulate the dramatically different climates of the last ice age and the Holocene warm period, which were the result of forcings comparable in size to the anthropogenic forcing expected by the end of the 21st century.

Although there may be a few positive aspects to global warming – for instance high-latitude regions may experience extended growing seasons and new shipping routes are likely to be opened up in the Arctic as sea ice retreats – the great majority of impacts are likely to be negative. Hotter conditions are likely to stress many tropical forests and crops, while outside the tropics, events like the 2003 heatwave that led to the deaths of tens of thousands of Europeans are likely to be commonplace by 2050. This year is already predicted to be the hottest on record.

We are at a critical point in history where not only are we having a discernible effect on the Earth’s climate, but we are also developing the capability to predict this effect. Climate prediction is one of the largest international programmes of scientific research ever undertaken and it led to the 1997 Kyoto Protocol set up by the United Nations to address greenhouse-gas emissions. Although the protocol has so far led to few changes in atmospheric greenhouse-gas concentrations, the landmark agreement paves the way for further emissions cuts. Better modelling of natural seasonal and regional climate variations is still needed to improve our estimates of the impacts of anthropogenic climate change. But we are already faced with a clear challenge: to use existing climate predictions wisely and develop responsible mitigation and adaptation policies to protect ourselves and the rest of the biosphere.

At a glance: Climate modelling

- The scientific consensus is that the observed warming of the Earth during the past half-century is mostly due to human emissions of greenhouse gases

- Predicting climate change depends on sophisticated computer models developed over the past 50 years

- Climate models are based on the Navier–Stokes equations for fluid flow, which are solved numerically on a grid covering the globe

- These models have been very successful in simulating the past climate, giving researchers confidence in their predictions

- The projected increase in global temperature by 2100 is in the range 1.4–5.8 °C, which could have catastrophic consequences

More about: Climate modelling

www.metoffice.gov.uk/research/hadleycentre

www.ipcc.ch

www.climateprediction.net

J T Houghton 2005 Climate Change: The Complete Briefing (Cambridge University Press)

K McGuffie and A Henderson-Sellers 2004 A Climate Modelling Primer (Wiley, New York)