Part of our International Year of Quantum Science and Technology coverage

Isabelle Dumé reports from the Q2B quantum conference in Paris, France

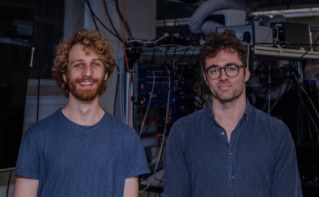

This year’s Q2B meeting took place at the end of last month in Paris at the Cité des Sciences et de l’Industrie, a science museum in the north-east of the city. The event brought together more than 500 attendees and 70 speakers – world-leading experts from industry, government institutions and academia. All major quantum technologies were highlighted: computing, AI, sensing, communications and security.

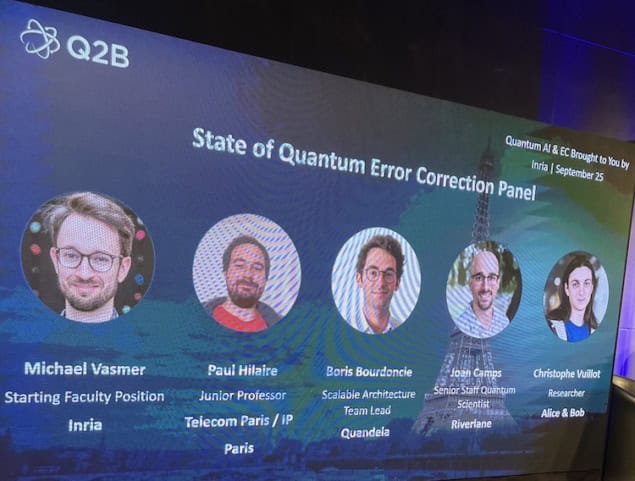

Among the quantum computing topics was quantum error correction (QEC) – something that will be essential for building tomorrow’s fault-tolerant machines. Indeed, it could even be the technology’s most important and immediate challenge, according to the speakers on the State of Quantum Error Correction Panel: Paul Hilaire of Telecom Paris/IP Paris, Michael Vasmer of Inria, Quandela’s Boris Bourdoncle, Riverlane’s Joan Camps and Christophe Vuillot from Alice & Bob.

As was clear from the conference talks, quantum computers are advancing in leaps and bounds. One of their most important weak points, however, is that their fundamental building blocks (quantum bits, or qubits) are highly prone to errors. These errors are caused by interactions with the environment – also known as noise – and correcting them will require innovative software and hardware. Today’s machines can run only a few hundred operations on average before an error occurs. In the future, however, we will need quantum computers capable of processing a million error-free quantum operations (known as a MegaQuOp) or even a trillion error-free operations (TeraQuOps).
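To get a feel for the gap between a few hundred operations and a MegaQuOp, here is a rough back-of-the-envelope sketch. It assumes that every operation fails independently with the same probability, which is a simplification, and the function names and the figure of 300 operations are illustrative choices rather than values quoted at the conference.

```python
import math

def success_probability(p_error: float, n_ops: int) -> float:
    """Chance that n_ops operations all succeed, assuming independent errors."""
    # (1 - p)^n, computed via logarithms to stay accurate for tiny p
    return math.exp(n_ops * math.log1p(-p_error))

def required_error_rate(n_ops: int, target_success: float = 0.5) -> float:
    """Largest per-operation error rate that still gives the target success chance."""
    # Solve (1 - p)^n = target for p
    return -math.expm1(math.log(target_success) / n_ops)

# Hypothetical machine: one error every ~300 operations on average
p_today = 1 / 300
print(success_probability(p_today, 300))        # chance of 300 clean operations
print(success_probability(p_today, 1_000_000))  # chance of a clean MegaQuOp
print(required_error_rate(1_000_000))           # error rate needed for a 50% chance
```

Under these assumptions, an even chance of completing a million operations needs an error rate below about one in a million per operation, which is why error correction rather than better physical qubits alone is expected to close the gap.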

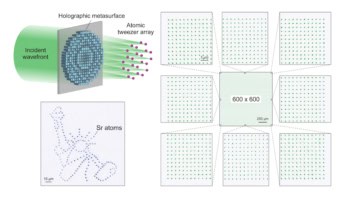

QEC works by distributing one quantum bit of information – called a logical qubit – across several different physical qubits, such as superconducting circuits or trapped atoms. Each physical qubit is noisy, but they work together to preserve the quantum state of the logical qubit – at least for long enough to perform a calculation. Peter Shor was the first to formulate a quantum error-correcting code of this kind, storing the information of one qubit in a highly entangled state of nine qubits. A technique known as syndrome decoding is then used to diagnose which error most likely corrupted the encoded state. The error can then be reversed by applying a corrective operation that depends on the syndrome.
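The idea of syndrome decoding can be illustrated with a much simpler code than Shor's: the three-bit repetition code, which protects against a single bit flip. The sketch below is a purely classical toy model (it ignores phase errors and superposition), and all function names are illustrative. Two parity checks form the syndrome, and a lookup table maps each syndrome to the most likely single error.

```python
# Syndrome (parity of bits 0,1 ; parity of bits 1,2) -> bit to flip back.
# None means no error was detected.
SYNDROME_TABLE = {
    (0, 0): None,
    (1, 0): 0,
    (1, 1): 1,
    (0, 1): 2,
}

def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]

def measure_syndrome(bits: list[int]) -> tuple[int, int]:
    """Compare neighbouring bits without reading out the logical value."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

def correct(bits: list[int]) -> list[int]:
    """Apply the corrective flip chosen by the syndrome."""
    fixed = list(bits)
    faulty = SYNDROME_TABLE[measure_syndrome(bits)]
    if faulty is not None:
        fixed[faulty] ^= 1
    return fixed

# Example: encode a 1, flip the middle bit, then repair it
noisy = encode(1)
noisy[1] ^= 1
print(measure_syndrome(noisy), correct(noisy))
```

Note that the syndrome reveals where the error sits but not whether the logical bit is 0 or 1, which is the property that lets a quantum code diagnose errors without destroying the encoded state. Two simultaneous flips would be misdiagnosed, so this toy code corrects at most one error.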

While error correction should become more effective as the number of physical qubits in a logical qubit increases, adding more physical qubits to a logical qubit also adds more noise. In recent years, however, much progress has been made in addressing this and other noise issues.

“We can say there’s a ‘fight’ when increasing the length of a code,” explains Hilaire. “Doing so allows us to correct more errors, but we also introduce more sources of errors. The goal is thus being able to correct more errors than we introduce. What I like with this picture is the clear idea of the concept of a fault-tolerant threshold below which fault-tolerant quantum computing becomes feasible.”
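The threshold behaviour Hilaire describes can be seen in a toy calculation. The sketch below assumes a classical repetition code of odd length d, independent bit flips with probability p, and perfect syndrome measurements, so that decoding reduces to a majority vote. These are idealized assumptions, and the function name is illustrative. In this model the threshold sits at p = 0.5, whereas realistic quantum codes with noisy measurements have far lower thresholds.

```python
from math import comb

def logical_error_rate(p: float, d: int) -> float:
    """Probability that a majority vote over d bits fails (d must be odd)."""
    # The vote fails when more than half of the d bits have flipped
    return sum(
        comb(d, k) * p**k * (1 - p) ** (d - k)
        for k in range((d + 1) // 2, d + 1)
    )

# Below threshold, longer codes help; above it, they make things worse
for p in (0.1, 0.6):
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    print(p, [round(r, 5) for r in rates])
```

For p = 0.1 the logical error rate falls as the code grows, while for p = 0.6 it rises: the extra bits introduce more errors than the code can correct.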

Developments in QEC theory

Speakers at the Q2B25 meeting shared a comprehensive overview of the most recent advancements in the field – and they are varied. First up, concatenated error correction codes. Prevalent in the early days of QEC, these fell by the wayside in favour of codes like the surface code, but recent work shows they are making a return. Concatenated codes can achieve constant encoding rates, and a quantum-computer architecture operating with linear, nearest-neighbour connectivity was recently put forward. Directional codes, the likes of which are being developed by Riverlane, are also being studied. These leverage native transmon qubit logic gates – for example, iSWAP gates – and could potentially outperform surface codes in some aspects.

The panellists then described bivariate bicycle codes, being developed by IBM, which offer better encoding rates than surface codes. While their decoding can be challenging for real-time applications, IBM’s “relay belief propagation” (relay BP) has made progress here by simplifying decoding strategies that previously involved combining BP with post-processing. Helpfully, this decoder is very general and works for all “low-density parity check codes” – one of the most studied classes of high-performance QEC codes (these also include, for example, surface codes and directional codes).

There is also renewed interest in decoders that can be parallelized and operate locally within a system, they said. These have shown promise for codes like the 1D repetition code, which could revive the concept of self-correcting or autonomous quantum memory. Another possibility is the increased use of the graphical language ZX calculus as a tool for optimizing QEC circuits and understanding spacetime error structures.

Hardware-specific challenges

The panel stressed that to achieve robust and reliable quantum systems, we will need to move beyond so-called hero experiments. For example, the demand for real-time decoding at megahertz frequencies with microsecond latencies is an important and unprecedented challenge. Indeed, breaking down the decoding problem into smaller, manageable bits has proven difficult so far.

There are also issues with qubit platforms themselves that need to be addressed: trapped ions and neutral atoms allow for high fidelities and long coherence times, but they are roughly 1000 times slower than superconducting and photonic qubits and therefore require algorithmic or hardware speed-ups. And that is not all: solid-state qubits (such as superconducting and spin qubits) suffer from a “yield problem”, with dead qubits on manufactured chips. Improved fabrication methods will thus be crucial, said the panellists.

Collaboration between academia and industry

The discussions then moved towards the subject of collaboration between academia and industry. In the field of QEC, such collaboration is highly productive today, with joint PhD programmes and shared conferences such as Q2B. Large companies also now boast substantial R&D departments capable of funding high-risk, high-reward research, blurring the lines between fundamental and application-oriented research. Both sectors also use similar foundational mathematics and physics tools.

At the moment there’s an unprecedented degree of openness and cooperation in the field. This situation might change, however, as commercial competition heats up, noted the panellists. In the future, for example, researchers from both sectors might be less inclined to share experimental chip details.

Last, but certainly not least, the panellists stressed the urgent need for more PhDs trained in quantum mechanics to address the talent deficit in both academia and industry. So, if you were thinking of switching to another field, perhaps now could be the time to jump.