Artificial intelligence (AI) has the potential to generate a sea change in the practice of radiology, much like the introduction of radiology information system (RIS) and picture archiving and communication system (PACS) technology did in the late 1990s and 2000s. However, AI-driven software must be accurate, safe and trustworthy, factors that may not be easy to assess.

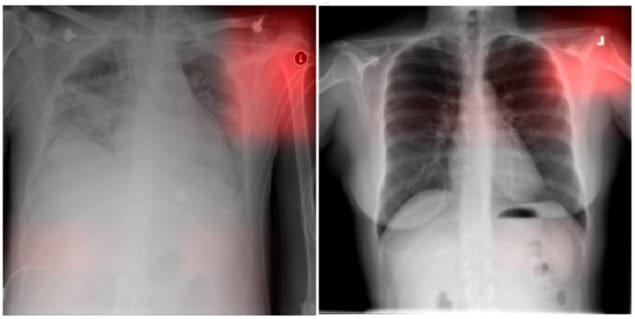

Machine learning software is trained on databases of radiology images. But these databases might lack the data or procedures needed to prevent algorithmic bias. Such bias can cause clinical errors and performance disparities that affect a subset of the analyses that the AI performs, unintentionally disadvantaging certain groups of patients.

A multinational team of radiology informaticists, biomedical engineers and computer scientists has identified potential pitfalls in the evaluation and measurement of algorithmic bias in AI radiology models. Describing their findings in Radiology, the researchers also suggest best practices and future directions to mitigate bias in three key areas: medical image datasets; demographic definitions; and statistical evaluations of bias.

Medical imaging datasets

The medical image datasets used for training and evaluation of AI algorithms are reflective of the population from which they are acquired. It is natural that a dataset acquired in a country in Asia will not be representative of the population in a Nordic country, for example. But if there’s no information available about the image acquisition location, how might this potential source of bias be determined?

Lead author Paul Yi, of St. Jude Children’s Research Hospital in Memphis, TN, and coauthors advise that many existing medical imaging databases lack a comprehensive set of demographic characteristics, such as age, sex, gender, race and ethnicity. Additional potential confounding factors include the scanner brand and model, the radiology protocols used for image acquisition, radiographic views acquired, the hospital location and disease prevalence. In addition to incorporating these data, the authors recommend that raw image data are collected and shared without institution-specific post-processing.

The team advise that generative AI, a set of machine learning techniques that generate new data, provides the potential to create synthetic imaging datasets with more balanced representation of both demographic and confounding variables. This technology is still in development, but might provide a solution to overcome pitfalls related to measurement of AI biases in imperfect datasets.

Defining demographics

Radiology researchers lack consensus with respect to how demographic variables should be defined. Observing that demographic categories such as gender and race are self-identified characteristics informed by many factors, including society and lived experiences, the authors advise that concepts of race and ethnicity do not necessarily translate outside of a specific society and that biracial individuals reflect additional complexity and ambiguity.

They emphasize that accurate measurement of race- and/or ethnicity-based biases in AI models is important to enable meaningful comparison of bias evaluations. This not only has clinical implications, but is also essential to prevent health policies from being established on the basis of erroneous AI-derived findings, which could perpetuate pre-existing inequities.

Statistical evaluations of bias

The researchers define bias in the context of demographic fairness and how it reflects differences in metrics between demographic groups. However, establishing consensus on the definition of bias is complex, because bias can have different clinical and technical meanings. They point out that in statistics, bias refers to a discrepancy between the expected value of an estimated parameter and its true value.
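The two meanings can be written side by side. The notation here is an illustrative assumption rather than the authors' own: $\hat{\theta}$ is an estimator of a true parameter $\theta$, and $m(g)$ is any performance metric (sensitivity, for example) computed on demographic group $g$.

$$
\operatorname{Bias}(\hat{\theta}) = \mathbb{E}[\hat{\theta}] - \theta
\qquad \text{versus} \qquad
\Delta_m(g_1, g_2) = m(g_1) - m(g_2)
$$

The first quantity compares an estimate with the truth; the second compares two groups with each other, so a model can be unbiased in the statistical sense and still show a non-zero gap between groups.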

As such, the radiology speciality needs to establish a standard notion of bias, as well as tackle the incompatibility of fairness metrics, the tools that measure whether a machine learning model treats certain demographic groups differently. Currently there is no universal fairness metric that can be applied to all cases and problems, and the authors do not think there ever will be one.
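The incompatibility can be seen in a small, entirely hypothetical example. The sketch below is not taken from the Radiology paper; the function names and the toy data are assumptions made for illustration. It computes three common per-group quantities (selection rate, sensitivity and false-positive rate) for two groups with different disease prevalence.

```python
from collections import defaultdict


def _ratio(numerator, denominator):
    """Safe division: NaN when a group has no cases of the relevant class."""
    return numerator / denominator if denominator else float("nan")


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, sensitivity (TPR) and false-positive rate."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if pred:
            key = "tp" if truth else "fp"
        else:
            key = "fn" if truth else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        total = sum(c.values())
        rates[group] = {
            "selection_rate": _ratio(c["tp"] + c["fp"], total),
            "tpr": _ratio(c["tp"], c["tp"] + c["fn"]),
            "fpr": _ratio(c["fp"], c["fp"] + c["tn"]),
        }
    return rates


def gap(rates, metric):
    """Largest between-group difference for one metric."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical toy data: group A has 60% prevalence, group B has 20%.
y_true = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 8
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0]
groups = ["A"] * 10 + ["B"] * 10

rates = group_rates(y_true, y_pred, groups)
gaps = {m: gap(rates, m) for m in ("selection_rate", "tpr", "fpr")}
print(rates)
print(gaps)
```

In this toy case, sensitivity and false-positive rate are identical in both groups, yet the fraction of patients flagged as positive differs because the prevalence differs. A metric based on equal error rates would call the model fair, whereas one based on equal selection rates would not.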

Predictive AI models can perform differently at different operating points (the decision thresholds applied to their outputs), which could in turn lead to different demographic biases. These operating points need to be documented, and the thresholds used should be reported both in research and by commercial AI software vendors.
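A minimal sketch of why the operating point matters, using made-up scores rather than any real model output: the same set of predictions is thresholded at three values, and the sensitivity gap between two hypothetical groups is reported for each. All names and numbers are assumptions for illustration.

```python
def sensitivity(scores, labels, threshold):
    """Fraction of true positives whose score reaches the threshold."""
    positive_scores = [s for s, y in zip(scores, labels) if y == 1]
    detected = sum(1 for s in positive_scores if s >= threshold)
    return detected / len(positive_scores)


# Hypothetical model scores and ground-truth labels for two groups.
data = {
    "group_a": {"scores": [0.9, 0.8, 0.7, 0.6, 0.4, 0.2],
                "labels": [1, 1, 1, 1, 0, 0]},
    "group_b": {"scores": [0.9, 0.6, 0.4, 0.3, 0.5, 0.1],
                "labels": [1, 1, 1, 1, 0, 0]},
}


def sensitivity_gap_by_threshold(data, thresholds):
    """Map each threshold to the max-min sensitivity difference across groups."""
    result = {}
    for t in thresholds:
        per_group = [
            sensitivity(d["scores"], d["labels"], t) for d in data.values()
        ]
        result[t] = max(per_group) - min(per_group)
    return result


threshold_gaps = sensitivity_gap_by_threshold(data, [0.3, 0.5, 0.7])
for t, g in threshold_gaps.items():
    print(f"threshold={t}: sensitivity gap={g}")
```

With these toy numbers the gap is zero at the lowest threshold and 0.5 at the other two, so a bias evaluation reported without its threshold cannot be reproduced or compared.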

Key recommendations

The authors suggest some key courses of action to mitigate demographic biases in AI in radiology:

- Improve reporting of demographics by establishing a consensus panel to define and update reporting standards.

- Improve dataset reporting of non-demographic factors, such as imaging scanner vendor and model.

- Develop a standard lexicon of terminology for concepts of fairness and AI bias in radiology.

- Develop standardized statistical analysis frameworks for evaluating demographic bias of AI algorithms based on clinical contexts.

- Require greater demographic detail to evaluate algorithmic fairness in scientific manuscripts relating to AI models.

Yi and co-lead collaborator Jeremias Sulam, of Hopkins BME, Whiting School of Engineering, tell Physics World that their assessment of pitfalls and recommendations to mitigate demographic biases reflect years of multidisciplinary discussion. “While both the clinical and computer science literature had been discussing algorithmic bias with great enthusiasm, we learned quickly that the statistical notions of algorithmic bias and fairness were often quite different between the two fields,” says Yi.

“We noticed that progress to minimize demographic biases in AI models is often hindered by a lack of effective communication between the computer science and statistics communities and the clinical world, radiology in particular,” adds Sulam.

A collective effort to address the challenges posed by bias and fairness is important, notes Melissa Davis of Yale School of Medicine, in an accompanying editorial in Radiology. “By fostering collaboration between clinicians, researchers, regulators and industry stakeholders, the healthcare community can develop robust frameworks that prioritize patient safety and equitable outcomes,” she writes.