Sending an email, typing a text message, streaming a movie. Many of us do these things every day. But what if you couldn’t move your muscles to navigate the digital world? This is where brain–computer interfaces (BCIs) come in.

BCIs that are implanted in the brain can bypass pathways damaged by illness or injury. They analyse neural signals and translate them into outputs for the user, such as commands for interacting with a computer.

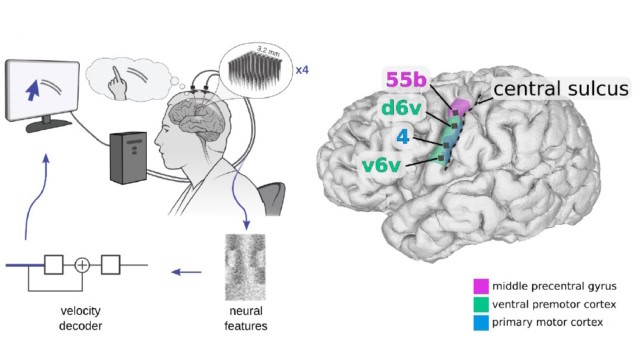

A major focus for scientists developing BCIs has been to interpret the brain activity associated with movement so that it can control a computer cursor. The user drives such a BCI by imagining arm and hand movements, and the neural activity behind these movements often originates in the dorsal motor cortex. Speech BCIs have also been developed; these restore communication by decoding attempted speech from neural activity in sensorimotor cortical areas such as the ventral precentral gyrus.

Researchers at the University of California, Davis recently found that the same part of the brain that supported a speech BCI could also support computer cursor control for an individual with amyotrophic lateral sclerosis (ALS). ALS is a progressive neurodegenerative disease that affects the motor neurons in the brain and spinal cord.

“Once that capability [to control a computer mouse] became reliably achievable roughly a decade ago, it stood to reason that we should go after another big challenge, restoring speech, that would help people unable to speak. And from there – and this is where this new paper comes in – we recognized that patients would benefit from both of these capabilities [speech and computer cursor control],” says Sergey Stavisky, who co-directs the UC Davis Neuroprosthetics Lab with David Brandman.

Their clinical case study suggests that computer cursor control may not be as body-part-specific as scientists previously believed. If the results can be replicated, this could enable the creation of multimodal BCIs that restore both communication and movement to people with paralysis. The researchers describe their cursor BCI and the case study in the Journal of Neural Engineering.

The study participant, a 45-year-old man with ALS, had previous success working with a speech BCI. The researchers recorded neural activity from the participant’s ventral precentral gyrus while he imagined controlling a computer cursor, and built a BCI to interpret that neural activity and predict where and when he wanted to move and click the cursor. The participant then used the new cursor BCI to send texts and emails, watch Netflix, and play The New York Times Spelling Bee game on his personal computer.

“This finding, that the tiny region of the brain we record from has a lot more than just speech information, has led to the participant also being able to control his own computer on a daily basis, and get back some independence for him and his family,” says first author Tyler Singer-Clark, a graduate student in biomedical engineering at UC Davis.

The researchers found that most of the information driving cursor movement came from just one of the participant’s four implanted microelectrode arrays, whereas click information was available on all four arrays.


“The neural recording arrays are the same ones used in many prior studies,” explains Singer-Clark. “The result that our cursor BCI worked well given this choice makes it all the more convincing that this brain area (speech motor cortex) has untapped potential for controlling BCIs in multiple useful ways.”

The researchers are working to incorporate more computer actions into their cursor BCI, to make the control faster and more accurate, and to reduce calibration time. They also note that it’s important to replicate these results in more people to understand how generalizable the results of their case study may be.

The research was conducted as part of the BrainGate2 clinical trial.