A new computer algorithm that corrects the optical aberrations arising when the back of the eye is imaged has been demonstrated by researchers in the US. The team’s method should make it easier to bring the benefits of adaptive optics, a technique more commonly used in astronomy, into the clinic. It does not need expensive optical hardware and, according to the researchers, could help clinicians diagnose degenerative eye and neurological diseases earlier, when treatment is more likely to succeed.

Optical coherence tomography is an interferometry-based medical imaging technique analogous to ultrasound imaging, but using light instead of sound. It is the standard of care for diagnosing and monitoring several medical conditions, such as age-related macular degeneration, in which the tissue underneath the retina begins to thicken, leading to nutrient starvation and the eventual death of photoreceptors.
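The ultrasound analogy can be made concrete with a toy model. Retinal OCT systems commonly use a Fourier-domain design, in which light returning from each depth interferes with a reference beam and shows up as fringes across the detected spectrum, with deeper reflectors producing faster fringes. The article does not say which variant the team used, so treat this purely as a generic illustration. The sketch below, whose function names are hypothetical, idealises a single reflector (depth measured in units of one depth bin) and recovers its depth from the Fourier transform of the spectrum, much as an ultrasound scanner recovers depth from echo delay.

```python
import numpy as np

def simulate_interferogram(depth_bin, n_samples=1024):
    """Idealised spectral interferogram for one reflector (hypothetical model).

    A reflector at depth bin m produces m fringe cycles across the
    evenly sampled spectrum.
    """
    n = np.arange(n_samples)
    return np.cos(2 * np.pi * depth_bin * n / n_samples)

def a_scan(interferogram):
    """Depth profile: magnitude of the Fourier transform of the spectrum.

    The mean is removed first so the zero-depth (DC) term does not dominate.
    """
    return np.abs(np.fft.rfft(interferogram - interferogram.mean()))

def reflector_depth_bin(interferogram):
    """Depth bin of the strongest reflector in the A-scan."""
    return int(np.argmax(a_scan(interferogram)))

# Two reflectors: a strong one at bin 137 and a weaker one at bin 300.
spectrum = simulate_interferogram(137) + 0.5 * simulate_interferogram(300)
print(reflector_depth_bin(spectrum))
```

In a real instrument each bin corresponds to a physical depth step set by the light source’s spectral bandwidth, and the raw spectrum needs resampling and dispersion correction before the transform; those steps are omitted here.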

Reduced resolution

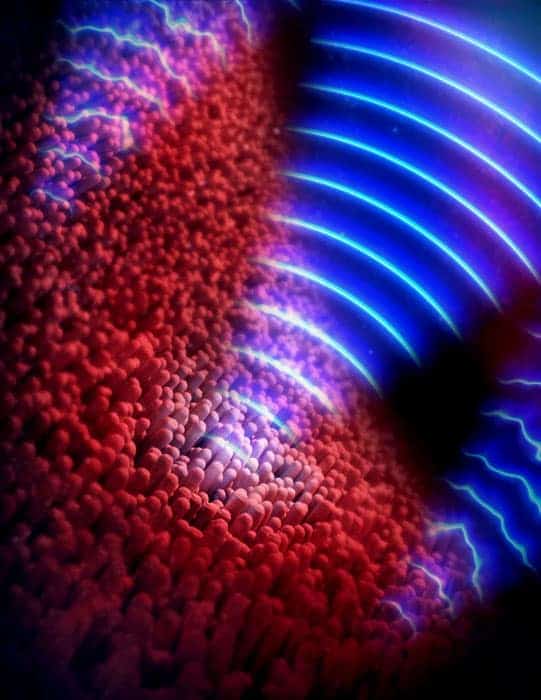

However, the light used to image the retina has to pass through the patient’s eye, and imperfections specific to each eye introduce aberrations into the image. These reduce the resolution to the point where individual photoreceptors cannot be imaged, so researchers have to infer the microscopic progress of a disease from macroscopic details. In adaptive optics, first developed to remove the distortions that the atmosphere introduces into astronomical images, a wavefront sensor measures the distortion of the reflected wavefronts and a deformable mirror is continuously reshaped to cancel it.
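The measure-and-correct loop of hardware adaptive optics can be caricatured in a few lines. This is a minimal sketch, not a model of any particular instrument: it assumes the aberration is described by a handful of modal coefficients (for example Zernike modes), that the wavefront sensor reports the residual error directly, and that the mirror is updated by a simple integrator with gain `gain`. The function name `ao_closed_loop` is hypothetical.

```python
import numpy as np

def ao_closed_loop(aberration, gain=0.5, n_iters=20, noise_std=0.0, seed=0):
    """Toy integrator controller for a deformable mirror (hypothetical model).

    aberration: modal coefficients of the eye's wavefront error.
    Returns the final mirror shape and the residual RMS error after each step.
    """
    rng = np.random.default_rng(seed)
    aberration = np.asarray(aberration, dtype=float)
    mirror = np.zeros_like(aberration)
    rms_history = []
    for _ in range(n_iters):
        # The wavefront sensor sees whatever the mirror has not yet cancelled.
        measured = aberration - mirror
        if noise_std > 0:
            measured = measured + rng.normal(0.0, noise_std, size=measured.shape)
        # Integrator update: nudge the mirror towards the measured error.
        mirror += gain * measured
        residual = aberration - mirror
        rms_history.append(float(np.sqrt(np.mean(residual ** 2))))
    return mirror, rms_history

mirror, rms = ao_closed_loop([0.3, -0.2, 0.1])
print(rms[0], rms[-1])
```

Without noise, each iteration here halves the residual error. With a noisy sensor, a smaller gain averages out more of the noise at the cost of slower convergence, which is one reason real systems tune this parameter carefully.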

These corrections can improve image resolution dramatically, but the sophisticated optical hardware required slows down imaging and adds considerably to the cost of the system, nearly doubling it. In addition, tiny involuntary eye movements can alter the phase of the detected light waves and blur interferometric images, making it difficult to identify the optical aberrations that need correcting.
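A short worked estimate shows why such small movements matter. In an interferometric measurement the light travels to the reflecting layer and back, so an axial shift $\Delta z$ of tissue with refractive index $n$, probed at wavelength $\lambda$, changes the detected phase by

$$\Delta\phi = \frac{4\pi n\,\Delta z}{\lambda}.$$

Taking, purely for illustration, $\lambda = 840$ nm and $n \approx 1.38$ (neither value is given in the article), a shift of only 100 nm gives

$$\Delta\phi = \frac{4\pi \times 1.38 \times 100\ \text{nm}}{840\ \text{nm}} \approx 2.1\ \text{rad},$$

roughly a third of a full cycle, which is enough to scramble the phase information needed to pick out the aberrations.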

Stephen Boppart and colleagues at the University of Illinois at Urbana-Champaign, as well as competing groups, have in recent years published several papers on so-called “computational adaptive optics”, in which these corrections are applied by image-processing software rather than optical hardware. In their new research, they unveil a multi-stage algorithm for enhancing retinal images that can run on the graphics card of a high-end desktop computer, and use it to image the retina in unprecedented detail for a system without hardware adaptive optics.

Fixing imperfections

First, the algorithm corrects the phase, allowing the optical aberrations to be clearly identified. Second, a software step that identifies and corrects large bulk aberrations reveals a few obvious photoreceptors, although these still appear blurred. Finally, the algorithm calculates the detailed corrections needed to bring these photoreceptors into sharp focus and applies them to the image. The photoreceptors thus play a role similar to that of the guide stars sometimes used to steer the corrections in astronomical adaptive optics.
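The core idea behind the final correction stages can be illustrated with a toy model. In one common formulation of software-based aberration correction (an assumption here; the article does not give the team’s equations), the complex image is Fourier transformed, multiplied by a phase correction that varies across spatial frequency, transformed back, and the correction is tuned until the image is as sharp as possible. The sketch below uses a single point scatterer as a stand-in for an isolated photoreceptor, a single hypothetical defocus-like mode rather than a full set of Zernike modes, and a brute-force search over candidate strengths. All function names are hypothetical.

```python
import numpy as np

def defocus_phase(n, coeff):
    """Quadratic (defocus-like) phase over an n x n spatial-frequency grid.

    Hypothetical single-mode model; real systems fit several Zernike modes.
    """
    k = np.fft.fftfreq(n)  # cycles per pixel, in [-0.5, 0.5)
    kx, ky = np.meshgrid(k, k, indexing="xy")
    r2 = (kx**2 + ky**2) / 0.25  # normalised so r2 = 1 at the Nyquist radius
    return coeff * r2

def apply_phase(field, coeff):
    """Multiply the image spectrum by exp(i * phase) and transform back.

    Assumes a square complex image (OCT data keep both amplitude and phase).
    """
    spectrum = np.fft.fft2(field)
    return np.fft.ifft2(spectrum * np.exp(1j * defocus_phase(field.shape[0], coeff)))

def sharpness(field):
    """Sum of squared normalised intensity: 1 if all energy is in one pixel."""
    intensity = np.abs(field) ** 2
    intensity /= intensity.sum()
    return float(np.sum(intensity ** 2))

def correct_defocus(field, candidates):
    """Try each candidate correction and keep the one giving the sharpest image."""
    scores = [sharpness(apply_phase(field, -c)) for c in candidates]
    best = float(candidates[int(np.argmax(scores))])
    return best, apply_phase(field, -best)

# A single "photoreceptor" blurred by a known amount of defocus.
field = np.zeros((64, 64), dtype=complex)
field[20, 40] = 1.0
blurred = apply_phase(field, 3.0)
best, corrected = correct_defocus(blurred, np.linspace(-5.0, 5.0, 41))
print(best, sharpness(blurred), sharpness(corrected))
```

Because this correction only reweights the phase of each spatial frequency, it conserves the image’s total energy; sharpening works by refocusing that energy rather than amplifying it. Practical systems replace the grid search with a numerical optimiser over many modes, which is where the computing power of a graphics card becomes useful.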

The researchers imaged the eye of a volunteer, looking at an area near the centre of the retina called the fovea. They produced extremely detailed images showing, for example, how the density of photoreceptors decreases with increasing distance from the fovea. “We agree that, given the images we’ve seen from the hardware [adaptive optics] systems, our computational approaches are equivalent to those,” says Boppart. “In addition, we think we could do better by correcting the finer aberrations and by being able to manipulate the data post-acquisition, which gives us a lot more flexibility.”

Pablo Artal of the University of Murcia in Spain describes the research as “impressive” and the images obtained as “beautiful”. He remains sceptical, however, both about the researchers’ estimates of what it would cost to integrate adaptive optics into a properly developed commercial system and about how well software can substitute for the hardware, especially in more challenging cases where noise is a bigger problem, although he agrees that this warrants further study. In any case, he says, there may be many cases in which image processing is “good enough, and in that case this can have a lot of value”. A more interesting application, he suggests, may lie in combining the two methods to obtain better image quality than either could achieve alone.

Boppart’s research is now heading in exactly this direction. “We’re going to integrate hardware adaptive optics with our computational system,” he says, “and really do that direct comparison to see if computation can completely replace hardware or if there’s some synergy in having both present.” The team also wants to use the system to image nerve fibres in the eye, because deterioration of the myelin sheath can be a key indicator of multiple sclerosis.

The research is published in Nature Photonics.