Listening to music produces neural signals in specific regions of the brain, and so does imagining a song in your head. Understanding how the brain encodes such “auditory imagery” could inform the development of brain-machine interfaces for patients who have lost the ability to speak.

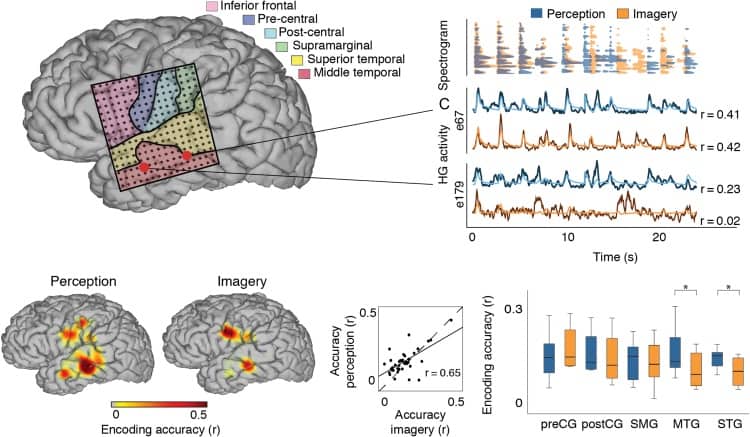

Researchers from EPFL and UC Berkeley, in cooperation with other institutions in Switzerland, Germany and the USA, have designed an experiment that, for the first time, quantified and compared neural activity during auditory perception (listening to a song) and auditory imagery (imagining it). The authors found that neural activity in the two conditions overlapped substantially in frequency and cortical location. Using models that decode neural activity recorded during auditory imagery, they then reconstructed the spectrogram of the imagined song, that is, a representation of how the energy at each sound frequency changes over time (Cerebral Cortex, doi:10.1093/cercor/bhx277).

Novel experimental approach

Auditory imagery is hard to study because mental representations are subjective, which makes it difficult to align measured neural activity with the imagined sound. In this study, the authors implemented a novel approach that let them measure neural activity with high spatial and temporal resolution while synchronizing it with either auditory perception or auditory imagery.

The participant was a patient with epilepsy who had undergone electrocorticography, an invasive procedure used to locate the source of seizures before surgical treatment. Electrode arrays had been implanted over the left hemisphere of his brain, covering regions of the auditory cortex, the temporal lobe and the sensorimotor cortex. During the experiment, the participant, who was also a professional pianist, was asked to play a song under two conditions.

In the first condition, the participant played a song with the sound of the piano turned on, whereas in the second, he played the same song with the piano sound turned off. In both cases, the researchers recorded the piano spectrogram and the neural activity of the participant. The neural activity recorded in the first condition corresponded to auditory perception, whereas in the second condition it corresponded to auditory imagery.

Shared neural representations

The authors built neural encoding models that predict neural activity from the spectrogram of the recorded song, and compared the activity measured during the perception and imagery conditions with the activity these models predicted. They found that the accuracy with which the models predicted neural activity during auditory perception was correlated with their accuracy during auditory imagery.
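To make the idea of an encoding model concrete, here is a minimal sketch of one common kind: a linear model that predicts a single electrode's activity from the current and recent frames of a spectrogram, scored by the correlation between measured and predicted activity. This is an illustration under stated assumptions, not the authors' method: the ridge regression, the number of time lags, the helper names (`lagged_features`, `fit_ridge`, `prediction_accuracy`) and the synthetic data are all hypothetical choices made for this example.

```python
import numpy as np

def lagged_features(spec, n_lags):
    """Stack time-lagged copies of a (time x frequency) spectrogram.

    Row t holds spec[t], spec[t-1], ..., spec[t-n_lags+1]; lags that reach
    before the start of the recording are zero-padded.
    """
    n_time, n_freq = spec.shape
    X = np.zeros((n_time, n_freq * n_lags))
    for lag in range(n_lags):
        X[lag:, lag * n_freq:(lag + 1) * n_freq] = spec[:n_time - lag]
    return X

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression weights: (X^T X + alpha I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def prediction_accuracy(y_true, y_pred):
    """Pearson correlation between measured and predicted activity."""
    return float(np.corrcoef(y_true, y_pred)[0, 1])

# Synthetic stand-ins (assumption): a random "spectrogram" with 8 frequency
# bands, and one "electrode" whose activity is a noisy linear function of it.
rng = np.random.default_rng(0)
spec = rng.standard_normal((2000, 8))
X = lagged_features(spec, n_lags=5)
true_w = rng.standard_normal(X.shape[1])
neural = X @ true_w + 0.5 * rng.standard_normal(2000)

# Fit on the first 1500 time bins, evaluate on the held-out remainder.
train, test = slice(0, 1500), slice(1500, 2000)
w = fit_ridge(X[train], neural[train], alpha=1.0)
r = prediction_accuracy(neural[test], X[test] @ w)
print(f"held-out prediction accuracy r = {r:.3f}")
```

In a study like this one, the same fitting procedure would be run separately on recordings from the perception and imagery conditions, giving one accuracy per electrode and condition; comparing those two sets of accuracies is the kind of comparison described above.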

They also observed that the two patterns of neural activity shared cortical locations and showed similar temporal and frequency tuning. The overlap was not complete, however: some cortical regions, such as the superior and middle temporal gyri, showed lower prediction accuracy in the imagery condition, as well as differences in temporal and frequency tuning.

Decoding neural activity

The authors also developed decoding models that work in the opposite direction, reconstructing an auditory spectrogram of the played song from the neural activity measured in each condition. Their results suggest that auditory imagery could be used to decode specific acoustic features of a song.
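Decoding runs the mapping in reverse: the spectrogram is estimated from neural activity. The sketch below shows one simple linear version under stated assumptions; it is not the authors' decoder. Because neural responses follow the sound, it reconstructs each spectrogram frame from neural activity at and shortly after that moment, then scores the reconstruction as the mean correlation across frequency bands. The ridge regression, the lag count, the helper names (`future_lagged`, `fit_ridge`, `reconstruction_accuracy`) and the simulated electrodes are all hypothetical.

```python
import numpy as np

def future_lagged(neural, n_lags):
    """Stack neural[t], neural[t+1], ..., neural[t+n_lags-1] for each t.

    Neural responses follow the sound, so the spectrogram at time t is decoded
    from activity at and after t. Lags past the end are zero-padded.
    """
    n_time, n_elec = neural.shape
    X = np.zeros((n_time, n_elec * n_lags))
    for lag in range(n_lags):
        X[:n_time - lag, lag * n_elec:(lag + 1) * n_elec] = neural[lag:]
    return X

def fit_ridge(X, Y, alpha):
    """Ridge weights for all frequency bands at once (Y is time x frequency)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def reconstruction_accuracy(spec_true, spec_pred):
    """Mean Pearson correlation between true and reconstructed frequency bands."""
    rs = [np.corrcoef(spec_true[:, f], spec_pred[:, f])[0, 1]
          for f in range(spec_true.shape[1])]
    return float(np.mean(rs))

# Synthetic stand-ins (assumption): 32 "electrodes" that respond linearly to
# the current and previous spectrogram frame, plus noise.
rng = np.random.default_rng(1)
n_time, n_freq, n_elec = 2000, 8, 32
spec = rng.standard_normal((n_time, n_freq))
m0 = rng.standard_normal((n_freq, n_elec))
m1 = rng.standard_normal((n_freq, n_elec))
prev = np.vstack([np.zeros((1, n_freq)), spec[:-1]])  # spectrogram delayed by one bin
neural = spec @ m0 + prev @ m1 + 0.3 * rng.standard_normal((n_time, n_elec))

# Train the decoder on the first 1500 bins, reconstruct the held-out rest.
X = future_lagged(neural, n_lags=3)
W = fit_ridge(X[:1500], spec[:1500], alpha=1.0)
recon = X[1500:] @ W
r = reconstruction_accuracy(spec[1500:], recon)
print(f"mean reconstruction accuracy r = {r:.3f}")
```

Fitting one weight matrix for all frequency bands at once is equivalent to fitting a separate ridge model per band; it simply solves them together in a single call.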

Future research building on these findings, together with less invasive technologies for measuring neural activity at high spatial and temporal resolution, could advance the development of brain-machine interfaces. Such interfaces might, for instance, decode speech directly from the mental representations of patients whose neurological impairments prevent them from speaking.