The use of artificial intelligence is already becoming commonplace in physics, but could physics also help AI? Tara Shears examines the relationship between the two fields following a survey and report by the Institute of Physics

To paraphrase Jane Austen, it is a truth universally acknowledged that a research project in possession of large datasets must be in want of artificial intelligence (AI).

The first time I really became aware of AI’s potential was in the early 2000s. I was one of many particle physicists working at the Collider Detector at Fermilab (CDF) – one of two experiments at the Tevatron, which was the world’s largest and highest-energy particle collider at the time. I spent my days laboriously sifting through data looking for signs of new particles and gossiping about all things particle physics.

CDF was a large international collaboration, involving around 60 institutions from 15 countries. One of the groups involved was at the University of Karlsruhe (now the Karlsruhe Institute of Technology) in Germany, and they were trying to identify the matter and antimatter versions of a beauty quark from the collider’s data. This was notoriously difficult – backgrounds were high, signals were small, and data volumes were massive. It was also the sort of dataset where for many variables, there was only a small difference between signal and background.

In the face of such data, Michael Feindt, a professor in the group, developed a neural-network algorithm to tackle the problem. This type of algorithm is modelled on the way the brain learns by combining information from many neurons, and it can be trained to recognize patterns in data. Feindt’s neural network, trained on suitable samples of signal and background, could separate the two more easily in each of the data’s variables and then combine those variables in the most effective way to identify matter and antimatter beauty quarks.

At the time, this work was interesting simply because it was a new way of trying to extract a small signal from a very large background. But the neural network turned out to be a key development that underpinned many of CDF’s physics results, including the landmark observation of a Bs meson (a particle formed of an antimatter beauty quark and a strange quark) oscillating between its matter and antimatter forms.

Versions of the algorithm have since been used elsewhere, including by physicists on three of the four main experiments at CERN’s Large Hadron Collider (LHC). In every case, the approach allowed researchers to extract more information from less data, and in doing so, accelerated the pace of scientific advancement.
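The principle at work here is that many variables, each of which separates signal from background only weakly, can be combined into a much stronger discriminant. A rough sketch of this uses the simplest possible network: a single sigmoid neuron (equivalent to logistic regression) trained by gradient descent on synthetic Gaussian data. It is a hypothetical toy, not Feindt’s algorithm. The 0.3σ separation per variable, the sample sizes and the learning settings are all assumptions chosen for illustration.

```python
import math
import random


def make_sample(n, shift, n_vars, rng):
    """Return n events, each a list of n_vars unit-width Gaussian variables centred on `shift`."""
    return [[rng.gauss(shift, 1.0) for _ in range(n_vars)] for _ in range(n)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(events, labels, epochs=100, lr=0.5):
    """Fit one sigmoid neuron (logistic regression) by full-batch gradient descent."""
    n_vars, n = len(events[0]), len(events)
    w, b = [0.0] * n_vars, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_vars, 0.0
        for x, y in zip(events, labels):
            err = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x))) - y
            for i in range(n_vars):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(events, labels, w, b):
    """Fraction of events classified correctly with a 0.5 cut on the neuron output."""
    correct = 0
    for x, y in zip(events, labels):
        p = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))
        correct += (p > 0.5) == (y == 1)
    return correct / len(events)


def first_variable_only(events):
    return [[x[0]] for x in events]


rng = random.Random(42)
N_VARS, SHIFT = 10, 0.3  # hypothetical: each variable shifts the signal by only 0.3 sigma
train_x = make_sample(500, SHIFT, N_VARS, rng) + make_sample(500, 0.0, N_VARS, rng)
train_y = [1] * 500 + [0] * 500
test_x = make_sample(2000, SHIFT, N_VARS, rng) + make_sample(2000, 0.0, N_VARS, rng)
test_y = [1] * 2000 + [0] * 2000

w_one, b_one = train_neuron(first_variable_only(train_x), train_y)
acc_one = accuracy(first_variable_only(test_x), test_y, w_one, b_one)
w_all, b_all = train_neuron(train_x, train_y)
acc_all = accuracy(test_x, test_y, w_all, b_all)
print(f"one variable: {acc_one:.2f}   all {N_VARS} combined: {acc_all:.2f}")
```

With these settings the single-variable classifier should sit only a little above the 50% of random guessing, while the ten-variable combination does noticeably better. The exact figures depend on the random seed.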

What was even more interesting is that the neural-network approach didn’t just benefit particle physics. There was a brief foray into applying the network to hedge-fund management and predicting car-insurance rates. A company, Phi-T (later renamed Blue Yonder), was spun out from the University of Karlsruhe and applied the algorithm to optimizing supply-chain logistics. After a few acquisitions, it is now a global, award-winning company. The neural network, however, remained free for particle physicists to use.

From lab to living room

Many types of neural networks and other AI approaches are now routinely used to acquire and analyse particle physics data. In fact, our datasets are so large that we absolutely need their computational help, and their deployment has moved from novelty to necessity.

To give you a sense of just how much information we are talking about, during the next run period of the LHC, its experiments are expected to produce about 2000 petabytes (2 × 10¹⁸ bytes) of real and simulated data per year that researchers will need to analyse. This dataset is almost 10 times larger than a year’s worth of videos uploaded to YouTube, 30 times larger than Google’s annual webpage datasets, and over a third as big as a year’s worth of Outlook e-mail traffic. These are dataset sizes very much in want of AI to analyse.

Particle physics may have been an early adopter, but AI has now spread throughout physics. This shouldn’t be too surprising. Physics is data-heavy and computationally intensive, so it benefits from the extra speed and computational power that AI brings to analysing datasets, simulating physical systems and automating the control of complicated experiments.

For example, AI has been used to classify gravitational-lensing images in astronomical surveys. It has also helped researchers interpret the distributions of matter inferred from such images in terms of different models of dark energy. Indeed, in 2024 it improved the precision of Dark Energy Survey results by an amount equivalent to quadrupling their data sample (see box “An AI universe”).
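Why can a gain in precision be quoted as an equivalent amount of extra data? Assume the measurement is limited by statistical rather than systematic uncertainty. Then the uncertainty σ on a parameter scales as the inverse square root of the number N of independent measurements, so halving σ is worth quadrupling the data:

```latex
\sigma \propto \frac{1}{\sqrt{N}}
\quad\Longrightarrow\quad
\frac{\sigma'}{\sigma} = \sqrt{\frac{N}{N'}} = \frac{1}{2}
\quad\Longleftrightarrow\quad
N' = 4N
```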

AI has even helped design new materials. In 2023 Google DeepMind discovered millions of new crystals that could power future technologies, a feat estimated to be equivalent to 800 years of research. And there are many other advances – AI is a formidable tool for accelerating scientific progress.

But AI is not limited to complex experiments. In fact, we all use it every day. AI powers our Internet searches, helps us understand concepts, and even leads us to misunderstand things by feeding us false facts. Nowadays, AI pervades every aspect of our lives and presents us with challenges and opportunities whenever it appears.

An AI universe

AI approaches have been used by the Dark Energy Survey (DES) collaboration to investigate dark energy, the mysterious phenomenon thought to drive the accelerating expansion of the universe.

DES researchers had previously mapped the distribution of matter in the universe by relating distortions in light from galaxies to the gravitational attraction of matter the light passes through before being measured. The distribution depends on visible and dark matter (which draws galaxies closer), and dark energy (which drives galaxies apart).

In a 2024 study researchers used AI techniques to simulate a series of matter distributions – each based on a different value for variables describing dark matter, dark energy and other cosmological parameters that describe the universe. They then compared these simulated findings with the real matter distribution. By determining which simulated distributions were consistent with the data, values for the corresponding dark energy parameters could be extracted. Because the AI techniques allowed more information to be used to make the comparison than would otherwise be possible, the results are more precise. Researchers were able to improve the precision by a factor of two, a feat equivalent to using four times as much data with previous methods.
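The strategy in this box, simulating universes for many parameter values and keeping those consistent with the observations, is known in its simplest form as rejection-sampling approximate Bayesian computation. The sketch below applies it to a hypothetical one-parameter toy. Each “universe” is just 100 noisy numbers centred on θ, and each dataset is summarised by its mean. The DES analysis used far richer simulations and AI-based techniques, which this does not attempt to reproduce.

```python
import random
import statistics


def simulate(theta, n_points, rng):
    """Hypothetical toy 'universe': n_points noisy measurements scattered around theta."""
    return [rng.gauss(theta, 1.0) for _ in range(n_points)]


def summary(data):
    """Compress a dataset to a single summary statistic (here simply its mean)."""
    return statistics.fmean(data)


def rejection_abc(observed, n_trials, tolerance, rng, prior=(0.0, 2.0), n_points=100):
    """Keep the parameter values whose simulated universes resemble the observed data."""
    target = summary(observed)
    accepted = []
    for _ in range(n_trials):
        theta = rng.uniform(*prior)            # draw a candidate parameter value
        sim = simulate(theta, n_points, rng)   # simulate a universe with that value
        if abs(summary(sim) - target) < tolerance:
            accepted.append(theta)             # consistent with the data: keep it
    return accepted


rng = random.Random(7)
observed = simulate(0.8, 100, rng)   # stand-in for the real data, with true theta = 0.8
posterior = rejection_abc(observed, n_trials=5000, tolerance=0.05, rng=rng)
print(f"theta = {statistics.fmean(posterior):.2f} +/- {statistics.stdev(posterior):.2f} "
      f"from {len(posterior)} accepted simulations")
```

Each accepted θ is a parameter value whose simulation resembles the data, and the spread of the accepted values plays the role of the measurement uncertainty. Summaries that capture more of the information in the data narrow that spread, which is the kind of gain the box describes.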

Physicists have their say

It’s this mix of challenge and opportunity that makes now the right time to examine the relationship between physics and AI, and what each can do for the other. In fact, the Institute of Physics (IOP) has recently published a “pathfinder” study on this very subject, on which I acted as an adviser. Pathfinder studies survey the landscape of a topic, identifying the directions that a subsequent, deeper and more detailed “impact” study should pursue.

This pathfinder study – Physics and AI: a Physics Community Perspective – is based on an IOP member survey that examined attitudes towards AI and its uses, and an expert workshop that discussed future potential for innovation. The resulting report, which came out in April 2025, revealed just how widespread the use of AI is in physics.

About two thirds of the 700 people who replied to the survey said they had used AI to some degree, and every physics area contained a good fraction of respondents who had at least some level of familiarity with it. Most often this experience involved different machine-learning approaches or generative AI, but respondents had also worked with AI ethics and policy, computer vision and natural language processing. This is a testament to the many uses we can find for AI, from very specific pattern recognition and image classification tasks, to understanding its wider implications and regulatory needs.

Proceed with caution

Although it is clear that AI can really accelerate our research, we have to be careful. As many respondents to the survey pointed out, AI is a powerful aid, but simply using it as a black box and assuming it does the right thing is dangerous. AI tools and the problems we apply them to are complex – we need to understand what they are doing and how well they are doing it before we can have confidence in their answers.

There are any number of cautionary tales about the consequences of using AI badly and obtaining a distorted outcome. A 2017 master’s thesis by Joy Adowaa Buolamwini from the Massachusetts Institute of Technology (MIT) famously analysed three commercially available facial-recognition technologies and uncovered gender and racial bias in the algorithms due to incomplete training sets. The programs had been trained on images predominantly of white men, which led to women of colour being misidentified nearly 35% of the time, while white men were correctly classified 99% of the time. Buolamwini’s findings prompted IBM and Microsoft to revise and correct their algorithms.

Even estimating the uncertainty associated with the use of machine learning is fraught with complication. Training data are never perfect. For instance, simulated data may not perfectly describe equipment response in an experiment, or – as with the example above – crucial processes occurring in real data may be missed if the training dataset is incomplete. And the performance of an algorithm is never perfect; there may be uncertainties associated with the way the algorithm was trained and its parameters chosen.
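Consider how a mismatch between simulation and reality turns into a biased result. Suppose a hypothetical selection keeps events within a fixed window around a signal peak. The signal efficiency is taken from a simulation that assumes a detector resolution of 1.0 (arbitrary units), while the real detector is assumed to be 30% worse. All numbers are invented for illustration.

```python
import math


def window_efficiency(half_width, resolution):
    """Fraction of a Gaussian signal peak (width = detector resolution) inside +/- half_width."""
    return math.erf(half_width / (resolution * math.sqrt(2.0)))


HALF_WIDTH = 1.5        # hypothetical selection window around the signal peak
SIM_RESOLUTION = 1.0    # resolution assumed by the simulation
REAL_RESOLUTION = 1.3   # hypothetical true resolution, worse than simulated

true_signal = 1000.0
eff_sim = window_efficiency(HALF_WIDTH, SIM_RESOLUTION)
eff_real = window_efficiency(HALF_WIDTH, REAL_RESOLUTION)

selected = true_signal * eff_real   # what the real detector actually keeps
estimated = selected / eff_sim      # yield corrected with the (wrong) simulated efficiency
bias = estimated / true_signal - 1.0
print(f"simulated efficiency {eff_sim:.3f}, real {eff_real:.3f}, bias {bias:+.1%}")
```

Nothing in the procedure itself flags the problem. The analysis runs smoothly and returns a precise-looking number that is systematically more than 10% too low.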

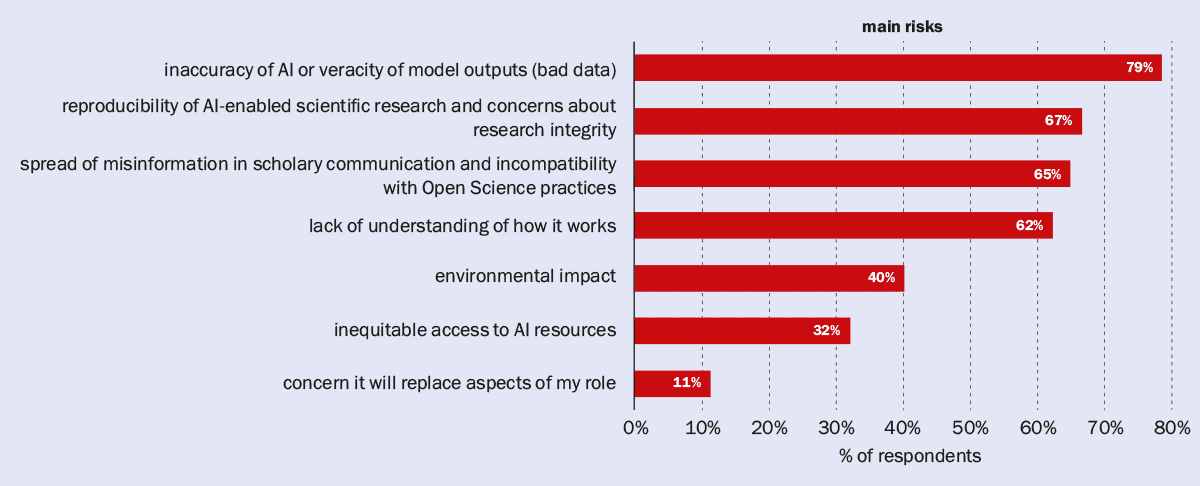

Indeed, 69% of respondents to the pathfinder survey felt that AI poses multiple risks to physics, and one of the main concerns was inaccuracy due to poor or bad training data (figure 1). It’s bad enough getting a physics result wrong and discovering a particle that isn’t really there, or missing a new particle that is. Imagine the risks if poorly understood AI approaches are applied to healthcare decisions when interpreting medical images, or in finance where investments are made on the back of AI-driven model suggestions. Yet despite the potential consequences, the AI approaches in these real-world cases are not always well calibrated and can have ill-defined uncertainties.

1 Uncertain about uncertainties

The Institute of Physics pathfinder survey asked its members, “Which are your potential greatest concerns regarding AI in physics research and innovation?” Respondents were allowed to select multiple answers, and the prevailing worry was about the inaccuracy of AI.

New approaches are being considered in physics that try to separate out the uncertainties associated with simulated training data from those related to the performance of the algorithm. However, even this is not straightforward. A 2022 paper by Aishik Ghosh and Benjamin Nachman from Lawrence Berkeley National Laboratory in the US (Eur. Phys. J. C 82 46) notes that devising a procedure to be insensitive to the uncertainties you think are present in training data is not the same as having a procedure that is insensitive to the uncertainties that are actually there. If the two differ, not only is the measurement uncertainty underestimated but, depending on how the training data differ from reality, false results can be obtained.

The moral is that AI can and does advance physics, but we need to invest the time to use it well so that our results are robust. And if we do that, others can benefit from our work too.

How physics can help AI

Physics is a field where accuracy is crucial, and we are as rigorous as we can be about understanding bias and uncertainty in our results. In fact, the pathfinder report highlights that our techniques for quantifying uncertainty can be used to advance and strengthen AI methods too. This is critical for future innovation and to improve trust in AI use.

Advances are already under way. One development, first introduced in 2017, is physics-informed neural networks. These are trained to be consistent with physical laws as well as with training data relevant to their particular application. Imposing physical laws can help compensate for limited training data and prevent unphysical solutions, which in turn improves accuracy. Although relatively new, it’s a rapidly developing field, finding applications in sectors as diverse as computational fluid dynamics, heat transfer, structural mechanics, option pricing and blood-pressure estimation.
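The core of a physics-informed fit is a loss function with two terms. One penalises disagreement with the measurements, and the other penalises violation of a known physical law at many “collocation” points where there is no data. The sketch below is a deliberately simplified, hypothetical example. The law is exponential decay, du/dt = −ku, with k assumed known, and only three early-time measurements are available. A polynomial replaces the neural network so that the combined loss can be minimised exactly by linear least squares, rather than by the gradient-based training a real physics-informed network would use.

```python
import math

K = 1.0                                      # decay constant in du/dt = -K * u (assumed known)
DEGREE = 6                                   # polynomial degree: a stand-in for a neural network
T_DATA = [0.0, 0.25, 0.5]                    # only three early-time measurements
Y_DATA = [math.exp(-K * t) for t in T_DATA]  # synthetic, noise-free "measurements"
T_PHYS = [0.1 * i for i in range(21)]        # collocation points on [0, 2] where the law is imposed
LAMBDA = 1.0                                 # weight of the physics term in the loss


def basis(t):
    """Monomials t^0 ... t^DEGREE, so that u(t) = sum_j c_j * t^j."""
    return [t ** j for j in range(DEGREE + 1)]


def physics_row(t):
    """Coefficients multiplying each c_j in the residual du/dt + K*u at time t."""
    return [(j * t ** (j - 1) if j > 0 else 0.0) + K * t ** j for j in range(DEGREE + 1)]


def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system a x = b."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def least_squares(rows, targets):
    """Minimise sum (row . c - target)^2 via the normal equations."""
    n = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(n)]
    return solve(ata, atb)


# Minimising  sum_data (u - y)^2 + LAMBDA * sum_phys (du/dt + K*u)^2  is a linear
# least-squares problem in the c_j; scaling the physics rows by sqrt(LAMBDA) does this.
scale = math.sqrt(LAMBDA)
rows = [basis(t) for t in T_DATA] + [[scale * v for v in physics_row(t)] for t in T_PHYS]
targets = Y_DATA + [0.0] * len(T_PHYS)
coeffs = least_squares(rows, targets)


def u(t):
    """Physics-informed fit evaluated at time t."""
    return sum(c * b for c, b in zip(coeffs, basis(t)))


# For comparison: a straight line fitted to the three measurements alone, with no physics.
slope, intercept = least_squares([[t, 1.0] for t in T_DATA], Y_DATA)
print(f"u(2): physics-informed {u(2.0):.3f}, data-only line {slope * 2.0 + intercept:.3f}, "
      f"exact {math.exp(-2.0 * K):.3f}")
```

The physics term lets the fit extrapolate sensibly to t = 2, far beyond the data. The straight line fitted to the same three points goes negative there, an unphysical answer for a decaying quantity.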

AI and the future of physics

Another development is in the use of Bayesian neural networks, which incorporate uncertainty estimates into their predictions to make results more robust and meaningful. The approach is being trialled in decision-critical fields such as medical diagnosis and stock market prediction.

But this is not new to physics. The neural network developed at CDF in the 2000s was an early Bayesian neural network, designed to be robust against outliers in data, to avoid training problems caused by statistical fluctuations, and to have a sound probabilistic basis for interpreting results. These are, in fact, exactly the features that make the approach invaluable for analysing many other systems outside physics.
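The simplest model that returns an uncertainty with every prediction is Bayesian linear regression with a single weight, sketched below with hypothetical data. A Bayesian neural network applies the same logic to thousands or millions of weights, where the posterior can no longer be written down in closed form and has to be approximated. The Gaussian prior on the slope (variance 10) and the known noise variance (0.01) are assumptions.

```python
import math

PRIOR_VAR = 10.0   # assumed Gaussian prior on the slope: w ~ N(0, PRIOR_VAR)
NOISE_VAR = 0.01   # assumed known measurement-noise variance


def posterior(xs, ys):
    """Closed-form Gaussian posterior (mean, variance) for w in the model y = w*x + noise."""
    precision = 1.0 / PRIOR_VAR + sum(x * x for x in xs) / NOISE_VAR
    var = 1.0 / precision
    mean = var * sum(x * y for x, y in zip(xs, ys)) / NOISE_VAR
    return mean, var


def predict(x, mean, var):
    """Predictive mean and standard deviation: slope uncertainty plus measurement noise."""
    return mean * x, math.sqrt(NOISE_VAR + x * x * var)


xs = [0.1, 0.2, 0.3, 0.4, 0.5]        # hypothetical training inputs
ys = [0.21, 0.38, 0.62, 0.79, 1.02]   # hypothetical noisy observations, roughly y = 2x
w_mean, w_var = posterior(xs, ys)
for x_new in (0.3, 3.0):              # one input inside the training range, one far outside
    mu, sd = predict(x_new, w_mean, w_var)
    print(f"x = {x_new}: prediction {mu:.2f} +/- {sd:.2f}")
```

The predictive uncertainty at x = 3, far outside the range of the training inputs, comes out several times larger than at x = 0.3, which sits inside it. In other words, the model signals when it is extrapolating.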

So physics benefits from AI and can drive advances in it too. This is a unique relationship that needs wider recognition, and this is a good moment to bring it to the fore. The UK government has said it sees AI as “the defining opportunity of our generation”, driving growth and innovation, and that it wants the UK to become a global AI superpower. Action plans and strategies are already being implemented. Physics has a distinctive perspective to offer in helping to make this happen. It’s time for us to include it in the conversation.

In the words of the pathfinder report, we need to articulate and showcase what AI can do for physics and what physics can do for AI. Let’s make this the start of putting physics on the AI map for everyone.

AI terms and conditions

Artificial intelligence (AI)

Intelligent behaviour exhibited by machines. But the definition of intelligence is controversial, so a more general description of AI that would satisfy most people is: the behaviour of a system that adapts its actions in response to its environment and prior experience.

Machine learning

As a group of approaches to endow a machine with artificial intelligence, machine learning is itself a broad category. In essence, it is the process by which a system learns from a training set so that it can autonomously deliver an appropriate response to new data.

Artificial neural networks

A subset of machine learning in which the learning mechanism is modelled on the behaviour of a biological brain. Input signals are modified as they pass through networked layers of neurons before emerging as an output. Experience is encoded by varying the strength of interactions between neurons in the network.
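The layered picture in this definition can be made concrete with a tiny hypothetical network: three inputs, two hidden neurons and one output. Each neuron forms a weighted sum of its inputs, adds a bias and passes the result through a tanh activation (one common choice among several). The weights are made-up numbers. Training would adjust them, which is the “varying the strength of interactions” referred to above.

```python
import math


def layer(inputs, weights, biases):
    """One layer: each neuron sums its weighted inputs, adds a bias and applies tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hypothetical weights: in a trained network these interaction strengths encode experience.
W_HIDDEN = [[0.5, -1.2, 0.8], [1.0, 0.3, -0.7]]  # 3 inputs -> 2 hidden neurons
B_HIDDEN = [0.1, -0.2]
W_OUT = [[1.5, -0.9]]                            # 2 hidden neurons -> 1 output
B_OUT = [0.05]


def network(inputs):
    """Pass an input signal through the hidden layer and then the output layer."""
    hidden = layer(inputs, W_HIDDEN, B_HIDDEN)
    return layer(hidden, W_OUT, B_OUT)[0]


print(network([0.2, -0.4, 1.0]))
```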

Training data

A set of real or simulated data used to train a machine-learning algorithm to recognize patterns in data indicative of signal or background.

Generative AI

A type of machine-learning algorithm that creates new content, such as images or text, based on the data the algorithm was trained on.

Computer vision

A branch of AI that analyses, interprets and extracts meaningful data from images to identify and classify objects and patterns.

Natural language processing

A branch of AI that analyses, interprets and generates human language.