Cars that drive themselves may one day improve road safety by reducing human error – and, with luck, accident deaths too. However, the hardware and software behind the technology open up a range of opportunities for hackers, as Stephen Ornes finds out.

One morning in March 2019, a brand new, cherry-red Tesla Model 3 sat in front of a Sheraton hotel in Vancouver, Canada. Once they were inside the car, Amat Cama and Richard Zhu, both tall and lean twentysomethings, needed only a few minutes. They exploited a weakness in the browser of the “infotainment” system to get inside one of the car’s computers. Then they used the system to run a few lines of their own code, and soon their commands were appearing on the screen.

They’d hacked the Tesla.

Cama and Zhu got the car, but they weren’t thieves. They’re a pair of legendary “white hats” – good-guy hackers who find, exploit and reveal vulnerabilities in devices that connect to the Internet, or other devices. A car packed with self-driving features could be the ultimate prize for hackers who can’t resist a challenge.

Hacking the Model 3 was the final test in the 2019 round of the prestigious Pwn2Own annual hacking event. The competition is lucrative: during the 2019 Pwn2Own, Cama and Zhu, who work together under the team name Fluoroacetate, won most of the hacking challenges they entered and left Vancouver with $375,000. And, of course, the car.

As per the tradition at Pwn2Own, the security flaw was reported to Tesla, and the car company soon issued a patch so that other hackers couldn’t replicate Fluoroacetate’s method. But that’s not to say that the car is invincible, or ever will be. Rigging a car to drive by itself – or use related technologies like self-parking or lane-change monitoring – needs an array of diverse hardware and software to be installed and co-ordinated. It also requires that a car can get online to access traffic data, connect with other cars to make strategic decisions, and download necessary safety patches.

Left unsecured, these “attack surfaces”, as they’re called by researchers who work on autonomous vehicles, offer a veritable cyber-buffet of ways to break into a smart car. “There are so many different systems all communicating with each other in a car,” says computer scientist Simon Parkinson, who directs the Centre for Cyber Security at the University of Huddersfield, UK. “To really understand all the ways that somebody could try to abuse a system is very complex. There will always be a risk.”

Physicists, mathematicians and computer scientists have fortunately built models that simulate some of the possible vulnerabilities, but these models are by no means exhaustive. “No matter how hard we try and how complex we make the security solutions on vehicles, it is impossible to make something perfectly secure and unhackable,” noted mathematician and security researcher Charlie Miller, now at Cruise Automation in San Francisco, California, in a 2019 essay for IEEE Design & Test.

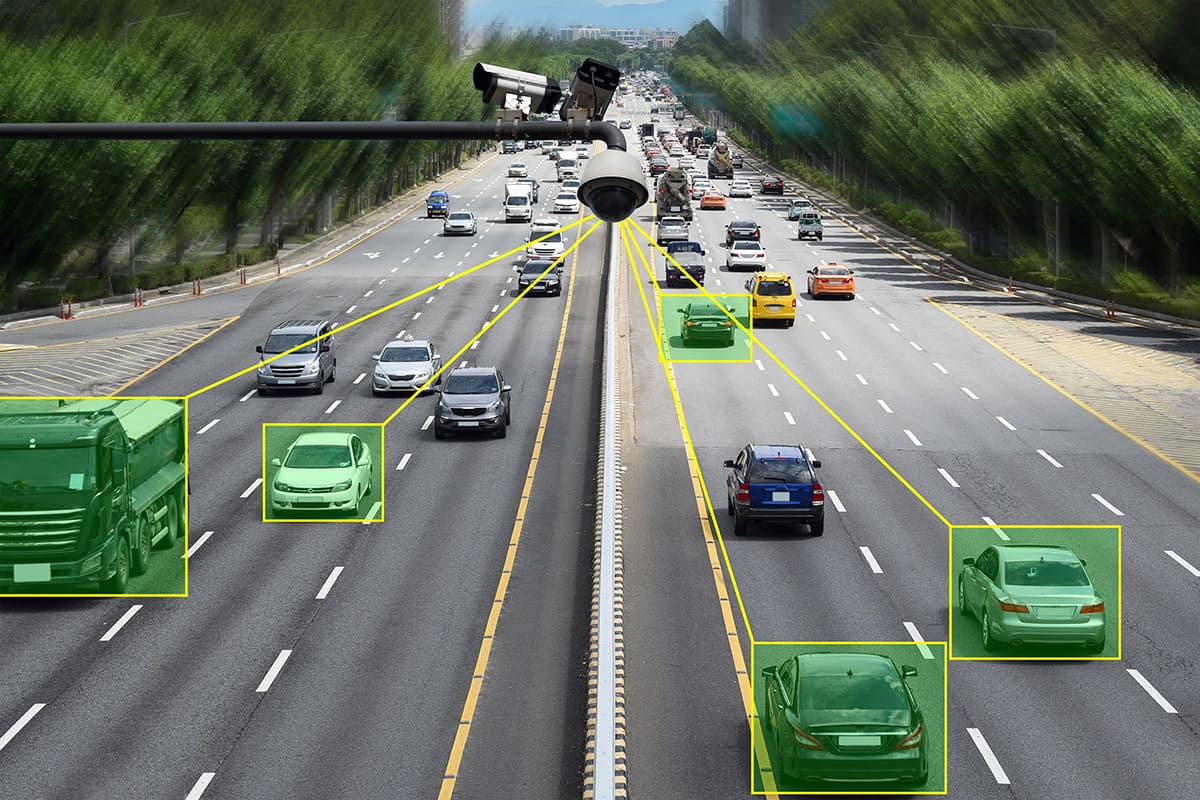

Self-driving car technologies, say the most vocal proponents, may improve safety and save lives. But the same technology exposes human passengers to new and untested varieties of cyber-threats and risks. Recent studies have shown, not surprisingly, that hacking increases the likelihood of collisions and life-threatening hazards. Skanda Vivek, a physicist from Georgia Gwinnett College in Atlanta, US, has even turned to statistical physics to predict possible outcomes from hacking scenarios, using his findings to develop and propose solutions too. He says any car built with devices that connect to the Internet is vulnerable to a hack, but the threat to autonomous cars is particularly high because computers control so many functions.

So far, car manufacturers have not produced fully autonomous vehicles that can legally and safely drive all the world’s highways. While that’s the dream, it is also the great unknown. The complexity of these cars means researchers don’t know all the possible risks or hacks, which makes it tricky to know how to optimize safety.

“Once you have access to a vehicle, you pretty much have access to any part,” says Vivek, who runs an autonomous vehicle consulting service called Chaos Control. “If you get to one control unit – like the entertainment system, for example – then with some reverse engineering you could control the steering wheel.”

Defining autonomous

The term “autonomous vehicle” is historically ill-defined, but in 2014 SAE International (formerly known as the Society of Automotive Engineers) introduced a classification system for technologies that enable a car to have some self-driving features.

- Level 0 systems have no control over the vehicle but may sound warnings or help in emergencies.

- Level 1 systems include adaptive cruise control and lane-change assistance. Under some circumstances, the car can control speed or the steering wheel, but not at the same time.

- Level 2 systems expand on level 1, allowing the car to take over steering and speed at the same time under some conditions. Self-parking technology falls in this category; so does Tesla’s Autopilot system.

- Level 3 systems can drive the vehicle, though a person is required to remain in the driver’s seat and take control when requested by the car. Audi had originally planned to debut the first level 3 system in 2020, but in April announced it was abandoning the effort.

- Level 4 systems are almost completely autonomous. They can drive on prescribed routes under certain conditions – not during inclement weather or at high speeds, for example. But a human driver needs to be available to take the wheel.

- A level 5 autonomous car can drive anywhere in the world without requiring human intervention.
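The six levels can be captured in a small lookup. What follows is a minimal Python sketch, not part of the SAE standard: the member names are shorthand chosen for this example, and the two helper functions (`human_must_be_available`, `controls_speed_and_steering_together`) are hypothetical and encode only the descriptions in the list above.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving-automation levels as summarized in the list above."""
    NO_AUTOMATION = 0           # warnings or emergency help only
    DRIVER_ASSISTANCE = 1       # speed OR steering, never both at once
    PARTIAL_AUTOMATION = 2      # speed AND steering under some conditions
    CONDITIONAL_AUTOMATION = 3  # car drives; person takes over on request
    HIGH_AUTOMATION = 4         # prescribed routes, certain conditions
    FULL_AUTOMATION = 5         # anywhere, no human intervention


def human_must_be_available(level: SAELevel) -> bool:
    """Per the list above, only level 5 needs no human at all."""
    return level < SAELevel.FULL_AUTOMATION


def controls_speed_and_steering_together(level: SAELevel) -> bool:
    """Levels 2 and up can handle speed and steering simultaneously."""
    return level >= SAELevel.PARTIAL_AUTOMATION
```

Using an ordered enum rather than free-text labels makes comparisons such as "level 2 or higher" explicit, which is how the levels are usually discussed.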

The arrival of car computers

For most of their first century on the market, cars were mechanical, physical objects that relied on the most basic ideas of physics to turn fuel into motion. A combustion engine delivered power; the driver used a throttle to control speed; four wheels and a steering wheel turned; disc brakes, some controlled with levers, slowed down the wheels.

But that changed in the 1970s as computer code began to be introduced to car systems. Vehicles started to include electronic control units, or ECUs, to run increasingly complicated electronic systems. Since then, the number of ECUs has soared, with cars sold today including anywhere from 70 to 150 such devices. They monitor the crankshafts and camshafts; they deploy airbags; they receive and relay signals from flat tyres and emptying gas tanks. Importantly – especially to hackers – they talk to each other via the Controller Area Network, or CAN bus, which essentially functions like a nervous system for the car.
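Why the shared bus matters to hackers can be shown with a toy model. A classic CAN frame carries an identifier that names the message and up to eight bytes of data, but nothing that identifies or authenticates the sender, and every node on the bus sees every frame. The Python sketch below is an in-memory illustration only: `Frame`, `ToyBus`, the `dashboard` listener and the identifier `0x3A0` are all invented for this example and do not correspond to any real car or library.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Frame:
    """Toy stand-in for a classic CAN data frame."""
    can_id: int   # 11-bit identifier naming the message, not the sender
    data: bytes   # payload, at most 8 bytes in classic CAN

    def __post_init__(self) -> None:
        if not 0 <= self.can_id < 2 ** 11:
            raise ValueError("standard CAN identifiers are 11 bits")
        if len(self.data) > 8:
            raise ValueError("classic CAN payloads hold at most 8 bytes")


class ToyBus:
    """Broadcast bus: every attached node receives every frame."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[Frame], None]] = []

    def attach(self, listener: Callable[[Frame], None]) -> None:
        self._listeners.append(listener)

    def send(self, frame: Frame) -> None:
        # Nothing here checks who is sending; that is the weakness.
        for listener in self._listeners:
            listener(frame)


# Hypothetical identifier for a fuel-level message (invented for the sketch).
FUEL_LEVEL_ID = 0x3A0
dashboard_readings: List[int] = []


def dashboard(frame: Frame) -> None:
    """The gauge trusts any frame that carries the fuel-level identifier."""
    if frame.can_id == FUEL_LEVEL_ID:
        dashboard_readings.append(frame.data[0])


bus = ToyBus()
bus.attach(dashboard)
bus.send(Frame(FUEL_LEVEL_ID, bytes([80])))  # genuine sensor: 80% full
bus.send(Frame(FUEL_LEVEL_ID, bytes([0])))   # injected frame: "empty"
```

After both calls the dashboard has recorded 80 and then 0, and it has no way to tell which reading came from the real sensor.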

These sophisticated computer systems have made it possible to advance on another unmet dream that’s been around almost as long as cars have been on the roads – the driverless car. In the 1920s engineers first navigated a remote-controlled car through New York City traffic. The Futurama exhibit – brainchild of the American industrial designer Norman Bel Geddes – at the 1939 World’s Fair suggested a future in which people travelled between cities by giant superhighways in autonomous vehicles that could navigate using electromagnetic fields embedded in roads. In the 1940 book Magic Motorways, Bel Geddes also wrote that the cars should drive themselves.

By the early 21st century, self-driving cars seemed less a far-fetched dream and more the inevitable evolution of the automobile. Some developers had begun to regard cars not only as modes of transportation, but as platforms for building new tech applications. And this shift in thinking led some researchers to explore what new vulnerabilities these changes brought with them.

Hacking hall of fame

In 2009 researchers from the University of California San Diego and the University of Washington in the US bought two new cars, laden with complex electronics, and took them to an abandoned airstrip to test the limits of manipulation. (They didn’t identify the makes and models of the cars.) They attached a laptop to a port in the dashboard and, using specially developed software called CARSHARK, they began to send their own messages through the car’s CAN bus to see what they could change.

A lot, it turned out. In results presented in May 2010 at the IEEE Symposium on Security and Privacy, they reported finding a way to manipulate the display and volume of the radio. They falsified the fuel gauge, switched the windscreen wipers on and off, played an array of chimes and chirps through the speakers, locked and unlocked the doors, popped the boot open, honked the horn, sprayed windscreen fluid at random intervals, locked the brakes, and flicked the lights on and off. They also wrote a short computer program, using only 200 lines of code, that initiated a “self-destruct” sequence, which began with a displayed countdown from 60 to 0 and culminated with killing the engine and locking the doors.

In August 2011, after critics and carmakers downplayed the threat because the hack was executed from inside the car, the same team showed that they could similarly hijack the CAN bus remotely, through either the Bluetooth or cellular connection. Miller described that demonstration as ground-breaking: it showed for the first time that, in theory, any car could be hacked from anywhere.

But it still didn’t convince automakers to change anything, Vivek says. In 2012, using a grant from DARPA (the research and development arm of the US Department of Defense), Miller and his colleague Chris Valasek, also now at Cruise Automation, demonstrated how to hack a 2010 Ford Escape and a 2010 Toyota Prius. The hack, like the first one in 2010, required physical access to the car. Toyota responded with a press release noting that it was only concerned about wireless hacks.

“We believe our systems are robust and secure,” the Japanese car giant stated.

In 2014, again using a DARPA grant, Miller and Valasek upped the game. They analysed computer information on a range of cars, looking for one with ample attack surfaces and a fairly simple network structure to allow for widespread mayhem. They settled on a 2014 Jeep Cherokee.

In what’s become one of the most famous car hacks, in 2015 they showed how, from the comfort of home, they could take control of the car while it was driving on a highway. Then, to add terror to nightmare, they scanned other nearby cars and found that 2695 vehicles on the road, at the same time, had the same vulnerability. Hacking them all, simultaneously, would not have been difficult.

“In many ways, this was the worst scenario you could imagine,” Miller wrote in 2019. “From my living room, we could compromise one of any of 1.4 million vehicles located anywhere in the United States.”

Safety in the rearview mirror

In the aftermath of the 2015 hack, which was well-publicized, Jeep issued a software patch for its Cherokee. That same year, other groups of white-hat hackers found ways to take control of GM vehicles and disable the brakes of a Corvette. In March 2016 the Federal Bureau of Investigation issued a warning – the first – about the cybersecurity risk to cars.

Back at Huddersfield, Parkinson says he’s seen advocacy groups, government agencies and even some carmakers start to address hacking as a high-profile risk. But the supply chain for making a car is long and complicated, and manufacturers often enlist other companies to build technological features. Cars from Tesla, Audi, Hyundai, Mercedes and others rely on software developed by third parties, which may enlist dozens – if not hundreds – of coders to contribute to the final project. As with other connected devices, the rush to get a gadget to market can derail efforts to make it safe.

“Functionality takes priority over security because that’s what sells,” says Parkinson. And even if carmakers do address problems as they arise, he says they’re doing so in a reactive manner rather than anticipating problems – and solving them – before the car hits the pavement.

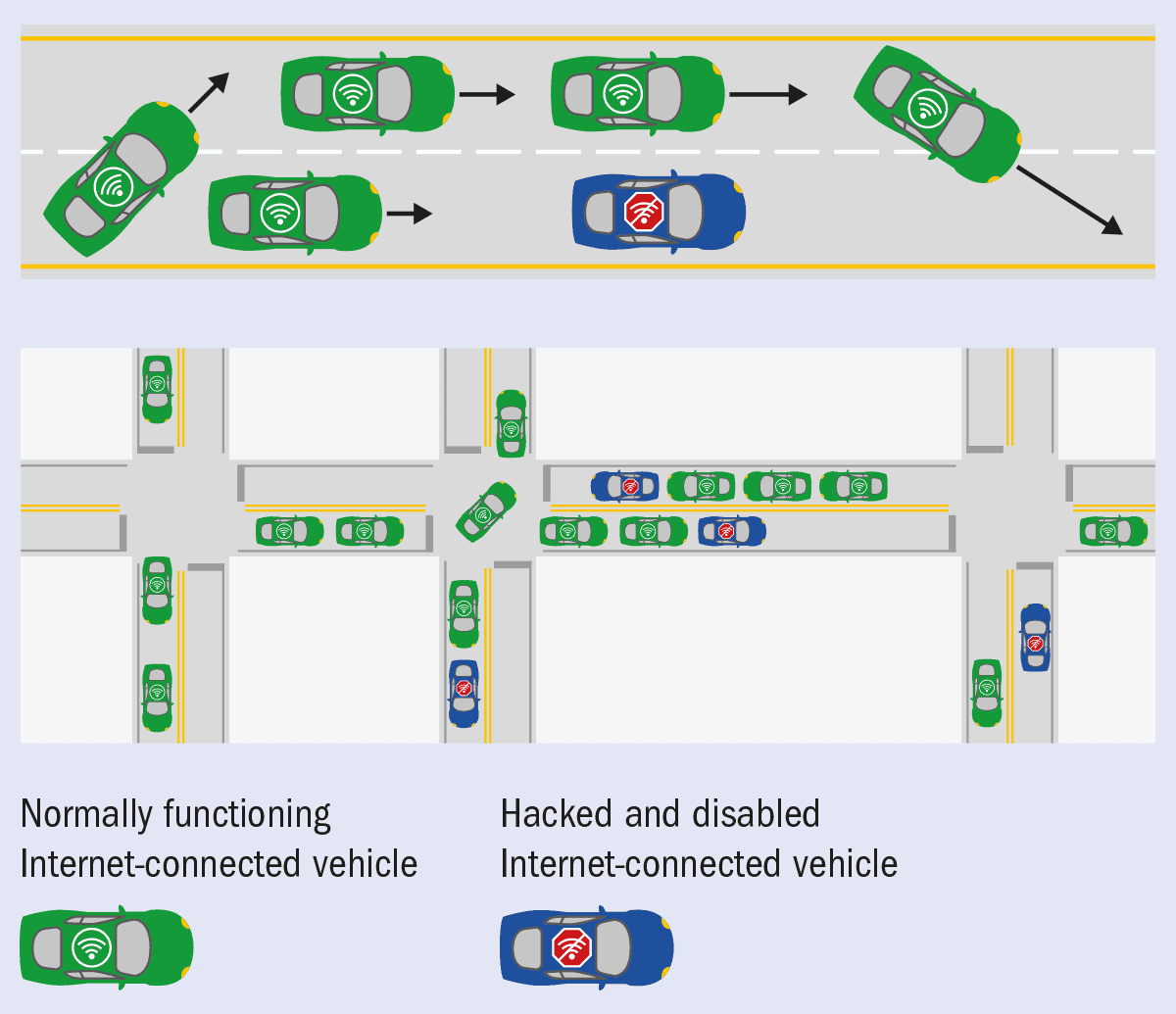

Vivek, in Georgia, says that notable hacks in the past have exposed risks to individual cars, but researchers are still trying to grasp how a multi-car hack might unfold in a real-world situation. He and colleagues from the Georgia Institute of Technology, where Vivek was a postdoc, set out to model a worst-case scenario in which hackers disabled many Internet-connected vehicles at once.

There’s a rich tradition of physicists taking a hard look at traffic. For at least two decades, researchers have been studying traffic flow as a many-body system in which the constituent particles interact strongly with each other. (This is easy to see during rush hour, as the slowing or accelerating behaviour of one car affects those behind it.) They’ve modelled the conditions under which traffic jams form, shown how jams are similar to shock waves described by nonlinear wave equations, and predicted interventions (like keeping ample space between cars) that could boost fuel efficiency. One traffic model, called the Intelligent Driver Model (IDM), simulates drivers who obey equations of motion.
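For readers who want to see what “obeying equations of motion” means here, the IDM in its commonly published form gives each driver an acceleration that depends on their own speed, the gap to the car ahead and how fast that gap is closing. The Python sketch below implements that standard formula; the default parameter values are typical illustrative choices, not values taken from the work described in this article.

```python
import math


def idm_acceleration(v, gap, dv, v0=30.0, T=1.5, a_max=1.0, b=2.0,
                     s0=2.0, delta=4.0):
    """Acceleration (m/s^2) of one car under the Intelligent Driver Model.

    v     : the car's own speed (m/s)
    gap   : bumper-to-bumper distance to the car ahead (m), must be > 0
    dv    : approach rate, own speed minus the leader's speed (m/s)
    v0    : desired speed on an empty road (m/s)
    T     : desired time headway to the car ahead (s)
    a_max : maximum acceleration (m/s^2)
    b     : comfortable braking deceleration (m/s^2)
    s0    : minimum gap kept even at a standstill (m)
    delta : exponent shaping how acceleration fades near v0
    """
    # Desired gap: grows with speed and with how fast the car is closing in.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term pulls the car towards v0; interaction term brakes it.
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)
```

On an empty road a stationary car accelerates at close to `a_max` and settles at `v0`; a car closing quickly on a stopped vehicle gets a strongly negative value, which is the braking that propagates backwards through a queue.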

In their work, Vivek and his colleagues used IDM and other models to simulate traffic as an active-matter system including two kinds of “particles” – some driven by humans, and some driven autonomously. They also gave the car-particles the ability to switch lanes, and the motion of individual cars was governed by equations known to accurately represent the real-world conditions of traffic. For lane changing, they integrated a framework that allowed a car to switch lanes if that resulted in the car getting closer to its programmed speed.
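The lane-changing idea can be illustrated with a simple decision rule. The article does not name the framework the researchers used, so the Python sketch below is a simplified stand-in rather than their method: the function `should_change_lane`, its arguments and the `min_gap` safety margin are all assumptions made for illustration.

```python
import math


def should_change_lane(desired_speed, speed_ahead_current, speed_ahead_target,
                       gap_ahead_target, gap_behind_target, min_gap=10.0):
    """Return True if moving over brings the car closer to its desired speed.

    Speeds are in m/s and gaps in m. Pass math.inf as a leader's speed
    (or as a gap) when the lane is empty in that direction.
    """
    # Safety first: the slot in the target lane needs room front and back.
    if gap_ahead_target < min_gap or gap_behind_target < min_gap:
        return False
    # A car can sustain neither more than its leader's speed nor more
    # than its own desired speed.
    reachable_current = min(desired_speed, speed_ahead_current)
    reachable_target = min(desired_speed, speed_ahead_target)
    # Change only if the shortfall from the desired speed shrinks.
    shortfall_current = desired_speed - reachable_current
    shortfall_target = desired_speed - reachable_target
    return shortfall_target < shortfall_current
```

For example, a car that wants to travel at 30 m/s behind a leader doing 20 m/s would move into a lane whose leader is doing 28 m/s, provided both gaps there exceed `min_gap`; it would stay put if the new lane offered no improvement.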

Before they introduced hacking into the experiment, they validated the set-up by running simulations on a three-lane road using varying speeds and densities of cars, finding that the results matched observed patterns that emerge on real-world roads. Then, they ran simulations to see what behaviours would emerge as different fractions of cars, chosen at random, simply stopped moving as a result of a widespread hack.

In their initial analysis, the researchers tried to determine what would happen if connected cars were all disabled at the same time. In classical models of flow, at least two phenomena lead to congestion. One is clogging, where a small number of particles might stop moving but interactions with other particles produce a gradual slow-down. (In traffic, this can be seen when a car breaks down near the side of the road.) It’s a phenomenon of movement. The other is percolation, a geometrical phenomenon in which some large block simply prohibits motion all at once.

Vivek and his colleagues found that if only 10–20% of cars stop moving during rush hour, half of Manhattan would come to a standstill. The disruption was more like percolation – sudden and geometric – than like clogging. Vivek says the sudden blockage would not only inconvenience drivers; emergency services like fire engines, ambulances and police cars wouldn’t get through either. Most of the scenarios he’s studied, in fact, ultimately lead to a situation where hacked cars stop moving and become major traffic obstacles (figure 1).
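The sudden, geometric character of percolation can be seen in a toy model. The Python sketch below is not the researchers’ Manhattan simulation: it is textbook site percolation on a square grid, where each cell is blocked at random and a breadth-first search checks whether open cells still connect one edge to the other. The function names, grid size and trial count are assumptions chosen for illustration.

```python
import random
from collections import deque


def make_grid(n, blocked_fraction, rng):
    """n x n grid of booleans: True marks an open cell, False a blocked one."""
    return [[rng.random() >= blocked_fraction for _ in range(n)]
            for _ in range(n)]


def crosses(grid):
    """True if open cells link the left edge to the right edge (4 neighbours)."""
    n = len(grid)
    queue = deque((r, 0) for r in range(n) if grid[r][0])
    seen = set(queue)
    while queue:
        r, c = queue.popleft()
        if c == n - 1:
            return True
        for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= rr < n and 0 <= cc < n
            if inside and grid[rr][cc] and (rr, cc) not in seen:
                seen.add((rr, cc))
                queue.append((rr, cc))
    return False


def crossing_probability(n, blocked_fraction, trials=200, seed=0):
    """Fraction of random grids that can still be crossed."""
    rng = random.Random(seed)
    hits = sum(crosses(make_grid(n, blocked_fraction, rng))
               for _ in range(trials))
    return hits / trials
```

Sweeping `blocked_fraction` from 0 to 1 with `crossing_probability` shows the hallmark of percolation: the chance of crossing stays high, then collapses over a narrow range rather than declining steadily. The fraction at which this happens in the toy grid is a property of the lattice and should not be read as a prediction for a real city.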

His team has continued to run more complicated simulations, like tracking how the effects – even of smaller numbers of hacked cars – change over time. “What we’re finding now is a little more complicated,” he says. “We already knew that just a few vehicles can cause a traffic jam. But now we see that a much lower percentage can cause a significant effect. Even with just 5% of cars hacked, a five-by-five grid could be gridlocked within 15 minutes.”

But he’s also expanded his work to find efficient interventions that could reduce the risk or quickly remedy a hacked-car scenario. For example, Vivek and his collaborators have found that if connected cars in an area don’t all connect to the same network, and instead connect to smaller, more localized networks, then the work of a would-be hacker would increase dramatically.
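The arithmetic behind the small-networks idea is easy to sketch. The Python example below rests on assumptions that are not from the research itself: cars are split evenly across networks, the networks are isolated from one another, and each network must be breached separately. The function names are hypothetical.

```python
import math


def cars_exposed_per_breach(total_cars, networks):
    """Worst-case number of cars reachable from one breached network."""
    return math.ceil(total_cars / networks)


def breaches_needed(networks, target_percent):
    """Networks an attacker must breach to reach target_percent of all cars.

    Uses integer ceiling division to avoid floating-point rounding.
    """
    return -(-target_percent * networks // 100)
```

With 100,000 connected cars on a single network, one breach exposes all of them. Split across 50 networks, one breach exposes 2000 cars, and an attacker would need to breach 10 separate networks to disable 20% of the fleet.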

Spotting a hack

A hack can take many forms, and Vivek says experts likely haven’t found them all. A car with an infected computer might share malware or a virus with another connected car via Bluetooth, wireless or a cell phone connection. Or it might hack the manufacturer’s computer, since connected cars (self-driving or not) regularly download software updates and patches. A hacker might use a centrally located hotspot – which cars use to connect to the Internet – to breach many cars at once. And as with personal devices, malicious software can be unknowingly invited to a car by someone who downloads an unsecured app from the car’s browser.

Parkinson says there’s another risk just waiting to be exploited. For the most part, the people who buy and drive cars with self-driving technology simply don’t understand how they work, which means they won’t recognize the warning signs if something’s gone wrong. “We need to help users understand what normal behaviour looks like,” he says. Over the last few decades computer and mobile-phone users have learned to recognize situations when their devices or data may be compromised – the same thing needs to happen for drivers, Parkinson explains, because in the wrong hands a car becomes a weapon. “The driver may not have time to react to a hack,” he says. And because they open up new surfaces of attack, technologies designed to improve the experience can actually make it more dangerous. He compares the advent of these cars to the introduction of autopilot features in aeroplanes.

“We actually gave pilots more things to be responsible for,” he continues. “They really have to understand the system and be able to take control.” But that’s a high hurdle to expect from every buyer and user of an autonomous car. “Computers understand the code and translate inputs to outputs, but computer code is not optimized for humans to read.”

Meanwhile, benevolent hacks of autonomous cars continue to pile up. Keen Security Lab, which is owned by the Chinese technology giant Tencent, remotely took over a Tesla Model S in 2017 and a Tesla Model X in 2018. In March 2020 the company announced it had also uploaded malicious code into the computer of a Lexus NX300. The 2020 Pwn2Own competition was supposed to include an automotive hack event, but it was scrapped when the conference went online because of the COVID-19 pandemic.

As cars approach autonomy, says Vivek, risks will multiply. Some experts predict that nearly 750,000 autonomous-ready cars will hit the roads in the year 2023, which means they’ll be vulnerable to attack. That represents, by some estimates, more than two-thirds of cars on the road, riddled with attack surfaces both known and not. “If it can be hacked,” says Vivek, “it will be hacked.”

Hack the hack

If you have an autonomous car, here are six ways to lower the hacking risk.

- Change your password. The simplest fix might be the most powerful. In April 2019 a hacker called L&M reported a successful hack of GPS tracking apps in tens of thousands of cars and was consequently able to turn the cars off. The hacker’s secret? They used the default password – in this case “123456” – which the users never changed.

- Deploy lots of small networks. Physicist Skanda Vivek from Georgia Gwinnett College in the US has shown that if a hacker can break into the network used by connected cars in a city, they can cause gridlock. The risk could be mitigated if cities installed many small networks instead of one big one, so that one hack wouldn’t wreak so much havoc.

- Update your software. Drivers can lower their own risk of hacking by using the latest software, which should include patches for all known vulnerabilities.

- Make security a priority. App developers have long followed the directive to “Release it now, fix it later,” but that needs to stop, says Simon Parkinson at the University of Huddersfield. Car companies should insist that whoever is designing the app focus on security – and on making the apps hack-proof – before they’re ever installed in a car.

- Turn off GPS. GPS spoofing is a kind of attack where someone uses a radio signal to interfere with a GPS location system. Until military-grade protections come on the market, the only way to protect against this hack is to use GPS sparingly. Results of a GPS spoofing experiment, released last year, showed that a hacker brought an autonomous vehicle to a halt by convincing the on-board GPS that the car had arrived at its destination.

- Get to know your self-driving car. People with ordinary cars can tell when something’s gone wrong, but autonomous vehicles are different. They run on algorithms and software, and malfunctions may be more difficult to see. Self-driving car users should get to know their cars – and find ways to flag problems before they become dangerous.