Bukweon Kim and colleagues from Yonsei University have developed a machine learning-based method for automated analysis of foetal ultrasound images. They report excellent levels of accuracy in determining foetal biometric parameters in this way (Physiol. Meas. 39 105007).

Currently, measurements such as foetal head circumference and abdominal circumference (AC) are made manually from ultrasound images by skilled clinicians. These parameters are useful benchmarks for gauging gestational age, but the process can be time-consuming and laborious. To get around those problems, the research team has developed a machine learning method that takes into account clinicians’ decisions in order to automate the estimation process.

By contrast, previous efforts to automate foetal biometric estimates have relied on image intensity. This often leads to good segmentation of well-contrasted anatomical structures but can fail when measuring low-contrast features.

Substituting clinicians with a machine-learning algorithm

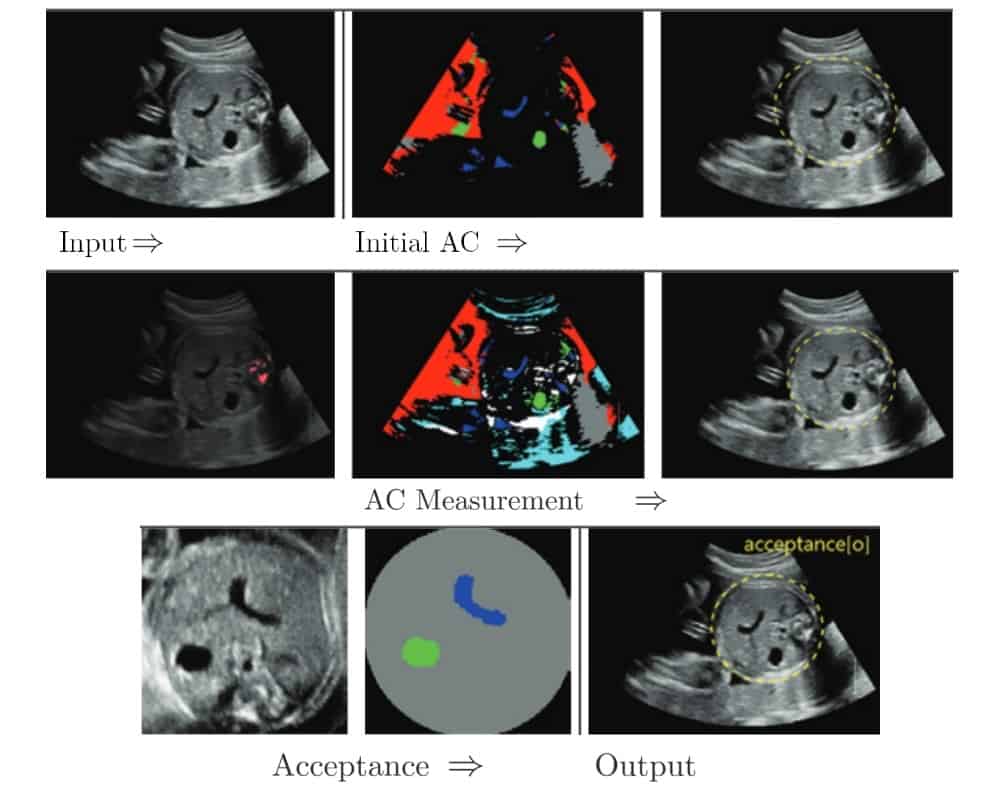

The researchers used a three-step approach. In the first step, they obtained an initial estimate of the AC. They used a convolutional neural network (CNN) to identify the stomach bubble, amniotic fluid and umbilical vein in the ultrasound image and, from these three features, derived an estimate of the AC.

The novelty of Kim’s approach lies in the second step, where the images together with the AC estimates were fed into a second CNN. This CNN then used these data to estimate the position of bony structures such as the foetus’s ribs. This information was then used to refine the initial AC estimate.

In the final step, the researchers passed the refined AC estimate along with the ultrasound images to a specific class of CNN known as a U-net. The U-net decided whether each ultrasound image, together with its AC estimate, should be accepted or rejected, in a manner that mimics the decision of the clinician. In this way, the machine learns what to look for.
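The control flow of the three steps can be sketched in a few lines of Python. This is only an illustrative outline, not the researchers' code: the names `ACResult` and `evaluate_ac`, the millimetre unit and the three callables are hypothetical, and the trained networks are replaced here by trivial stand-in functions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ACResult:
    """Outcome for one ultrasound image (hypothetical container)."""
    circumference_mm: Optional[float]  # None when the image is rejected
    accepted: bool


def evaluate_ac(
    image: Any,
    initial_estimator: Callable[[Any], float],
    refiner: Callable[[Any, float], float],
    acceptance_checker: Callable[[Any, float], bool],
) -> ACResult:
    # Step 1: initial AC estimate from soft-tissue landmarks
    # (stomach bubble, amniotic fluid, umbilical vein).
    initial = initial_estimator(image)
    # Step 2: refine the estimate using the image plus the initial estimate
    # (in the article, via the estimated position of bony structures).
    refined = refiner(image, initial)
    # Step 3: accept or reject the image together with its AC estimate.
    accepted = acceptance_checker(image, refined)
    return ACResult(refined if accepted else None, accepted)


# Stand-in functions in place of the trained networks (assumptions only).
result_ok = evaluate_ac(None, lambda img: 100.0,
                        lambda img, ac: ac + 5.0,
                        lambda img, ac: True)
result_rejected = evaluate_ac(None, lambda img: 100.0,
                              lambda img, ac: ac + 5.0,
                              lambda img, ac: False)
```

Keeping the acceptance check as a separate final step means a rejected image yields no measurement at all, rather than a measurement taken from the wrong anatomical plane.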

Kim and colleagues used 112 images to train each CNN and the U-net, and 62 images to evaluate abdominal circumference. They obtained accurate segmentation of the ultrasound images, including images rejected by the machine because they showed the wrong anatomical plane, in 87.10% of the verification cases.
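As a quick sanity check on the reported figure, the percentage can be converted back into a number of images. The count below is inferred from the two numbers quoted above and is not stated in the article itself.

```python
n_eval = 62          # images used for evaluation (from the article)
reported_pct = 87.10  # reported success rate in percent (from the article)

# Nearest whole number of images consistent with the reported percentage.
implied_successes = round(reported_pct / 100 * n_eval)

# Recompute the percentage from that whole number, to two decimal places.
recomputed = f"{implied_successes / n_eval:.2%}"

print(implied_successes, recomputed)  # prints: 54 87.10%
```

On this reading, the reported rate corresponds to 54 of the 62 evaluation images.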

More details for better diagnostics

Commercial systems are available to estimate the abdominal cavity volume from ultrasound images. However, these methods often fall short of clinical requirements due to their inability to utilize structural information within the ultrasound image, such as shadowing artefacts caused by the ribs. By using a combination of several CNNs and a U-net, Kim and colleagues have shown that machines have the capacity to learn how to provide this structural information, which can in turn assist clinicians by automatically segmenting images with a good degree of accuracy.