Artificial intelligence has the potential to improve many essential tasks in medicine and biomedicine – from handling the massive amount of data generated by medical imaging, to understanding the evolution of cancer in the body, to helping design and optimize patient treatments. At last week’s APS March Meeting, a dedicated focus session examined some of the latest medical applications of artificial intelligence and machine learning.

In-depth image analysis

Opening the session, Alison Deatsch from the University of Wisconsin, Madison, discussed the use of deep learning for diagnosing and monitoring brain disease. “Brain disorders and neurodegenerative disease are some of the most costly diseases, both in terms of human suffering and economic costs,” she explained.

The reason is that most of these conditions – which include Alzheimer’s and Parkinson’s disease, autism spectrum disorder and mild cognitive impairment (MCI), among others – lack reliable tools for diagnosis and progression monitoring and, as such, are often misdiagnosed. And for monitoring the neurological effects of cancer or chemotherapy, there are no standardized diagnostic tools at all, Deatsch noted.

Neuroimaging, using modalities such as MRI, functional MRI, PET and SPECT, could fill this gap. “However, when it comes to analysing these images, they are rarely brought to their full potential in the clinic,” said Deatsch. “This is due in part to the time it takes to manually curate or quantify data and some inherent uncertainty.”

To address this obstacle, the field is moving from visual-based image analysis towards a more quantitative approach, exploiting computational techniques to maximize information output from neurological images. This includes deep learning methods such as convolutional neural networks (CNNs), which are the most prevalent in medical imaging, as well as recurrent neural networks (RNNs), which handle time-series data. This shift could both advance our understanding of neurodegenerative disease and enhance clinical decision making.

“Deep learning has seen a significant increase in its use for neuroimaging in the last five years,” Deatsch said, presenting some recent clinical examples. CNNs, for instance, have been used with MRI data to identify Alzheimer’s disease, predict the progression of MCI to Alzheimer’s and assess Huntington’s disease severity, with reported accuracies of between 70 and 90%. Deep learning has also been employed to analyse PET and SPECT data for Alzheimer’s or Parkinson’s disease diagnosis, with similarly high performance. Deatsch also highlighted some multimodality studies using CNNs to analyse combined MRI and PET data, with accuracies of more than 80%.

But despite the success achieved to date, challenges remain, which Deatsch and colleagues hoped to address in a recent project. They developed a novel deep learning model that can distinguish brain scans of patients with Alzheimer’s disease from normal controls, and investigated how various factors affected the model’s performance.

The team trained a CNN, with or without a cascaded RNN, to analyse ¹⁸F-FDG PET and T1-weighted MRI scans. The CNN learns spatial features and outputs a prediction of normal or Alzheimer’s disease. For patients with two or more scans, the RNN then learns temporal features and also outputs a classification for each patient. After being trained on several hundred PET and MRI scans, the model achieved a maximum area under the ROC curve (AUC) of 0.93 and an accuracy of 81%.
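To make the cascade concrete, here is a minimal sketch in plain Python of how a CNN stage and an RNN stage can be chained to give one call per patient. It is not the team’s model: the single 3×3 convolution layer, global average pooling, Elman-style RNN, 8-unit hidden state, the helper names (`classify_patient`, `init_params` and so on) and the random, untrained weights are all illustrative assumptions, and the “scans” are toy 2D arrays standing in for PET or MRI volumes.

```python
import math
import random

def conv2d_relu(image, kernel):
    """'Valid' 2D cross-correlation (what DL libraries call convolution) + ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(len(image) - kh + 1):
        row = []
        for j in range(len(image[0]) - kw + 1):
            s = sum(image[i + a][j + b] * kernel[a][b]
                    for a in range(kh) for b in range(kw))
            row.append(max(0.0, s))
        out.append(row)
    return out

def cnn_features(image, kernels):
    """Spatial stage: one feature per kernel (conv + ReLU + global average pool)."""
    feats = []
    for kernel in kernels:
        fmap = conv2d_relu(image, kernel)
        feats.append(sum(map(sum, fmap)) / (len(fmap) * len(fmap[0])))
    return feats

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def rnn_classify(seq, p):
    """Temporal stage: Elman RNN over per-scan features in time order;
    the final hidden state is mapped to a probability of Alzheimer's disease."""
    h = [0.0] * len(p["W_h"])
    for x in seq:
        h = [math.tanh(dot(p["W_in"][i], x) + dot(p["W_h"][i], h))
             for i in range(len(h))]
    return sigmoid(dot(p["w_rnn"], h) + p["b_rnn"])

def classify_patient(scans, p):
    """Return P(AD) for one patient; scans must be ordered by acquisition date."""
    feats = [cnn_features(s, p["kernels"]) for s in scans]
    if len(feats) == 1:
        # Single scan: a logistic head on the CNN features makes the call.
        return sigmoid(dot(p["w_cnn"], feats[0]) + p["b_cnn"])
    # Two or more scans: cascade the per-scan CNN features into the RNN.
    return rnn_classify(feats, p)

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def init_params(n_kernels=4, n_hidden=8, seed=0):
    """Random (untrained) weights; real use would learn these from labelled scans."""
    rng = random.Random(seed)
    return {
        "kernels": [rand_matrix(3, 3, rng) for _ in range(n_kernels)],
        "w_cnn": [rng.uniform(-0.5, 0.5) for _ in range(n_kernels)],
        "b_cnn": 0.0,
        "W_in": rand_matrix(n_hidden, n_kernels, rng),
        "W_h": rand_matrix(n_hidden, n_hidden, rng),
        "w_rnn": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b_rnn": 0.0,
    }

if __name__ == "__main__":
    params = init_params()
    rng = random.Random(42)
    # Hypothetical patient: three 8x8 "scans" standing in for PET or MRI data.
    scans = [rand_matrix(8, 8, rng) for _ in range(3)]
    print(f"P(AD) = {classify_patient(scans, params):.3f}")
```

Patients with a single scan are classified by the CNN head alone, mirroring the description above in which the RNN only comes into play for patients with two or more scans. In practice all of these weights would be learned from labelled data, for example with backpropagation (through time, for the RNN).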

Next, the researchers examined whether the imaging modality influences the model’s performance. They saw significantly better performance using PET data in both model types (with and without the RNN), possibly due to the larger variation between MR images. They also assessed whether adding longitudinal data has an impact and found that incorporating these data significantly improved performance for PET scans, but not for MRI.

To validate the CNN’s generalizability, they tested it on an external data set, where it performed equally well on the new unseen data. Finally, they checked the interpretability of their model by generating attention heatmaps showing the brain regions responsible for the model’s decision. They note that such maps provide a step towards identifying a quantitative imaging biomarker for Alzheimer’s disease.
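The article does not say how the team’s attention heatmaps were computed. As a hedged illustration of the general idea of mapping a decision back onto brain regions, the sketch below uses occlusion sensitivity, a simple model-agnostic technique swapped in here and not necessarily the authors’ method: mask one patch of the image at a time and record how far the model’s score falls. The function `occlusion_map`, the patch size and the toy `score_fn` are hypothetical.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Heatmap of how much the model's score drops when each patch is masked.

    image: 2D list of floats; score_fn: any callable mapping an image to a
    score (e.g. a predicted probability of disease). Larger heatmap values
    mark regions the prediction depends on more strongly.
    """
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for i0 in range(0, h, patch):
        for j0 in range(0, w, patch):
            # Copy the image and blank out one patch.
            masked = [row[:] for row in image]
            for i in range(i0, min(i0 + patch, h)):
                for j in range(j0, min(j0 + patch, w)):
                    masked[i][j] = fill
            drop = base - score_fn(masked)
            # Paint the score drop back onto the masked pixels.
            for i in range(i0, min(i0 + patch, h)):
                for j in range(j0, min(j0 + patch, w)):
                    heat[i][j] = drop
    return heat

if __name__ == "__main__":
    # Toy "model" that only looks at the top-left 2x2 region of a 4x4 image.
    toy_score = lambda img: sum(img[i][j] for i in range(2) for j in range(2))
    image = [[1.0] * 4 for _ in range(4)]
    for row in occlusion_map(image, toy_score):
        print(row)
```

Because the toy model ignores everything outside the top-left corner, only that patch lights up; with a real classifier the same loop would highlight the brain regions whose masking most changes the prediction.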

“There is a tonne of promise for deep learning with neuroimaging for neurological diseases,” Deatsch concluded. “There are still a few limitations to address, but I hope I’ve shown the significant potential contained within this field and that many future studies will continue.”

Radiotherapy safety check

Artificial intelligence can play many roles in medical imaging – not just for diagnostics, but also in tasks such as image registration and segmentation, to help in radiotherapy treatment planning, for example. This naturally leads to the incorporation of machine learning-based methods in other applications, such as ensuring the safety of radiation therapy.

Qiongge Li from Johns Hopkins University School of Medicine presented such an application: an innovative anomaly detection algorithm designed to increase patient safety. The idea is to use the new tool to ensure appropriate radiation dose schemes are delivered to every patient. “It is important to address the detection of prescription errors in radiotherapy, even if this is a rare event,” she explained.

Quality assurance checks of radiotherapy plans are usually performed via a peer-review chart round in which physicians reach a consensus on each patient’s dosage. This is a manual and time-consuming process, however, and doesn’t catch every error. Li described one study in which simulated plan anomalies were inserted into the weekly peer-review chart round and only 67% of the prescription errors were detected.

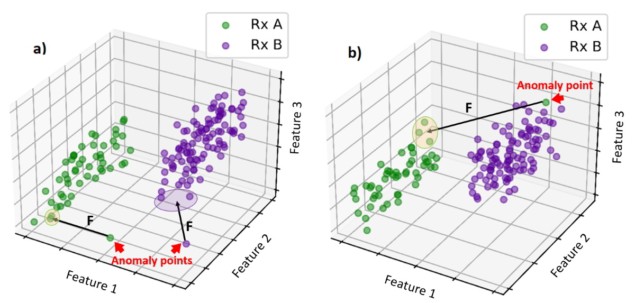

Li and colleagues have developed an anomaly detection tool that uses historical data to identify atypical radiotherapy prescriptions. At the heart of the tool is a distance model that compares patient data to historical databases and determines two dissimilarity metrics: the distance between the new patient’s prescription features (number of fractions and dose per fraction) and historical prescriptions; and the distance between other features (patient age, radiotherapy technique and energy, and clinical intent) and those of historical patients with similar prescriptions.

The model automatically flags any detected anomalies, such as a prescription that is very different to any seen before, or a mismatch between the prescription and other features. Thresholds for flagging were defined using mean feature distances between all patient pairs in the historical database.
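The two-metric distance model described above can be sketched as follows. Everything beyond the article’s description is an assumption: features are pre-scaled numeric vectors (here a hypothetical encoding of fractions/10 and dose per fraction in Gy/10, then age/100 plus SBRT and palliative flags), distances are Euclidean, metric 1 is the distance to the nearest historical prescription, “similar prescriptions” means historical prescriptions within a hypothetical `similar_radius`, and metric 2 is the mean feature distance to those patients. Following the article, each flagging threshold is the mean distance over all pairs of historical patients.

```python
import math
from itertools import combinations

def euclid(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def mean_pairwise(points):
    """Mean distance over all pairs of points (needs at least two points)."""
    pairs = list(combinations(points, 2))
    return sum(euclid(a, b) for a, b in pairs) / len(pairs)

class PrescriptionAnomalyDetector:
    """Toy two-metric distance model (hypothetical encoding and neighbourhood rule)."""

    def __init__(self, history, similar_radius=0.25):
        # history: list of (rx, other) pairs, each a tuple of floats.
        self.history = history
        self.similar_radius = similar_radius
        # Thresholds: mean distance between all historical patient pairs.
        self.rx_threshold = mean_pairwise([rx for rx, _ in history])
        self.other_threshold = mean_pairwise([o for _, o in history])

    def score(self, rx, other):
        # Metric 1: distance to the nearest historical prescription.
        d_rx = min(euclid(rx, h_rx) for h_rx, _ in self.history)
        # Metric 2: mean distance of the other features to patients whose
        # prescriptions are similar; None if there are no such patients.
        peers = [h_o for h_rx, h_o in self.history
                 if euclid(rx, h_rx) <= self.similar_radius]
        d_other = sum(euclid(other, o) for o in peers) / len(peers) if peers else None
        return d_rx, d_other

    def flag(self, rx, other):
        """Return a list of reasons the plan looks anomalous (empty if none)."""
        d_rx, d_other = self.score(rx, other)
        reasons = []
        if d_rx > self.rx_threshold:
            reasons.append("unusual prescription")
        if d_other is None:
            reasons.append("no historical patients with a similar prescription")
        elif d_other > self.other_threshold:
            reasons.append("prescription/feature mismatch")
        return reasons

if __name__ == "__main__":
    # Hypothetical history: rx = (fractions/10, Gy per fraction/10),
    # other = (age/100, SBRT flag, palliative flag).
    history = [
        ((3.0, 0.2), (0.65, 0, 0)),  # 30 x 2 Gy, curative, age 65
        ((3.0, 0.2), (0.70, 0, 0)),
        ((0.5, 1.0), (0.75, 1, 0)),  # 5 x 10 Gy SBRT, age 75
        ((0.5, 1.0), (0.80, 1, 0)),
        ((1.0, 0.3), (0.85, 0, 1)),  # 10 x 3 Gy palliative, age 85
    ]
    det = PrescriptionAnomalyDetector(history)
    print(det.flag((3.0, 0.2), (0.68, 0, 0)))  # typical plan
    print(det.flag((3.0, 0.2), (0.10, 0, 1)))  # age 10, palliative intent
    print(det.flag((0.1, 3.0), (0.70, 0, 0)))  # 1 x 30 Gy
```

With a production-sized history (the study used more than 11,000 plans) the all-pairs threshold calculation grows quadratically with the number of plans, so it would sensibly be computed once, offline, rather than for every new patient.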

The researchers trained their machine learning algorithm using historical data from 11,062 thoracic cancer treatment plans. To validate the tool, they tested it on a set of unseen normal plans and plans created with simulated anomalies. These included, for instance, changing the number of fractions and dose per fraction to a non-standard combination, or changing the patient’s age from 90 to 10 and the treatment intent from curative to palliative – creating a mismatch between prescription and features.

The model demonstrated F1 scores (the harmonic mean of precision and recall) of 0.941, 0.727 and 0.875 for 3D conformal radiotherapy, intensity-modulated radiotherapy and stereotactic body radiotherapy plans, respectively. Three expert thoracic consultants also classified each case; the model outperformed all three doctors in terms of recall, precision, F1 and accuracy.
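For readers unfamiliar with these metrics: precision is the fraction of flagged plans that really are anomalous, recall is the fraction of anomalous plans that get flagged, and F1 is the harmonic mean of the two. A minimal helper, treating “anomalous plan” as the positive class; the counts in the usage lines are hypothetical and are not the study’s data.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, F1 and accuracy from confusion-matrix counts,
    with 'anomalous plan' as the positive class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}

if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    m = classification_metrics(tp=8, fp=2, fn=2, tn=88)
    print({k: round(v, 3) for k, v in m.items()})
```

Because F1 ignores true negatives, it is a more telling score than accuracy when, as with prescription errors, anomalies are rare and a model that never flags anything could still look highly accurate.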


Another benefit of the machine learning model is that it only takes around 1 s to run, compared with between 15 and 30 min required by the doctors. Li pointed out that training the model takes several days, but it only needs to be performed once. She also noted that a consensus between the three doctors performed slightly better than the model.

“Developing a fully automatic and data-driven tool for assisting peer-review chart rounds and providing that extra safety to our patients is in great need,” said Li.