Surgeons can orient themselves inside the human body using sight and touch, but human senses cannot pick out things like small clusters of cancer cells. Researchers at the University of Illinois at Urbana-Champaign tackled this challenge by developing a new image sensor that supplements a surgeon’s sight – and it’s based on how mantis shrimp see the world.

A multi-layered sensor the size of a postage stamp

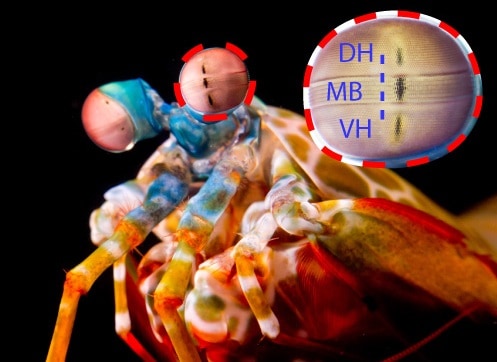

Mantis shrimp have the most complex visual systems ever studied (they even hold a world record). Their compound eyes have three layers of photoreceptor cells, and each layer responds to a slightly different wavelength of light. All in all, mantis shrimp have up to 16 different types of photoreceptors.

Humans, by contrast, have only three types of colour photoreceptor (red, green and blue), all of which respond to visible light. To mimic human vision, conventional image sensors typically divide a single layer of photosensitive material into sections, each covered by a filter that passes a different wavelength band. Because each section records only one band, both the range of colours a surgeon can see through the camera and the resolution of the resulting images are limited by how many sections the sensor has to share its pixels among.

Steven Blair, a graduate student in the lab of Viktor Gruev and lead author of a study published in Science Translational Medicine, and his colleagues have developed an image sensor the size of a postage stamp that, like the mantis shrimp’s eye, has not one but three layers of photosensitive material.

When the sensor is combined with two light-filtering materials, a camera built around it can display up to six colours of visible and near-infrared light at high resolution. This could enable surgeons to use a single camera to pick out structures in the human body that might otherwise go unseen.
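The six-colour figure follows from three stacked layers multiplied by two filter types. The sketch below is a hypothetical model, not the authors’ design: it assumes the two filters alternate in a checkerboard, one passing visible and the other near-infrared light, and that each stacked layer yields one band per pixel. Under those assumptions every channel is measured at half of the pixel sites, whereas a single-layer sensor divided into six sections would measure each band at only one sixth of them.

```python
# Hypothetical model of a three-layer stacked sensor with two pixelated filters.
# Assumptions (not from the article): a checkerboard filter layout, one filter
# passing visible light and one passing near-infrared (NIR) light, and one
# reading per stacked layer at every pixel.

LAYERS = 3
FILTERS = ("visible", "nir")


def filter_at(row, col):
    """Return which hypothetical filter covers the pixel at (row, col)."""
    return FILTERS[(row + col) % 2]


def split_channels(raw, height, width):
    """Split raw[row][col] = (layer0, layer1, layer2) into six sparse channel
    images keyed by (filter, layer); pixels where a channel is not measured
    are left as None."""
    channels = {
        (f, layer): [[None] * width for _ in range(height)]
        for f in FILTERS
        for layer in range(LAYERS)
    }
    for r in range(height):
        for c in range(width):
            f = filter_at(r, c)
            for layer, value in enumerate(raw[r][c]):
                channels[(f, layer)][r][c] = value
    return channels


def sampling_fraction(channel):
    """Fraction of pixel sites at which a channel is actually measured."""
    total = sum(len(row) for row in channel)
    measured = sum(v is not None for row in channel for v in row)
    return measured / total


if __name__ == "__main__":
    h, w = 4, 4
    raw = [[(10, 20, 30) for _ in range(w)] for _ in range(h)]
    chans = split_channels(raw, h, w)
    print(len(chans))                            # 6 channels (3 layers x 2 filters)
    print(sampling_fraction(chans[("nir", 0)]))  # 0.5 of sites per channel
    print(round(1 / 6, 3))                       # single-layer, six-section mosaic
```

In a real camera the missing samples in each channel would be interpolated (demosaicked) before display; that step is omitted here.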

Filters and dyes

Surgeons who want to see the otherwise unseen can inject fluorescent dyes into a patient. The dyes bind to targets such as hidden tumour cells and emit visible or near-infrared light, marking structures that may contain tumour cells. Conventional image sensors collect this light and produce a real-time video feed, showing images in either the visible or the near-infrared, that surgeons can refer to while operating.

But what if surgeons need to see visible and near-infrared light at the same time, as when structures lie both near the surface and deep within the body? The researchers solved this problem by depositing two light-filtering materials on the top layer of their sensor, allowing them to capture colour and near-infrared images simultaneously.

“You may want to distinguish multiple tissues in the operating room,” says Blair. “Our sensor can visualize multiple fluorescent dyes and can thus provide a surgeon with a map of all of these tissues.”

Bio-inspired camera in the OR

To test how their sensor performed, the researchers built a camera by attaching a lens, electronics and housing to the sensor. They connected the camera to an external display so they could watch the real-time video feed of overlaid colour and near-infrared images. The researchers demonstrated that their camera could visualize two hallmarks of cancer, abnormal cell growth and abnormal glucose uptake, when two fluorescent dyes that target these hallmarks were injected into mice with prostate tumours. They could also detect the tumours more accurately using both hallmarks (dyes) together than using either one alone.
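Overlaying a near-infrared signal on a colour image is commonly done by pseudocolouring: pixels with fluorescence are tinted a display colour that the eye can see. The sketch below is a generic illustration, not the researchers’ display pipeline; the green tint, the detection threshold and linear alpha blending are all assumptions chosen for clarity.

```python
# Minimal pseudocolour overlay: blend a near-infrared (NIR) fluorescence image
# onto an RGB frame. The tint colour, threshold and linear blending are
# illustrative assumptions, not the method used in the study.

def overlay_nir(rgb, nir, threshold=0.2, colour=(0, 255, 0)):
    """rgb: rows of (r, g, b) ints in 0..255; nir: rows of floats in 0..1.

    Pixels whose NIR signal exceeds `threshold` are blended toward `colour`
    with a weight equal to the (clamped) NIR intensity; other pixels keep
    their original colour."""
    out = []
    for rgb_row, nir_row in zip(rgb, nir):
        new_row = []
        for (r, g, b), s in zip(rgb_row, nir_row):
            if s > threshold:
                a = min(max(s, 0.0), 1.0)  # clamp blend weight to [0, 1]
                new_row.append(tuple(
                    round((1 - a) * base + a * tint)
                    for base, tint in zip((r, g, b), colour)
                ))
            else:
                new_row.append((r, g, b))
        out.append(new_row)
    return out


if __name__ == "__main__":
    frame = [[(200, 100, 100), (200, 100, 100)]]
    fluorescence = [[0.0, 1.0]]
    print(overlay_nir(frame, fluorescence))  # [[(200, 100, 100), (0, 255, 0)]]
```

A threshold keeps faint background signal from tinting the whole frame; with two dyes, each could be assigned its own tint in the same way.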

Next, they brought their camera into the operating room. They found that the camera could pick up weak near-infrared light emissions under strong surgical lighting, which might help surgeons identify potentially cancerous lymph nodes near human breast tumours.

Because the camera is compact (approximately the size of a digital SLR), it can be integrated into an operating room. Pending regulatory approval of targeted dyes, the camera could be used to identify tumour boundaries as well as tumours, which could improve patient outcomes and shorten recovery times after surgery.

“Nature has developed an incredible diversity of different visual systems that are suited for all sorts of environments,” says Blair. “We looked at the inspiration that nature provided us and the tools that were available to us as engineers, and we developed a sensor that sort of found the middle ground between nature and engineering.”