The world’s leading metrologists are keen to redefine the seven SI base units in what is billed as the system’s biggest overhaul since the French Revolution. Robert P Crease reports from a recent meeting that discussed the proposed changes

The event at the Royal Society in London in January began precisely on time. But after the final delegates had taken their seats, Stephen Cox, the society’s executive director, noted sheepishly that the wall clock was running “a little slow” and promised to reset it. Cox knew the audience cared about precision and would appreciate his vigilance. As the world’s leading metrologists, they had gathered to discuss a sweeping reform of the scientific basis of the International System of Units (SI), in the most comprehensive revision yet of the international measurement structure that underpins global science, technology and commerce.

If approved, these changes would involve redefining the seven SI base units in terms of fundamental physical constants or atomic properties. The most significant of these changes would be to the kilogram, a unit that is currently defined by the mass of a platinum–iridium cylinder at the International Bureau of Weights and Measures (BIPM) in Paris, and the only SI unit still defined by an artefact. Metrologists want to make these changes for several reasons, including worries about the stability of the kilogram artefact, the need for greater precision in the mass standard, the availability of new technologies that seem able to provide greater long-term precision, and the desire for stability and elegance in the structure of the SI.

Over the two days of the meeting, participants expressed varied opinions about the force and urgency of these reasons. One of the chief enthusiasts and instigators of the proposed changes is former BIPM director Terry Quinn, who also organized the meeting. “This is indeed an ambitious project,” he said in his opening remarks. “If it is achieved, it will be the biggest change in metrology since the French Revolution.”

The metric system and the SI

The French Revolution did indeed bring about the single greatest change in metrology in history. Instead of merely reforming France’s unwieldy inherited weights and measures, which were vulnerable to error and abuse, the Revolutionaries imposed a rational and organized system. Devised by the Académie des Sciences, it was intended “for all times, for all peoples” by tying the length and mass standards to natural standards: the metre to one-forty-millionth of the Paris meridian and the kilogram to the mass of a cubic decimetre of water. But maintaining the link to natural standards proved impractical, and almost immediately the length and mass units of the metric system were enshrined instead using artefacts deposited in the National Archives in 1799. The big change now being championed is to achieve at last what was the aim in the 18th century – to base our standards on constants of nature.

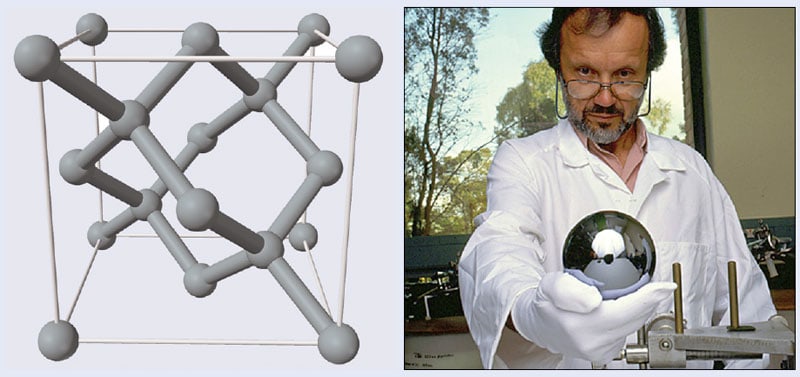

Despite its simplicity and rationality, the metric system took decades to implement in France. Other nations eventually began to adopt it for a mixture of motives: fostering national unity; repudiating colonialism; enhancing competitiveness; and as a precondition for entering the world community. In 1875 the Treaty of the Metre – one of the first international treaties and a landmark in globalization – removed supervision of the metric system from French hands and assigned it to the BIPM. The treaty also initiated the construction of new length and mass standards – the International Prototype of the Metre and the International Prototype of the Kilogram – to replace the metre and kilogram made by the Revolutionaries (figure 1). These were manufactured in 1879 and officially adopted in 1889 – but they were calibrated against the old metre and kilogram of the National Archives.

At first, the BIPM’s duties primarily involved caring for the prototypes and calibrating the standards of member states. But in the first half of the 20th century, it broadened its scope to cover other kinds of measurement issues as well, including electricity, light and radiation, and expanded the metric system to incorporate the second and ampere in the so-called MKSA system. Meanwhile, advancing interferometer technology allowed length to be measured with a precision rivalling that of the metre prototype.

In 1960 these developments culminated in two far-reaching changes made at the 11th General Conference on Weights and Measures (CGPM), the four-yearly meeting of member states that ultimately governs the BIPM. The first was to redefine the metre in terms of the light from an optical transition of krypton-86. (In 1983 the metre would be redefined again, in terms of the speed of light.) No longer would nations have to go to the BIPM to calibrate their length standards; any country could realize the metre, provided it had the technology. The International Prototype of the Metre was relegated to a historical curiosity; it remains in a vault at the BIPM today.

The second revision at the 1960 CGPM meeting was to replace the expanded metric system with a still broader framework for the entire field of metrology. The framework consisted of six base units – the metre, kilogram, second, ampere, degree Kelvin (later the kelvin) and candela (a seventh, the mole, was added in 1971) – plus a set of “derived units”, such as the newton, hertz, joule and watt, built from these six. It was baptized the International System of Units, or SI from its French name, Système International d’Unités. But it was still based on the same artefact for the kilogram.

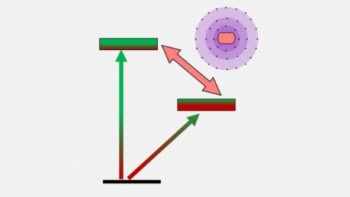

This 1960 reform was the first step towards the current overhaul, which seeks to realize a centuries-old dream of scientists – hatched long before the French Revolution – to tie all units to natural standards. More steps followed. With the advent of the atomic clock and the ability to measure atomic processes with precision, in 1967 the second was redefined in terms of the frequency of the transition between the two hyperfine levels of the ground state of caesium-133. The strategy was for scientists first to measure a fundamental property with precision, and then to redefine the unit in which that property was measured by fixing the property’s numerical value. The property then ceased to be measurable within the SI, and instead defined the unit.
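As a minimal sketch of that strategy, here are the two constants already fixed in this way: the caesium-133 hyperfine frequency, 9 192 631 770 Hz (since 1967), and the speed of light, 299 792 458 m/s (since 1983, when the metre was redefined). The function names are hypothetical and chosen only for illustration. Once the numbers are fixed by definition, counting caesium periods yields a duration and timing light yields a length.

```python
# Defining constants whose numerical values are fixed by definition.
DELTA_NU_CS = 9_192_631_770  # caesium-133 hyperfine frequency, Hz (exact since 1967)
C = 299_792_458              # speed of light in vacuum, m/s (exact since 1983)


def seconds_from_cs_cycles(n_cycles: float) -> float:
    """Duration, in seconds, of n_cycles periods of the caesium radiation."""
    return n_cycles / DELTA_NU_CS


def metres_from_light_time(t_seconds: float) -> float:
    """Distance, in metres, travelled by light in vacuum in t_seconds."""
    return C * t_seconds


# Counting 9 192 631 770 caesium periods gives exactly one second,
one_second = seconds_from_cs_cycles(9_192_631_770)
# and light covers one metre in 1/299 792 458 of a second.
one_metre = metres_from_light_time(1 / C)
print(one_second, one_metre)
```

After such a redefinition, a better experiment improves how well the unit is realized, not the value of the constant, which is now exact.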

The kilogram, however, stubbornly resisted all attempts to redefine it in terms of a natural phenomenon: mass proved exceedingly difficult to scale up from the micro- to the macro-world. And because mass is involved in the definitions of the ampere and mole, this also stalled attempts to redefine those units in the same way. Indeed, in 1975, at a celebration of the 100th anniversary of the Treaty of the Metre in Paris, the then BIPM director Jean Terrien remarked that tying the kilogram to a natural phenomenon remained a “utopian” dream. It looked like the International Prototype of the Kilogram (IPK) was here to stay.

Drifting standard

A pivotal event took place in 1988, when the IPK was removed from its safe and compared with the six identical copies kept with it, known as the témoins (“witnesses”). The previous such “verification”, which took place in 1946, had revealed slight differences between these copies, attributable to chemical interactions between the surface of the prototypes and the air, or to the release of trapped gas. The masses of the témoins appeared to be drifting upwards with respect to that of the prototype. The verification in 1988 confirmed this trend: not only the masses of the témoins, but also those of practically all the national copies had drifted upwards with respect to that of the prototype, which, relative to them, had become lighter by about 50 µg, a rate of change of about 0.5 parts per billion per year. The IPK behaved differently, for some reason, from its supposedly identical siblings.
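The quoted rate follows from simple arithmetic. The sketch below assumes the 50 µg divergence accumulated over roughly a century (the prototypes were adopted in 1889 and re-verified in 1988); that interval is an approximation made for illustration.

```python
# Approximate figures from the 1988 verification, as quoted above.
divergence_ug = 50.0       # mass difference between the copies and the IPK, micrograms
kilogram_ug = 1e9          # 1 kg expressed in micrograms
interval_years = 100.0     # assumed elapsed time, roughly 1889 to 1988

relative_change = divergence_ug / kilogram_ug       # dimensionless fraction of 1 kg
rate_per_year = relative_change / interval_years    # fractional change per year
rate_ppb_per_year = rate_per_year * 1e9             # parts per billion per year
print(f"{relative_change:.1e} in total, {rate_ppb_per_year:.2f} ppb per year")
```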

Quinn, who became the BIPM’s director in 1988, outlined the worrying implications of the IPK’s apparent instability in an article published in 1991 (IEEE Trans. Instrum. Meas. 40 81). Because the prototype is the definition of the kilogram, technically the témoins are gaining mass. But the “perhaps more probable” interpretation, Quinn wrote, is that “the mass of the IPK is falling with respect to that of its copies”, i.e. the prototype itself is unstable and losing mass. Although the current definition had “served the scientific, technical and commercial communities pretty well” for almost a century, efforts to find an alternative, he suggested, should be redoubled. Any artefact standard will have a certain level of uncertainty because its atomic structure is always changing – in some ways that can be known and predicted and therefore compensated for, in others that cannot. Furthermore, the properties of an artefact vary slightly with temperature. The ultimate solution would be to tie the mass standard, like the length standard, to a natural phenomenon. But was the technology ready? The sensible level of accuracy needed to replace the IPK, Quinn said, was about one part in 10⁸.

In 1991 two remarkable technologies – each developed in the previous quarter-century, and neither invented with mass redefinition in mind – showed some promise of being able to redefine the kilogram. One approach – the “Avogadro method” – realizes the mass unit using a certain number of atoms by making a sphere of single-crystal silicon and measuring the Avogadro constant. The “watt balance” approach, on the other hand, ties the mass unit to the Planck constant, via a special device that balances mechanical with electrical power. The two approaches are comparable because the Avogadro and Planck constants are linked via other constants the values of which are already well measured, including the Rydberg and fine-structure constants. Although in 1991 neither approach was close to being able to achieve a precision of one part in 10⁸, Quinn thought at the time that it would not be long before one or both would be able to do this. Unfortunately, his optimism was misplaced.
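The link between the two constants can be written as N_A h = c α² A_r(e) M_u / (2 R_∞), where α is the fine-structure constant, R_∞ the Rydberg constant, A_r(e) the relative atomic mass of the electron and M_u the molar mass constant. It follows from R_∞ = α² m_e c / (2h) with the electron mass written as m_e = A_r(e) M_u / N_A. The Python sketch below evaluates it; the inputs are CODATA 2018 values, assumed here purely for illustration (they postdate the meeting), so the code shows the relationship rather than the state of knowledge in 2011.

```python
# Reference values (CODATA 2018), assumed for illustration only.
c = 299_792_458.0             # speed of light, m s^-1 (exact)
alpha = 7.2973525693e-3       # fine-structure constant
r_inf = 10_973_731.568160     # Rydberg constant, m^-1
ar_e = 5.48579909065e-4       # relative atomic mass of the electron
m_u = 0.99999999965e-3        # molar mass constant, kg mol^-1


def planck_from_avogadro(n_a: float) -> float:
    """Planck constant implied by a given Avogadro constant.

    Uses N_A * h = c * alpha^2 * A_r(e) * M_u / (2 * R_inf).
    """
    molar_planck = c * alpha**2 * ar_e * m_u / (2.0 * r_inf)  # J s mol^-1
    return molar_planck / n_a


n_a = 6.02214076e23  # Avogadro constant, mol^-1
print(planck_from_avogadro(n_a))  # close to 6.626e-34 J s
```

Because the product N_A h is known far more precisely than either factor alone, a silicon-sphere value of N_A and a watt-balance value of h can be compared directly.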

The sphere…

The Avogadro approach (figure 2) defines the mass unit as corresponding to that of a certain number of atoms using the Avogadro constant (N_A), which is about 6.022 × 10²³ mol⁻¹. It would, of course, be impossible to count that many atoms one by one, but instead it can be done by making a perfect enough crystal of a single chemical element and knowing the isotopic abundances of the sample, the crystal’s lattice spacing and its density. Silicon crystals are ideal for this purpose as they are produced by the semiconductor industry to high quality. Natural silicon has three isotopes – silicon-28, silicon-29 and silicon-30 – and initially it seemed that their relative proportions could be measured sufficiently accurately. Although measuring lattice spacing proved harder, metrologists drew on a technique using combined optical and X-ray interferometers (COXI), which was pioneered in the 1960s and 1970s at the German national-standards lab (PTB) and the US National Bureau of Standards (NBS) – the forerunner of the National Institute of Standards and Technology (NIST). It relates optical fringes – hence metric length units – directly to X-ray fringes, which count lattice spacings. For a time in the early 1980s the results of the two groups differed by a full part per million (ppm). This disturbing discrepancy was finally explained by an alignment error in the NIST instrument, leading to an improvement in the understanding of how to beat back systematic errors in the devices.
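In its simplest form the counting works as follows. The cubic unit cell of silicon, with edge length a, contains eight atoms, so a crystal of density ρ and molar mass M gives N_A = 8M/(ρa³). The sketch below plugs in rounded handbook values for natural silicon; these inputs are assumptions for illustration, not the IAC’s data, and a real determination measures the sphere’s volume and mass directly rather than taking a tabulated density.

```python
# Rounded handbook values for natural silicon near room temperature
# (illustrative assumptions, not the IAC's measured data).
molar_mass = 28.0855e-3      # M, kg mol^-1
density = 2329.0             # rho, kg m^-3
lattice_a = 543.102e-12      # a, edge of the cubic unit cell, m
ATOMS_PER_CELL = 8           # diamond-cubic silicon has 8 atoms per unit cell


def avogadro_from_crystal(m_molar: float, rho: float, a: float) -> float:
    """N_A = 8 M / (rho a^3): molar mass divided by the mass of one atom."""
    volume_per_atom = a**3 / ATOMS_PER_CELL     # m^3 occupied by one atom
    mass_per_atom = rho * volume_per_atom       # kg per atom
    return m_molar / mass_per_atom              # atoms per mole


n_a = avogadro_from_crystal(molar_mass, density, lattice_a)
print(f"N_A ~ {n_a:.4e} mol^-1")
```

With these rounded inputs the result agrees with the accepted value to within roughly one part in 10⁴. Reaching parts in 10⁸ requires M, ρ and a each to be known at that level, which is why the isotopic composition that fixes M became so critical.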

The source of uncertainty that turned out to be much more difficult to overcome involved determining the isotopic composition of silicon. This appeared to halt progress toward greater precision in the measurement of the Avogadro constant at about three parts in 10⁷. Not only that, but the first result, which appeared in 2003, showed a difference from the watt-balance results of more than 1 ppm. There was a strong suspicion that the difference stemmed from the measurements of the isotopic composition of the natural silicon used in the experiment. The leader of the PTB team, Peter Becker, then had a stroke of luck. A scientist from the former East Germany, who had connections to the centrifuges that the Soviet Union had used for uranium separation, asked Becker if it might be possible to use enriched silicon. Realizing that a pure silicon-28 sample would eliminate what was then thought to be the leading source of error, Becker and collaborators jumped at the opportunity. Although buying such a sample would be too costly for a single lab – an eye-watering €2m for 5 kg of the material – in 2003 representatives of Avogadro projects from around the world decided to pool their resources to buy the sample and form the International Avogadro Coordination (IAC). Becker at the PTB managed the group, parcelling out tasks such as characterization of purity, lattice spacing and surface measurements to other labs.
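Why enrichment helps can be seen from the molar mass, M = Σ xᵢMᵢ, which enters N_A directly. The sketch below uses the isotope masses and representative natural abundances of silicon, plus a hypothetical enriched composition (its fractions are illustrative assumptions, not the IAC material’s measured values). It estimates how far a 1% relative error in the measured abundances of the two minor isotopes would shift M.

```python
# Relative atomic masses of the silicon isotopes (g/mol).
ISOTOPE_MASS = {28: 27.9769265, 29: 28.9764947, 30: 29.9737702}

# Representative natural abundances and a hypothetical enriched sample.
NATURAL = {28: 0.92223, 29: 0.04685, 30: 0.03092}
ENRICHED = {28: 0.99995, 29: 0.00004, 30: 0.00001}   # illustrative only


def molar_mass(fractions: dict) -> float:
    """Molar mass M = sum of x_i * M_i for the given amount fractions."""
    return sum(x * ISOTOPE_MASS[iso] for iso, x in fractions.items())


def relative_shift(fractions: dict, rel_err: float = 0.01) -> float:
    """Relative change in M if both minor-isotope fractions are overestimated
    by rel_err, with the excess taken from silicon-28."""
    perturbed = dict(fractions)
    for iso in (29, 30):
        delta = rel_err * fractions[iso]
        perturbed[iso] += delta
        perturbed[28] -= delta
    m0 = molar_mass(fractions)
    return abs(molar_mass(perturbed) - m0) / m0


for name, comp in (("natural", NATURAL), ("enriched", ENRICHED)):
    print(name, round(molar_mass(comp), 4), f"{relative_shift(comp):.1e}")
```

With these assumed numbers, the same 1% abundance error moves M by about four parts in 10⁵ for natural silicon but only by about two parts in 10⁸ for the enriched sample.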

The result was the creation of two beautiful spheres. “It looks like what we’ve made is just another artefact like the kilogram – what we are trying to get away from,” Becker said at January’s Royal Society meeting. “It’s not true – the sphere is only a method to count atoms.”

…and the balance

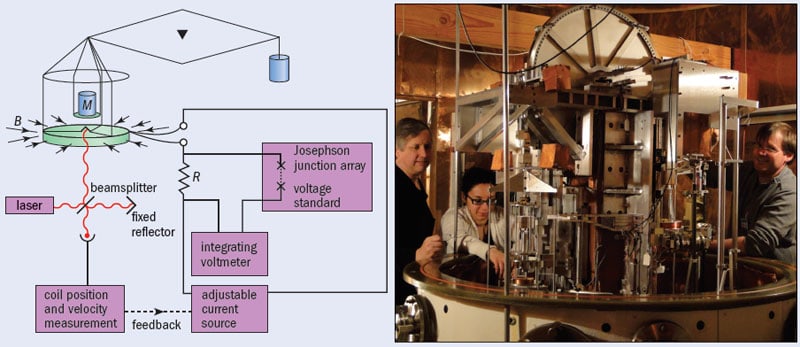

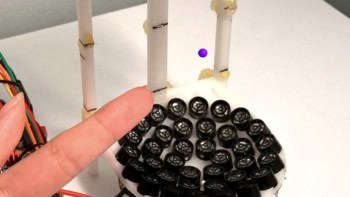

The second approach to redefining the kilogram involves an odd sort of balance. Whereas an ordinary balance compares one weight against another – a bag of apples, say, versus something else of known weight – the watt balance matches two kinds of forces: the mechanical weight of an object (F = mg) and the electrical force on a current-carrying wire placed in a strong magnetic field (F = ilB), where i is the current in the coil, l is its length and B is the strength of the field. The device (figure 3) is known as a watt balance because if the coil is moved at speed u, it generates a voltage V = Blu – and hence, by eliminating the product Bl between these expressions, the electrical power (Vi) is balanced by mechanical power (mgu). In other words, m = Vi/(gu).
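The algebra is worth spelling out, because the hard-to-measure factor Bl drops out. In weighing mode mg = Bli; in moving mode V = Blu; eliminating Bl gives mgu = Vi. The Python sketch below generates synthetic readings from a hypothetical coil factor Bl and then recovers the mass from V, i, g and u alone. All numerical values and function names are invented for illustration.

```python
# Hypothetical instrument parameters, for illustration only.
BL = 500.0        # coil factor B*l, T m (not needed by the final formula)
G = 9.80          # local gravitational acceleration, m s^-2
U = 0.002         # coil velocity in moving mode, m s^-1
TRUE_MASS = 1.0   # kg, the mass we pretend to be weighing


def weighing_mode_current(mass: float) -> float:
    """Current needed so that the magnetic force i*B*l balances the weight m*g."""
    return mass * G / BL


def moving_mode_voltage(speed: float) -> float:
    """Voltage induced when the coil moves through the field at the given speed."""
    return BL * speed


def watt_balance_mass(voltage: float, current: float, g: float, u: float) -> float:
    """m = V*i / (g*u): electrical power balanced against mechanical power."""
    return voltage * current / (g * u)


i = weighing_mode_current(TRUE_MASS)
v = moving_mode_voltage(U)
print(watt_balance_mass(v, i, G, U))  # equals TRUE_MASS up to floating-point rounding
```

The two modes need not share a known value of Bl; it only has to stay the same between them.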

In modern watt balances, the current, i, can be determined to a very high precision by passing it through a resistor and using the “Josephson effect” to measure the resulting drop in voltage. Discovered by Brian Josephson in 1962, this effect describes the fact that if two superconductors are separated by a thin insulating barrier, pairs of electrons tunnel across it coherently, so that microwave radiation of frequency f produces a voltage across the junction of V = hf/2e (or an integer multiple of it), where h is Planck’s constant and e is the elementary charge, the magnitude of the charge on the electron. The resistance of the resistor, meanwhile, is measured using the “quantum Hall effect”, which describes the fact that in two-dimensional electron systems at ultralow temperatures and in strong magnetic fields, the Hall conductance is quantized in integer multiples of e²/h. The voltage, V, is also measured using the Josephson effect, while the speed of the coil, u, can be measured by laser interferometry and the value of g with an absolute gravimeter.
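Substituting the two quantum effects shows where the Planck constant enters. Suppose the voltage is measured as V = n₁f₁h/(2e), and the current as a Josephson voltage n₂f₂h/(2e) across a resistor calibrated as R = h/(pe²) on the p-th quantum Hall plateau. Then Vi = n₁n₂pf₁f₂h/4: the elementary charge cancels, leaving m = n₁n₂pf₁f₂h/(4gu). The sketch below checks this numerically. The step numbers n₁ and n₂, the frequencies and the plateau index p are hypothetical, and the values of h and e are those fixed in the 2019 revision, used here only as convenient inputs.

```python
from math import isclose

H = 6.62607015e-34      # Planck constant, J s (value fixed in 2019)
E = 1.602176634e-19     # elementary charge, C (value fixed in 2019)


def josephson_voltage(n: int, f: float) -> float:
    """Voltage on the n-th Josephson step under microwaves of frequency f."""
    return n * f * H / (2 * E)


def hall_resistance(p: int) -> float:
    """Resistance on the p-th quantum Hall plateau, R = h / (p e^2)."""
    return H / (p * E**2)


def mass_from_quantum_standards(n1, f1, n2, f2, p, g, u):
    """Watt-balance mass with V and i = V2 / R expressed via quantum effects."""
    v = josephson_voltage(n1, f1)                         # moving-mode voltage
    i = josephson_voltage(n2, f2) / hall_resistance(p)    # weighing-mode current
    return v * i / (g * u)


def mass_closed_form(n1, f1, n2, f2, p, g, u):
    """Same result after cancelling e: m = n1 n2 p f1 f2 h / (4 g u)."""
    return n1 * n2 * p * f1 * f2 * H / (4 * g * u)


# Hypothetical settings: step numbers, 70 GHz drive, plateau p = 2, g and u.
args = (10_000, 70e9, 5_000, 70e9, 2, 9.80, 0.002)
print(isclose(mass_from_quantum_standards(*args), mass_closed_form(*args), rel_tol=1e-12))
```

Because only h survives, a watt balance with quantum electrical standards measures mass in terms of the Planck constant, which is what allows the argument to be run in reverse.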

What is remarkable about the watt balance is how it relies on several astonishing discoveries, none of which were made by scientists attempting mass measurements. One is the Josephson effect, which can measure voltage precisely. Another is the quantum Hall effect (discovered by Klaus von Klitzing in 1980), which can measure resistance precisely. The third is the concept of balancing mechanical and electrical power, which can be traced back to Bryan Kibble at the UK’s National Physical Laboratory (NPL) in 1975, who had actually been trying to measure the electromagnetic properties of the proton. These three discoveries can now be linked in such a way that the kilogram can be measured in terms of the Planck constant. By “bootstrapping”, the process could now in principle be reversed, and a specific value of the Planck constant used to define the kilogram.

In Michael Faraday’s famous popular lectures “The chemical history of a candle”, he called candles beautiful because their operation economically interweaves all the fundamental principles of physics then known, including gravitation, capillary action and phase transition. A similar remark could be made of watt balances. Though not as pretty as polished silicon spheres, they nevertheless combine the complex physics of balances – which include elasticity, solid-state physics and even seismology – with those of electromagnetism, superconductivity, interferometry, gravimetry and the quantum in a manner that exhibits deep beauty.

Towards the “new SI”

The Avogadro approach and watt balances each have their own merits (see “A tale of two approaches” below), but as the 21st century dawned, neither had reached an uncertainty smaller than a few parts in 10⁷, still far from Quinn’s target of one part in 10⁸. Nevertheless Quinn, who stepped down as BIPM director in 2003, decided to pursue the redefinition. Early in 2005 he co-authored a paper entitled “Redefinition of the kilogram: a decision whose time has come” (Metrologia 42 71), the subtitle derived from a (by then ironic) NBS report of the 1970s heralding imminent US conversion to the metric system. “The advantages of redefining the kilogram immediately outweigh any apparent disadvantages,” Quinn and co-authors wrote, despite the then apparent inconsistency of 1 ppm between the watt balance and silicon results. They were so confident of approval by the next CGPM in 2007 that they inserted language for a new definition into “Appendix A” of the BIPM’s official SI Brochure. Furthermore, they wanted to define each of the seven SI base units in terms of physical constants or atomic properties. In February 2005 Quinn organized a meeting at the Royal Society to acquaint the scientific community with the plan.

The reaction ran from lukewarm to hostile. “We were caught off guard,” as one participant recalled. The case for the proposed changes had not been elaborated, and many thought it unnecessary, given that the precision available with the existing artefact system was greater than that of the two newfangled technologies. Not only were the uncertainties achieved by the Avogadro and watt-balance approaches at least an order of magnitude away from the target Quinn had set in 1991, but there was also still this difference of 1 ppm to be accounted for. Nevertheless, the idea had taken hold, and in October 2005 the BIPM’s governing board, the International Committee for Weights and Measures (CIPM), adopted a recommendation that envisaged redefining four base units – the kilogram, as in Quinn’s 2005 paper, together with the ampere, kelvin and mole – in terms of fundamental physical constants (h, e, the Boltzmann constant k and N_A, respectively). Quinn and his colleagues then published a further paper in 2006 in which they outlined specific proposals for implementing the CIPM recommendation, with the idea that they be agreed at the 24th General Conference in 2011.

In recent years both approaches have made significant progress. In 2004 enriched silicon in the form of SiF₄ was produced in St Petersburg and converted into a polycrystal at a lab in Nizhny Novgorod. The polycrystal was shipped to Berlin, where a 5 kg rod made from a single crystal of silicon-28 was manufactured in 2007. The rod was sent to Australia to be fashioned into two polished spheres, and the spheres were measured in Germany, Italy, Japan and at the BIPM. In January this year the IAC reported a new measurement of N_A with a relative uncertainty of 3.0 × 10⁻⁸, tantalizingly close to the target (Phys. Rev. Lett. 106 030801). The authors describe the result as “a step towards demonstrating a successful mise en pratique of a kilogram definition based on a fixed N_A or h value” and claim it is “the most accurate input datum for a new definition of the kilogram”.

Watt-balance technology, too, has been steadily developing. Devices with different designs are under development in Canada, China, France, Switzerland and at the BIPM. The results suggest that an uncertainty of less than one part in 10⁷ can be reached. Their principal problem, however, is one of alignment: the force produced by the coil and its velocity must be carefully aligned with gravity. And as the overall uncertainty is reduced, it gets ever harder to make these alignments. The previous difference of 1 ppm between the two approaches has been reduced to about 1.7 parts in 10⁷ – close but not quite close enough.

Still, these results have led to a near-consensus in the metrological community that a redefinition is not only possible but likely. One BIPM advisory committee has proposed criteria for redefinition: there should be at least three different experiments, at least one from each approach, with an uncertainty of less than five parts in 10⁸; at least one should have an uncertainty of less than two parts in 10⁸; and all results should agree within a 95% confidence level. The proposal drafted by Quinn and colleagues, for what is called the “new SI”, is almost certain to be approved. It does not actually redefine the kilogram but “takes note” of the intention to do so. The redefinition is now in the hands of the experimentalists, who are charged with meeting the above criteria.
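The committee’s criteria are concrete enough to be written down as a check. The sketch below is a hypothetical encoding, not the committee’s own procedure: it reads “agree within a 95% confidence level” as every pair of results differing by no more than k = 2 combined standard uncertainties, which is one common convention but an assumption here, and it treats each result as a relative offset from an arbitrary reference value.

```python
from dataclasses import dataclass
from itertools import combinations
from math import sqrt


@dataclass
class Result:
    label: str
    method: str    # "avogadro" or "watt"
    offset: float  # relative deviation from an arbitrary reference value
    u: float       # relative standard uncertainty


def agree(a: Result, b: Result, k: float = 2.0) -> bool:
    """True if two results differ by at most k combined standard uncertainties."""
    return abs(a.offset - b.offset) <= k * sqrt(a.u**2 + b.u**2)


def meets_criteria(results: list, k: float = 2.0) -> bool:
    """Hypothetical encoding of the advisory committee's three conditions."""
    qualifying = [r for r in results if r.u < 5e-8]
    if len(qualifying) < 3:
        return False                       # need at least three experiments
    if not {"avogadro", "watt"} <= {r.method for r in qualifying}:
        return False                       # need at least one of each approach
    if not any(r.u < 2e-8 for r in qualifying):
        return False                       # need one result below 2 parts in 10^8
    return all(agree(a, b, k) for a, b in combinations(results, 2))


best = [
    Result("IAC", "avogadro", 0.0, 3.0e-8),
    Result("NIST", "watt", 1.7e-7, 3.6e-8),  # only the size of the gap matters here
]
print(agree(*best), meets_criteria(best))
```

Applied to the two best results quoted in this article (uncertainties of 30 and 36 parts per billion, differing by about 1.7 parts in 10⁷), the pair fails the agreement test, and two experiments would fall short of the three required in any case.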

The greatest change of all?

These developments gave Quinn the confidence to organize another meeting. This time, he and his fellow organizers devised a careful strategy. The 150 participants at January’s Royal Society meeting included three Nobel laureates: John Hall of JILA (whose work contributed to the redefinition of the metre); Bill Phillips of NIST; and von Klitzing himself. Under the new proposals, the constants used to define the base units will no longer be measured within the SI, because their numerical values will be fixed (see “Towards a new SI”). Furthermore, the definitions are similar in structure and wording, and the connection to physical constants is made explicit. The language makes it clear what these definitions really say – what it means to tie a unit to a natural constant – giving them a conceptual elegance. The proposed new definition for the kilogram, for example, is “The kilogram, kg, is the unit of mass; its magnitude is set by fixing the numerical value of the Planck constant to be equal to exactly 6.626 068… × 10⁻³⁴ when it is expressed in the unit s⁻¹ m² kg, which is equal to J s.” (The dots indicate that the final value has not yet been determined.)
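Read together with the fixed values of the caesium frequency and the speed of light, a definition of this form pins the kilogram to h, c and Δν_Cs. Eliminating the metre and the second gives 1 kg = [c²/(h Δν_Cs)] × h Δν_Cs/c², where the bracket is the pure number formed from the fixed numerical values. The sketch below computes that number, borrowing the value of h eventually fixed in the 2019 revision (6.626 070 15 × 10⁻³⁴ J s) purely for illustration, since the proposal discussed here left the final digits open.

```python
# Numerical values of the defining constants as fixed in the 2019 revision.
H_NUM = 6.62607015e-34        # Planck constant in J s
C_NUM = 299_792_458           # speed of light in m s^-1
DNU_CS_NUM = 9_192_631_770    # caesium hyperfine frequency in Hz


def kilogram_factor() -> float:
    """Pure number N such that 1 kg = N * h * dnu_Cs / c^2.

    Follows from h = H_NUM kg m^2 s^-1, c = C_NUM m s^-1 and
    dnu_Cs = DNU_CS_NUM s^-1 by eliminating the metre and the second.
    """
    return C_NUM**2 / (H_NUM * DNU_CS_NUM)


print(f"1 kg = {kilogram_factor():.7e} h dnu_Cs / c^2")
```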

The metrological community is vast and diverse, and different groups tend to have different opinions about the proposals. Those who make electrical measurements tend to be enthusiastic; h and e would become exact and much easier to work with. Moreover, the awkward split between the electrical units realized in practice with the Josephson and quantum Hall effects and those of the SI would be eliminated. The only outright objection from this corner at the meeting, by von Klitzing, was tongue-in-cheek. “Save the von Klitzing constant!” he protested, pointing out that his eponymous constant, R_K = h/e², which has been conventionally set (outside the SI) at 25,812.807 Ω exactly for two decades, would now follow from the fixed values of h and e, making it long and unwieldy rather than short and neat. Yet he went on to express sympathy with the redefinition, citing Max Planck’s remark in 1900 that “with the help of fundamental constants we have the possibility of establishing units of length, time, mass and temperature, which necessarily retain their significance for all cultures, even unearthly and non-human ones” (Ann. Phys. 1 69).
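Von Klitzing’s complaint is easy to check. With h and e fixed, R_K = h/e² becomes exact, but its decimal expansion no longer stops at 25 812.807. The sketch below uses the values of h and e eventually fixed in 2019 (an assumption relative to the proposal under discussion, whose final digits were still open), keeps everything as exact fractions, and compares the result with the conventional value. The helper `terminates` is a hypothetical name introduced here.

```python
from fractions import Fraction

# Exact values fixed in the 2019 revision, kept as exact fractions.
H = Fraction("6.62607015e-34")     # Planck constant, J s
E = Fraction("1.602176634e-19")    # elementary charge, C
RK_90 = Fraction("25812.807")      # conventional von Klitzing constant, ohm

rk = H / E**2                      # exact rational value of h/e^2, in ohms
relative_shift = (rk - RK_90) / RK_90


def terminates(q: Fraction) -> bool:
    """True if q has a finite decimal expansion (denominator only 2s and 5s)."""
    d = q.denominator
    for p in (2, 5):
        while d % p == 0:
            d //= p
    return d == 1


print(f"R_K = {float(rk):.6f} ohm")
print(f"shift from the conventional value: {float(relative_shift):.2e}")
print("finite decimal expansion:", terminates(rk))
```

The exact value differs from the conventional 25 812.807 Ω by a couple of parts in 10⁸, and the expansion never ends, which is precisely the loss of neatness von Klitzing lamented.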

The mass-measurement community tends to be less sanguine. Mass measurers can currently compare masses with about an order of magnitude greater precision – one part in 10⁹ – than they can achieve by directly measuring a constant. The new definitions thus appear to introduce more uncertainty into mass measurements than exists at present; in place of careful traceability back to a precisely measurable mass, you now have traceability back to a complicated experiment at various national laboratories. As Richard Davis, the recently retired head of the BIPM mass division, remarked about the SI, “It’s got to be like a piece of Shaker furniture: not just beautiful but functional.” Advocates, however, point out that comparison measurements conceal the uncertainty present in the kilogram artefact itself, so that ultimately no new uncertainty is introduced. “Uncertainty is conserved,” as Quinn remarked.

Absent from the meeting were the students, educators and other members of the public interested in metrology. As the Chicago Daily Tribune complained after the SI was created in 1960, “We get the feeling that important matters are being taken out of the hands, and even the comprehension, of the average citizen.” Woe to the colour-blind seamstress, it continued, who can use a tape measure but cannot tell an orange–red wavelength. The half-joke concealed the worry that measurement matters, which should be simple for the average person to understand, were about to become too complex for anyone except scientists. One of the attractions of science for students is that the concepts and practices are perspicuous, or aim to be – but the new SI seems to put the foundations of metrology out of reach of all but insiders. Woe to the butcher and grocer, someone may jest when the new SI takes effect, who are not proficient in quantum mechanics.

Still, each age bases its standards on the most solid ground it knows, and it is appropriate that in the 21st century this includes the quantum. None of the attendees at the Royal Society objected in principle to the idea that the kilogram should eventually be redefined in terms of the Planck constant. “It’s a scandal that we have this kilogram changing its mass – and therefore changing the mass of everything else in the universe,” Phillips remarked at one point. A few people were bothered that it now appeared impossible for scientists to detect whether certain fundamental constants were changing their values, though others pointed out that such changes would be detectable by other means.

Many participants, however, were troubled that the Avogadro and watt-balance teams have produced two measurements that are still not quite in sufficiently good agreement, throwing a spanner, if not perhaps a large one, into the attempt to pick a single value. “The person who has only one watch knows what time it is,” said Davis, citing an ancient piece of metrology wit. “The person who has two is not sure.”

Quinn appears confident that the discrepancy will be resolved in a few years. The only clear controversy on show at the meeting concerned what would happen if it is not. Quinn wants to plunge ahead with the redefinition anyway, arguing that the discrepancy lies at a level of precision so remote that it would not affect measurement practice. Some objected, fearing that there would be secondary effects, such as in legal metrology, which concerns legal regulations incorporated into national and international agreements. Others worry about the perception of metrology and metrological laboratories if a value is fixed prematurely and then the mass scale must be changed in the light of better measurements in the future. NPL director Brian Bowsher, referring to the current climate-change controversy in which sceptics leap on any hint of uncertainty in measurements, stressed the importance of being “the people who take the time to get it right”.

Calling the new SI the greatest change since the French Revolution may be hyperbole. The advent of the SI in 1960 was possibly just as important in that it introduced new units and tied existing ones to natural phenomena for the first time. The new changes will also have scarcely any impact on measurement practice, and are motivated largely by pedagogical and conceptual considerations. Nevertheless, the change is breathtaking in its ambition – the most extensive reorganization in metrology since the creation of the SI in 1960 – and the realization of a centuries-old dream.

The new SI also represents a transformation in the status of metrology. When the SI was created in 1960, metrology was regarded as something of a backwater in science – almost a service occupation. Metrologists built the stage on which scientists acted. They provided the scaffolding – a well-maintained set of measuring standards and instruments, and a well-supervised set of institutions that cultivated trust – that enabled scientists to conduct research. The new SI, and the technologies that make it possible, connect metrology much more intimately with fundamental physics.

A tale of two approaches

Avogadro method

Best measurement

The lowest uncertainty is the 30 parts per billion measurement made by the International Avogadro Coordination (IAC)

Advantages

- The definition of the kilogram using a fixed value of the Avogadro constant is reasonably intuitive, but requires auxiliary defining conditions

Disadvantages

- It is a very difficult experiment and requires a worldwide consortium (the IAC) to make the measurements on the two silicon-28 spheres currently in existence

- The measurements are unlikely to be repeated, so the existing spheres will become a form of artefact standard subject to questions about their long-term stability

Watt-balance approach

Best measurement

The lowest uncertainty is the 36 parts per billion measurement made by the National Institute of Standards and Technology (NIST) in the US

Advantages

- It is not an artefact standard; the technique measures a range of masses and is not limited to multiples and sub-multiples of 1 kg

- A watt balance can be built and operated by a single measurement laboratory

- The results from a worldwide ensemble of watt balances can be compared and combined. This would provide the world with a robust mass standard, more reliable than any individual contribution

- A fixed value of h, combined with a redefined ampere that fixes the value of the elementary charge, e, will be a big plus both for physics and high-precision electrical measurements

Disadvantages

- Although the principle is simple, the implementation is not, and requires significant investment of money, time and good scientists

- The definition of the kilogram using a fixed value of Planck’s constant is less intuitive than the current definition

Ian Robinson, National Physical Laboratory, UK