Microscopic objects and organisms can reveal a great deal about our world, and with today’s technology, you can even turn your mobile phone into a microscope. Of course, a phone-based microscope could never compare to a specialized laboratory microscope, right? Or perhaps it could.

A team of researchers at the University of California, Los Angeles, led by Aydogan Ozcan, specializes in techniques for imaging tiny objects outside of the research lab. In a recent report appearing online in Light: Science and Applications, the team used machine learning to extract phase information – which has many uses, including visualizing transparent objects and measuring object thicknesses – from images of complex biological samples taken with a hand-held portable camera. Thanks to their deep neural network algorithm, the team was able to reduce both the computing time and the number of images needed to perform biomedical imaging of patient samples (Light Sci. Appl. 7 e17141).

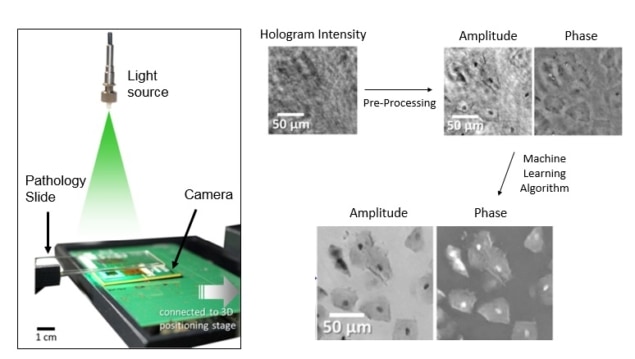

The researchers used a lens-free imaging device equipped with a camera similar to the one on a mobile phone (more details on lens-free imaging can be found in a recent Physics Today feature co-authored by Ozcan). In an unorthodox approach, they place the sample directly in front of the camera and shine light through it onto the camera using a simple LED. The light is distorted (scattered and phase-shifted) as it passes through the sample. The camera then captures the light, distortions and all, creating a hologram. The hologram is then processed computationally to reconstruct an image of the sample as it would be seen through a more expensive and bulky benchtop microscope.
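To make the hologram-formation step concrete, here is a minimal numerical sketch of light leaving a thin sample, travelling a short distance and being recorded as intensity. It uses the angular spectrum method, a standard textbook model of free-space propagation; it is not the team's code. The function names, the wavelength, the pixel size and the sample-to-sensor distance are all illustrative assumptions, not values from the report.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_size, distance):
    """Propagate a complex optical field over `distance` (angular spectrum method)."""
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pixel_size)  # spatial frequencies along x (cycles/m)
    fy = np.fft.fftfreq(ny, d=pixel_size)  # spatial frequencies along y (cycles/m)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent waves
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    propagating = arg > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, arg, 0.0))
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def simulate_hologram(sample_transmission, wavelength=530e-9,
                      pixel_size=1.12e-6, distance=500e-6):
    """Intensity recorded by a sensor placed `distance` behind a thin sample.

    All default values are assumed for illustration only.
    """
    field_at_sensor = angular_spectrum_propagate(
        sample_transmission, wavelength, pixel_size, distance)
    return np.abs(field_at_sensor) ** 2  # the sensor records intensity only

# A transparent (phase-only) disc: invisible in intensity at the sample plane
yy, xx = np.mgrid[:64, :64]
phase = np.zeros((64, 64))
phase[(yy - 32) ** 2 + (xx - 32) ** 2 < 100] = 1.0  # 1 rad phase delay (assumed)
sample = np.exp(1j * phase)

hologram = simulate_hologram(sample)
print("intensity contrast at sample plane:", np.ptp(np.abs(sample) ** 2))
print("intensity contrast in hologram:   ", np.ptp(hologram))
```

In this toy model the disc has no contrast at the sample plane, yet after propagation its phase delay shows up as interference fringes in the recorded intensity.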

A major challenge in this form of imaging is recovery of phase information. When light waves hit a camera’s detector, they contain both phase and intensity information, but the phase information is lost because optical cameras only detect intensity. “Phase information is particularly important for transparent objects that are hard to see – like unstained tissue samples,” says Ozcan.
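The loss of phase at the detector can be shown in a few lines. This is a toy illustration under simple assumptions (a 4×4 grid of unit-amplitude light, and a hypothetical `detect` function standing in for an ideal intensity sensor), not a model of any particular camera.

```python
import numpy as np

rng = np.random.default_rng(0)

# Two light fields with the same amplitude but different phase
amplitude = np.ones((4, 4))
phase_a = np.zeros((4, 4))
phase_b = rng.uniform(-np.pi, np.pi, size=(4, 4))
field_a = amplitude * np.exp(1j * phase_a)
field_b = amplitude * np.exp(1j * phase_b)

def detect(field):
    """Idealized sensor (hypothetical helper): records |field|^2, discarding phase."""
    return np.abs(field) ** 2

print("fields identical:     ", np.allclose(field_a, field_b))
print("recordings identical: ", np.allclose(detect(field_a), detect(field_b)))
```

Because distinct fields can produce the same recording, a single intensity image does not determine the phase on its own, which is why extra measurements or prior knowledge are needed to recover it.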

Several computational techniques for recovering phase information from intensity images have been invented, but they require multiple images of the sample and relatively long computation times. If you have a moving sample or need quick readouts – for tracking the swimming of sperm cells, for example – these long imaging and processing times can be problematic.

A key benefit of machine learning is the ability to solve complex problems without having a complete understanding of the physical processes underlying the data. “Remarkably, this deep learning-based phase recovery and holographic image reconstruction approach has been achieved without any modelling of light-matter interaction or wave interference,” the team writes. By training the machine-learning algorithm with a large dataset of images in which the correct phase was known, the team taught a neural network to reliably reconstruct images of biological samples, such as tissue sections used in pathology.

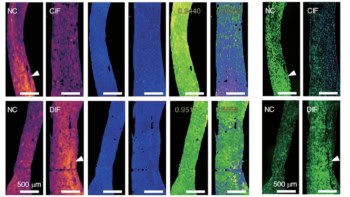

Using their algorithm, the researchers were able to reconstruct images of blood cells, Pap smears and breast cancer tissue slices. Notably, the team found that the machine-learning algorithm needed only one image to reconstruct the phase information of the specimen at a quality comparable to that achieved with two to three images using the previous state-of-the-art technique. In addition, the computation time was cut in half, and the algorithm handled out-of-focus images far better.

By requiring less sophisticated imaging and computing hardware, these advances could enable the development of more affordable portable imaging devices. In a presentation at Biodetection and Biosensors 2017, Ozcan noted that camera pixel count is increasing exponentially over time in a manner similar to Moore’s law. Computational advances such as this one are critical to ensuring that today’s rapidly improving hardware can be fully exploited, and this work demonstrates that machine learning has huge potential to impact this field.

“We have a line of exciting ongoing projects that use deep learning approaches for advancing imaging and sensing science,” concludes Ozcan.