Quantum mechanics is the most accurate theory we have to describe the world, but there is still much about it that we do not fully understand.

Quantum mechanics is a great deal more than a theory; it is a whole new way of looking at the world. When it was developed in the 1920s, quantum mechanics was viewed primarily as a way of making sense of the host of observations at the level of single electrons, atoms or molecules that could not be explained in terms of Newtonian mechanics and Maxwellian electrodynamics. Needless to say, it has been spectacularly successful in this task.

Around 75 years later, as we enter the new millennium, most physicists are confident that quantum mechanics is a fundamental and general description of the physical world. Indeed, serious attempts have been made to apply quantum ideas not merely to laboratory-scale inanimate matter but also, for example, to the workings of human consciousness and to the universe as a whole. Yet despite this confidence, the nagging questions that so vexed the founding fathers of quantum theory – and which many of them thought had finally been laid to rest after years of struggle – have refused to go away. Indeed, as we shall see, in many cases these questions have returned to haunt us in even more virulent forms. It is probably fair to say that, in the final years of this century, interest in the foundations of quantum mechanics is more widespread, and more intellectually respectable, than at any time since the invention of quantum theory.

I shall not have space here to discuss all the interesting technical advances of recent years, such as work on Zeno’s paradox or the properties of “post-selected” states. Instead I will confine myself to two aspects of the quantum world-view that are particularly alien to classical physics: these are “entanglement” and “non-realization”. These two topics are commonly associated with two famous paradoxes – the Einstein-Podolsky-Rosen (EPR) paradox and Schrödinger’s cat.

Quantum mechanics is usually interpreted as describing the statistical properties of “ensembles” of similarly prepared systems, such as the neutrons in a neutron beam, rather than individual particles. The ensemble is described by a wavefunction that can be a function of both space and time. This wavefunction, which is complex-valued, contains all the information that it is possible to know about the particles in the ensemble. The probability density for finding a particle at a particular position is given by the product of the wavefunction and its complex conjugate at that point. Energy, momentum and other quantities that can be measured in an experiment are represented by “operators”, and their distributions can be calculated if the wavefunction is known. The indeterminacy or uncertainty principle means that certain pairs of variables, such as position and momentum, cannot simultaneously be assigned definite values with arbitrary precision.
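The recipe in this paragraph can be checked numerically: the probability density is the wavefunction times its complex conjugate, and operators give the distributions of measurable quantities. The sketch below is a minimal illustration, not a method from the article. It assumes units with $\hbar = 1$ and an illustrative Gaussian wave packet of width $\sigma = 1$ and mean wavenumber $k_0 = 2$ (both parameters are our own choice). It normalizes $\psi^*\psi$, then evaluates $\Delta x$ and $\Delta p$ using the momentum operator $-i\hbar\,d/dx$ approximated by finite differences.

```python
import cmath
import math

HBAR = 1.0  # natural units: an assumption made for this sketch

def wave_packet(x, sigma=1.0, k0=2.0):
    """Illustrative complex wavefunction: a Gaussian packet of width sigma and mean wavenumber k0."""
    norm = (2 * math.pi * sigma**2) ** -0.25
    return norm * cmath.exp(-x**2 / (4 * sigma**2) + 1j * k0 * x)

# Sample psi on a grid wide enough that it is negligible at the edges.
dx = 0.01
xs = [-10 + i * dx for i in range(2001)]
psi = [wave_packet(x) for x in xs]

# Born rule: probability density = psi times its complex conjugate.
density = [(p * p.conjugate()).real for p in psi]
total = sum(density) * dx
mean_x = sum(x * rho for x, rho in zip(xs, density)) * dx
var_x = sum((x - mean_x) ** 2 * rho for x, rho in zip(xs, density)) * dx

# Momentum operator p = -i*hbar*d/dx, with the derivative taken by central differences.
dpsi = [(psi[i + 1] - psi[i - 1]) / (2 * dx) for i in range(1, len(psi) - 1)]
inner = psi[1:-1]
mean_p = sum((c.conjugate() * (-1j * HBAR * d)).real for c, d in zip(inner, dpsi)) * dx
mean_p2 = sum(abs(HBAR * d) ** 2 for d in dpsi) * dx   # <p^2> = hbar^2 * integral |psi'|^2

delta_x = math.sqrt(var_x)
delta_p = math.sqrt(mean_p2 - mean_p**2)
print(f"normalization = {total:.4f}")
print(f"delta_x * delta_p = {delta_x * delta_p:.4f}, bound hbar/2 = {HBAR / 2}")
```

For this particular packet the product $\Delta x\,\Delta p$ sits at the minimum value $\hbar/2$. Most other wavefunctions give a larger product.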

In quantum mechanics it is possible for a particle such as an electron to be in a superposition of two or more different quantum states, or “eigenstates”, at the same time. These eigenstates correspond to definite, but different, values of a particular quantity such as momentum. However, when the momentum of a particular particle is measured, a definite value is always found – as experiment confirms! In the conventional or Copenhagen interpretation of quantum theory, the particle in question “collapses” into the eigenstate corresponding to that value, and remains in this state for future measurements. Or, to put it more accurately, those particles that, on measurement, are found to have a particular value of the momentum constitute a new ensemble for future measurements, with properties different from the original ensemble.
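The ensemble reading of “collapse” can be mimicked with a short Monte Carlo sketch. The two momentum eigenvalues (±1 in arbitrary units) and their amplitudes 0.6 and 0.8i are hypothetical. Each simulated system yields one definite eigenvalue with probability |amplitude|². The sub-ensemble selected by one result then gives that same result again on re-measurement.

```python
import random
from collections import Counter

# Hypothetical superposition of two momentum eigenstates: eigenvalue (arbitrary units) -> amplitude.
state = {-1.0: 0.6, 1.0: 0.8j}

def measure(amplitudes, rng):
    """Return one eigenvalue, chosen with Born-rule probability |c|^2, and the collapsed state."""
    values = list(amplitudes)
    weights = [abs(c) ** 2 for c in amplitudes.values()]
    outcome = rng.choices(values, weights=weights)[0]
    collapsed = {v: (1.0 if v == outcome else 0.0) for v in values}
    return outcome, collapsed

rng = random.Random(0)
first = [measure(state, rng) for _ in range(10_000)]   # one measurement per ensemble member
counts = Counter(outcome for outcome, _ in first)
print({v: counts[v] / len(first) for v in sorted(counts)})   # close to 0.36 and 0.64

# The members that gave +1 form a new ensemble; measuring them again always gives +1.
sub_ensemble = [collapsed for outcome, collapsed in first if outcome == 1.0]
repeat = {measure(s, rng)[0] for s in sub_ensemble}
print(repeat)
```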

Entanglement and the EPR paradox

Although the standard interpretation of quantum mechanics does not allow the particles in an ensemble to possess definite values of all measurable properties simultaneously, it can be shown that the experimental predictions made by the theory are nevertheless compatible with the simultaneous existence of these properties. (This view is contrary to a long-standing misconception that may have resulted from a misreading of some early work by John von Neumann.) However, the simultaneous existence of these properties does require that certain non-standard, but not obviously unreasonable, assumptions are made about the effects of the measurement process.

The phenomenon of “entanglement” refers to the fact that the most general quantum description of an ensemble of systems in which each system is composed of two or more subsystems (such as pairs of electrons or photons) does not permit us to assign a definite quantum state to each of the individual subsystems. This turns out to have a dramatic consequence: if quantum mechanics gives the correct predictions for experiment and we are not prepared to relax very basic ideas about causality, then independent of any theoretical interpretation, the individual particles cannot be conceived of as possessing “properties” in their own right.

To introduce the idea of “entanglement”, let us consider a single “spin-½” particle such as an electron. When the intrinsic angular momentum or “spin” of the particle is measured along any direction, the answer is always $+\hbar/2$ or $-\hbar/2$, where $\hbar$ is the Planck constant divided by $2\pi$. The most general pure quantum state of a spin-½ particle can be written as $\phi(\mathbf{n})$, where $\mathbf{n}$ is the unit vector in the direction along which the spin is guaranteed to be $+\hbar/2$.
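To make $\phi(\mathbf{n})$ concrete, work in the basis of spin up and spin down along z. The state with spin $+\hbar/2$ along the unit vector with polar angle $\theta$ and azimuth $\varphi$ is then $(\cos(\theta/2),\ e^{i\varphi}\sin(\theta/2))$. This is the usual textbook convention, assumed here rather than taken from the article. The sketch checks that this spinor is an eigenvector of $\mathbf{n}\cdot\boldsymbol{\sigma}$ with eigenvalue +1, so that $S_{\mathbf{n}} = (\hbar/2)\,\mathbf{n}\cdot\boldsymbol{\sigma}$ gives $+\hbar/2$. It also checks that $\phi(-\mathbf{n})$ gives $-\hbar/2$.

```python
import cmath
import math

def unit_vector(theta, azimuth):
    return (math.sin(theta) * math.cos(azimuth),
            math.sin(theta) * math.sin(azimuth),
            math.cos(theta))

def spinor(theta, azimuth):
    """phi(n) in the S_z basis: spin +hbar/2 along n(theta, azimuth)."""
    return (math.cos(theta / 2), cmath.exp(1j * azimuth) * math.sin(theta / 2))

def n_dot_sigma(n, v):
    """Apply the 2x2 matrix n . sigma (sigma = Pauli matrices) to a two-component state v."""
    nx, ny, nz = n
    return (nz * v[0] + (nx - 1j * ny) * v[1],
            (nx + 1j * ny) * v[0] - nz * v[1])

theta, azimuth = 1.1, 0.4                          # an arbitrary direction n
n = unit_vector(theta, azimuth)
up = spinor(theta, azimuth)                        # phi(n)
down = spinor(math.pi - theta, azimuth + math.pi)  # phi(-n): the opposite direction

# S_n = (hbar/2) n.sigma, so eigenvalues +1 and -1 of n.sigma mean spin +hbar/2 and -hbar/2.
print(n_dot_sigma(n, up), up)
print(n_dot_sigma(n, down), down)
```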

Now consider two distinguishable spin-½ particles: one possible pure state for this system would involve each particle being in a single-particle pure state. This is written formally as $\phi_1(\mathbf{n}_1) \otimes \phi_2(\mathbf{n}_2)$, where $\mathbf{n}_1$ is the unit vector for particle 1 and $\mathbf{n}_2$ is the unit vector for particle 2. In such a “product” state it is possible to view each particle as possessing properties in its own right.
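A product state is simply the tensor (Kronecker) product of two single-particle states. Write a two-particle state in the basis up-up, up-down, down-up, down-down with amplitudes $c_{00}, c_{01}, c_{10}, c_{11}$. A standard criterion says the pure state factorizes exactly when $c_{00}c_{11} - c_{01}c_{10} = 0$. The sketch below builds $\phi_1(\mathbf{n}_1)\otimes\phi_2(\mathbf{n}_2)$ for two arbitrary directions and confirms the criterion. For contrast, it applies the same test to a superposition of two product states. The helper names are hypothetical.

```python
import cmath
import math

def spinor(theta, azimuth):
    """phi(n): spin +hbar/2 along the unit vector with polar angle theta and azimuth."""
    return (math.cos(theta / 2), cmath.exp(1j * azimuth) * math.sin(theta / 2))

def kron(a, b):
    """Tensor (Kronecker) product of two single-particle states; basis order 00, 01, 10, 11."""
    return tuple(x * y for x in a for y in b)

def factorization_test(c):
    """c00*c11 - c01*c10: zero exactly when a two-particle pure state is a product state."""
    c00, c01, c10, c11 = c
    return c00 * c11 - c01 * c10

product = kron(spinor(0.3, 1.2), spinor(2.0, -0.5))   # phi1(n1) x phi2(n2)
print(abs(factorization_test(product)))               # zero up to rounding error

# For contrast: a superposition of the two product states up-down and down-up.
up_z, down_z = (1.0, 0.0), (0.0, 1.0)
superposed = tuple((p - q) / math.sqrt(2) for p, q in zip(kron(up_z, down_z), kron(down_z, up_z)))
print(abs(factorization_test(superposed)))            # about 0.5: not a product state
```

Twice the modulus of this quantity is the concurrence, a common entanglement measure for pure two-qubit states. It is 0 for every product state and 1 for the superposition above.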

Nothing really changes if we consider statistical mixtures of product states: the vectors $\mathbf{n}_1$ and $\mathbf{n}_2$ still exist for any given pair, but we may not know what these vectors are. For example, we may know that either particle 1 has its spin “up” and particle 2 has its spin “down”, or vice versa, without knowing which of these two possible states the system is in. In such a case the experimental properties are still compatible with the idea that the particles “possess” individual properties but we do not know what these properties are.

However, we can also form “quantum superpositions” of product states and, as we shall see below, these so-called entangled states can have unique and counterintuitive properties. The best-known example of an entangled state is that which corresponds to two spin-½ particles with a total spin of zero:

$$\Upsilon(1,2) = \frac{1}{\sqrt{2}}\left[\phi_1(\mathbf{n}) \otimes \phi_2(-\mathbf{n}) - \phi_1(-\mathbf{n}) \otimes \phi_2(\mathbf{n})\right]$$

where $\mathbf{n}$ is a unit vector in an arbitrary direction. If the spin of particle 1 along any axis is measured and found to be $+\hbar/2$, then a measurement of the spin of particle 2 along the same axis is guaranteed to yield $-\hbar/2$. This property, while surprising, is not the most important property of the entangled state. What is unique about the entangled state, by its very definition, is that it is impossible to assign a quantum state (even an unknown one!) to each of the particles individually. In other words, the individual particles cannot be regarded as possessing properties in their own right.
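The difference between this entangled state and the statistical mixture described earlier shows up in the correlations. The sketch takes $\mathbf{n}$ along z and writes spin results in units of $\hbar/2$, so each result is ±1. It then evaluates $E(\mathbf{a},\mathbf{b}) = \langle(\mathbf{a}\cdot\boldsymbol{\sigma})\otimes(\mathbf{b}\cdot\boldsymbol{\sigma})\rangle$ for two cases: the state of total spin zero, and a 50/50 mixture of “up-down” and “down-up” product states. For the entangled state the textbook result is $E = -\mathbf{a}\cdot\mathbf{b}$, so the spins are opposite along every common axis. The mixture is anticorrelated only along z.

```python
import math

def pauli_along(n):
    """The 2x2 matrix n . sigma for a unit vector n = (nx, ny, nz)."""
    nx, ny, nz = n
    return [[nz, nx - 1j * ny], [nx + 1j * ny, -nz]]

def kron2(A, B):
    """Kronecker product of two 2x2 matrices; basis index = 2*(particle 1 bit) + (particle 2 bit)."""
    return [[A[i // 2][j // 2] * B[i % 2][j % 2] for j in range(4)] for i in range(4)]

def expectation(state, M):
    """<state| M |state> for a normalized 4-component pure state."""
    Mv = [sum(M[i][j] * state[j] for j in range(4)) for i in range(4)]
    return sum(state[i].conjugate() * Mv[i] for i in range(4)).real

r = 1 / math.sqrt(2)
singlet = [0.0, r, -r, 0.0]        # Upsilon(1, 2) with n along z
up_down = [0.0, 1.0, 0.0, 0.0]
down_up = [0.0, 0.0, 1.0, 0.0]

def correlation(a, b):
    """E(a, b) for the entangled state: +1 if results always agree, -1 if always opposite."""
    return expectation(singlet, kron2(pauli_along(a), pauli_along(b)))

def mixture_correlation(a, b):
    """The same quantity for a 50/50 mixture of the up-down and down-up product states."""
    M = kron2(pauli_along(a), pauli_along(b))
    return 0.5 * expectation(up_down, M) + 0.5 * expectation(down_up, M)

z, x = (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)
print(correlation(z, z), correlation(x, x))                  # both about -1
print(mixture_correlation(z, z), mixture_correlation(x, x))  # about -1 along z, 0 along x
```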

In 1964 the late John Bell showed that the possibility of entanglement had truly spectacular consequences. Bell considered an ensemble of pairs of spin-½ particles that have interacted in the past but are now so far apart that they are space-like separated in the sense of special relativity (that is, there is no time for a light signal to travel between them within the duration of the experiment). He then set out to find a description of the pairs, and of their interactions with the measuring apparatus, that satisfied the three postulates defining so-called “objective local” theories.

* Each particle is characterized by a set of variables. These variables might correspond to a quantum-mechanical wavefunction, but they do not have to. It is not excluded that the variables describing particles 1 and 2 have a strong statistical correlation.

* The statistical probability of a given outcome when the spin of particle 1 is measured along any axis is a function only of the properties of the relevant measuring apparatus and of the variables describing particle 1. In particular, the result is not physically affected either by the choice of measurement direction for particle 2, or by the outcome of that measurement. It is usually assumed that special relativity ensures, under appropriate experimental conditions, that this condition is satisfied.

* The properties of ensembles at a given time are determined only by the boundary conditions at earlier times: that is, there is no “retrospective causality”.
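To see what the three postulates allow, here is a toy objective local model of our own devising. It is not taken from the work discussed here, and for simplicity it restricts measurement directions to a plane. Each pair leaves the source with a shared hidden angle λ. Particle 1 gives +1 if its analyser angle α lies within 90° of λ and −1 otherwise; particle 2 follows the opposite rule for its angle β. The Monte Carlo sketch compares the resulting correlation with the quantum prediction $-\cos(\alpha-\beta)$ for the state of total spin zero.

```python
import math
import random

def sign(v):
    return 1 if v >= 0 else -1

def local_model_correlation(alpha, beta, samples=200_000, seed=1):
    """Monte Carlo estimate of E(alpha, beta) in a toy objective local model.

    Each pair carries a shared hidden angle lam (postulate 1). Particle 1's result
    depends only on its own analyser angle alpha and on lam; particle 2's only on
    beta and lam (postulate 2). lam is drawn at the source independently of the
    settings (postulate 3).
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(samples):
        lam = rng.uniform(0.0, 2 * math.pi)
        a = sign(math.cos(alpha - lam))
        b = -sign(math.cos(beta - lam))
        total += a * b
    return total / samples

for deg in (0, 45, 90):
    theta = math.radians(deg)
    print(deg, round(local_model_correlation(0.0, theta), 3), round(-math.cos(theta), 3))
```

The model reproduces the perfect anticorrelation at equal settings and agrees with quantum mechanics at 90°. In between, its correlation falls off linearly, as $-(1 - 2\theta/\pi)$, rather than as $-\cos\theta$. Bell's theorem shows that no model satisfying the three postulates can match the quantum curve at all settings.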

Bell’s theorem effectively says that an objective local theory and quantum theory will give different predictions for the results of certain experiments (see box 1). To test this, the first step is to prepare an ensemble of pairs of quantum particles in such a way that we believe the correct quantum-mechanical description is given by an entangled state. If we then perform the experiment under suitably ideal conditions, and verify the predictions of quantum mechanics, we have shown that no theory of the objective local type can describe the physical world. Note that this conclusion is valid even if quantum mechanics is not the correct theory.

The history of experiments to compare the predictions of quantum mechanics and objective local theories goes back almost 30 years, including a remarkable series of experiments by Alain Aspect and co-workers at the Institut d’Optique in Paris in the early 1980s. With some exceptions that we believe we understand, these experiments have confirmed the statistical predictions of quantum mechanics under conditions that, although not 100% ideal, are sufficient to have convinced most physicists that nature cannot be described by any objective local theory.

Therefore, if we wish to preserve the second and third postulates above – that is, to preserve our usual conceptions about locality in special relativity and the “arrow of time” – we must then reject the first postulate. In other words we must accept that “isolated” physical subsystems need not possess “properties” in their own right. This conclusion, which again is independent of the validity of quantum mechanics, is highly counterintuitive.

In the last few years there have been a number of significant developments in this area. On the experimental side, Nicolas Gisin’s group at the University of Geneva has confirmed the quantum predictions at spatial separations of greater than 10 kilometres. These experiments, and indeed all of the experiments performed so far in this area, rely on statistical averages over a number of measurements. However, Daniel Greenberger, Michael Horne and Anton Zeilinger (GHZ) have shown that if one considers three particles, rather than pairs of particles, it is possible, in principle, to discriminate between the predictions of quantum mechanics and objective local theories with a single measurement. In the GHZ approach there exists, under certain conditions, an experiment such that objective local theories predict that the outcome is 100% “yes”, while quantum mechanics predicts 100% “no”. Experiments along these lines are currently being developed.
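One standard form of the GHZ argument uses the three-particle state $(|000\rangle + |111\rangle)/\sqrt{2}$, with a spin measurement along x or y on each particle. This choice of state and axes is an illustration and not necessarily the version used in the experiments mentioned. The sketch computes the quantum predictions, each of which is certain (±1) rather than statistical. It then searches all 64 assignments of predetermined ±1 answers, as an objective local theory would have them, for one that reproduces those predictions.

```python
import itertools
import math

# Single-qubit Pauli matrices; op[out][in] = <out| op |in>.
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]

def apply_ops(ops, state):
    """Apply a tensor product of single-qubit operators (one per qubit) to an 8-component state."""
    out = [0j] * 8
    for j, amp in enumerate(state):
        if amp == 0:
            continue
        bits = [(j >> (2 - k)) & 1 for k in range(3)]   # qubit 1 is the most significant bit
        for new_bits in itertools.product((0, 1), repeat=3):
            coeff = amp
            for op, b_in, b_out in zip(ops, bits, new_bits):
                coeff *= op[b_out][b_in]
            out[(new_bits[0] << 2) | (new_bits[1] << 1) | new_bits[2]] += coeff
    return out

def expectation(ops, state):
    out = apply_ops(ops, state)
    return sum(s.conjugate() * o for s, o in zip(state, out)).real

r = 1 / math.sqrt(2)
ghz = [r, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, r]   # (|000> + |111>)/sqrt(2)
settings = {"XXX": (X, X, X), "XYY": (X, Y, Y), "YXY": (Y, X, Y), "YYX": (Y, Y, X)}
quantum = {name: round(expectation(ops, ghz)) for name, ops in settings.items()}
print(quantum)

def local_assignment_agrees(x1, y1, x2, y2, x3, y3):
    """Predetermined +/-1 answers for an x and a y measurement on each particle."""
    return (x1 * x2 * x3 == quantum["XXX"] and x1 * y2 * y3 == quantum["XYY"]
            and y1 * x2 * y3 == quantum["YXY"] and y1 * y2 * x3 == quantum["YYX"])

matches = [v for v in itertools.product((1, -1), repeat=6) if local_assignment_agrees(*v)]
print(len(matches))   # no local assignment reproduces all four quantum predictions
```

The search fails for an elementary reason. Multiplying the three predictions that involve two y measurements gives $x_1x_2x_3 = -1$, because each $y_i^2 = 1$. Quantum mechanics, however, predicts $+1$ for that product. In the ideal case, therefore, a single run that measures x on all three particles discriminates between the two pictures.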

Entanglement has also played an important role in the emerging field of quantum information. For example, a quantum computer could, in principle, exploit entanglement to perform certain computational tasks much faster than a conventional or classical computer (see box 2).

1. Experimental tests of Bell's theorem

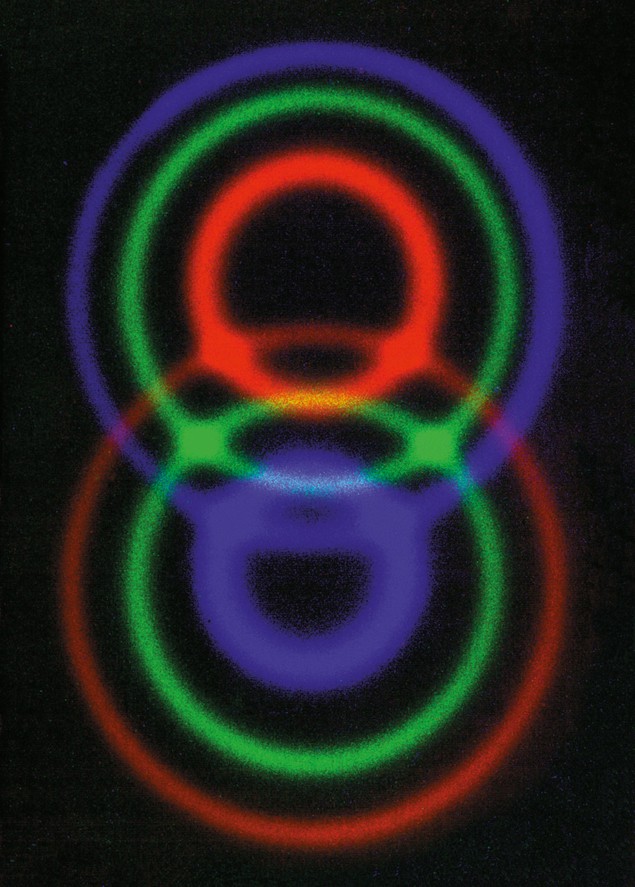

In a typical experimental test of Bell’s inequality, a source of entangled pairs of linearly polarized photons is placed between two polarizers. The probability of a photon passing through a polarizer depends on the angle between the polarization direction of the photon and the polarization axis of the polarizer. When this angle is zero, the photon always passes through. The probability of transmission falls as the angle increases, and reaches zero for an angle of 90°.

In the experiment we measure the probability that photon 1 passes through polarizer 1 and photon 2 passes through polarizer 2 as a function of the angle between the two axes of polarization. Quantum theory and objective local theories give different predictions for the result. In particular, objective local theories predict that a particular combination of the correlations derived from these probabilities, evaluated for four combinations of polarizer settings (two for each polarizer), must be less than or equal to 2 – this is the famous Bell inequality. Quantum mechanics, on the other hand, predicts that for suitably chosen settings the answer will be $2\sqrt{2} \approx 2.83$. Experimental results to date strongly support the predictions of quantum theory.
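The box does not specify the entangled state or the exact combination used. A common choice, assumed here, is the polarization state $(|HH\rangle + |VV\rangle)/\sqrt{2}$ together with the Clauser-Horne-Shimony-Holt (CHSH) combination $S = E(a,b) - E(a,b') + E(a',b) + E(a',b')$. Here $E$ is the probability that the two photons behave alike (both pass or both are blocked) minus the probability that they behave differently. For this state, quantum mechanics gives a joint transmission probability of $\tfrac12\cos^2(a-b)$ and hence $E = \cos 2(a-b)$. The sketch evaluates $S$ at the standard angles 0°, 45°, 22.5° and 67.5°. It compares the result with the maximum over every deterministic local assignment of outcomes. Any objective local model is a statistical mixture of such assignments, so that maximum bounds it as well.

```python
import itertools
import math

def joint_probs(a_deg, b_deg):
    """Quantum joint probabilities for (|HH> + |VV>)/sqrt(2); 'p' = transmitted, 'r' = blocked."""
    d = math.radians(a_deg - b_deg)
    same = 0.5 * math.cos(d) ** 2
    diff = 0.5 * math.sin(d) ** 2
    return {("p", "p"): same, ("r", "r"): same, ("p", "r"): diff, ("r", "p"): diff}

def correlation(a_deg, b_deg):
    """E = P(same outcome) - P(different outcome), which equals cos 2(a - b)."""
    p = joint_probs(a_deg, b_deg)
    return p[("p", "p")] + p[("r", "r")] - p[("p", "r")] - p[("r", "p")]

def chsh(E, a, a2, b, b2):
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)

s_quantum = chsh(correlation, 0.0, 45.0, 22.5, 67.5)

# Objective local bound: every deterministic assignment of +/-1 outcomes to the four settings.
s_local = max(abs(A * B - A * B2 + A2 * B + A2 * B2)
              for A, A2, B, B2 in itertools.product((1, -1), repeat=4))

print(f"quantum S = {s_quantum:.4f}, 2*sqrt(2) = {2 * math.sqrt(2):.4f}, local max = {s_local}")
```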

2. Quantum information

One context in which entanglement has recently played an important role is the emerging field of “quantum information”. In a classical two-state system, a single binary digit can completely specify the state (e.g. 1 for “up”, 0 for “down”); the system thus carries one classical “bit” of information, and a set of L such classical systems carries L bits. A quantum two-state system, such as a single spin-½ particle, is already richer because two real numbers (e.g. the polar and azimuthal angles of the unit vector $\mathbf{n}$) are needed to specify the state. Moreover, these numbers can vary continuously between certain limits. The system is said to carry one quantum bit or “qubit” of information, and a set of L two-state quantum systems can carry L qubits. However, only one of the two binary values, 0 or 1, will be detected when the qubit is measured.
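A sampling sketch illustrates the gap between the two continuous parameters that define a qubit and the single bit obtained on readout. It assumes the usual Bloch-sphere convention, $\cos(\theta/2)|0\rangle + e^{i\varphi}\sin(\theta/2)|1\rangle$, and measures many identically prepared copies. Each readout is only 0 or 1, so even an approximate value of $\theta$ can be recovered only from the statistics of many copies.

```python
import cmath
import math
import random

def qubit(theta, azimuth):
    """Amplitudes (c0, c1) for the Bloch vector with polar angle theta and azimuthal angle."""
    return math.cos(theta / 2), cmath.exp(1j * azimuth) * math.sin(theta / 2)

def measure(c, rng):
    """A measurement in the {0, 1} basis returns a single classical bit."""
    return 1 if rng.random() < abs(c[1]) ** 2 else 0

rng = random.Random(42)
c = qubit(math.pi / 3, 0.7)                 # two continuous parameters define the state...
bits = [measure(c, rng) for _ in range(100_000)]
print(sum(bits) / len(bits), math.sin(math.pi / 6) ** 2)   # ...but each readout is just 0 or 1
```

The azimuthal angle does not affect these statistics at all. Revealing it would need measurements along a different axis, again one bit at a time.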

A classical 5-bit register can store exactly one of 32 different numbers: i.e. the register can be in one of 32 possible configurations 00000, 00001, 00010, … , 11111 representing the numbers 0 to 31. But a quantum register composed of 5 qubits can simultaneously store up to 32 numbers in a quantum superposition. Once the register is prepared in a superposition of many different numbers, we can perform mathematical operations on all of them at once. The operations are unitary transformations that entangle the qubits. During such an evolution each number in the superposition is affected, so we are performing a massive parallel computation.
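The 5-qubit example can be simulated classically to show what “operating on all 32 numbers at once” means. The sketch uses a standard textbook construction that the box itself does not specify: a Hadamard gate on each qubit to create the equal superposition, then a reversible “oracle” $U_f|x\rangle|y\rangle = |x\rangle|y \oplus f(x)\rangle$ that writes $f(x)$ into a second register. The function `f` is hypothetical.

```python
import math

L = 5                 # number of qubits in the register
N = 2 ** L            # 32 basis states |00000> ... |11111>

def hadamard_all(amps, n_qubits):
    """Apply a Hadamard gate to each qubit of an n-qubit state vector (list of 2**n amplitudes)."""
    for q in range(n_qubits):
        bit = 1 << q
        new = amps[:]
        for i in range(len(amps)):
            if i & bit == 0:
                a, b = amps[i], amps[i | bit]
                new[i] = (a + b) / math.sqrt(2)
                new[i | bit] = (a - b) / math.sqrt(2)
        amps = new
    return amps

def f(x):
    """Hypothetical function to evaluate: any map from 5-bit integers to 5-bit integers would do."""
    return (3 * x + 1) % N

def apply_oracle(state, func):
    """U_f |x>|y> = |x>|y XOR f(x)>, applied to every term of the superposition in one step."""
    out = {}
    for (x, y), amp in state.items():
        key = (x, y ^ func(x))
        out[key] = out.get(key, 0.0) + amp
    return out

register = [0.0] * N
register[0] = 1.0                                        # input register starts in |00000>
register = hadamard_all(register, L)                     # equal superposition of all 32 numbers
joint = {(x, 0): amp for x, amp in enumerate(register)}  # output register starts in |00000>
joint = apply_oracle(joint, f)                           # entangled sum over |x>|f(x)>
print(len(joint), min(joint.values()), max(joint.values()))
```

This classical simulation has to loop explicitly over all $2^L$ terms, whereas the quantum register would undergo a single physical step. A measurement of the final state, however, yields only one randomly chosen pair $(x, f(x))$. This is why useful quantum algorithms must combine the parallel step with interference between the terms.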

This means that a quantum computer operating on L qubits can, in a single computational step, perform the same mathematical operation on $2^L$ different input numbers, and the result will be a superposition of all the corresponding outputs. In order to accomplish the same task, any classical computer has to repeat the computation $2^L$ times, or has to use $2^L$ different processors working in parallel. In this way a quantum computer offers an enormous gain in the use of computational resources, such as time and memory, although only in certain types of computation.

Whether such a “quantum computer” can realistically be built with a value of L that is large enough to be of practical use is a topic of much debate. However, the mere possibility has led to an explosive renaissance of interest in the host of curious and classically counterintuitive properties associated with entangled states. Other phenomena that rely on nonlocal entanglement, such as quantum teleportation and various forms of quantum cryptography, have also been demonstrated in the laboratory (see further reading).

Non-realization and Schrödinger’s cat

The second major element of the quantum world-view that is also completely counterintuitive to classical thinking is “non-realization” or, as it is more commonly called, the quantum measurement paradox. This is most famously illustrated by Schrödinger’s cat. In this thought experiment a cat is placed in a box, along with a radioactive atom that is connected to a vial containing a deadly poison. If the atom decays, it causes the vial to be smashed and the cat to be killed. When the box is closed we do not know if the atom has decayed or not. However, the atom is a quantum system, which means that it can be in both the decayed and non-decayed states at the same time. Therefore, the cat is also both dead and alive at the same time – which clearly does not happen in classical physics (or biology). However, when we open the box and look inside – that is when we make a measurement – the cat is either dead or alive.

Let us look at the situation more closely. Consider an ensemble of microscopic systems, such as electrons or photons, described by a state that is a quantum superposition of two orthogonal microstates, a and b. These microstates might be localized near one or other of the slits in a Young’s double-slit interference experiment. We know that any measurement that we carry out to discriminate between the states will always reveal each individual system in the ensemble to be either in state a or in state b. There is, however, overwhelming experimental evidence that if a measurement is not made, then the system remains in a quantum superposition of the two states.
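The experimental signature of an unmeasured superposition is interference. The sketch below is idealized: it assumes plane-wave amplitudes of equal magnitude from the two slits, uses a hypothetical fringe spacing, and ignores the single-slit envelope. It compares the screen intensity for the superposition $(a + b)/\sqrt{2}$ with that for a mixture in which each system is definitely in a or definitely in b. Only the superposition contains the cross term that produces fringes.

```python
import cmath
import math

FRINGE_SPACING = 1.0   # hypothetical fringe period on the screen (arbitrary units)

def psi_a(x):
    """Far-field amplitude at screen position x from slit a: unit magnitude, position-dependent phase."""
    return cmath.exp(1j * math.pi * x / FRINGE_SPACING)

def psi_b(x):
    """Far-field amplitude from slit b, with the opposite phase gradient."""
    return cmath.exp(-1j * math.pi * x / FRINGE_SPACING)

def superposition_intensity(x):
    """|(psi_a + psi_b)/sqrt(2)|^2 = 1 + cos(2*pi*x/spacing): includes the interference term."""
    return abs((psi_a(x) + psi_b(x)) / math.sqrt(2)) ** 2

def mixture_intensity(x):
    """Half the systems definitely in a, half definitely in b: no cross term, so no fringes."""
    return 0.5 * abs(psi_a(x)) ** 2 + 0.5 * abs(psi_b(x)) ** 2

for x in (0.0, 0.25, 0.5):
    print(x, round(superposition_intensity(x), 3), round(mixture_intensity(x), 3))
```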

If now we introduce a device that will amplify the microstate a to produce some state A of the macroworld, and amplify microstate b to produce a state B that is macroscopically different from A, then this same device will amplify the superposition of a and b to produce a corresponding superposition of the macroscopically distinct states A and B. Moreover, if we continue to interpret the concept of superposition at the macrolevel in the same way that we do at the microlevel, we are apparently forced to conclude that the relevant part of the macroscopic world does not realize a definite macroscopic state until it is observed! In other words the cat can be both dead and alive at the same time before we look at it.

A standard reaction to this argument, which can be traced back to Heisenberg, relies on an idea called “decoherence”. In decoherence a macroscopic body interacts so strongly with its “environment” that its quantum state rapidly gets entangled with that of the environment. For example, the description of the cat by a superposition of “dead” and “alive” states is not realistic – we would certainly need, as a minimum, to “entangle” these states with the corresponding states of the vial containing the poison and so on. In such an entangled state quantum mechanics predicts that any measurement made on the system alone (i.e. with no corresponding measurement on the “environment”) should give statistical results identical to those that would be obtained from a classical probabilistic mixture of the states A and B. In this classical state each system in the ensemble is either definitely in state A or definitely in state B, but we do not know which. As a consequence, it is argued, we can legitimately say that by the time the amplification of the microstates has reached the macrolevel, each individual system “really is” in one state or the other.
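The decoherence argument can be illustrated with a toy model of our own. A system starts in $(|a\rangle + |b\rangle)/\sqrt{2}$ and becomes entangled with N environment spins, each of which is rotated by a small angle ε only if the system is in b. Tracing out the environment leaves the diagonal entries of the system's density matrix at ½. The off-diagonal (interference) entries, however, are multiplied by the overlap $\langle E_b|E_a\rangle = \cos^N\varepsilon$. The values of N and ε in the sketch are hypothetical.

```python
import math

def environment_overlap(n_env, eps):
    """<E_b|E_a> when each of n_env environment spins is rotated by eps only if the system is in b."""
    return math.cos(eps) ** n_env

def reduced_density_matrix(n_env, eps):
    """System density matrix after tracing out the environment, starting from (|a> + |b>)/sqrt(2)."""
    off = 0.5 * environment_overlap(n_env, eps)
    return [[0.5, off], [off, 0.5]]

for n in (0, 10, 100, 1000, 10000):
    rho = reduced_density_matrix(n, eps=0.1)
    print(n, f"{rho[0][1]:.3e}")   # off-diagonal coherence shrinks exponentially with n
```

Once the off-diagonal entries are negligible, no measurement on the system alone can distinguish its statistics from those of a classical mixture of a and b. Whether that settles which outcome “really” occurs is the question at issue in the measurement problem.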

There is no doubt that the phenomenon of decoherence is real – it has a very firm theoretical basis, and has been confirmed in a very elegant series of quantum-optics experiments by Serge Haroche, Jean-Michel Raimond and co-workers at the Ecole Normale Supérieure in Paris. The two states in these experiments differed by about 10 photons, so they are not perhaps “macroscopically” distinct, but the principle is the same.

The question is whether invoking decoherence really solves the quantum measurement problem. A substantial minority of physicists (including the current author) feel that it does not, and this has led to several interesting developments in both theory and experiment over the last couple of decades.

Theoretical work in this area can be classified into two broad categories. The first covers work that accepts that, in principle, the formalism of quantum mechanics gives a complete description of the physical world at all levels, including the macroscopic and even the cosmological levels. Physicists who adopt this approach seek to re-interpret the formalism in such a way as to avoid or reduce the problems associated with “non-realization”. Recent developments have included the “consistent-histories” interpretation and also its variants, the “Ithaca interpretation” of David Mermin, a vigorous revival of interest in the ideas of the late David Bohm, and various ideas in “quantum cosmology”.

The second broad category covers “alternative” theories that, in general, do not preserve all the experimental predictions of standard quantum mechanics. These theories generally try to preserve the predictions of quantum mechanics at the atomic level, where the theory has been well tested, while providing a physical mechanism that allows a single definite macroscopic outcome to occur. Experimental tests of the alternative theories might be possible in the near future. The best-developed theory of this type is that advocated by Giancarlo Ghirardi, Alberto Rimini, Tullio Weber and Philip Pearle.
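Simple arithmetic shows how such a theory can leave atoms almost untouched while acting quickly on macroscopic bodies. As an assumption for illustration, take the commonly quoted Ghirardi-Rimini-Weber value of about $10^{-16}\,\mathrm{s}^{-1}$ for the rate at which a single particle undergoes a spontaneous localization. If a superposition involves N particles in macroscopically different positions, the first localization of any one of them collapses the whole superposition. That first localization occurs at a rate of roughly N times the single-particle rate.

```python
SECONDS_PER_YEAR = 3.156e7
LAMBDA_GRW = 1e-16   # assumed localization rate per particle, s^-1 (commonly quoted GRW value)

def mean_collapse_time(n_particles, rate=LAMBDA_GRW):
    """Mean waiting time for the first spontaneous localization among n_particles displaced particles."""
    return 1.0 / (n_particles * rate)

print(f"single particle: {mean_collapse_time(1) / SECONDS_PER_YEAR:.1e} years")
print(f"1e23 particles: {mean_collapse_time(1e23):.1e} s")
```

A single particle would wait of order $10^8$ years, while $10^{23}$ particles collapse in about $10^{-7}$ s.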

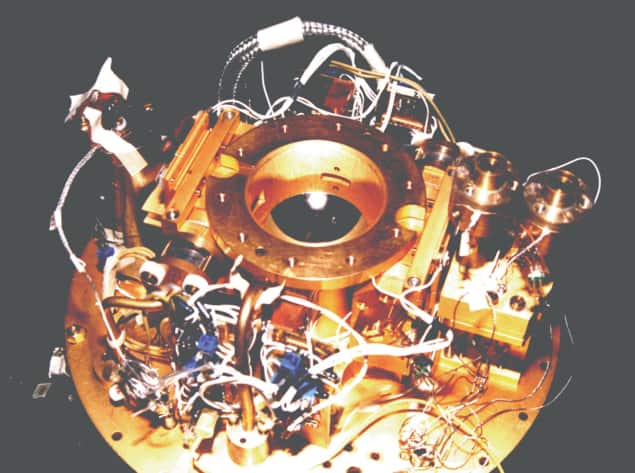

Over the last two decades it has become widely accepted that the traditional dogma – that is, the belief that decoherence will always render macroscopic superpositions unobservable – may fail if the dissipative coupling between the system and the environment can be sufficiently controlled. Several experimental groups have therefore searched for evidence of macroscopic superpositions. The workhorse system for these experiments is a superconducting device that incorporates the Josephson effect, for which it is not usually disputed that the states in question are indeed “macroscopically” distinct. These states differ in that $10^{15}$ or so electrons (about 1 microamp) are circulating in opposite directions: in one state the current is flowing in a clockwise direction, in the other the current is anticlockwise. What experimentalists look for in most of these experiments – and in related experiments on bio-magnetic molecules, mesoscopic devices and other systems – is evidence that quantum mechanics still gives reliable experimental predictions, even under conditions where the theory predicts superpositions of macroscopically distinct states. The evidence itself, however, is usually somewhat circumstantial in nature, involving phenomena such as tunnelling or resonance behaviour.

To date none of these experiments has produced any evidence for the breakdown of quantum mechanics. Indeed, in experiments where the parameters are well controlled, the agreement between experiment and the predictions of quantum theory has been quite satisfying. However, it is important to appreciate that no experiment to date has definitively excluded a “macrorealistic” view of the world in which a macroscopic object is in a definite macroscopic state at all times. The macrorealistic view is, needless to say, incompatible with the quantum picture. However, no one has shown so far that it makes experimental predictions that are incompatible with those of quantum theory for any experiments that have actually been performed. An experiment that, if successful, may be able to do this is currently being built by a group led by Giordano Diambrini Palazzi of the University of Rome La Sapienza (www.roma1.infn.it/~webmqc/home.htm). If this experiment confirms the predictions of quantum mechanics, it will at the same time rule out the macrorealist view at the level of a Josephson device.

Quantum outlooks

Whither quantum mechanics in the next millennium? We do not know, of course, but here are two reasonable guesses for the short term. First, irrespective of whether or not “quantum computation” becomes a reality, the exploitation of the weird properties of entangled states is only in its infancy. Second, experimental work related to the measurement paradox will become progressively more sophisticated and eventually advance into the areas of the brain and of consciousness.

This, of course, assumes that physicists will maintain their current faith in quantum mechanics as a complete description of physical reality. This is something on which I would personally bet only at even odds for the year 2100, and bet heavily against as regards the year 3000!