Despite recent progress, quantum computers have not yet come of age. So why are companies selling software for them? Jon Cartwright finds out

When John Preskill coined the phrase “quantum supremacy” in 2011, the idea of a quantum computer that could outperform its classical counterparts felt more like speculation than science. During the 25th Solvay Conference on Physics, Preskill, a physicist at the California Institute of Technology, US, admitted that no-one even knew the magnitude of the challenge. “Is controlling large-scale quantum systems merely really, really hard,” he asked, “or is it ridiculously hard?”

Eight years on, quantum supremacy remains one of those technological watersheds that could be either just around the corner or 20 years in the future, depending on who you talk to. However, if claims in a recent Google report hold up (which they may not), the truth would seem to favour the optimists – much to the delight of a growing number of entrepreneurs. Across the world, small companies are springing up to sell software for a type of hardware that could be a long, long way from maturity. Their aim: to exploit today’s quantum machines to their fullest potential, and get a foot in the door while the market is still young. But is there really a market for quantum software now, when the computers that might run it are still at such an early stage of development?

Showing promise

Quantum computers certainly have potential. In theory, they can solve problems that classical computers cannot handle at all, at least in any realistic time frame. Take factorization. Finding prime factors for a given integer can be very time consuming, and the bigger the integer gets, the longer it takes. Indeed, the sheer effort required is part of what keeps encrypted data secure, since decoding the encrypted information requires one to know a “key” based on the prime factors of a very large integer. In 2009, a dozen researchers and several hundred classical computers took two years to factorize a 768-bit (232-digit) number used as a key for data encryption. The next number on the list of keys consists of 1024 bits (309 digits), and it still has not been factorized, despite a decade of improvements in computing power. A quantum computer, in contrast, could factorize that number in a fraction of a second – at least in principle.
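Shor's algorithm, the best-known quantum factoring method, turns factorization into a different problem: finding the period r of the sequence a^x mod N. Only that period-finding step needs a quantum computer, and the rest is ordinary arithmetic. The minimal Python sketch below (function names are hypothetical) does the period finding by brute force. That is exactly the step whose cost can grow exponentially with the number of digits of N on a classical machine.

```python
from math import gcd

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This loop is the step a quantum computer would
    speed up; classically it can take time exponential in the digits of n.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_period(n: int, a: int):
    """Try to split n using the period of a modulo n (assumes 1 < a < n).

    Returns a pair of factors, or None if this choice of a fails.
    """
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None               # odd period: try another a
    y = pow(a, r // 2, n)         # a square root of 1 modulo n
    if y == n - 1:
        return None               # trivial square root: try another a
    p = gcd(y - 1, n)
    return p, n // p

print(factor_via_period(15, 7))
```

For example, `factor_via_period(15, 7)` finds the period 4 and returns `(3, 5)`.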

Other scientific problems also defy classical approaches. A chemist, for example, might know the reactants and products of a certain chemical reaction, but not the states in between, when molecules are joining or splitting up and their electrons are in the process of entangling with each other. Identifying these transition states might reveal useful information about how much energy is needed to trigger the reaction, or how much a catalyst might be able to lower that threshold – something that is particularly important for reactions with industrial applications. The trouble is that there can be a lot of electronic combinations. To fully model a reaction involving 10 electrons, each of which has (according to quantum mechanics) two possible spin states, a computer would need to keep track of 2¹⁰ = 1024 possible states. A mere 50 electrons would generate more than a quadrillion possible states. Get up to 300 electrons, and you have more possible states than there are atoms in the visible universe.
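The arithmetic behind these figures is simple doubling: each extra two-state electron doubles the count. A short Python check, treating 10^80 as an assumed order-of-magnitude figure for the number of atoms in the visible universe:

```python
def spin_configurations(n_electrons: int) -> int:
    """Number of spin configurations for n electrons with two spin states each."""
    return 2 ** n_electrons

# Common order-of-magnitude estimate, used here as an assumption.
ATOMS_IN_VISIBLE_UNIVERSE = 10 ** 80

for n in (10, 50, 300):
    count = spin_configurations(n)
    print(f"{n:>3} electrons: {count:.3e} states, "
          f"more than atoms in the universe: {count > ATOMS_IN_VISIBLE_UNIVERSE}")
```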

Classical computers struggle with tasks like these because the bits of information they process can only take definite values of zero or one, and therefore can only represent individual states. In the worst case, states have to be worked through one by one. By contrast, quantum bits, or qubits, do not take a definite value until they are measured; before then, they can exist in a superposition of zero and one, and their values can be correlated with those of their neighbours through entanglement. In this way, even a small number of qubits can collectively represent a huge “superposition” of possible states for a system of particles, which for certain problems could make otherwise intractable calculations feasible.
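Simulating qubits on a classical machine runs straight into the counting problem described above: a register of n qubits is described by 2^n amplitudes, and a classical simulator must store every one. The pure-Python sketch below (the function name is hypothetical) applies a Hadamard gate to each qubit of |0...0⟩ and returns the full state vector, an equal superposition of all 2^n bit strings.

```python
import math

def uniform_superposition(n_qubits: int) -> list[float]:
    """State vector after a Hadamard gate on each of n qubits, starting from |0...0>.

    Index i of the returned list holds the amplitude of the bit string
    whose binary representation is i (bit q belongs to qubit q).
    """
    state = [1.0] + [0.0] * (2 ** n_qubits - 1)  # all qubits start in |0>
    h = 1 / math.sqrt(2)
    for q in range(n_qubits):
        new_state = [0.0] * len(state)
        for index, amp in enumerate(state):
            bit = (index >> q) & 1
            partner = index ^ (1 << q)  # same bit string with qubit q flipped
            # Hadamard: |0> -> (|0> + |1>)/sqrt(2), |1> -> (|0> - |1>)/sqrt(2)
            new_state[index] += h * amp * (-1 if bit else 1)
            new_state[partner] += h * amp
        state = new_state
    return state

state = uniform_superposition(3)
print(len(state), state[0])  # 8 amplitudes, each equal to 1/sqrt(8)
```

Measuring this state returns just one random bit string, which is why useful quantum algorithms must arrange for interference to favour the right answer; superposition alone is not enough.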

Near-term improvements

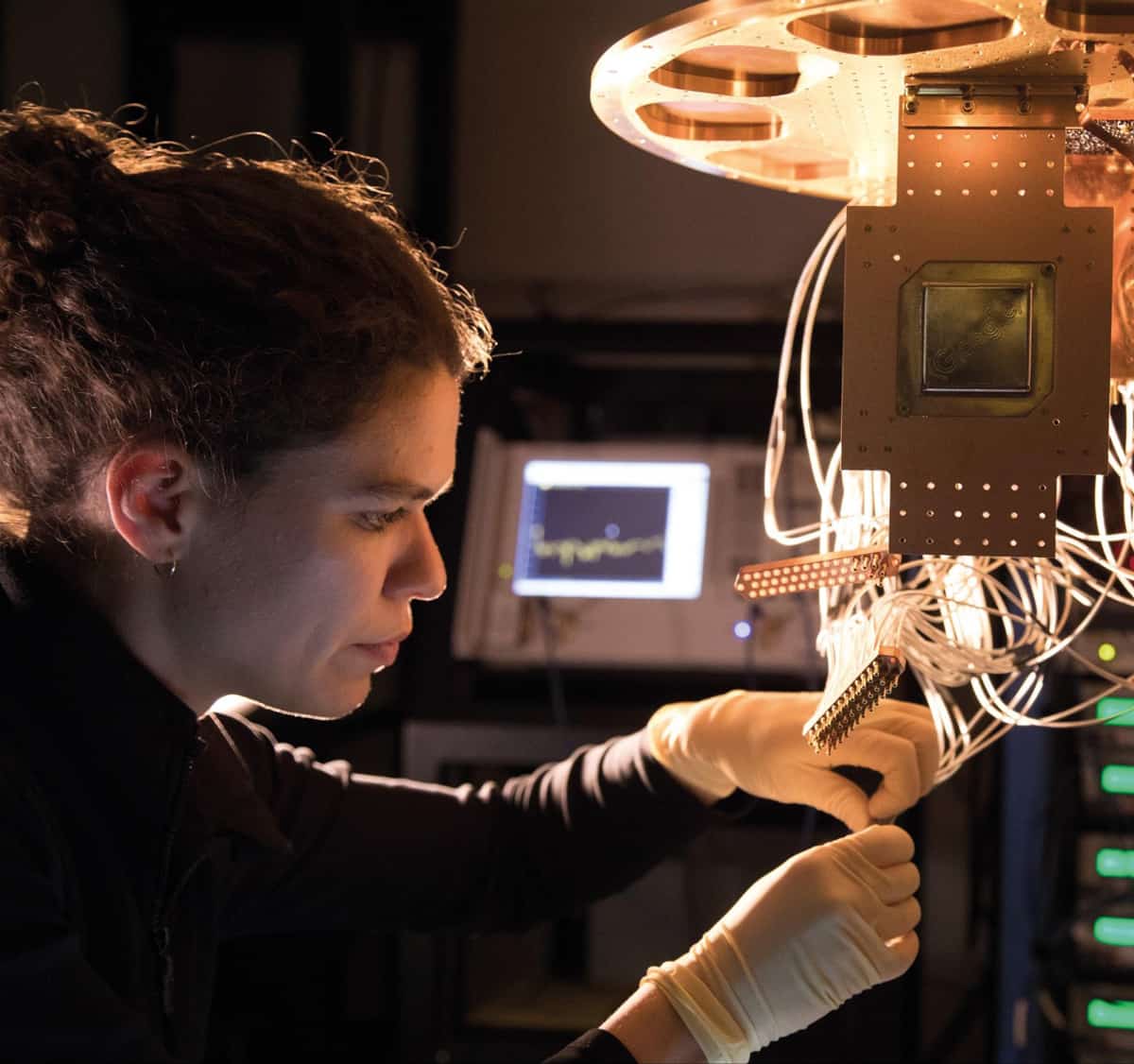

Today’s biggest universal quantum computer – or at least the biggest one anyone is talking about publicly – is Google’s Bristlecone, which consists of 72 superconducting qubits. In second place comes IBM’s 50-qubit superconducting model. Other firms, including Intel, Rigetti and IonQ, have built smaller yet still sizeable quantum computers based on superconducting, electron-spin, trapped-ion and other types of qubit. “In terms of advanced machines capable of processing more than one or two qubits, there are several dozen systems currently in existence globally,” says Michael Biercuk, a quantum physicist at the University of Sydney, Australia.

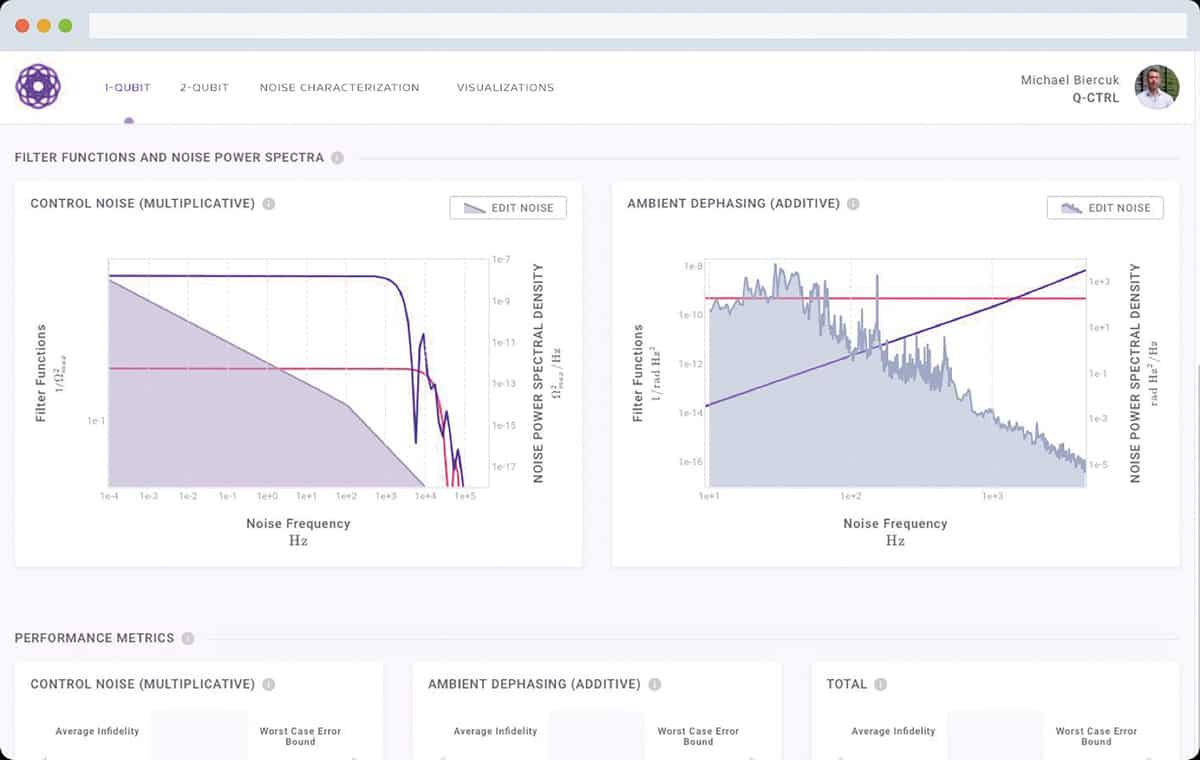

That might not sound like enough to constitute a market for quantum software. However, Biercuk argues that the overall market for quantum technologies – including not just computing but also sensing, metrology and imaging – could be worth billions of dollars in the next four years. In 2017, Biercuk gained “multi-million dollar” funding from venture capitalists to found a company, Q-CTRL, and take a slice of this pie. Now, two years later, the company has 25 employees, has reported an additional $15m in investment and is in the process of opening a second office in Los Angeles, US.

The reason for Q-CTRL’s strong start is partly that it is not waiting for the perfect quantum computer to come along. Instead, it is selling software designed to improve the quantum computers already in existence. The major potential for improvement lies in reducing errors. Whereas a transistor in a classical computer makes an error on average once in a billion years, a qubit in the average quantum computer makes an error once every thousandth of a second, usually because it interacts undesirably with another qubit (“cross-talk”) or with the external environment (the quantum-mechanical effect known as decoherence). Quantum error-correction schemes can make up for these errors by combining many fragile physical qubits into a single composite “logical” qubit, so that the effects of cross-talk and decoherence are diluted. Doing this, however, requires more qubits – thousands, even millions more – which are not in great supply to begin with.
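The trade-off behind logical qubits can be seen in a toy model: a classical repetition code that copies one bit n times and decodes by majority vote. This Python sketch assumes independent bit-flip errors with probability p on each copy, a big simplification of real quantum error correction, which must also handle phase errors. The function name is hypothetical.

```python
from math import comb

def logical_error_rate(p: float, n: int = 3) -> float:
    """Probability that majority voting over n copies (n odd) gives the wrong bit.

    Toy model: each copy flips independently with probability p, and
    decoding fails when more than half of the copies have flipped.
    """
    return sum(comb(n, k) * p**k * (1 - p)**(n - k)
               for k in range(n // 2 + 1, n + 1))

for n in (1, 3, 5):
    print(n, logical_error_rate(0.01, n))
```

With p = 0.01, three copies give a failure probability of about 3 × 10⁻⁴ and five copies do better still. For p above one-half, however, encoding makes things worse: extra physical qubits only help when each one is already good enough.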

Q-CTRL’s software builds on Biercuk’s academic research by employing machine-learning techniques to identify the logic operations most prone to qubit error. Once these bothersome operations are identified, the software replaces them with alternatives that are more nuanced but mathematically equivalent. As a result, the overall error rate drops without recourse to more qubits. According to Biercuk, these clever substitutions mean that a client’s algorithm can be executed in a way that minimizes the effects of decoherence, and enables longer, more complex programs to be run on a given quantum computer – without making any improvement in the hardware.
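What “mathematically equivalent” means can be checked directly: two gate sequences are interchangeable if they multiply out to the same unitary matrix, up to an unobservable global phase. The sketch below is not Q-CTRL’s method, which the article does not detail; it simply verifies one textbook identity, that a CNOT gate equals a controlled-Z gate sandwiched between Hadamard gates on the target qubit. Helper names are hypothetical, and plain Python lists stand in for a matrix library.

```python
import math

Matrix = list[list[complex]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Ordinary matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def kron(a: Matrix, b: Matrix) -> Matrix:
    """Kronecker (tensor) product, used to act on one qubit of a pair."""
    p, q = len(b), len(b[0])
    return [[a[i // p][j // q] * b[i % p][j % q]
             for j in range(len(a[0]) * q)]
            for i in range(len(a) * p)]

def equivalent(u: Matrix, v: Matrix, tol: float = 1e-9) -> bool:
    """True if v equals u up to a global phase factor."""
    # Estimate the phase from the largest-magnitude entry of u.
    i, j = max(((r, c) for r in range(len(u)) for c in range(len(u[0]))),
               key=lambda rc: abs(u[rc[0]][rc[1]]))
    phase = v[i][j] / u[i][j]
    if abs(abs(phase) - 1) > tol:
        return False
    return all(abs(v[r][c] - phase * u[r][c]) <= tol
               for r in range(len(u)) for c in range(len(u[0])))

s = 1 / math.sqrt(2)
I2 = [[1, 0], [0, 1]]
H = [[s, s], [s, -s]]
# Basis order |control, target>: 00, 01, 10, 11.
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]
CZ = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, -1]]

H_on_target = kron(I2, H)
alternative = matmul(H_on_target, matmul(CZ, H_on_target))
print(equivalent(CNOT, alternative))  # True
```

A compiler that swaps one sequence for the other can run such a check to confirm that the program’s output is unchanged, whatever the physical error rates of the two sequences.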

Though Biercuk declines to reveal how many clients his company has, he says they range from quantum-computing newcomers to expert research and development teams. “Overall, our objective is to educate where appropriate, and deliver maximum value to solve their problems, irrespective of their level of experience in our field,” he explains.

First applications

Research suggests that error-reduction software could play a major role in establishing near-term applications for quantum computers. The same year Q-CTRL was founded, scientists at Microsoft Research in Washington, US, and ETH Zurich in Switzerland investigated the number of qubits required to simulate the behaviour of an enzyme called nitrogenase. Bacteria use this enzyme to make ammonia directly from atmospheric nitrogen. If humans could understand this process well enough to reproduce it, the carbon footprint associated with producing nitrogen fertilizer would plummet. The good news, according to the Microsoft-ETH team, is that an error-free quantum computer would require only 100 or so qubits to simulate the nitrogenase reaction. The bad news is that once the team took into account the error rates of today’s quantum machines, the estimated number of qubits rose into the billions.
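Why error rates inflate qubit counts so dramatically can be sketched with a rule of thumb often quoted for the surface code, a leading error-correction scheme: the logical error rate falls roughly as A·(p/p_th)^((d+1)/2), where p is the physical error rate, p_th the threshold and d the code distance, while each logical qubit costs about 2d² physical qubits. The values A = 0.1 and p_th = 1%, the target logical error rate and the rotated-layout count 2d² − 1 used below are all assumptions, and the sketch does not attempt to reproduce the Microsoft-ETH estimate. Function names are hypothetical.

```python
def required_distance(p: float, target: float,
                      p_th: float = 1e-2, prefactor: float = 0.1) -> int:
    """Smallest odd surface-code distance d meeting a target logical error rate.

    Uses the rule of thumb  p_L ~ prefactor * (p / p_th) ** ((d + 1) / 2);
    the default threshold and prefactor are assumed, not measured, values.
    """
    if not 0 < p < p_th:
        raise ValueError("physical error rate must be positive and below threshold")
    d = 3
    while prefactor * (p / p_th) ** ((d + 1) / 2) > target:
        d += 2  # surface-code distances are conventionally odd
    return d

def physical_per_logical(d: int) -> int:
    """d*d data qubits plus d*d - 1 measurement qubits (assumes the rotated layout)."""
    return 2 * d * d - 1

for p in (1e-3, 1e-4):
    d = required_distance(p, target=5e-13)
    print(f"p = {p:g}: distance {d}, "
          f"{physical_per_logical(d)} physical qubits per logical qubit")
```

Under these assumptions, a target logical error rate of 5 × 10⁻¹³ needs d = 23 (1,057 physical qubits per logical qubit) at p = 10⁻³, but only d = 11 (241) at p = 10⁻⁴. Improving each physical qubit tenfold cuts the overhead by a factor of about four.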

Reducing qubit numbers to something more manageable is a key priority for Steve Brierley. In 2017 Brierley, a mathematician at the University of Cambridge, UK, founded a company called Riverlane to develop quantum software, including error correction. Today, with £3.25m in seed funding from venture capital firms Cambridge Innovation Capital and Amadeus Capital Partners under his belt, Brierley is optimistic. “We now think we can do [the nitrogenase simulation] with millions of qubits, and soon we hope to do it with hundreds of thousands,” he says. “And that’s because of improvements in software, not hardware.”

Unlike Q-CTRL, Riverlane is developing software aimed at potential users of quantum computers, rather than the manufacturers of those computers. Although early applications are likely to be research-oriented – Brierley mentions the simulation of new, high-performance materials as well as problems in industrial chemistry – he chose to pursue them outside the university environment because it is easier to assemble a diverse range of expertise outside the silos of academia. In a university, he says, “You don’t typically see a physicist, a computational chemist, a mathematician and a computer scientist in the same room.” Then he laughs. “It sounds like the beginning of a joke.”

There is another reason Brierley chose to develop software for quantum computing outside academia. In a company, he explains, you are forced to focus on the problem at hand, rather than being distracted by tangential lines of research that could make an interesting paper. As a result, he says, “if we do our job well, we’ll get there in five years rather than 10.”

Brierley’s estimate, however, raises a question: if even the optimists think applications are five years away, why form a company now? In his answer, Brierley points out that there are precedents for companies founded before the technology they hope to profit from is ready. The US animation firm Pixar, for instance, was created in 1986, well before it was possible to make feature-length computer-animated films. Riverlane’s current clients, Brierley says, are “early movers” who want to understand how quantum computing will impact their field.

A quantum hype cycle?

Quite when that impact will happen is a matter of debate, and some observers think its imminence has been overstated. In 2017, scientists at Google, one of the biggest and most vocal quantum-computing developers, claimed that their universal quantum processor, which was then based on 49 qubits, stood a chance of demonstrating quantum supremacy by the end of that year. It didn’t happen. The following year, they repeated the prediction for their newer, 72-qubit computer. Again, it didn’t happen. Now they are pinning their hopes on what remains of 2019. In June, Hartmut Neven, engineering director of Google’s Quantum Artificial Intelligence lab, declared, “The writing is on the wall.”

Shortly before this article was published, a report from Google suggested that the US computing firm had finally made good on its promise. However, the name “quantum supremacy” can be misleading. To achieve it, a quantum computer would have to outperform the world’s most powerful classical supercomputer, which is currently IBM’s Summit at Oak Ridge National Laboratory in Tennessee, US. Technically, though, this milestone could be reached by running an algorithm that was specifically designed to exploit the rift between quantum and classical computing, and that had no practical use – as appears to be the case with Google’s result. Yudong Cao, a computer scientist and co-founder of Zapata Computing in Massachusetts, US, notes that there are other complicating factors in the debate. What if a quantum computer beats the most powerful classical computer, but then another, more powerful classical computer comes along? What if the quantum computer is fractionally faster, but the classical machine is more economical to use?

Despite questions surrounding the different “flavours of quantum usefulness”, as Cao puts it, he believes that some version of it could be just a few years away. “Eventually, we will get to a stage where we can say there is sufficient scientific evidence to say that a certain [quantum computation] has practical relevance, and is beyond anything a classical computer could do,” he says.

Cao’s company was founded on such optimism. Zapata is targeting customers who believe they have problems that could be addressed by “near term” quantum computers – ones that operate with more than 100 high-quality qubits. In a typical case, says Cao, a customer will come to Zapata with the problem they are working on, and the people at Zapata will screen it to see which aspects have solutions obtainable with classical computing, and which with quantum. If part of the problem needs a quantum computer, the question then is whether that part is within the capacity of systems likely to be available in the next two to five years. Only a “small subset” of quantum-type problems fit into this category, Cao says.

For problems where the prospects are good, Zapata aims to create an operating system that will enable customers to run simulations. This operating system will automatically separate the simulation into its quantum and classical components, but it will only interact with the customer in a higher-level, classical language. Hence, the customer will not need to bother with the intricacies of qubits and quantum behaviour to simulate their problems.
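The article does not describe how Zapata’s software splits a simulation. As a hypothetical illustration of the general idea, the sketch below follows the widely used variational pattern: a classical optimizer repeatedly calls a small quantum subroutine and never deals with qubits itself. Here the “quantum” step is replaced by its exact value, the expectation ⟨Z⟩ = cos θ for a single qubit prepared as Ry(θ)|0⟩, and the gradient comes from the parameter-shift rule. All names and settings are assumptions.

```python
import math

def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: <Z> for the state Ry(theta)|0>.

    On hardware this number would be estimated from repeated measurements;
    here the exact value cos(theta) is used instead.
    """
    return math.cos(theta)

def parameter_shift_gradient(f, theta: float) -> float:
    """Gradient of f via the parameter-shift rule for a single-qubit rotation."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2

def minimize(f, theta: float = 0.1, learning_rate: float = 0.4,
             steps: int = 200) -> float:
    """Classical gradient-descent loop that only ever calls the routine f."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta

best = minimize(quantum_expectation)
print(best, quantum_expectation(best))
```

Starting from θ = 0.1, the loop drives θ towards π, where ⟨Z⟩ reaches its minimum of −1. Only `quantum_expectation` would need to run on quantum hardware; everything else is classical code.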

According to Cao, Zapata has “quite a few” customers already, at various stages of development. “Some have ongoing projects, while others are in the process of being screened to see what aspects of their problem could be suitable,” he explains. Like other quantum-computing entrepreneurs, he declines to discuss specific problems he and his colleagues are working on.

Past precedent

Commercially driven secrecy is one reason why it is impossible to say when the quantum-computing revolution will truly arrive, and to what degree software will hasten it. The website quantumcomputingreport.com lists more than 100 private start-ups, focusing on applications in areas including finance, logistics, video rendering, drug research and cyber security. The numbers suggest that demand is high, and Cao thinks the industry is at a similar stage to the classical-computing industry in the 1940s. Then, too, there were many ideas, but the best approaches were still far from being established. Some early computers were based on valves, for instance, while others were based on electromechanical switches. “In the same way, the programmers had to be very mindful of the limitations of the computers,” Cao says. “They had to be careful how to allocate memory, they had to pre-process the problem as much as they could, and send only the most tedious parts to the machine.”

If the comparison between quantum and classical computing is valid, it is worth pointing out how long it took for classical computing to really establish itself. After the transistor was invented in 1947, nearly 25 years passed before the first microprocessors hit the shelves. But Cao thinks that researchers in quantum computing have a distinct advantage over their predecessors. “Computational complexity theory did not exist. Numerical linear algebra did not exist. Cloud computing did not exist,” he says. “We have a lot more ammunition we can throw at the challenge.”