Brain–computer interfaces (BCIs) enable the flow of information between the brain and an external device such as a computer, smartphone or robotic limb. Applications range from use in augmented and virtual reality (AR and VR), to restoring function to people with neurological disorders or injuries.

Electroencephalography (EEG)-based BCIs use sensors on the scalp to noninvasively record electrical signals from the brain and decode them to determine the user’s intent. Currently, however, such BCIs require bulky, rigid sensors that prevent use during movement and don’t work well with hair on the scalp, which affects the skin–electrode impedance. A team headed up at Georgia Tech’s WISH Center has overcome these limitations by creating a brain sensor that’s small enough to fit between strands of hair and is stable even while the user is moving.

“This BCI system can find wide applications. For example, we can realize a text spelling interface for people who can’t speak,” says W Hong Yeo, Harris Saunders Jr Professor at Georgia Tech and director of the WISH Center, who co-led the project with Tae June Kang from Inha University in Korea. “For people who have movement issues, this BCI system can offer connectivity with human augmentation devices, a wearable exoskeleton, for example. Then, using their brain signals, we can detect the user’s intentions to control the wearable system.”

A tiny device

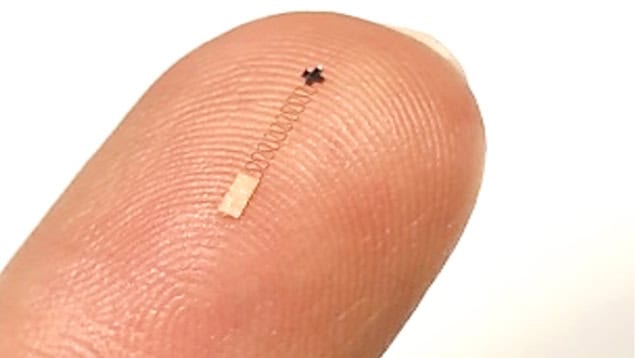

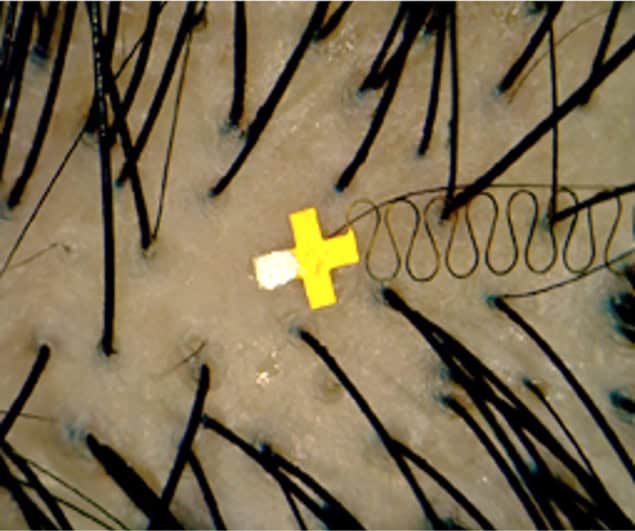

The microscale brain sensor comprises a cross-shaped structure of five microneedle electrodes, with sharp tips (less than 30°) that penetrate the skin easily with nearly pain-free insertion. The researchers used UV replica moulding to create the array, followed by femtosecond laser cutting to shape it to the required dimensions – just 850 × 1000 µm – to fit into the space between hair follicles. They then coated the microsensor with a highly conductive polymer (PEDOT:Tos) to enhance its electrical conductivity.

The microneedles capture electrical signals from the brain and transmit them along ultrathin serpentine wires that connect to a miniaturized electronics system on the back of the neck. The serpentine interconnector stretches as the skin moves, isolating the microsensor from external vibrations and preventing motion artefacts. The miniaturized circuits then wirelessly transmit the recorded signals to an external system (AR glasses, for example) for processing and classification.

Yeo and colleagues tested the performance of the BCI using three microsensors inserted into the scalp over the occipital lobe (the brain’s visual processing centre). The BCI exhibited excellent stability, offering high-quality measurement of neural signals – steady-state visual evoked potentials (SSVEPs) – for up to 12 h, while maintaining low contact impedance density (0.03 kΩ/cm²).

The team also compared the quality of EEG signals measured using the microsensor-based BCI with those obtained from conventional gold-cup electrodes. Participants wearing both sensor types closed and opened their eyes while standing, walking or running.

With the participant standing still, both electrode types recorded stable EEG signals, with an increased amplitude upon closing the eyes, due to the rise in alpha wave power. During motion, however, the EEG time series recorded with the conventional electrodes showed noticeable fluctuations. The microsensor measurements, on the other hand, exhibited minimal fluctuations while walking and significantly fewer fluctuations than the gold-cup electrodes while running.

Overall, the alpha wave power recorded by the microsensors during eye-closing was higher than that recorded by the conventional electrodes, which could not accurately capture EEG signals while the user was running. The microsensors exhibited only minor motion artefacts, with little to no impact on the EEG signals in the alpha band, allowing reliable data extraction even during excessive motion.
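To make the eyes-open versus eyes-closed comparison concrete, here is a minimal sketch of how alpha-band power might be estimated from a single EEG channel. It is not the researchers' analysis pipeline: the 8–13 Hz band limits, the 250 Hz sampling rate, the `band_power` helper and the synthetic signals are all assumptions chosen for illustration.

```python
import numpy as np

def band_power(signal, fs, f_lo=8.0, f_hi=13.0):
    """Mean spectral power of `signal` between f_lo and f_hi (in Hz).

    The 8-13 Hz defaults are a common convention for the alpha band,
    not values taken from the study.
    """
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()              # remove DC offset
    windowed = signal * np.hanning(signal.size)  # reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(windowed)) ** 2
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return spectrum[mask].mean()

# Synthetic stand-ins for real recordings (assumed, not measured data):
# background noise plus a 10 Hz rhythm that grows when the eyes close.
fs = 250                                  # assumed sampling rate in Hz
t = np.arange(0, 4.0, 1.0 / fs)           # 4 s of samples
rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1.0, t.size)
eyes_open = noise + 0.5 * np.sin(2 * np.pi * 10 * t)
eyes_closed = noise + 3.0 * np.sin(2 * np.pi * 10 * t)

alpha_open = band_power(eyes_open, fs)
alpha_closed = band_power(eyes_closed, fs)
print("alpha power rises on eye closing:", alpha_closed > alpha_open)
```

On real data, a motion artefact that leaks into the 8–13 Hz range would inflate this estimate regardless of eye state, which is why a sensor that keeps the alpha band clean during walking and running matters.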

Real-world scenario

Next, the team showed how the BCI could be used within everyday activities – such as making calls or controlling external devices – that require a series of decisions. The BCI enables a user to make these decisions using their thoughts, without needing physical input such as a keyboard, mouse or touchscreen. And the new microsensors free the user from environmental and movement constraints.

The researchers demonstrated this approach in six subjects wearing AR glasses and a microsensor-based EEG monitoring system. They performed experiments with the subjects standing, walking or running on a treadmill, with two distinct visual stimuli from the AR system used to induce SSVEP responses. Using a train-free SSVEP classification algorithm, the BCI determined which stimulus the subject was looking at with a classification accuracy of 99.2%, 97.5% and 92.5%, while standing, walking and running, respectively.
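The article does not say which training-free algorithm the team used. One widely used approach of this kind is canonical correlation analysis (CCA), which compares the multichannel EEG with sine and cosine templates at each candidate flicker frequency and picks the best match, with no per-user calibration. The sketch below illustrates that idea only; the stimulus frequencies (10 Hz and 15 Hz), the sampling rate, the function names and the synthetic data are all assumptions.

```python
import numpy as np

def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine templates at `freq` and its harmonics, one per column."""
    t = np.arange(n_samples) / fs
    refs = []
    for h in range(1, n_harmonics + 1):
        refs.append(np.sin(2 * np.pi * h * freq * t))
        refs.append(np.cos(2 * np.pi * h * freq * t))
    return np.column_stack(refs)

def max_canonical_corr(X, Y):
    """Largest canonical correlation between the columns of X and Y."""
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    qx, _ = np.linalg.qr(X)   # orthonormal basis for the EEG channels
    qy, _ = np.linalg.qr(Y)   # orthonormal basis for the templates
    s = np.linalg.svd(qx.T @ qy, compute_uv=False)
    return float(np.clip(s[0], 0.0, 1.0))

def classify_ssvep(eeg, fs, candidate_freqs):
    """Return the candidate frequency whose templates best match `eeg`.

    `eeg` has shape (n_samples, n_channels).
    """
    scores = [
        max_canonical_corr(eeg, reference_signals(f, fs, eeg.shape[0]))
        for f in candidate_freqs
    ]
    return candidate_freqs[int(np.argmax(scores))], scores

# Synthetic three-channel recording (assumed data): the user is taken
# to be looking at a hypothetical 15 Hz stimulus.
fs = 250                                  # assumed sampling rate in Hz
n_samples = 2 * fs                        # 2 s analysis window
t = np.arange(n_samples) / fs
rng = np.random.default_rng(1)
eeg = np.column_stack([
    np.sin(2 * np.pi * 15 * t + phase) + rng.normal(0.0, 1.0, n_samples)
    for phase in (0.0, 0.4, 0.8)
])

candidate_freqs = [10.0, 15.0]            # hypothetical stimulus frequencies
predicted, scores = classify_ssvep(eeg, fs, candidate_freqs)
print("predicted stimulus frequency (Hz):", predicted)
```

Because the templates are generated from the stimulus frequencies alone, a classifier like this can run on a new user immediately, which suits a wearable system intended for everyday use.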

The team also developed an AR-based video call system controlled by EEG, which allows users to manage video calls (rejecting, answering and ending) with their thoughts. They demonstrated its use in scenarios such as ascending and descending stairs and navigating hallways.

“By combining BCI and AR, this system advances communication technology, offering a preview of the future of digital interactions,” the researchers write. “Additionally, this system could greatly benefit individuals with mobility or dexterity challenges, allowing them to utilize video calling features without physical manipulation.”

The microsensor-based BCI is described in Proceedings of the National Academy of Sciences.