Placing numerical scores on research by UK universities has always been a controversial task, and the new system of “quality profiles” used to evaluate departments in the 2008 Research Assessment Exercise (RAE) should keep number-crunchers busy through the holidays and beyond.

While previous RAEs ranked departments using single numbers on a seven-point scale, this year’s results, released on 18 December, instead give the percentage of research activity rated at each of five levels, from a “world-leading” 4* to an unclassified “below standard.” Without a single number, it is harder to distinguish RAE “winners” at a glance. However, a close examination of the physics results reveals a few surprises.

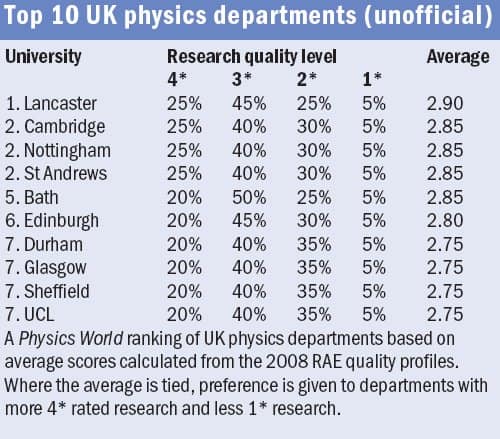

An unofficial Physics World ranking that lists departments according to their average research score shows Lancaster on top and Cambridge close behind. Both departments also received the maximum 5* rating in the last RAE in 2001, but the other 5* departments — Oxford, Southampton and Imperial College London — fell outside the top 10 this time round.

“The scores were very close, and there is not a lot of difference between the top few,” says Peter Ratoff, head of physics at Lancaster. Still, he adds, “our entire department is really pleased with the results.”

“We as a department are delighted with the outcome,” says Andrew Mackenzie, director of research in St Andrews’ physics department, which tied with Cambridge and Nottingham for the second-place average score. “Personally, I am a poignant combination of delighted and relieved.”

Missing out

Another indicator is the percentage of activity deemed “international quality,” a term that encompasses grades 2* and above. Within physics, 17 of the 42 departments (including Southampton, but not Oxford or Imperial College) had at least 95% of their activity at this benchmark. Oxford and Imperial both produced high percentages of 4* research but also had 10% of their activity judged only “national quality.”
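The article does not spell out how the unofficial average score was calculated. One common approach, assumed here, is a weighted mean in which 4* counts as 4, 3* as 3, 2* as 2, 1* as 1 and unclassified as 0. The sketch below applies that assumption, together with the 2*-and-above share described above, to three hypothetical profiles (the department names and percentages are invented for illustration and are not real RAE results):

```python
# Sketch: summarising an RAE-style quality profile with two single numbers.
# ASSUMPTION: the "average score" is a weighted mean in which 4* counts as 4,
# 3* as 3, 2* as 2, 1* as 1 and unclassified as 0. The profiles below are
# hypothetical and are NOT real 2008 RAE results.


def average_score(profile: dict[int, float]) -> float:
    """Weighted mean star level; profile maps level (0-4) to % of activity."""
    total = sum(profile.values())
    if abs(total - 100) > 1e-9:
        raise ValueError(f"profile percentages sum to {total}, not 100")
    return sum(level * pct for level, pct in profile.items()) / 100


def international_share(profile: dict[int, float]) -> float:
    """Percentage of activity rated 2* or above ("international quality")."""
    return sum(pct for level, pct in profile.items() if level >= 2)


# Hypothetical departments (levels: 4 = 4*, ..., 1 = 1*, 0 = unclassified).
profiles = {
    "Dept A": {4: 25, 3: 45, 2: 25, 1: 5, 0: 0},
    "Dept B": {4: 35, 3: 35, 2: 20, 1: 10, 0: 0},
    "Dept C": {4: 20, 3: 50, 2: 30, 1: 0, 0: 0},
}

# Rank by average score, highest first (sorted() is stable, so ties keep
# their original order).
ranking = sorted(profiles, key=lambda d: average_score(profiles[d]), reverse=True)
for dept in ranking:
    p = profiles[dept]
    print(f"{dept}: average {average_score(p):.2f}, "
          f"2*+ share {international_share(p):.0f}%")
```

In this made-up example, Dept B tops the average-score ranking with 2.95 yet has the smallest share of activity at 2* or above (90%), while Dept C has 100% at that level but only ties for second place. The two indicators can pull in different directions, which is the tension the figures for Oxford and Imperial illustrate.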

“It is difficult to comment on the results because there were so many variables,” says an Oxford University spokesperson. In league tables that factor in the number of academics submitted for evaluation, she notes, “Oxford physics has done extremely well.”

Switching to a more finely graded RAE was a key recommendation of a review carried out in 2003 by physicist Gareth Roberts. Previous exercises drew criticism for creating a “cliff edge” effect, as departments that narrowly missed a particular score received much less funding from the regional funding councils than their marginally better peers. David Eastwood, chief executive of the Higher Education Funding Council for England, which co-sponsors the RAE along with equivalent bodies in other UK regions, notes that the profile system lets members of the evaluating panels “exercise finer degrees of judgement” on research quality.

The raw data do not, however, distinguish between departments that have made improvements in research quality since the 2001 RAE and others that may have benefited from the change in evaluation method. Jonathan Knight, head of physics at Bath University, notes that after receiving a grade of 4 in 2001, his department “radically revised” its approach to research, in part by building larger groups and encouraging interaction between members. However, he adds that “we must have been a borderline case last time,” and the single-number system did not reflect that.


As for the crucial funding question, Tim Morris, head of physics and astronomy at Southampton, says that “with such close clustering in the top third of the table, it seems the only fair way to divide funding between departments would be to make it proportionate to the number of researchers”. An announcement on funding allocations based on the RAE results is not expected until March.

Comparing results across exercises will be complicated further by the fact that the 2008 RAE was the last of its type. Its replacement, the Research Excellence Framework (REF), will include quantitative information such as bibliometric data alongside expert opinion in evaluating research quality.