If you try to do an experiment or a calculation and it doesn’t work out, should you tell other researchers about it? Or just move on to something more promising as quickly as you can? Philip Ball explores the pros and cons of publicizing “null results” in science.

In 1887 Albert Michelson and Edward Morley performed an experiment to detect the influence of the ether – the medium in which light waves were thought to travel – on the speed of light. They reasoned that because the Earth was moving through the stationary ether, the speed of light would be different in mutually perpendicular directions. That difference, although probably tiny, should show up when light waves travel out and back along two perpendicular arms and then interfere where they recombine.
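
The size of the expected signal can be estimated with a short textbook-style calculation. This is only a sketch under the classical ether assumptions described above, not a reconstruction of Michelson and Morley’s own analysis, and the symbols are introduced here for illustration: $L$ is the length of each arm, $v$ the speed of the apparatus through the ether, $c$ the speed of light and $\lambda$ its wavelength. The round-trip times along the arm parallel to the motion and the arm perpendicular to it would be

$$
t_\parallel = \frac{L}{c-v} + \frac{L}{c+v} = \frac{2L/c}{1 - v^2/c^2},
\qquad
t_\perp = \frac{2L/c}{\sqrt{1 - v^2/c^2}} .
$$

Expanding to lowest order in $v^2/c^2$ gives the time difference, and rotating the apparatus through 90° (which swaps the roles of the two arms) doubles the effect, so the interference pattern should shift by roughly

$$
\Delta t = t_\parallel - t_\perp \approx \frac{L v^2}{c^3},
\qquad
\Delta N \approx \frac{2c\,\Delta t}{\lambda} = \frac{2 L v^2}{\lambda c^2}
$$

fringes. If $v$ is taken to be the Earth’s orbital speed of about 30 km/s, then $v^2/c^2 \approx 10^{-8}$, which is why the predicted difference was tiny and why an interferometer was needed to look for it.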

What Michelson and Morley found, instead, was probably the most celebrated “null result” in physics: there was no discernible difference in the speed of light in any direction. This failure to observe the expected result went unexplained for nearly two decades, until Albert Einstein’s 1905 special theory of relativity showed that the ether was not, in fact, required to understand the properties of light.

Whether the Michelson–Morley null result directly motivated special relativity is still disputed, but in any event it established a context for Einstein’s revolutionary idea. It also demonstrates why null results can be as significant as positive discoveries. Indeed, certain areas of physics are full of important null results. Might some of the fundamental constants of nature, such as the gravitational constant or the fine-structure constant, actually vary over time? Might the proton decay very slowly, as some theories beyond the Standard Model of particle physics predict? Could there be a “fifth force” that modifies gravity in a material-dependent way? Might dark matter consist of weakly interacting massive particles? Exquisitely sensitive experiments and observations have so far failed to find any evidence for these things. Yet we still keep looking, and null results place ever tighter limits on what is possible.

In other areas of physics, though, an awful lot of null results never see the light of day. If you do an experiment to look for a predicted but not terribly earth-shaking effect – a new crystal phase of a material, say – and you fail to find it, who is going to be interested? Which journal is going to want a paper saying “We thought we might see this wrinkle, but we didn’t”?

Some researchers feel this is as it should be. Isn’t there, after all, enough literature to wade through (and to referee) without also having to worry about things that proved not to be so? Others, however, think that null results are vital to the way science proceeds, and that their worth needs to be recognized and respected – perhaps in journals dedicated to that purpose. The debate between these two sides has produced a few intriguing possible solutions, while also revealing some deep disagreements about how best to do – and fund – scientific research in the modern era.

The value of nothing

Alexander Lvovsky is someone who believes there are reasons to accentuate the negative. A quantum physicist at the University of Calgary in Canada, he argues that in his field many groups wind up working on similar (even identical) problems, and so publishing a negative result would make research more efficient by removing dead ends quickly.

A study published in the Proceedings of the National Academy of Sciences last November provides some support for this view. In it, geneticist Andrey Rzhetsky of the University of Chicago, US, and colleagues attempted to gauge the efficiency of the scientific process of discovery by analysing how researchers select the problems they work on. Using biochemistry papers listed on the MEDLINE database from 1976 to 2010, they created a map, or network, in which the nodes were scientific concepts (in this case the specific molecules being studied) and the edges were the relationships between them (such as physical interactions or shared clinical relevance). Hence, research on molecules that are close together in this network is probably exploring tried and tested types of interaction, while links between distant nodes represent more innovative, perhaps speculative investigations.
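
The idea of “distance” in such a network can be made concrete with a small sketch. The code below is not the method used by Rzhetsky and colleagues; it is a minimal illustration that assumes an unweighted network and measures distance as the number of links on the shortest path between two concepts. The function name `network_distance` and the toy node labels are hypothetical.

```python
from collections import deque


def network_distance(edges, start, goal):
    """Return the number of links on the shortest path between two nodes.

    `edges` is a list of (node, node) pairs describing an undirected,
    unweighted network. Returns None if either node is missing or if
    no path connects them.
    """
    # Build an adjacency map from the list of links.
    neighbours = {}
    for a, b in edges:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)

    if start not in neighbours or goal not in neighbours:
        return None

    # Breadth-first search visits nodes in order of increasing distance.
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in neighbours[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Hypothetical toy network: the labels stand in for molecules and the
# links for known relationships. None of this is real data.
toy_edges = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E")]

close_pair = network_distance(toy_edges, "A", "B")    # neighbouring concepts
distant_pair = network_distance(toy_edges, "A", "E")  # far-apart concepts
```

In this picture, a study linking “A” and “B” sits in well-explored territory, whereas one linking “A” and “E” bridges distant parts of the map and would count as the more speculative kind of investigation.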

The network structure that Rzhetsky and colleagues uncovered suggests a rather conservative research strategy, in which individual researchers focus on “extracting further value from well-explored regions of the knowledge network”. They also found that this strategy has become more conservative over the years, slowing the pace of discovery. Scientific research, they argue, would be more efficient if it were more co-ordinated: for example, if scientists published all findings, positive and negative, to avoid repeating experiments.

The trend towards conservatism in research is very much in line with what Lvovsky sees as a likely outcome of a bias towards positive results. Combined with a “publish or perish” mentality, he says, this bias “encourages scientists not to engage in hard, challenging scientific problems, but to concentrate on those which are guaranteed to yield a [positive] result”. This problem, he says, “affects primarily young scientists who need to make a career”. Lvovsky reckons this is happening in his own field of quantum optics, where he says it has become common to “publish experiments whose result is known in advance, simply by giving them an exciting interpretation”.

A bias towards exciting results is perhaps understandable, but Jian Wu of the East China Normal University in Shanghai argues that null results needn’t be boring. “Sometimes a result is very interesting even if it is not the expected one and cannot be understood with available theories or models,” Wu says. Even so, he adds, “It is hard for such a null or negative result to be published in general journals, which are mainly looking for significant advances.” That bias can discourage further exploration of unexpected and unexplained findings.

Perhaps one of the most compelling reasons to publish null results is simple honesty. Although Lvovsky acknowledges that “it is the positive results that take science and technology forward”, everyone knows that science isn’t the endless succession of triumphs found in the literature. To pretend otherwise, Lvovsky says, “distorts the scientific truth”. Not only do “failures” predominate; they are also an important part of the record. Brian Nosek, a psychologist at the University of Virginia, US, who specializes in the cognitive biases that can manifest themselves in the conduct and reporting of science, notes that if people never hear about failures to reproduce an effect, they will assume it’s well established.

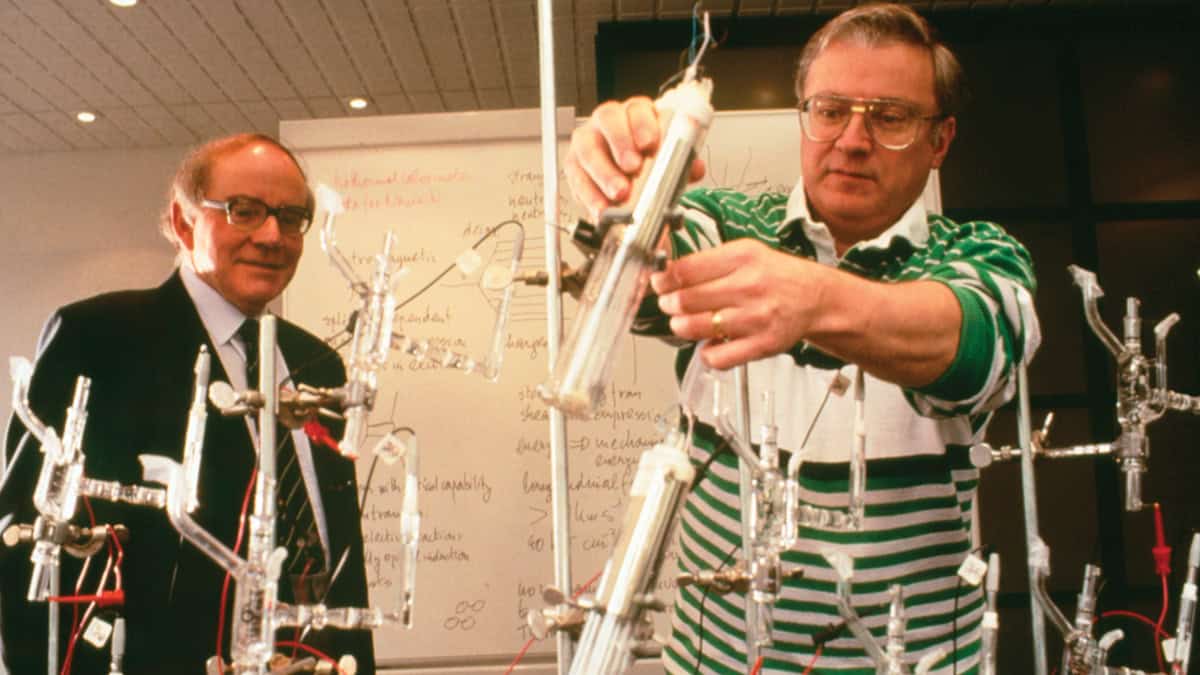

This skewing of the literature may, Lvovsky says, have played a role in some notorious incidents of false claims in physics. Faked nanotechnology results published by the then-Bell Labs researcher Jan Hendrik Schön in the late 1990s took years to unravel. Similarly, after the electrochemists Martin Fleischmann and Stanley Pons announced in 1989 that they had achieved “benchtop nuclear fusion” during electrolysis of heavy water – an announcement soon backed up with a sketchy paper published in the Journal of Electroanalytical Chemistry – there was a flood of apparent confirmations of the result. But it took much longer for carefully documented null results to emerge. Some of the most thorough and convincing were described in long papers in Nature, which led eventually to a general disavowal of the “cold fusion” claimed by Fleischmann and Pons. By that stage a large amount of time and money had been spent on a chimera.

Other ways of saying ‘no’

That null results like those investigating cold fusion were published in a top journal shows just how unusual that episode was. Usually, such journals insist on positive findings that more obviously seem to advance what we know. Might, then, the best place for null results be in journals dedicated to them?

“The difficulty of a null-result journal is the review procedure – how to decide which result is worth publishing,” Wu says. It’s extremely difficult for an editor or referee to judge the quality of a paper reporting a null result, he adds, because there could be so many reasons (including bad technique) why nothing was seen. Yet Nosek questions whether this problem should afflict null-result papers more than others. “Null results can be obtained because of incompetent execution of the research protocol, but so can positive results,” he says.

Perhaps more problematic is the status of such a journal. “It may have trouble succeeding, because if the journal is defined as publishing what no other journal will publish then it is defining itself as a low-status outlet,” says Nosek. That’s not a foregone conclusion, though. Lvovsky suggests that a null-result journal “must require that the accepted articles, rather than just reporting failures, demonstrate that failure derives not simply from a simple lack of prowess but from substantial scientific or technical reasons”. In other words, the reasons for the failure must be provably identified.

That, however, may be easier said than done. “Finding a true null in an experiment is very difficult to do, as one has to effectively limit all possible outcomes that might produce a zero,” says atomic physicist Andrew Murray of the University of Manchester, UK. “These can include the possibility that we measured the wrong thing, noise, something broken in the experiment, or some other artefact might lead to us not seeing anything. It is far easier to measure a finite quantity than a true zero in almost every experiment I know of.”

Given that challenge, the very notion that one can obtain a definitive null result could propagate a false idea that science is black and white, Murray adds. “Politicians and many policy-makers who are not scientists often make statements and draw conclusions without considering that there must be uncertainties in any measurement,” he says. “The existence of a journal that states that one can obtain a definitive null could imply that such precision measurements are possible on a general scale. I think that’s a dangerous precedent to set.”

All the same, null-result journals do exist, such as the Journal of Negative Results in BioMedicine. Interestingly, that publication also provides an outlet for “unexpected, controversial and provocative results” – an implicit acknowledgement, perhaps, that those are the kinds of findings for which null results matter most. Last year the science journal PLOS ONE, which has no editorial selection criteria beyond the technical competence of submitted papers, started collating its negative results in a collection called “Missing Pieces”.

It’s surely no coincidence that these initiatives tend to be in the life sciences. Null results have received more attention there because of the vested interests that may accompany and even induce publication biases. Pharmaceutical companies, for example, might publish positive findings of drug trials but not negative ones. It’s for such reasons that Nosek has proposed an alternative to the regular publication of null results: peer-reviewed declarations of the objectives of an experiment before the data are collected (https://osf.io/8mpji/wiki/home). In these “registered reports”, Nosek explains, “The question and methods of the study are reviewed, and then if they pass review, the outcomes will be published regardless of whether they are positive or negative.” This procedure would protect against publication bias and improve research design, while also “focus[ing] research incentives on conducting the best possible experiments rather than getting the most beautiful possible results”. About two dozen (primarily life-sciences) journals are already offering registered reports as a submission option, he says.

What about the complaint that null results would just add more literature through which researchers have to wade? “There is already too much information,” says Nosek. “For an individual, the amount of science being produced is effectively infinite.” But the answer, he says, is not to suppress negative data, but “to improve search and discovery of information that is relevant and useful”.

Null results can also be released and discussed along less formal routes – as happened in 2011 with the alleged detection, made by researchers in the Italy-based OPERA collaboration, of neutrinos travelling faster than light. This was an extraordinary “positive” claim, revolutionary if true – and so understandably it stimulated several follow-up studies that rapidly disproved the idea. These studies were disseminated via the arXiv preprint server and through informal personal networks, and in the view of some physicists, they turned what could have been an embarrassment for the discipline into a demonstration of good, efficient scientific method. If that testing had relied on the usual peer-reviewed channels, says Nosek, it could have taken years.

No-one, then, seems to doubt the potential value of null results. The chief difficulty lies in establishing effective channels for communicating them. If we can find a way of doing that, we may find that discovering nothing is a vital part of discovering anything.