Physicists in Germany are the first to flip individual atomic spins in an optical lattice. The researchers, who are based at the Max Planck Institute for Quantum Optics in Garching, used a combination of laser light and microwaves to address individual rubidium atoms arranged in a state known as a “Mott insulator”. Their method could be used for making quantum computers and also for simulating the behaviour of electrons in solids – especially superconductors.

This newfound ability is just the latest example of the progress that physicists have made in understanding quantum interactions by studying ultracold atoms in optical lattices of crisscrossing laser beams. By adjusting the laser light and applied magnetic fields, scientists can “tune” the interactions between atoms and simulate the behaviour of electrons in crystalline solids. Although an atom in an optical lattice can normally tunnel from one lattice site to a neighbouring site, in a Mott insulator all the sites are occupied, which means that the energy cost of tunnelling is too great and the atoms are frozen in place.

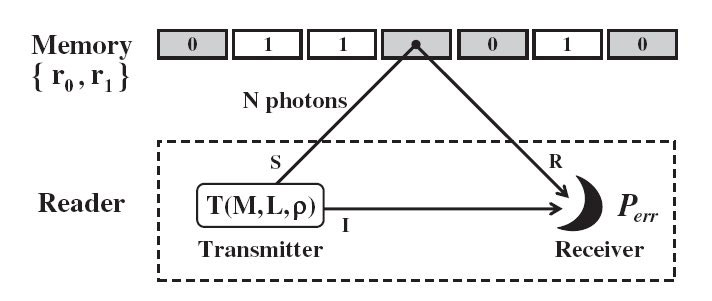

Each of these frozen atoms, however, could make an excellent quantum bit (qubit) in a quantum memory because it is highly isolated from the surrounding environment. And as each atom has a magnetic spin, optical Mott insulators could be used to simulate the effect of spin on electronic properties such as conduction. Until now, though, physicists had been unable to adjust the value of individual spins, limiting the usefulness of optical Mott insulators.

Flipping a spin

What Stefan Kuhr, Immanuel Bloch and colleagues have now done is to devise a way of flipping the spin of an individual atom without affecting the rest of the lattice. The team began with a cloud of about one billion rubidium-87 atoms, which were cooled to less than 100 nK. As this process involves atoms continually leaving the cloud, the team was left with just a few hundred individual ultracold atoms. The crisscrossing lasers were then switched on to create a 2D square lattice and the parameters of the lattice were tweaked to transform the system from a conducting superfluid to a Mott insulator with a lattice spacing of 532 nm.

All of the atoms in the lattice are initially in the “0” spin state. To flip the spin of one atom, the team first illuminates that atom with laser light. The beam is tightly focused so that nearly all of the light falls on one lattice site, where it modifies the energy difference between the “0” and “1” spin states of that atom alone. The entire lattice is then bathed in microwaves tuned to the modified energy difference, which flips the spin of the illuminated atom but no others.
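The selectivity of this scheme can be illustrated with a rough two-level model. The sketch below assumes a Gaussian addressing beam and a fixed-frequency microwave pulse described by the textbook Rabi formula; it is not necessarily the pulse scheme the team used. Only the 532 nm lattice spacing comes from the experiment. The peak shift, beam waist and Rabi frequency, along with the function names, are hypothetical values chosen for illustration.

```python
import math

LATTICE_SPACING_NM = 532.0  # lattice spacing quoted in the article

# Illustrative assumptions, not values reported by the team.
PEAK_SHIFT_KHZ = 70.0   # shift of the 0-1 transition at the beam centre
BEAM_WAIST_NM = 600.0   # 1/e^2 radius of the addressing beam
RABI_KHZ = 5.0          # microwave Rabi frequency


def light_shift_khz(distance_nm):
    """Transition shift felt by an atom at a given distance from the beam centre."""
    return PEAK_SHIFT_KHZ * math.exp(-2.0 * distance_nm**2 / BEAM_WAIST_NM**2)


def flip_probability(detuning_khz):
    """Rabi formula for a pulse that would be a pi pulse on resonance."""
    omega = 2.0 * math.pi * RABI_KHZ
    delta = 2.0 * math.pi * detuning_khz
    omega_eff = math.hypot(omega, delta)
    pulse_time = math.pi / omega
    return (omega / omega_eff) ** 2 * math.sin(omega_eff * pulse_time / 2.0) ** 2


def addressed_flip_probability(distance_nm):
    """Flip probability when the microwaves are tuned to the target's shifted line."""
    detuning = light_shift_khz(distance_nm) - PEAK_SHIFT_KHZ
    return flip_probability(detuning)


p_target = addressed_flip_probability(0.0)
p_neighbour = addressed_flip_probability(LATTICE_SPACING_NM)
print(f"target: {p_target:.3f}, nearest neighbour: {p_neighbour:.3f}")
```

With these assumed numbers the illuminated atom is on resonance and flips, while an atom one lattice site away is detuned by many times the Rabi frequency and is almost unaffected. A broader beam or a stronger microwave drive would erode that selectivity.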

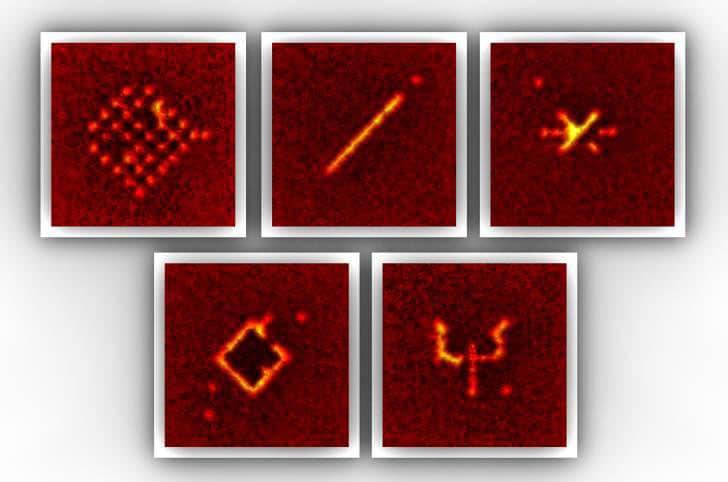

The process can then be repeated at different lattice sites and the team managed to write several patterns in the Mott insulator, including the Greek letter ψ (see figure). While it is not possible to measure the spin of individual atoms without destroying the Mott insulator, the physicists verified the technique by firing a laser that is tuned to eject only those atoms in the “1” state. An optical microscope image is then taken of the remaining “0” atoms, revealing the pattern.
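The logic of this write-then-image check can be mimicked with a toy model. The sketch below assumes a small, perfectly filled lattice and ideal spin flips, and it uses a plus sign rather than ψ as the pattern; all function names are hypothetical and nothing here models the real optics.

```python
def write_pattern(size, targets):
    """Start with every site in spin state 0, then flip the targeted sites to 1."""
    lattice = [[0] * size for _ in range(size)]
    for row, col in targets:
        lattice[row][col] = 1
    return lattice


def push_out_and_image(lattice):
    """Eject atoms in state 1; the image records which sites still hold an atom."""
    return [[spin == 0 for spin in row] for row in lattice]


def render(image):
    """Draw occupied sites as 'o' and empty sites as '.'."""
    return "\n".join(
        "".join("o" if occupied else "." for occupied in row) for row in image
    )


# A plus-shaped pattern on a 5 x 5 lattice (illustrative choice).
PLUS = [(1, 2), (2, 1), (2, 2), (2, 3), (3, 2)]

lattice = write_pattern(5, PLUS)
image = push_out_and_image(lattice)
print(render(image))
```

The written pattern shows up as holes in the image, because only the unflipped “0” atoms remain to be photographed.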

Crucial step

Peter Zoller, a quantum physicist at the University of Innsbruck in Austria, thinks the work is “a seminal step forward in experiments with optical lattices”. Henning Moritz of the University of Hamburg, meanwhile, says that the team’s ability to address individual atoms “is a crucial step on the path toward quantum computing with ultracold atoms”. Indeed, Zoller thinks that quantum-computing devices based on atoms could soon catch up with those using trapped ions – which currently lead the pack in terms of performance.

However, there is more work to be done. If the Mott insulator is to be used as a quantum computer, atoms need to be put into a quantum superposition of the “0” and “1” states. Kuhr told physicsworld.com that this requires better control over the experimental parameters, something that is on the team’s “to do list”.

Quantum computers also require quantum gates (and entanglement) between pairs of atoms. This could be realized by putting atoms into “Rydberg states” – in which the atom’s outer electron shell extends a great distance from the atomic nucleus and overlaps with that of its neighbour.

The physicists are also interested in flipping a spin and then watching how the spin excitation moves through the lattice, thus simulating quantum magnetism and transport phenomena in solids.

The work is reported in Nature 471 319.