A device that can detect DNA using quantum dots has been built by physicists in Spain. The researchers say that the device is highly sensitive, portable and cheap to build, and could one day be used by doctors to diagnose and detect disease.

Bioscientists have in recent years made huge progress in determining the human genetic code and that of many other organisms. One application of this data is testing patients for hereditary conditions, such as cystic fibrosis, by taking a DNA sample from them and comparing it with the known DNA sequence associated with that condition.

Comparisons are usually made by mixing single strands from separate sources, which join to form the iconic double helix. The speed and efficiency with which the double helices form indicate how closely the two sequences match.

Until now, detecting double-stranded DNA has involved labelling the strands using fluorescent dyes, enzymes or radiolabels. However, the sensitivity of these techniques has been limited. Arben Merkoci and his colleague at the Autonomous University of Barcelona have overcome this problem by using quantum dots as labels; this also removes the need to chemically dissolve samples before testing (Nanotechnology 20 055101).

A quantum of semiconductors

Quantum dots are nano-scale crystals that were first developed in the mid-1980s for optoelectronic applications. They comprise hundreds to thousands of atoms of an inorganic semiconductor material.

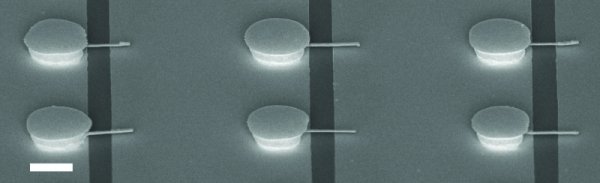

Merkoci and his colleague have created a "sandwich assay" that can be filled with DNA for testing. "Samples are inserted inside the sensor in the same way that a glucose biosensor tests for glucose levels in blood," Merkoci told physicsworld.com. In the reported test, single strands of DNA linked to cystic fibrosis are mixed with cadmium sulfide quantum dots and inserted between two screen-printed electrodes.

When two strands pair up, they pick up a quantum dot. This alters the electrical properties of the quantum dots, which leads to a detectable change in the current across the two electrodes.

Advance for DNA testing?

Nanoparticle-based detection systems for DNA have been developed in the past few years, but this is the first to incorporate screen-printed electrodes, which enables direct detection of the DNA pairs without the need for chemical analysis. However, this test used an isolated, prepared sample of DNA, so the next step is to test the device using "real-world" DNA samples. "We need to conduct further study of possible interferences that could come during testing of real patient samples," said Merkoci.

A longer-term goal is to develop an array of several electrodes in which the same type of quantum dot can be used to test for a range of different DNA strands, each affecting the quantum dots' electrical properties to a different extent. A further aim of the researchers is to create a "lab-on-a-chip device". "It could see applications in fields where fast, low cost and efficient detection of small volumes is required," said Merkoci.

Merkoci and his team have not yet applied for a patent but are looking for companies to collaborate with on developing the technology further.