Not so long ago, machine learning was a novelty in physics. Although physicists have always been quick to adopt new computing techniques, the early problems that this variant of artificial intelligence (AI) could solve – such as recognizing handwriting, identifying cats in photos and beating humans at backgammon – were not the sort of problems that got physicists out of bed in the morning. Consequently, the term “machine learning” did not appear on the Physics World website until 2009, and for several years afterwards it cropped up only sporadically.

In the mid-2010s, though, the situation began to change. Medical physicists discovered that training a machine to identify cats was not so different from training it to identify cancers. Materials scientists learned that AIs could optimize the properties of new alloys, not just backgammon scores. Before long, the rest of the physics community was finding reasons to join the machine-learning party. A quick scan of the Physics World archive shows that since 2017, physicists have used machine learning to, among other things, enhance optical storage, stabilize synchrotron beams, predict cancer evolution, improve femtosecond laser patterning, spot gravitational waves and find the best LED phosphor in a list of 100,000 compounds. Indeed, machine-learning applications have spread so rapidly that they are no longer remarkable. Soon, they may even be routine – a sure sign of a technology’s success.

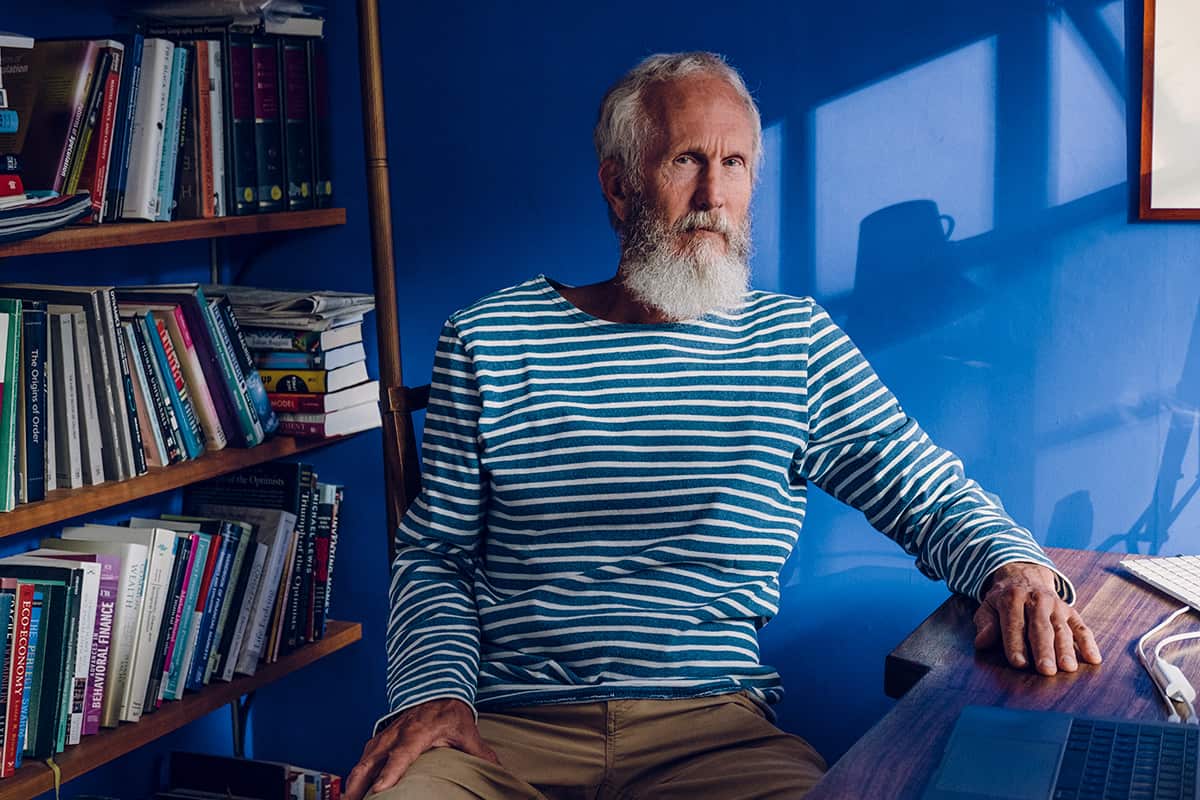

All of which makes this an excellent time for physicists to pick up a copy of You Look Like a Thing and I Love You – a deft, informative and often screamingly funny primer on the ways that machine learning can (and often does) go wrong. And by “go wrong”, author Janelle Shane isn’t talking about machines becoming hyper-intelligent and overthrowing the puny humans who invented them. In fact, the opposite is true: most of AI’s problems arise not because algorithms are too intelligent, but because they are approximately as bright as an earthworm.

Shane is well placed to comment on algorithms’ daft behaviour. Since 2016 she has regularly entertained readers of her blog AI Weirdness by giving neural networks (a type of machine-learning algorithm) a body of text and asking them to produce new text in a similar style. Many useful AIs are “trained” in exactly this fashion, but Shane’s peculiar genius is to feed her algorithms cultural oddments such as paint colours, recipes and ice-cream flavours, rather than (say) crystallographic data. The results – a warm shade of pink the neural network dubs “Stanky Bean”; a recipe for “clam frosting”; and (my personal favourite) an ice-cream flavour called “Necrostar with Chocolate Person” – are eccentric and often endearing. The book’s title is another example: apparently, “you look like a thing and I love you” is an algorithm’s idea of a great pick-up line. Frankly, I’ve heard worse.

A book full of such slip-ups would be worth reading purely for comedic value, but these bloopers have points as well as punchlines. “Necrostar with Chocolate Person”, for example, is the logical result of taking an AI that knows how to generate names of heavy-metal bands and asking it to generate ice cream flavours instead. This process is known as transfer learning, and it’s a common shortcut for AI developers, saving hours of computing time that would otherwise be wasted in teaching the algorithm to generate words from scratch. Similarly, recipes for clam frosting exist in part because AIs don’t “understand” food like a human does, but also because they have short memories. Hence, by the time the algorithm reaches the last ingredient in a recipe, it’s long since forgotten the first. AI amnesia also explains why algorithms can (and do) generate sentence-by-sentence summaries of sporting matches, but book reviews are beyond them – at least for now.

The most fascinating – and serious – problem with AIs is not their stupidity or forgetfulness, though. It’s their propensity to behave in ways their creators never intended. Shane illustrates this with a chapter on training simulated robots to walk. The idea is to start with a robot that can barely wriggle, give it a “goal” and a mechanism that rewards progress, and allow the AI to evolve ever-more-efficient propulsion methods. But whereas real evolution has produced several viable means of locomotion, an AI given this challenge is extremely likely to cheat. A robot asked to move from A to B, for example, may grow to an equivalent height and fall over. If the human programmer rules out this class of “solutions”, the robot may evolve an awkward spinning gait or exploit flaws in the simulation’s physics to teleport itself to the new location. As Shane notes, “It’s as if the [walking] games were being played by very literal-minded toddlers.”

Robots that fall over are amusing. Algorithms that preferentially reject job applications from women are not

Robots that fall over are amusing. Algorithms that preferentially reject job applications from women are not. Both are real examples; both stem from AI’s love of shortcuts. Picking the best person for a job is hard, even (especially?) for a computer. However, if the AI has been trained on successful CVs from a company with a pre-existing diversity problem, it may find it can boost its chances of picking the “right” applicants if it rejects CVs that mention women’s colleges or girls’ soccer.

In some ways, AIs that learn undesirable lessons from biased data are merely the latest example of the old programming maxim “garbage in, garbage out”. But there is a twist. Unlike human-authored code, machine-learning algorithms are a black box. You can’t ask an AI why it thinks “Stanky Bean” is a desirable name for a paint colour, or why it treats a loan applicant from a majority-Black neighbourhood as a higher credit risk. It won’t be able to tell you. What you can do, though, is educate yourself about the ways machine learning can fail, and for this, You Look Like a Thing and I Love You is an excellent place to start. As Shane puts it, “When you ask an AI to draw a cat or write a joke, its mistakes are the same sorts of mistakes it makes when processing fingerprints or sorting medical images, except it’s glaringly obvious that something’s gone wrong when the cat has six legs and the joke has no punchline. Plus, it’s really hilarious.”