Vortices, domain walls and other magnetic phenomena behave in complex and dynamic ways, but limitations in imaging technology have so far kept researchers from observing their dynamics in three dimensions. Scientists in the UK and Switzerland have now found a way around this obstacle. According to physicist Claire Donnelly, who led the effort together with colleagues at the University of Cambridge, ETH Zurich and the Swiss Light Source, their new technique will give physicists a deeper understanding of how 3D magnetic materials work and how to harness them for future applications.

Changes in a material’s magnetization play out over nanometres in space and nanoseconds in time. Measuring these tiny, rapidly changing details is challenging, so scientists generally limit their studies to flat samples. While three-dimensional imaging has recently been demonstrated using techniques such as X-ray, neutron and electron tomography, the images obtained are static and do not show how the magnetic structures evolve over time.

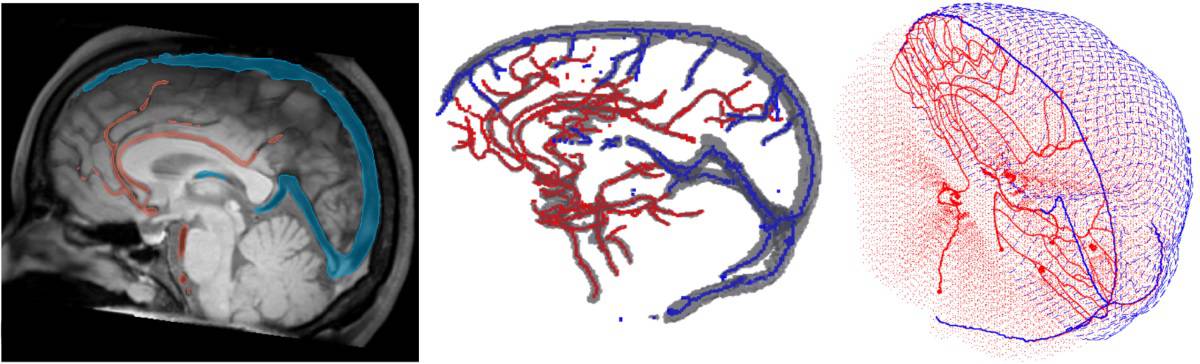

One reason it is hard to capture magnetization changes in three dimensions is that the magnetization can point in any direction. This means that, when studying a 3D sample of magnetic material, the sample’s orientation with respect to its rotation axis must be changed partway through the measurement in order to measure all three spatial components (x, y, z) of the magnetization.
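To see why a single rotation axis is not enough, here is a minimal Python sketch. It assumes, as for X-ray magnetic circular dichroism, that each projection is sensitive only to the component of the magnetization along the beam (m·k), and that the beam is perpendicular to the rotation axis; the function names and the 36-angle scan are hypothetical. Rotating about z alone never gives the beam a z component, whereas adding a second series about x, as in the remounting approach described above, covers all three components.

```python
import math


def directions_about(axis, n_angles=36):
    """Beam unit vectors in the sample frame when the sample rotates about
    `axis` ('z' or 'x') with the beam perpendicular to that axis (assumed geometry)."""
    dirs = []
    for n in range(n_angles):
        th = 2 * math.pi * n / n_angles
        c, s = math.cos(th), math.sin(th)
        # Rotation about z keeps k in the xy-plane; rotation about x keeps it in the yz-plane.
        dirs.append((c, s, 0.0) if axis == "z" else (0.0, c, s))
    return dirs


def coverage(dirs):
    """Largest |k_i| reached for each component i = x, y, z.

    A zero entry means that magnetization component never projects onto the
    beam, so it cannot be measured (assuming signal proportional to m . k)."""
    return tuple(max(abs(k[i]) for k in dirs) for i in range(3))


one_axis = coverage(directions_about("z"))
two_axes = coverage(directions_about("z") + directions_about("x"))
print("rotation about z only:", one_axis)   # z entry stays zero: m_z is invisible
print("rotations about z and x:", two_axes)  # every component is reached
```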

High-energy X-ray probe

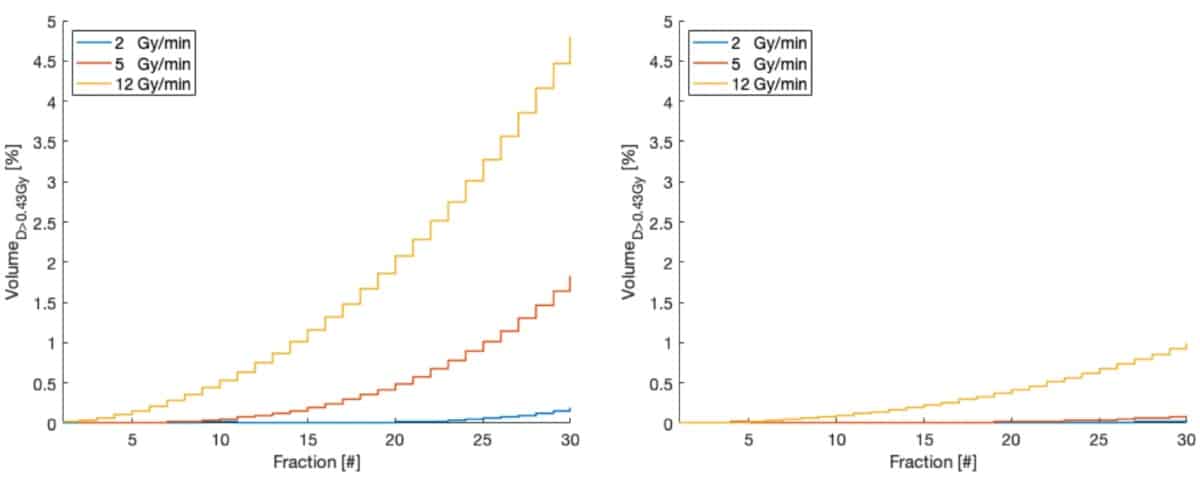

That’s not easy to do, so Donnelly and colleagues instead used short pulses of high-energy synchrotron X-rays to probe the magnetic state of a 3D magnetic structure (a microdisc of gadolinium cobalt, in this case) from different directions. This technique, which the team have dubbed time-resolved magnetic laminography, enabled them to measure how the magnetic state evolves in response to an oscillating applied magnetic field, which the researchers synchronized to the repetition frequency of the X-ray pulses.
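The synchronization is what makes the measurement stroboscopic: when the field is locked to the pulse train, every pulse catches the sample at the same point in the oscillation, so the signal from many pulses can be accumulated into one snapshot, and shifting the delay between field and pulses selects a different point. The sketch below illustrates this with hypothetical numbers (a 2 ns pulse spacing and field period, seven delay settings); they are chosen for illustration and are not taken from the experiment.

```python
def field_phase(t_ns, field_period_ns):
    """Phase of the driving field in cycles, wrapped to [0, 1)."""
    return (t_ns / field_period_ns) % 1.0


def sampled_phases(delay_ns, field_period_ns, pulse_period_ns=2.0, n_pulses=5):
    """Field phase seen by each of n_pulses successive X-ray pulses.

    Pulse n arrives at n * pulse_period_ns; delay_ns shifts the field
    relative to the pulse train (all values hypothetical)."""
    return [field_phase(n * pulse_period_ns + delay_ns, field_period_ns)
            for n in range(n_pulses)]


N_STEPS = 7
FIELD_PERIOD_NS = 2.0  # hypothetical: field period equal to the pulse spacing

# Delay settings evenly spaced over one field period.
delays = [k * FIELD_PERIOD_NS / N_STEPS for k in range(N_STEPS)]

# Locked case: for each delay, every pulse sees the same field phase.
locked = [sampled_phases(d, FIELD_PERIOD_NS) for d in delays]

# Unlocked case: a slightly different field period makes the phase drift pulse to pulse.
drifting = sampled_phases(0.0, field_period_ns=2.1)

for d, phases in zip(delays, locked):
    print(f"delay {d:.3f} ns: phases {[round(p, 3) for p in phases]}")
print("unsynchronized:", [round(p, 3) for p in drifting])
```

In the locked case each delay yields one repeated phase, which is why summing pulses gives a sharp snapshot; in the unsynchronized case the phases wander and the accumulated signal would average over the whole oscillation.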

Thanks to a specially developed reconstruction algorithm, the team obtained a seven-dimensional dataset: three dimensions for position, three for the direction of the magnetization and one for time. The result is, in effect, a map of the magnetization dynamics for seven different time steps evenly spaced over 2 ns, with a temporal resolution of 70 picoseconds and a spatial resolution of 50 nanometres.
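One way to picture the seven-dimensional result is as a vector field indexed by time: for each time step and each voxel (x, y, z) it stores a magnetization vector (mx, my, mz). The container below is a hypothetical sketch, not the team's data format; it assumes the seven time steps span one 2 ns period of the driving field (so they are 2/7 ns apart) and uses 50 nm voxels purely for illustration.

```python
from dataclasses import dataclass, field

Vector = tuple[float, float, float]
Index = tuple[int, int, int]


@dataclass
class MagnetizationMovie:
    """Hypothetical container for a time-resolved 3D magnetization field.

    Each entry maps (time step, voxel index i, j, k) to a vector (mx, my, mz):
    3 spatial + 3 vector components + 1 time = 7 dimensions."""
    voxel_size_nm: float   # assumed voxel pitch, illustrative only
    time_step_ns: float    # spacing between reconstructed time steps
    n_steps: int
    frames: list[dict[Index, Vector]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # One sparse frame per time step; voxels that were never set read as zero.
        if not self.frames:
            self.frames = [{} for _ in range(self.n_steps)]

    def set(self, t: int, ijk: Index, m: Vector) -> None:
        self.frames[t][ijk] = m

    def get(self, t: int, ijk: Index) -> Vector:
        return self.frames[t].get(ijk, (0.0, 0.0, 0.0))

    def time_ns(self, t: int) -> float:
        return t * self.time_step_ns

    def position_nm(self, ijk: Index) -> tuple[float, float, float]:
        x, y, z = (i * self.voxel_size_nm for i in ijk)
        return (x, y, z)

    def trace(self, ijk: Index) -> list[Vector]:
        """Magnetization at one voxel across all time steps, e.g. to follow precession."""
        return [self.get(t, ijk) for t in range(self.n_steps)]


# Illustrative set-up: 7 steps assumed to span one 2 ns period, 50 nm voxels.
movie = MagnetizationMovie(voxel_size_nm=50.0, time_step_ns=2.0 / 7, n_steps=7)
movie.set(3, (1, 2, 0), (0.0, 0.6, 0.8))
print(movie.position_nm((1, 2, 0)), movie.time_ns(3), movie.get(3, (1, 2, 0)))
```

Under this assumption the step spacing (about 0.29 ns) is a separate quantity from the 70 ps temporal resolution, which describes how sharply each individual snapshot is defined in time.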

In some ways, Donnelly says, the team’s approach is similar to computed tomography (CT), which is widely used for 3D imaging in many areas, including medical scans in hospitals. Laminography involves measuring 2D projections of the magnetic structure for a number of different orientations of the sample. Importantly, the axis about which the microdisc is rotated is not perpendicular to the X-ray beam. Because the magnetic signal in each projection depends on the component of the magnetization along the beam, a rotation axis perpendicular to the beam would leave the component parallel to that axis unmeasured; tilting the axis allowed the team to access all three spatial components of the magnetization direction.
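The geometric argument can be checked numerically. If each projection measures m·k for beam direction k, the measurements from all orientations determine m uniquely only when the 3×3 matrix Σ k kᵀ is invertible. The Python sketch below builds k for a sample rotating about z, with the beam tilted out of the plane perpendicular to the axis by an angle `tilt_deg`; the 30° value and the helper names are hypothetical, and the m·k sensitivity is an assumption rather than a detail given in the article.

```python
import math


def beam_directions(tilt_deg, n_angles=36):
    """Unit beam vectors in the sample frame as the sample rotates about z.

    tilt_deg is the angle between the beam and the plane perpendicular to the
    rotation axis: 0 for conventional tomography, nonzero for laminography."""
    a = math.radians(tilt_deg)
    dirs = []
    for n in range(n_angles):
        th = 2 * math.pi * n / n_angles
        dirs.append((math.cos(a) * math.cos(th),
                     math.cos(a) * math.sin(th),
                     math.sin(a)))
    return dirs


def sensitivity_matrix(dirs):
    """Sum of outer products k k^T (the normal matrix of the linear system m . k = signal)."""
    M = [[0.0] * 3 for _ in range(3)]
    for k in dirs:
        for i in range(3):
            for j in range(3):
                M[i][j] += k[i] * k[j]
    return M


def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


for tilt in (0.0, 30.0):
    d = det3(sensitivity_matrix(beam_directions(tilt)))
    print(f"tilt {tilt:4.1f} deg: all three components recoverable? {abs(d) > 1e-9}")
```

With `tilt_deg = 0` every k has a zero z component, the determinant vanishes and m_z cannot be recovered; any nonzero tilt makes the matrix invertible, so a single tilted rotation axis suffices in this idealized model.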

Towards a new generation of technological devices

The researchers visualized two main types of magnetization dynamics in their experiments: the back-and-forth 3D motion of magnetic vortices, and the precession of the magnetization vector. The movement of such structures had previously only been observed in two dimensions.


The new technique could help researchers better understand magnetic materials, Donnelly says. Well-known structures such as permanent magnets and inductive materials – both widely employed in sensing and energy production – could be studied with a view to improving their performance. Donnelly adds that there is also growing interest in 3D magnetic nanostructures, which are predicted to have completely new properties and functionalities that are hard to achieve in their 2D counterparts. As well as providing insights into the physics of phenomena such as ultra-high domain wall velocities and magneto-chiral effects, these nanostructures might form the basis of a new generation of technological devices, boosting information transfer rates and data storage densities.

“This is a very exciting area of research,” she tells Physics World. “With our new technique, the magnetic materials community will now be able to measure and understand these systems – and hopefully exploit their properties.”

The research is described in Nature Nanotechnology.