The cornerstone of a safe MRI workplace is regularly repeated and updated MRI safety training and awareness. The number of MRI scanners is increasing, and scanners are also moving toward higher field strengths, both in private practice and at hospitals and institutions all over the world. Consequently, a large and growing number of radiology staff and others need MRI safety education to keep our working environment a safe place, and one that no one should be afraid to enter.

When we discuss MRI safety education, we must remember that knowledge and skills need to be refreshed regularly, just as we are expected to take part in cardiopulmonary resuscitation refreshers and fire drills at regular intervals.

What are the risks?

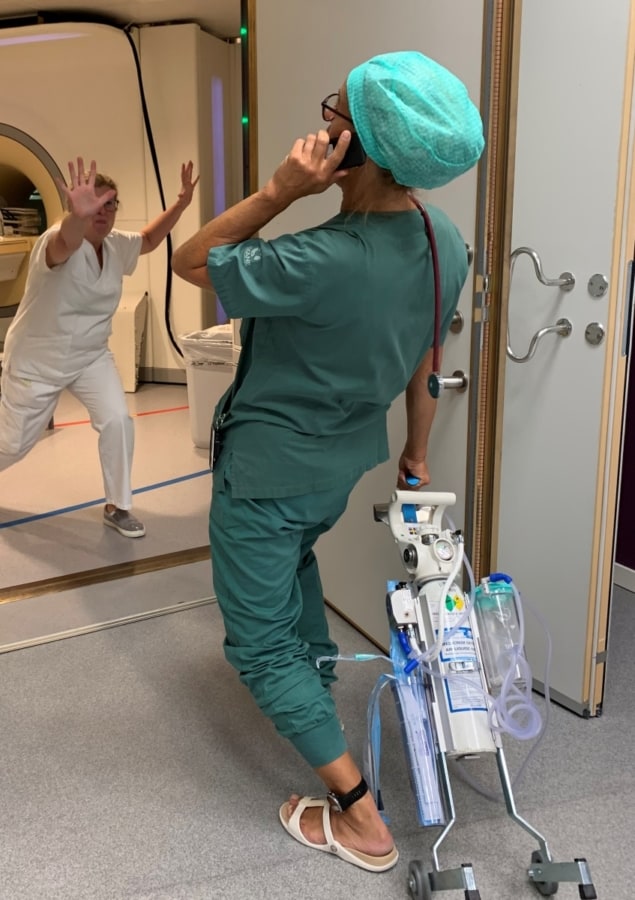

The MRI-associated risk most people are familiar with is that of a projectile. There are several well-known and well-proven recommendations for preventing metal and ferromagnetic items from being brought into the examination room.

Screening for metal is important, and when it comes to screening patients, a number of routines should be used, including filling in a dedicated screening form and changing from street clothes into safe clothing. The MR radiographer also needs to interview the patient right before they enter the examination room to check that the patient has fully understood the information. Nothing may be left unknown; if anything is unclear, further investigation is required before scanning. These procedures are very important and must never be skipped.

It is also possible to use a ferromagnetic detector to support the screening procedure. Such a detector is a good asset if you want to reduce the risk of something being accidentally taken into the room. At the same time, it is important to know that while a ferromagnetic detector may increase MRI safety, it should never replace any of the ordinary screening procedures.

Awareness of implants

Another risk that has grown in recent years has to do with the distribution of radiofrequency energy, which may heat the patient and/or the patient's implants. Heating injuries have increased due to the use of more efficient and powerful methods and scanners. Occasionally, they are also caused by a lack of MRI safety competence, for example regarding how to position the patient.

It is of the utmost importance to identify every implant – a task that can be both time-consuming and difficult. We must remember that it is essential to find out if the MRI examination can be performed on a patient with a certain implant and, if so, how it can be done safely.

Working with MRI requires clear and well-founded working routines that are never abandoned. It is of great importance to be alert and never take things for granted in our special environment, where many different professions are involved. It is a truly challenging environment, and teamwork is necessary. Working alone with MRI examinations and equipment should never be an option, and all members of the scanning team must have a high level of MRI safety skills.

How to minimize risk

There are several ways to improve MRI safety besides sufficient safety training and education and installing helpful devices. We must not forget that equipment vendors play an important role when it comes to improving the safety situation, and their support and collaboration are greatly appreciated.

We need solid recommendations regarding education and routines to be followed by everyone working in the scanner environment. A better understanding of MRI safety risks and more resources for safety education are needed to maintain a high level of competence among the growing group that needs MRI safety training.

Another area for improvement is the reporting of incidents. Today, there are several different incident reporting systems, locally and sometimes nationally, but most of us working with MRI safety know that the incidents reported to date represent only the tip of the iceberg.

What we really need is a general, efficient, easy-to-access reporting system. A dream scenario would be if every single accident could be reported and analysed, and any improvements made would also be registered. This information would then be available in a database so the whole MRI community could learn from it. The reporting system would be a most welcome tool for everybody working to improve MRI safety locally as well as globally.

Titti Owman is an MR radiographer/technologist and a member of the Safety Committee of the Society for MR Radiographers & Technologists (SMRT) and a past member of the Safety Committee of the International Society for Magnetic Resonance in Medicine. She is a founding member of the national 7-tesla facility within the Lund University Bioimaging Center, Sweden, and is also a research coordinator/lecturer at the Center for Imaging and Physiology, Lund University Hospital. She is also past president of the SMRT.

- This article was originally published on AuntMinnieEurope.com ©2019 by AuntMinnieEurope.com. Any copying, republication or redistribution of AuntMinnieEurope.com content is expressly prohibited without the prior written consent of AuntMinnieEurope.com.