An atom interferometer that does not have to be cooled to cryogenic temperatures has been created by physicists in the US. The new device instead uses a cell of warm vapour. Because it needs no bulky cooling equipment, the device could feature in simple atomic sensors designed for a range of applications – including measuring accelerations with great precision.

Atom interferometers rely on the fact that particles of matter have wave-like properties. Like optical interferometers, they measure the interference fringes produced when the two halves of a split beam are sent along different paths and then recombined. But rather than using components made of matter to split and reflect beams of light, they do the reverse – typically using laser beams to manipulate beams of matter.

Atom interferometers are more sensitive than their optical counterparts because the matter waves they measure travel much more slowly than light does. The atoms therefore spend longer inside the interferometer, giving the phase difference between the two arms more time to build up. This makes atom interferometers ideal for high-precision measurements, such as looking for variations in the fine-structure constant or testing the equivalence principle. They are also used in inertial sensors to make very accurate measurements of acceleration or rotation, for example.
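To see why a longer flight time helps, it may be useful to recall the textbook result for a three-pulse (Mach–Zehnder) light-pulse interferometer, in which counter-propagating laser beams of wavelength $\lambda$ split, reflect and recombine the atoms at intervals $T$. This is a standard idealization, not necessarily the exact geometry of every device mentioned here. The phase difference produced by a uniform acceleration $a$ along the beams is

$$
\Delta\phi = k_{\text{eff}}\, a\, T^{2}, \qquad k_{\text{eff}} \approx 2 \times \frac{2\pi}{\lambda} = \frac{4\pi}{\lambda},
$$

so the response to a given acceleration grows with the square of the time the atoms spend between pulses.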

Large and fiddly

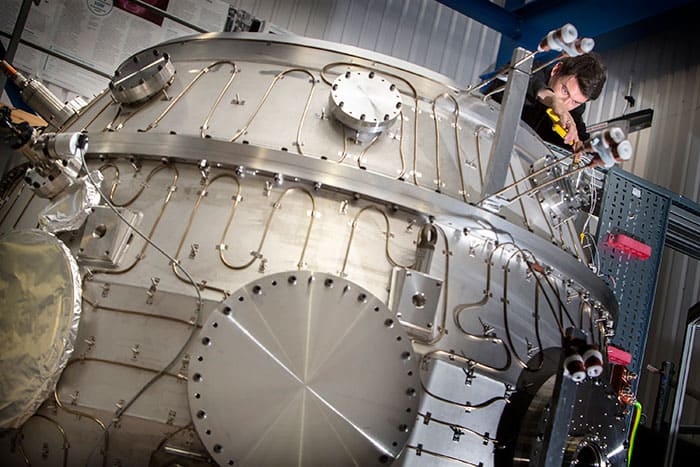

So-called light-pulse atom interferometry involves cooling large collections of atoms to temperatures as low as a few millionths of a kelvin. Such chilly conditions are needed to narrow the atoms’ range of velocities, which increases the signal at the interferometer’s output and keeps the atoms closer together to maximize precision. But the lasers and ultrahigh-vacuum chambers required to do this are large, with the smallest (transportable) systems occupying a volume of about 1 m³. They are also tricky to operate because they require fine-tuning and must be kept stable.

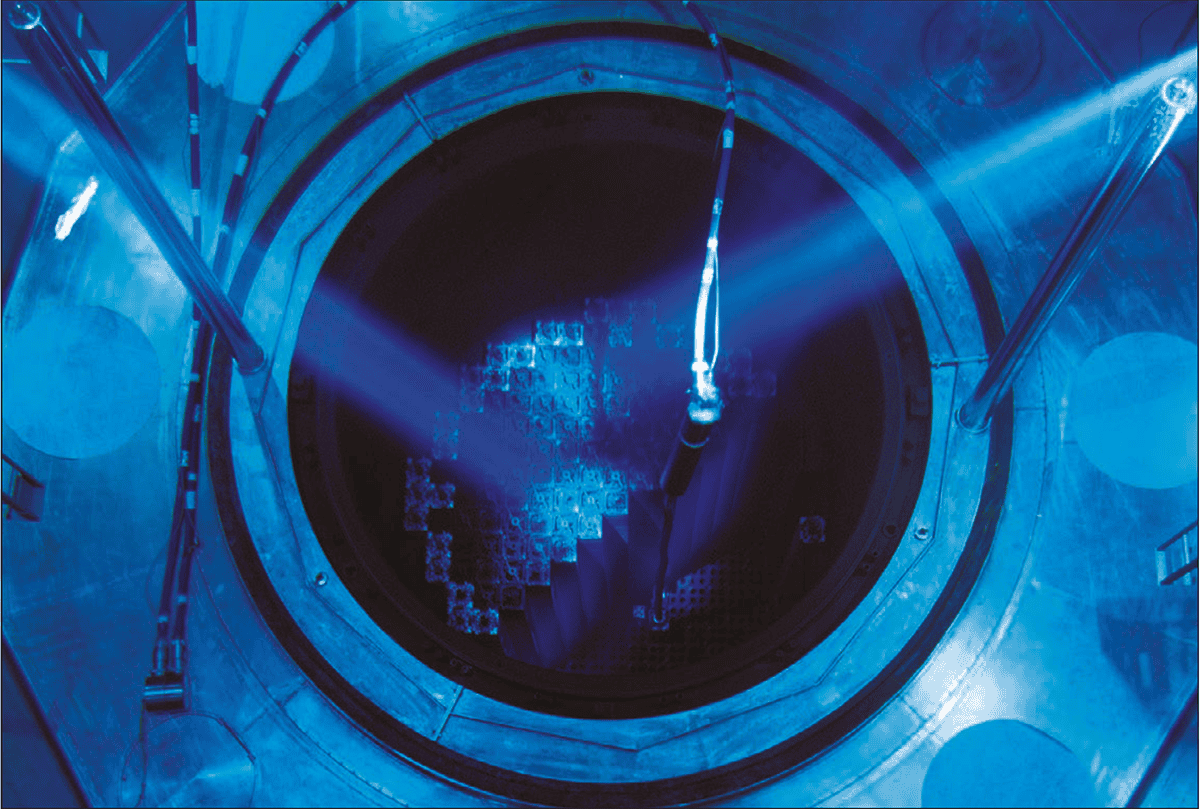

In the latest work, Grant Biedermann and colleagues at Sandia National Laboratories in New Mexico adopt a different approach involving a vapour of rubidium atoms held at 39 °C inside a 10 cm-long cell. The idea is to reduce the atoms’ velocity spread not by limiting their thermal energy as a whole, but instead by selecting two subsets of atoms with very precise velocities. The researchers did this using two counter-propagating Raman lasers, which first excite the subsets with opposite velocities and then “kick” them along different trajectories to create the interferometer.
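The scale of the problem the Sandia team faced can be estimated with a short calculation. The sketch below is illustrative only: it assumes rubidium-87 and its 780 nm D2 line (the article says only "rubidium"), treats the Raman beams as exactly counter-propagating, and uses a hypothetical ±1 MHz two-photon detuning that is not taken from the paper. It computes the one-dimensional thermal velocity spread at 39 °C, the corresponding Raman Doppler spread, and the narrow velocity classes that such a detuning would pick out.

```python
import math

# Physical constants (CODATA values)
K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # atomic mass unit, kg

# Assumptions (not from the article): rubidium-87 on the 780 nm D2 line,
# with the two Raman beams exactly counter-propagating.
M_RB87 = 86.909 * AMU                      # atomic mass, kg
WAVELENGTH = 780.24e-9                     # laser wavelength, m
K_EFF = 2 * (2 * math.pi / WAVELENGTH)     # effective two-photon wavevector, rad/m


def velocity_spread(temp_celsius: float) -> float:
    """1D thermal velocity spread sigma_v = sqrt(k_B T / m), in m/s."""
    temp_kelvin = temp_celsius + 273.15
    return math.sqrt(K_B * temp_kelvin / M_RB87)


def raman_doppler_shift_hz(velocity: float) -> float:
    """Two-photon Doppler shift k_eff * v / (2 pi) for an atom moving along the beams, in Hz."""
    return K_EFF * velocity / (2 * math.pi)


def resonant_velocity(detuning_hz: float) -> float:
    """Velocity class brought into resonance by a given two-photon detuning, in m/s."""
    return 2 * math.pi * detuning_hz / K_EFF


if __name__ == "__main__":
    sigma_v = velocity_spread(39.0)
    print(f"1D velocity spread at 39 C: {sigma_v:.0f} m/s")
    print(f"Raman Doppler spread: {raman_doppler_shift_hz(sigma_v) / 1e6:.0f} MHz")
    # Hypothetical detunings of opposite sign select two classes moving in opposite directions.
    for detuning in (+1e6, -1e6):
        print(f"detuning {detuning / 1e6:+.0f} MHz -> v = {resonant_velocity(detuning):+.3f} m/s")
```

The point of the comparison is the contrast in scales: the thermal spread corresponds to hundreds of megahertz, whereas a megahertz-scale detuning addresses atoms moving at well under 1 m/s. How narrow each selected class actually is depends on details such as the Raman pulse duration, which this sketch does not model.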

Writing in a commentary that accompanies the Sandia group’s paper in Physical Review Letters, Carlos Garrido Alzar of the Paris Observatory in France draws an analogy with optical interferometry. Existing atomic devices, he says, operate like a laser – a coherent source of light – whereas using a warm vapour is like “searching for interferometric effects using the white incoherent light from a common light bulb”.

Flipping spins

To carry out their experiment, the researchers had to overcome a number of technical hurdles. One was how to prepare the atomic states inside their vapour cell. The atoms need to be spin polarized if they are to interfere properly, but their spin can be flipped when they bounce off the cell walls owing to electromagnetic fields created at the surface. To overcome this problem, the researchers covered the walls with a special coating.

Another major challenge was aligning the weak laser beams that were used to detect the interference fringes with the more powerful lasers used to create the interferometer, such that atoms within the two velocity subsets overlapped properly. “Since this really takes place in 3D, angle is critical,” says Biedermann. “So it was a matter of developing optical alignment tricks.”

The scheme’s sensitivity to the phase difference between matter waves travelling along the two arms of the interferometer is limited by the short time it takes the thermal atoms to cross the Raman laser beams. With a transit time of just 29 μs, its acceleration sensitivity is five or more orders of magnitude below that of the best cold-atom interferometers today. However, according to Garrido Alzar, the new scheme does offer “two important advantages” over conventional devices: it can acquire data about 10,000 times more quickly, and it can measure a broader range of accelerations.
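A rough feel for why a 29 μs transit limits sensitivity comes from the idealized light-pulse scale factor $k_{\text{eff}} T^{2}$, i.e. the phase produced per unit acceleration. The sketch below simply treats the transit time as the interrogation time $T$ and assumes the 780 nm rubidium D2 line; both are simplifications, not parameters reported in the paper. It is not meant to reproduce the sensitivity comparison quoted above, which also depends on factors such as atom number, fringe contrast and repetition rate.

```python
import math

# Assumption: 780 nm Rb D2 line with counter-propagating Raman beams.
WAVELENGTH = 780.24e-9              # m
K_EFF = 4 * math.pi / WAVELENGTH    # effective wavevector, rad/m


def phase_per_acceleration(interrogation_time_s: float) -> float:
    """Idealized scale factor k_eff * T^2, in radians per (m/s^2)."""
    return K_EFF * interrogation_time_s ** 2


if __name__ == "__main__":
    # Simplification: use the quoted 29 us transit time as T.
    warm = phase_per_acceleration(29e-6)
    print(f"T = 29 us: {warm:.4f} rad per m/s^2")
    print(f"phase for g = 9.81 m/s^2: {warm * 9.81:.3f} rad")
    # The T^2 scaling means doubling T quadruples the phase per unit acceleration.
    print(f"ratio when T is doubled: {phase_per_acceleration(58e-6) / warm:.1f}")
```

Even the full acceleration due to gravity shifts the phase by only a fraction of a radian at this timescale, which is consistent with the article's point that short transit times cap the achievable sensitivity.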

Speedy operation

Mark Kasevich of Stanford University in the US says that the high laser power needed for the interferometer “may be challenging to achieve” in practical devices. But he nevertheless thinks that the scheme’s speedy operation could prove attractive for inertial sensors used to guide cars, for example.

Guglielmo Tino of the University of Florence in Italy also believes that the new research holds promise. “The published results are still very preliminary and the achieved sensitivity is rather low but it will be interesting to see where this method can lead if optimized,” he says. “It might simplify the atomic sensors for several applications.”

- There is much more about using atom interferometry to test the equivalence principle in “The descent of mass”.