Researchers in Japan have produced the most precise high-intensity muon beam to date, accelerating the particles to energies as high as 100 keV. The achievement could enable next-generation experiments such as more precise measurements of the muon's anomalous magnetic moment, measurements that could, in turn, point to new physics beyond the Standard Model.

Muons are sub-atomic particles similar to electrons, but around 200 times heavier. Thanks to this extra mass, muons lose far less energy to synchrotron radiation than electrons do as they travel in circles, meaning that a muon accelerator could, in principle, produce more energetic collisions than a conventional electron machine for a given energy input.
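For a rough sense of scale: at a fixed beam energy E and bending radius ρ, the power radiated by an ultra-relativistic charged particle scales as γ⁴/ρ² with γ = E/(mc²), so the muon-to-electron ratio reduces to (m_e/m_μ)⁴. The sketch below evaluates that ratio, assuming both particles carry the same charge and energy on an identical circular orbit, and using rounded PDG rest energies.

```python
# Rest energies in MeV (PDG values).
ELECTRON_MASS_MEV = 0.51099895
MUON_MASS_MEV = 105.6583755

def synchrotron_power_ratio(m_a_mev: float, m_b_mev: float) -> float:
    """Ratio P_a / P_b of synchrotron power for two ultra-relativistic
    particles with the same charge, energy and bending radius.

    Since P scales as gamma**4 / rho**2 and gamma = E / (m c^2), at fixed
    E and rho the ratio is (m_b / m_a)**4.
    """
    return (m_b_mev / m_a_mev) ** 4

# Factor by which a muon radiates less than an electron under identical conditions.
suppression = 1.0 / synchrotron_power_ratio(MUON_MASS_MEV, ELECTRON_MASS_MEV)
print(f"muons radiate ~{suppression:.2e} times less power than electrons")
```

Under these assumptions the suppression is roughly 1.8 × 10⁹, which is why muon rings can reach higher energies before radiation losses dominate.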

However, working with muons comes with challenges. Although scientists can produce high-intensity muon beams from the decay of other sub-atomic particles known as pions, these beams must then be cooled to make the velocities of their constituent particles more uniform before they can be accelerated to collider speeds. And while this cooling process is relatively straightforward for electrons, for muons it is greatly complicated by the particles' short lifetime of just 2.2 μs. Indeed, traditional cooling techniques (such as synchrotron radiation cooling, laser cooling, stochastic cooling and electron cooling) simply do not work.
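Why does such a short lifetime matter so much? The fraction of a muon population that survives a lab time t is exp(−t/(γτ)), where τ ≈ 2.2 μs is the lifetime at rest and γ is the Lorentz factor. The sketch below evaluates this for a few cooling durations, assuming slow muons (γ ≈ 1); the durations are purely illustrative and are not taken from the study.

```python
import math

MUON_LIFETIME_S = 2.1969811e-6  # mean muon lifetime at rest (PDG)

def surviving_fraction(t_seconds: float, gamma: float = 1.0) -> float:
    """Fraction of muons that have not yet decayed after lab time t.

    Time dilation stretches the lab-frame lifetime to gamma * tau.
    """
    return math.exp(-t_seconds / (gamma * MUON_LIFETIME_S))

# Hypothetical cooling durations for slow muons (gamma ~ 1).
for label, t in [("1 us", 1e-6), ("10 us", 1e-5), ("1 ms", 1e-3)]:
    print(f"{label:>6}: {surviving_fraction(t):.3g} of the muons remain")
```

Even a 10 μs process keeps only about 1% of the muons, and any method needing milliseconds or longer loses essentially all of them.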

Another muon cooling and acceleration technique

To overcome this problem, researchers at the MUon Science Establishment (MUSE) in the Japan Proton Accelerator Research Complex (J-PARC) have been developing an alternative muon cooling and acceleration technique. The MUSE method involves cooling positively charged muons, or antimuons, down to thermal energies of 25 meV and then accelerating them using radio-frequency (rf) cavities.

In the new work, a team led by particle and nuclear physicist Shusei Kamioka directed antimuons (μ+) into a target made from a silica aerogel. This material has a useful property: a muon that stops inside it gets re-emitted as a muonium atom (an exotic atom consisting of an antimuon and an electron) with very low thermal energy. The researchers then fired a laser beam at these low-energy muonium atoms to remove their electrons, thereby producing antimuons with much lower – and, crucially, far more uniform – velocities than those in the starting beam. Finally, they guided the slowed particles into an rf cavity, where an electric field accelerated them to an energy of 100 keV.
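To put these energies into perspective, the sketch below converts kinetic energy T into speed using the relativistic relations γ = 1 + T/(mc²) and β = √(1 − 1/γ²), for a thermal muon at 25 meV and an antimuon accelerated to 100 keV. It uses the PDG muon rest energy and treats the thermal energy as a single representative value rather than a full distribution.

```python
import math

MUON_REST_ENERGY_EV = 105.6583755e6  # m_mu c^2 in eV (PDG)
SPEED_OF_LIGHT = 299_792_458.0       # m/s

def speed_from_kinetic_energy(t_ev: float,
                              rest_energy_ev: float = MUON_REST_ENERGY_EV) -> float:
    """Return the speed (m/s) of a particle with kinetic energy t_ev (eV).

    Uses gamma = 1 + T/(mc^2) and beta^2 = 1 - 1/gamma^2, rewritten as
    beta^2 = g (g + 2) / (1 + g)^2 with g = T/(mc^2) to avoid numerical
    cancellation at very low energies.
    """
    g = t_ev / rest_energy_ev
    beta = math.sqrt(g * (g + 2.0)) / (1.0 + g)
    return beta * SPEED_OF_LIGHT

thermal = speed_from_kinetic_energy(0.025)       # ~25 meV thermal muon
accelerated = speed_from_kinetic_energy(100e3)   # 100 keV after the rf cavity
print(f"thermal: {thermal:.3g} m/s, accelerated: {accelerated:.3g} m/s")
```

On these numbers the thermal muons drift at a few kilometres per second, while after acceleration they move at roughly 4% of the speed of light.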

Towards a muon accelerator?

The final beam has an intensity of 2 × 10⁻³ μ+ per pulse, and a measured emittance that is much lower than that of the starting beam (by a factor of 2.0 × 10² in the horizontal direction and 4.1 × 10² vertically). This represents a two-orders-of-magnitude reduction in the spread of positions and momenta in the beam and makes accelerating the muons more efficient, says Kamioka.
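Emittance measures the area a beam occupies in position–angle phase space. One common definition is the rms emittance ε = √(⟨x²⟩⟨x′²⟩ − ⟨xx′⟩²), computed from the central moments of particle positions x and angles x′. The sketch below uses synthetic Gaussian particles, not the experiment's data, to show that tightening the position spread tenfold and the angular spread twentyfold cuts ε by a factor of 200, comparable to the horizontal reduction reported.

```python
import math
import random

def rms_emittance(x: list[float], xp: list[float]) -> float:
    """Statistical (rms) emittance from positions x and angles xp."""
    n = len(x)
    mx = sum(x) / n
    mxp = sum(xp) / n
    sxx = sum((a - mx) ** 2 for a in x) / n                      # <x^2>
    spp = sum((b - mxp) ** 2 for b in xp) / n                    # <x'^2>
    sxp = sum((a - mx) * (b - mxp) for a, b in zip(x, xp)) / n   # <x x'>
    return math.sqrt(sxx * spp - sxp ** 2)

rng = random.Random(0)
# Synthetic "hot" beam: correlated Gaussian positions (mm) and angles (mrad).
x_hot = [rng.gauss(0.0, 5.0) for _ in range(10_000)]
xp_hot = [0.3 * a + rng.gauss(0.0, 2.0) for a in x_hot]

# Synthetic "cooled" beam: positions 10x tighter, angles 20x tighter.
x_cool = [a / 10 for a in x_hot]
xp_cool = [b / 20 for b in xp_hot]

ratio = rms_emittance(x_hot, xp_hot) / rms_emittance(x_cool, xp_cool)
print(f"emittance reduced by a factor of {ratio:.1f}")
```

Because ε scales as the product of the two spreads, the ratio is 200 regardless of the random seed. If the horizontal and vertical planes are uncoupled (an assumption on our part), the two reported factors multiply to a transverse phase-space reduction of roughly 8 × 10⁴.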

According to the researchers, who report their work in Physical Review Letters, these improvements are important steps on the road to a muon collider. To make further progress, however, they will need to raise the beam's energy and intensity substantially, which they acknowledge will be challenging.

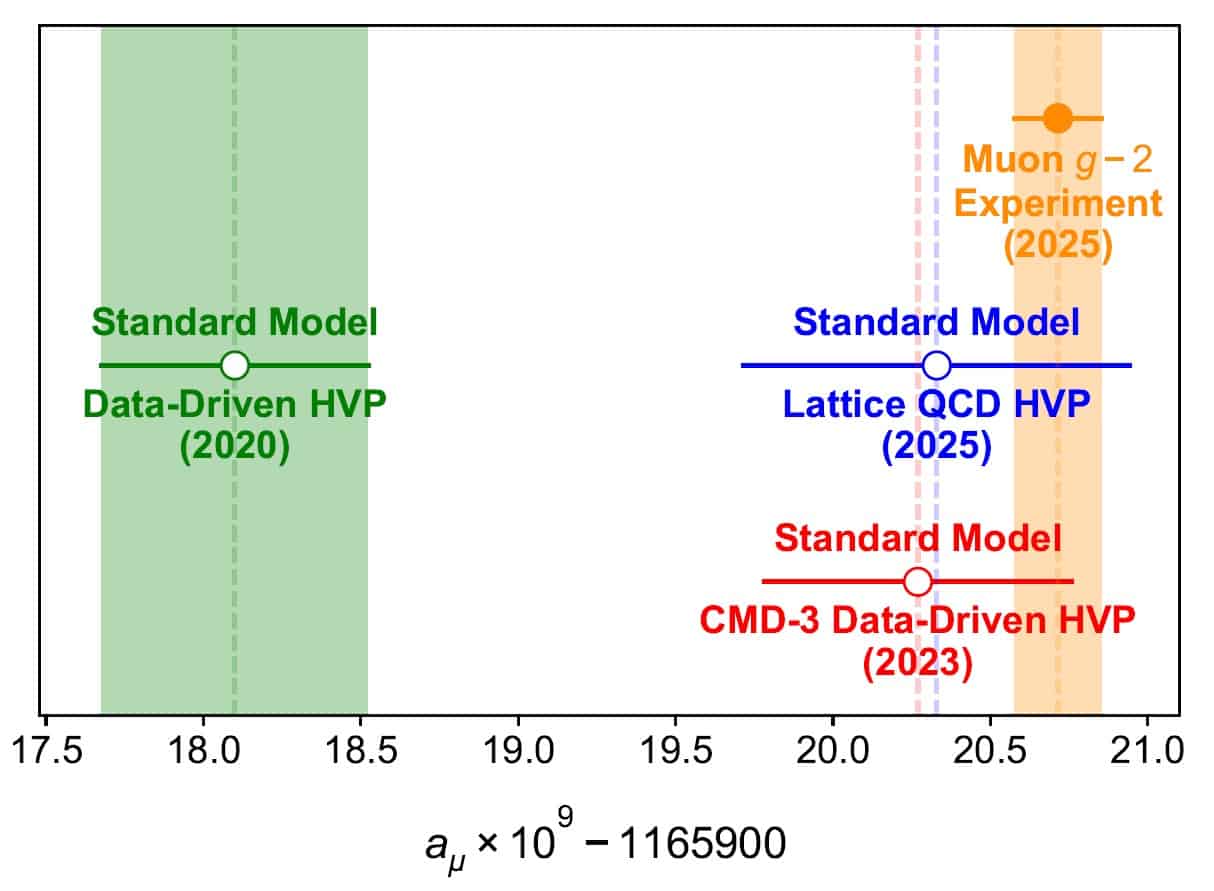

Muon g-2 achieves record precision, but theoretical tensions remain

“We are now preparing for the next acceleration test at the new experimental area dedicated to muon acceleration,” Kamioka tells Physics World. “A 4 MeV acceleration with 1000 muon/s is planned for 2027 and a 212 MeV acceleration with 10⁵ muon/s is planned for 2029.”
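As far as we understand, the J-PARC g−2/EDM experiment is designed around a muon momentum of about 300 MeV/c. The sketch below converts the planned kinetic energies into momentum using E = T + mc² and E² = (pc)² + (mc²)², i.e. pc = √(T² + 2T·mc²), to show how the 212 MeV target relates to that figure. The stage labels are ours.

```python
import math

MUON_REST_ENERGY_MEV = 105.6583755  # m_mu c^2 (PDG)

def momentum_from_kinetic_energy(t_mev: float) -> float:
    """Momentum p (in MeV/c) of a muon with kinetic energy T (MeV).

    From E = T + mc^2 and E^2 = (pc)^2 + (mc^2)^2 it follows that
    (pc)^2 = T^2 + 2 T mc^2.
    """
    return math.sqrt(t_mev ** 2 + 2.0 * t_mev * MUON_REST_ENERGY_MEV)

for stage, t in [("2027 test", 4.0), ("2029 test", 212.0)]:
    p = momentum_from_kinetic_energy(t)
    gamma = 1.0 + t / MUON_REST_ENERGY_MEV  # Lorentz factor from kinetic energy
    print(f"{stage}: T = {t:g} MeV -> p = {p:.1f} MeV/c, gamma = {gamma:.3f}")
```

On these assumptions the 2029 stage would deliver muons at close to 300 MeV/c (γ ≈ 3), while the 2027 stage corresponds to roughly 29 MeV/c.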

In total, the MUSE team expects that various improvements will produce a factor of 10⁵–10⁶ increase in the muon rate, which could be enough to enable applications such as the muon g−2/EDM experiment at J-PARC, he adds.