Galaxies have been forming since the universe was less than 10% of its current age – as supercomputer simulations predict and observations have confirmed.

Ever since the 1930s when galaxies were confirmed as the fundamental building blocks of the universe, their origin and evolution have remained a central focus of physical cosmology. Speculation about the nature of galaxies dates back to the 18th century and the work of thinkers such as Thomas Wright of Durham and Immanuel Kant. Today, the prevailing theoretical ideas revolve around a 20-year-old suggestion that the origin of galaxies should be sought in quantum fluctuations generated at the time of the big bang. As with all problems in physics, the solution to the mystery of galaxy formation requires the interplay between theoretical ideas and experimental data. In this case the data are observations of galaxies stretching back over the history of the universe.

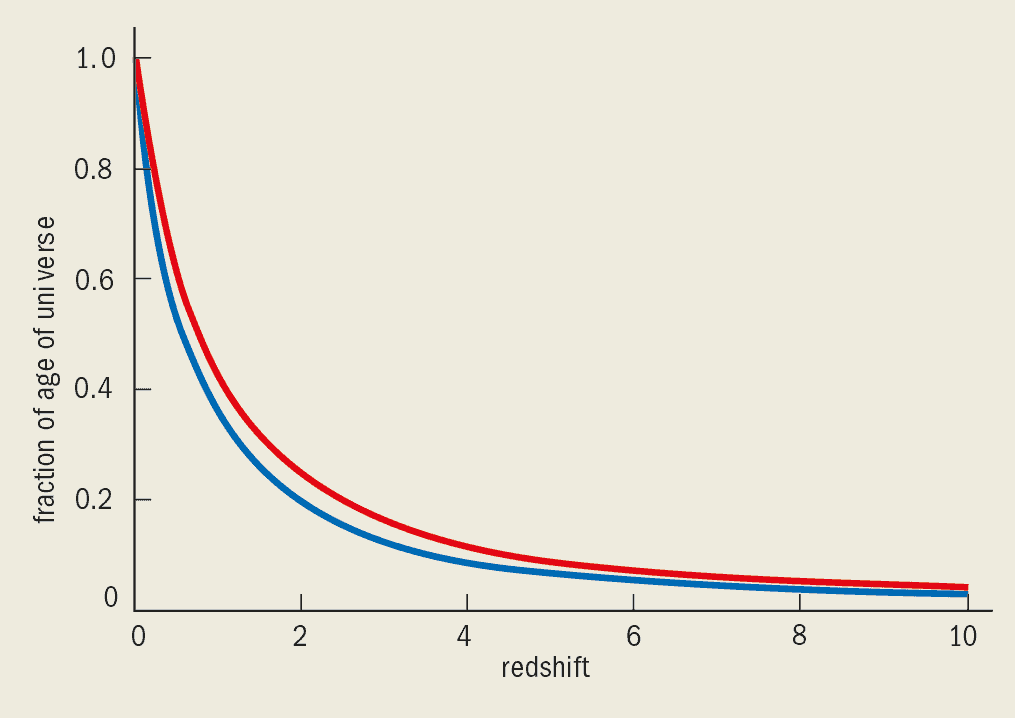

The universe is thought to be between about 10 and 15 billion years old. Its actual age depends on the Hubble constant, the density of matter in the universe and the value of the cosmological constant. The expansion of the universe means that light emitted by distant galaxies is “redshifted” to longer wavelengths on its journey to the Earth: $\lambda_{\rm observed} = (1 + z)\,\lambda_{\rm emitted}$, where $\lambda$ is wavelength and $z$ is the redshift. From the redshift we can calculate both the distance to the galaxy and how long the light has been travelling towards us (figure 1). The higher the redshift the greater the distance to the galaxy and the longer the light has been travelling. A redshift of z = 1 means that the light has been travelling for about 60% of the age of the universe: therefore, we are observing the galaxy as it was when the universe was only 40% of its current age. A redshift of z = 2 means that we are seeing a galaxy as it was when the universe was only about 20% of its current age.
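The redshift relation and the look-back fractions quoted above can be checked with a short Python sketch. It assumes, purely for illustration, a flat universe containing only matter (the Einstein-de Sitter case), in which the age of the universe at redshift z scales as (1 + z)^{-3/2}; the function names are hypothetical.

```python
def observed_wavelength(emitted_nm: float, z: float) -> float:
    """Redshifted wavelength: lambda_observed = (1 + z) * lambda_emitted."""
    return (1.0 + z) * emitted_nm


def age_fraction_eds(z: float) -> float:
    """Age of the universe at redshift z as a fraction of its present age.

    Assumes a flat, matter-only (Einstein-de Sitter) universe, where the age
    is proportional to (1 + z) ** -1.5. This is an illustrative simplification.
    """
    return (1.0 + z) ** -1.5


for z in (0.2, 1.0, 2.0, 4.0):
    frac = age_fraction_eds(z)
    print(f"z = {z:3.1f}: universe at {frac:4.0%} of its present age; "
          f"light has travelled for {1 - frac:4.0%} of that age")
```

In this matter-only case the universe at z = 1 is about 35% of its present age, slightly below the 40% quoted above. The exact figure depends on the Hubble constant, the matter density and the cosmological constant, as the text notes.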

Until about 10 years ago, astronomers were only able to measure the properties of galaxies out to modest redshifts, z ≈ 0.2, when the universe was about 75% of its present age. Such studies established that there is considerable variation in the luminosity, colour and chemical composition of different galaxies. They also established the existence of two basic shapes of galaxies: ellipticals, which are found primarily in rich clusters, and spiral galaxies, like the Milky Way.

Heroic efforts, often spanning many years of painstaking observations, were required to obtain statistically useful samples at larger redshifts. Two such studies – one led by Simon Lilly then at the University of Hawaii, the other led by Richard Ellis then at Durham University in the UK – extended the study of basic galaxy properties to z ≈ 1, which corresponds to almost half the age of the universe. These studies provided the first widespread indications of substantial evolutionary changes in the properties of galaxies. For instance, the number of galaxies with a given luminosity changed as a function of redshift and, hence, as a function of time.

Entering the high-redshift era

The paucity of observations of the early stages of galaxy evolution came to a dramatic end in 1996. The breakthrough was achieved by the refurbished Hubble Space Telescope (HST), working in tandem with large, ground-based, optical and infrared telescopes, particularly the 10 metre Keck telescopes on Hawaii. This spectacular progress is epitomized by the “Hubble Deep Field” images, the result of an initiative led by Bob Williams at the Space Telescope Science Institute in Baltimore in the US. During two observing sessions in 1996 and 1998, the HST was pointed at two tiny patches of the sky more or less continuously for about two weeks and the data were immediately released to the astronomical community.

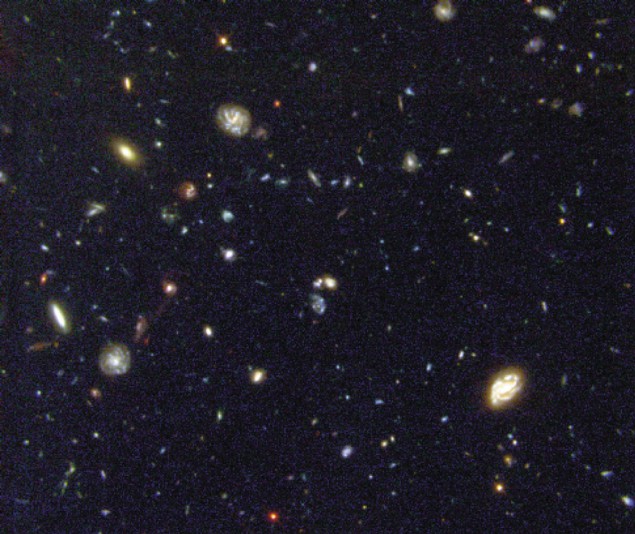

The first patch is an unremarkable piece of sky in the northern hemisphere; the second is centred on a distant quasi-stellar object, or quasar, in the southern sky. The images are the deepest ever made and provide a unique snapshot of the evolution of galaxies (see top of article). We now know that the most distant galaxies in the Hubble Deep Fields have redshifts in excess of 3.5, indicating that their light has been travelling towards us for about 85% of the age of the universe.

The Hubble Deep Fields hold the key to understanding how galaxies form and evolve – in this respect they are having a similar impact on the subject of galaxy formation to that of the fossil record on our understanding of the origin of species and the evolution of life.

The Hubble Deep Field images give the angular positions of more than 5000 galaxies on the sky, but no direct information about their distances or redshifts. Knowledge of distance is very important for extracting all the information contained in the images, but measuring all the redshifts directly by taking a spectrum for each galaxy would be a very time-consuming task. Furthermore, many of the galaxies are too faint for their spectra to be measurable even with a telescope as large as Keck.

A novel solution to the problem of determining distances to far-away galaxies was pioneered by Chuck Steidel of the California Institute of Technology and collaborators in the early 1990s. Galaxies should emit light over a wide range of wavelengths, and the thermal radiation from hot stars plus the line emission from helium and other heavier elements (known collectively as “metals” in astrophysics) can extend well into the ultraviolet.

However, Steidel and co-workers recognized that the ultraviolet spectrum of a distant galaxy would fall sharply to almost zero at wavelengths below 91.2 nm, the wavelength corresponding to the ionization energy or Lyman limit of hydrogen. This feature, known as a “break”, arises because the majority of photons emitted at higher frequencies are absorbed by hydrogen atoms in the galaxy itself or by hydrogen clouds that exist between the distant galaxy and us. Thus, the spectrum of a distant galaxy will show a break at the wavelength corresponding to the redshifted Lyman limit: for instance, the spectrum of a galaxy with a redshift of 3.0 will have a break at a wavelength of $\lambda = (1 + 3) \times 91.2~{\rm nm} = 364.8~{\rm nm}$.

Steidel and co-workers used a series of filters that only transmitted light in a range of wavelengths about 50-100 nm wide to observe galaxies. A filter transmitting in the range, say, 450-500 nm would detect a galaxy at z = 3, but this same galaxy would not be seen through a filter transmitting in the range 325-375 nm since it emits very little light at wavelengths shorter than 364.8 nm. Therefore, by subtracting the two images, galaxies with redshifts around z = 3 could be quickly identified and analysed in much greater detail.
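The dropout selection can be illustrated with a toy model in Python. The sketch assumes an idealized spectrum that is flat above the redshifted Lyman limit and exactly zero below it, top-hat filters spanning 325-375 nm and 450-500 nm as in the example above, and a hypothetical flux-ratio threshold of 0.5 for flagging a candidate. Real selections use measured colours, noise and calibrated filter curves.

```python
LYMAN_LIMIT_NM = 91.2  # rest-frame wavelength of the hydrogen Lyman limit


def toy_spectrum(observed_nm: float, z: float) -> float:
    """Idealized spectrum: flat above the redshifted Lyman limit, zero below.

    Real spectra are neither flat nor completely absorbed below the break;
    this crude shape is an assumption for illustration only.
    """
    break_nm = (1.0 + z) * LYMAN_LIMIT_NM
    return 1.0 if observed_nm >= break_nm else 0.0


def band_flux(z: float, lo_nm: float, hi_nm: float, steps: int = 1000) -> float:
    """Mean flux through a top-hat filter spanning [lo_nm, hi_nm] (midpoint rule)."""
    width = (hi_nm - lo_nm) / steps
    total = sum(toy_spectrum(lo_nm + (i + 0.5) * width, z) for i in range(steps))
    return total / steps


def is_lyman_break_candidate(z: float, threshold: float = 0.5) -> bool:
    """Flag a 'dropout': detected at 450-500 nm but faint at 325-375 nm.

    The threshold on the blue/red flux ratio is a hypothetical choice.
    """
    red = band_flux(z, 450.0, 500.0)
    blue = band_flux(z, 325.0, 375.0)
    return red > 0.0 and blue / red < threshold


for z in (1.0, 2.5, 3.0, 3.5):
    print(f"z = {z}: candidate = {is_lyman_break_candidate(z)}")
```

With these assumptions a galaxy at z = 3 passes only about 20% of its red-band flux through the blue filter and is flagged, while one at z = 1 looks equally bright in both bands and is rejected. This pair of toy filters picks out roughly 2.8 < z < 4.5.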

Using this simple “Lyman break” idea, large numbers of candidate high-redshift galaxies were identified and their individual spectra were subsequently measured with the Keck telescope. This technique has now been successfully applied to find large samples of galaxies at redshifts ranging from about z = 2 to z = 4. The most distant galaxy known to date, at z = 5.34, was discovered last year by Hy Spinrad of the University of California at Berkeley and collaborators using this technique.

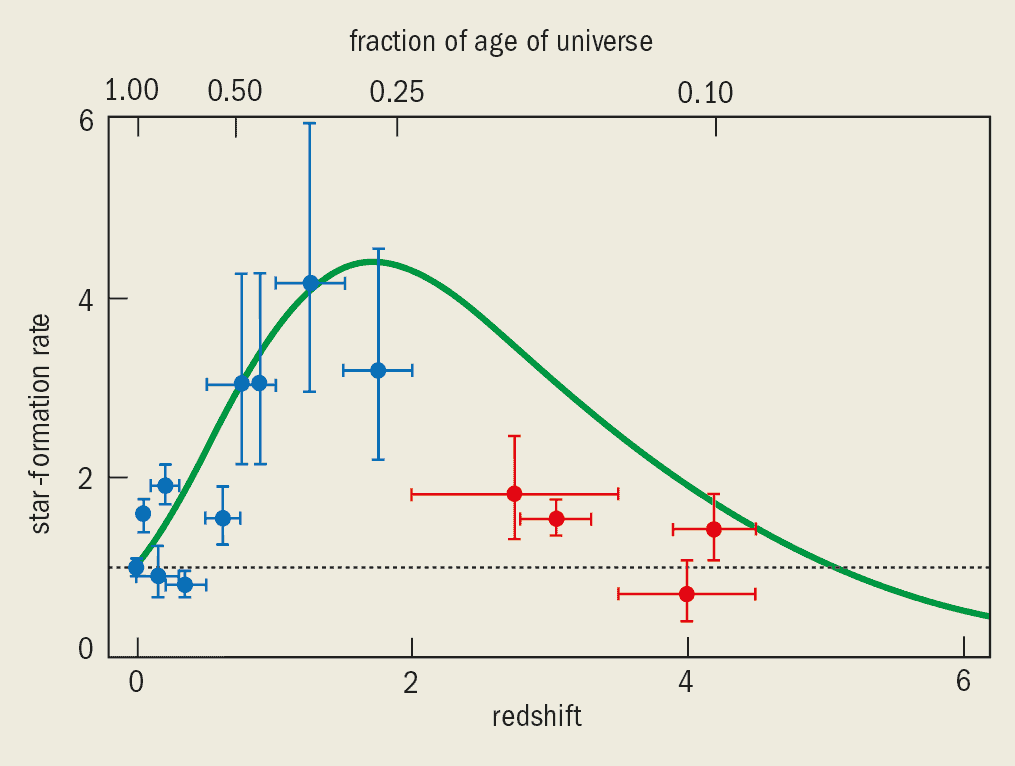

The application of the Lyman-break technique provided the first samples of genuinely high-redshift galaxies. Almost overnight, observational studies of distant galaxies were turned on their head. Among the first results that caused a commotion was a graph of the rate at which stars were being formed in galaxies from when the universe was only about 10% of its current age (z ≈ 4) to the present day (figure 2). This graph, first plotted by Piero Madau of the Space Telescope Science Institute in 1996, offers the prospect of tracking the entire cosmic history of star formation and the attendant production of the chemical elements. The results suggest that the star-formation rate has varied relatively little over time, peaking at only about four times the current rate when the universe was about 25% of its current age (z ≈ 2).

These findings surprised proponents of the view that galaxies had formed in one big burst of star formation at very high redshifts because, in this case, the star formation rate should have been orders of magnitude higher than the current rate. Over the past two years, it has been recognized that the interpretation of these data could be tricky because only the brightest galaxies are sampled at each redshift and, furthermore, much of the UV light from young stars could be absorbed by dust particles in the galaxy. However, the graph is in reasonable agreement with the hierarchical model of galaxy formation developed by the authors’ group and described in detail below.

The new data, together with the theoretical ideas discussed below, make it possible for the first time to meaningfully address key questions about the way in which galaxies were formed and evolved over 10 billion years of cosmic history. When did the first galaxies appear? What triggered the process of galaxy formation? Do all galaxies form at a single, well defined epoch or is galaxy formation spread out in time? Were the early proto-galaxies similar to present-day galaxies? Why do galaxies form with a huge range of luminosities? What are the processes that establish the observed relations between the various properties of galaxies such as their luminosity, colour and metallicity? Why are some galaxies spiral and others elliptical? And perhaps, most interestingly of all, what is the connection between the properties of the galaxy population and the physics of the early universe?

The physics of galaxy formation

Recent studies of the dynamics of galaxies confirm a fundamental inference made 25 years ago from studies of the kinematics of stars within galaxies: on scales larger than galactic nuclei, the dominant physical interaction is gravity. Furthermore, it is now well established that the predominant source of gravity is matter that does not emit detectable electromagnetic radiation. This “dark matter” is now routinely “observed” through the phenomenon of gravitational lensing – the relativistic deflection of the light from distant galaxies as it passes near an intervening great cluster of galaxies.

Dark matter behaves very differently from ordinary matter. In particular, it only interacts with other matter through gravity. One consequence of this is that dark-matter particles tend not to collide with other particles (dark or otherwise). This property, combined with the fact that it does not emit photons, means that dark matter does not lose energy very easily – a key consideration in models of galaxy formation.

The dominance of gravity leads directly to a general scheme for galaxy formation, first calculated by Lev Landau and Evgenii Lifshitz of the Institute of Physical Problems in Moscow in the 1950s and rigorously developed by Jim Peebles of Princeton University in the US during the 1960s and 1970s. The key concept is the instability experienced by small regions with higher-than-average matter densities in the expanding universe. The small fluctuations in the density of matter present in the early universe grow with time and eventually collapse to form a self-gravitating object – in other words an object held together by gravity. For a wide class of initial conditions, the abundance of such objects as a function of time can be calculated using a simple and surprisingly accurate ansatz proposed in 1974 by Bill Press and Paul Schechter at Harvard University in the US.
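The Press-Schechter ansatz can be sketched in a few lines of Python. In its standard form the fraction of all mass in collapsed objects heavier than M is erfc(ν/√2), where ν = δ_c/σ(M, z), δ_c ≈ 1.686 is the linear overdensity at which a spherical perturbation collapses, and σ(M, z) is the rms linear density fluctuation on mass scale M. The power-law normalisation and slope of σ(M) below are hypothetical choices, and growth proportional to 1/(1 + z) assumes a matter-only universe.

```python
import math

DELTA_C = 1.686  # linear overdensity at which a spherical perturbation collapses


def sigma(mass: float, z: float, m_ref: float = 1e13, alpha: float = 0.2) -> float:
    """rms linear density fluctuation on mass scale `mass` (solar masses) at redshift z.

    Hypothetical power law: sigma = 1 at m_ref today, falling as mass ** -alpha.
    Linear growth is taken as 1 / (1 + z), as in a matter-only universe.
    """
    return (mass / m_ref) ** (-alpha) / (1.0 + z)


def collapsed_fraction(mass: float, z: float) -> float:
    """Press-Schechter fraction of all mass in objects heavier than `mass`."""
    nu = DELTA_C / sigma(mass, z)
    return math.erfc(nu / math.sqrt(2.0))


for z in (3.0, 0.0):
    for m in (1e10, 1e12):
        print(f"z = {z}, M > {m:.0e} Msun: {collapsed_fraction(m, z):.3g}")
```

Because σ grows with time and is largest on small mass scales, the collapsed fraction rises towards low redshift and is always larger for low-mass objects.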

The process of gravitational instability sets the scene for galaxy formation. Although the precise details depend on the identity of the dark matter, under quite general conditions, we expect galaxy formation to proceed in the manner first outlined in 1978 by Simon White and Martin Rees at Cambridge University in the UK. First, the gravitational instability acting on the dark matter leads to the formation of self-gravitating dark-matter halos. These halos are aspherical distributions of matter with a maximum density at their centre. Gas, initially assumed to be well mixed with the dark matter, also participates in the collapse and is heated by shocks to the thermal temperature of the dark matter.
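The “thermal temperature” of the dark matter is usually called the virial temperature. A common convention for an isothermal halo with circular velocity V_c is T = μ m_p V_c²/(2k_B), where μ is the mean molecular weight (about 0.59 for fully ionized primordial gas) and m_p is the proton mass. The Python sketch below evaluates it; the 220 km/s example is an assumed, Milky-Way-like value.

```python
PROTON_MASS_KG = 1.67262192e-27
BOLTZMANN_J_PER_K = 1.380649e-23


def virial_temperature(circular_velocity_km_s: float, mu: float = 0.59) -> float:
    """Temperature (K) of gas shock-heated to equilibrium in a dark-matter halo.

    Uses the isothermal-sphere convention T = mu * m_p * V_c**2 / (2 * k_B),
    where V_c is the halo circular velocity and mu the mean molecular weight
    (0.59 assumes fully ionized primordial gas).
    """
    v = circular_velocity_km_s * 1e3  # km/s -> m/s
    return mu * PROTON_MASS_KG * v ** 2 / (2.0 * BOLTZMANN_J_PER_K)


for v_c in (220.0, 1000.0):
    print(f"V_c = {v_c:.0f} km/s: T = {virial_temperature(v_c):.2e} K")
```

For V_c = 220 km/s this gives roughly 1.7 × 10^6 K, and for a rich-cluster-like V_c ≈ 1000 km/s a few times 10^7 K.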

Just prior to gravitational collapse, however, the gravitational torques exerted by neighbouring clumps of matter supply angular momentum to both the gas and the dark matter, as proposed by Fred Hoyle at Cambridge in 1948. This means that the initial collapse will generically result in the formation of a spinning gaseous disk. These disks will become galaxies.

Before that, however, the hot gas must cool radiatively through bremsstrahlung, recombination and other atomic-physics processes. The cooling rate depends on the density and temperature of the gas, and cooling was most efficient at high redshift, when the universe was denser. Once the disk has become centrifugally supported, the material in it begins to fragment into stars by processes that are still poorly understood. In this simplified picture, the spheroidal components of galaxies can only form by mergers of disk galaxies.
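Why cooling favours high redshift can be seen from a rough estimate. The Python sketch below keeps only bremsstrahlung (emissivity ≈ 1.4 × 10^-27 T^{1/2} n_e n_i ḡ erg s^-1 cm^-3 for ionized hydrogen, with an assumed Gaunt factor ḡ ≈ 1.2) and ignores recombination and line cooling. At fixed temperature the cooling time then scales as 1/n, and if halo gas densities track the mean density of the universe, n ∝ (1 + z)^3. The present-day density of 10^-3 cm^-3 is a hypothetical value.

```python
import math

BOLTZMANN_ERG_PER_K = 1.380649e-16
SECONDS_PER_YEAR = 3.156e7


def bremsstrahlung_cooling_time(temperature_k: float, n_cm3: float,
                                gaunt: float = 1.2) -> float:
    """Cooling time in years for fully ionized hydrogen radiating by bremsstrahlung only.

    Thermal energy density: 3 * n * k * T (electrons plus protons, n_e = n_i = n).
    Emissivity: 1.4e-27 * sqrt(T) * n**2 * gaunt erg/s/cm^3.
    Recombination and line cooling are ignored in this sketch.
    """
    energy_density = 3.0 * n_cm3 * BOLTZMANN_ERG_PER_K * temperature_k
    emissivity = 1.4e-27 * math.sqrt(temperature_k) * n_cm3 ** 2 * gaunt
    return energy_density / emissivity / SECONDS_PER_YEAR


n_today = 1e-3  # hypothetical present-day halo gas density, cm^-3
for z in (0, 1, 3):
    n = n_today * (1 + z) ** 3  # assumes density tracks the mean cosmic density
    print(f"z = {z}: t_cool = {bremsstrahlung_cooling_time(1e6, n):.2e} yr")
```

At 10^6 K the sketch gives a cooling time of roughly 8 billion years for 10^-3 cm^-3, and one 64 times shorter for gas at the density such a halo would have at z = 3.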

The cosmological stage

The processes of gravitational instability and collapse, gas cooling and star formation operate under quite general conditions. A quantitative theory of galaxy formation, however, requires that two key cosmological questions be addressed: (a) what is the origin of primordial mass fluctuations, and (b) what is the identity of the dark matter? Great strides towards answering these questions were made in the early 1980s, largely by a fruitful interaction between particle physics and cosmology.

The first influential idea of the “new cosmology” was proposed around 1980 by Alan Guth of the Massachusetts Institute of Technology and elaborated on by Andrei Linde, then at the Lebedev Physics Institute in Moscow. Searching for a solution to the magnetic monopole problem, Guth proposed that the universe had undergone a period of exponential expansion, or “inflation”, very early on, triggered perhaps by the transition of a quantum field from a false minimum to the true vacuum. Quantum fluctuations generated during this epoch became established as classical ripples in the energy density of the universe (see Turner in further reading).

The subsequent evolution of the fluctuations depends on the values of three cosmological parameters – the Hubble constant, $H_0$, the matter density of the universe, $\Omega$, and the cosmological constant, $\Lambda$ – and on the identity of the dark matter. Inflation predicts that the universe is “flat” and will continue to expand for ever: this implies that $\Omega + \Lambda c^2/(3H_0^2) = 1$ (figure 1). For many years it was thought that the cosmological constant, $\Lambda$, was zero, but in the past year or so there has been some evidence that it is non-zero. (The constant was introduced by Einstein to convert the expanding universe predicted by the general theory of relativity into the stationary universe favoured at the time. However, this was before Edwin Hubble demonstrated that the universe was expanding. Einstein famously called the cosmological constant his biggest blunder, but recent observations suggesting that the expansion of the universe is accelerating may mean that he was correct in the first place.)
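The dependence of the age of the universe on $H_0$, $\Omega$ and $\Lambda$ can be made concrete for the flat case favoured by inflation. Writing $\Omega_\Lambda = \Lambda c^2/(3H_0^2) = 1 - \Omega$, the present age of a flat universe containing matter and a cosmological constant (radiation neglected) is $t_0 = \frac{2}{3H_0\sqrt{\Omega_\Lambda}}\,{\rm arsinh}\sqrt{\Omega_\Lambda/\Omega}$, which reduces to $2/(3H_0)$ when $\Lambda = 0$. The Python sketch below evaluates this; the $H_0$ and $\Omega$ values in the example are illustrative.

```python
import math

HUBBLE_TIME_GYR_TIMES_H0 = 977.8  # 1/H0 in Gyr when H0 is given in km/s/Mpc


def age_of_flat_universe(h0_km_s_mpc: float, omega_matter: float) -> float:
    """Present age (Gyr) of a flat universe with matter plus a cosmological constant.

    Flatness fixes Omega_Lambda = 1 - Omega_matter. Radiation is neglected.
    """
    omega_lambda = 1.0 - omega_matter
    hubble_time = HUBBLE_TIME_GYR_TIMES_H0 / h0_km_s_mpc
    if omega_lambda == 0.0:
        return 2.0 / 3.0 * hubble_time  # Einstein-de Sitter limit
    return (2.0 / (3.0 * math.sqrt(omega_lambda))
            * math.asinh(math.sqrt(omega_lambda / omega_matter))
            * hubble_time)


for omega in (1.0, 0.3):
    print(f"H0 = 65, Omega = {omega}: age = {age_of_flat_universe(65.0, omega):.1f} Gyr")
```

With $H_0$ = 65 km/s/Mpc this gives about 10 billion years for $\Omega = 1$ and about 14.5 billion years for $\Omega = 0.3$, spanning the 10 to 15 billion years quoted earlier in the article.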

The second key idea from the 1980s concerns the identity of the dark matter. The abundances of the light elements (hydrogen, deuterium, helium and lithium) produced during big bang nucleosynthesis agree with the abundances observed today, in particular that of deuterium, only if the total density of ordinary or baryonic matter is smaller than the total mass density by about an order of magnitude. (The total mass density can be inferred from, for example, the dynamics of galaxies on large scales.) Thus, a fundamental conclusion is that the dark matter must consist of non-baryonic elementary particles not included in the Standard Model of particle physics.

Particle candidates for the dark matter can be conveniently classified into “hot” and “cold” varieties, a nomenclature coined by Dick Bond, currently at the Canadian Institute for Theoretical Astrophysics in Toronto. The prototype of a hot particle is a stable neutrino with a mass of the order of 30 eV, while examples of cold particles include the neutralino and the much lighter axion. While neutrino mass is permitted within the Standard Model, neutralinos and axions require “new physics”. The neutralino, for example, is the lightest stable particle predicted by supersymmetry, the theory that seeks to unify the electroweak interaction and the strong force by allowing all fundamental particles to have “superpartners”. The superpartners of bosons (particles with integer spin) would be fermions (which have half-integer spin) and vice versa. Cold particles are often referred to as weakly interacting massive particles or WIMPs.
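The choice of about 30 eV for the prototype hot particle can be understood from the standard relic-abundance relation for a stable neutrino species, Ω_ν h² ≈ m_ν/(93 eV), where h is the Hubble constant in units of 100 km/s/Mpc. A short Python check, with illustrative values of h:

```python
def neutrino_density_parameter(mass_ev: float, h: float) -> float:
    """Density parameter Omega_nu of a stable relic neutrino species of given mass (eV).

    Uses the standard relic-abundance relation Omega_nu * h**2 = m_nu / 93.14 eV,
    with h = H0 / (100 km/s/Mpc).
    """
    return mass_ev / (93.14 * h ** 2)


for h in (0.5, 0.55, 0.6):
    print(f"h = {h}: Omega_nu(30 eV) = {neutrino_density_parameter(30.0, h):.2f}")
```

For h ≈ 0.55-0.6 a 30 eV neutrino gives Ω_ν close to 1, enough to account for all of the dark matter in a flat universe.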

There is a fundamental difference in the way in which galaxies are predicted to form in hot and cold dark-matter models. If the universe were dominated by massive neutrinos, then fluctuations that contain a mass less than some critical mass would be wiped out because the neutrinos, which move at relativistic speeds, can “free-stream” from the over-dense regions into adjacent under-dense areas. For a neutrino mass of 30 eV, this critical mass is about $10^{16}$ solar masses. In the case of cold dark matter, on the other hand, free-streaming is not important and density fluctuations persist on all scales.

In neutrino-dominated models, the first structures that can form are flat, pancake-like objects with masses comparable with the critical free-streaming mass. These are objects of supercluster scale and they must somehow fragment for galaxies to form. Computer simulations of this “top-down” process carried out at Berkeley in 1981 by Marc Davis, Simon White and one of the authors (CF) showed that to obtain the clustering of galaxies observed at the present time, galaxies could only form at redshifts z ≤ 1. However, in the early 1980s it was already known that quasars can have redshifts much higher than this figure. And today we know that there is a large population of galaxies at z = 3-5. Thus, although rather appealing at first, neutrino-dominated universes were soon abandoned.

The alternative, a cold dark-matter universe, proved to be much more successful, as shown, for example, in a series of computer simulations by Davis, George Efstathiou from Cambridge University, White and one of us (CF). The cold dark-matter model of cosmogony (as theories of the origins of the universe are known) has been explored in tremendous detail over the past 15 years and is now generally regarded as the “standard model” of structure formation.

The defining property of a cold dark-matter universe is that small-scale fluctuations are preserved at all times. Thus, subgalactic-mass halos are the first to collapse and separate out from the expansion of the universe. These halos then grow either gradually by accreting smaller clumps, or in big jumps by merging with other halos of comparable size. The timetable for the formation of structure is therefore hierarchical or “bottom-up” – small objects form first, larger objects form later. The spectrum of cold dark-matter fluctuations therefore completely specifies the evolution of the dark-matter halos into which the baryons must fall in order to make the galaxies.

The clumping of baryons, however, can only begin in earnest after the matter and radiation in the universe have ceased to interact through the Thomson scattering of photons by electrons. This event, known as decoupling, took place about 300 000 years after the big bang. Prior to decoupling, the radiation exerts a huge pressure that supports perturbations in the baryon density against gravitational collapse. It is possible to calculate very precisely how large these fluctuations have to be at the time of decoupling for them to grow and form galaxies. Such fluctuations are reflected in the temperature of the fireball radiation that has been freely propagating since it was last scattered at decoupling and is observed today as the cosmic microwave background.

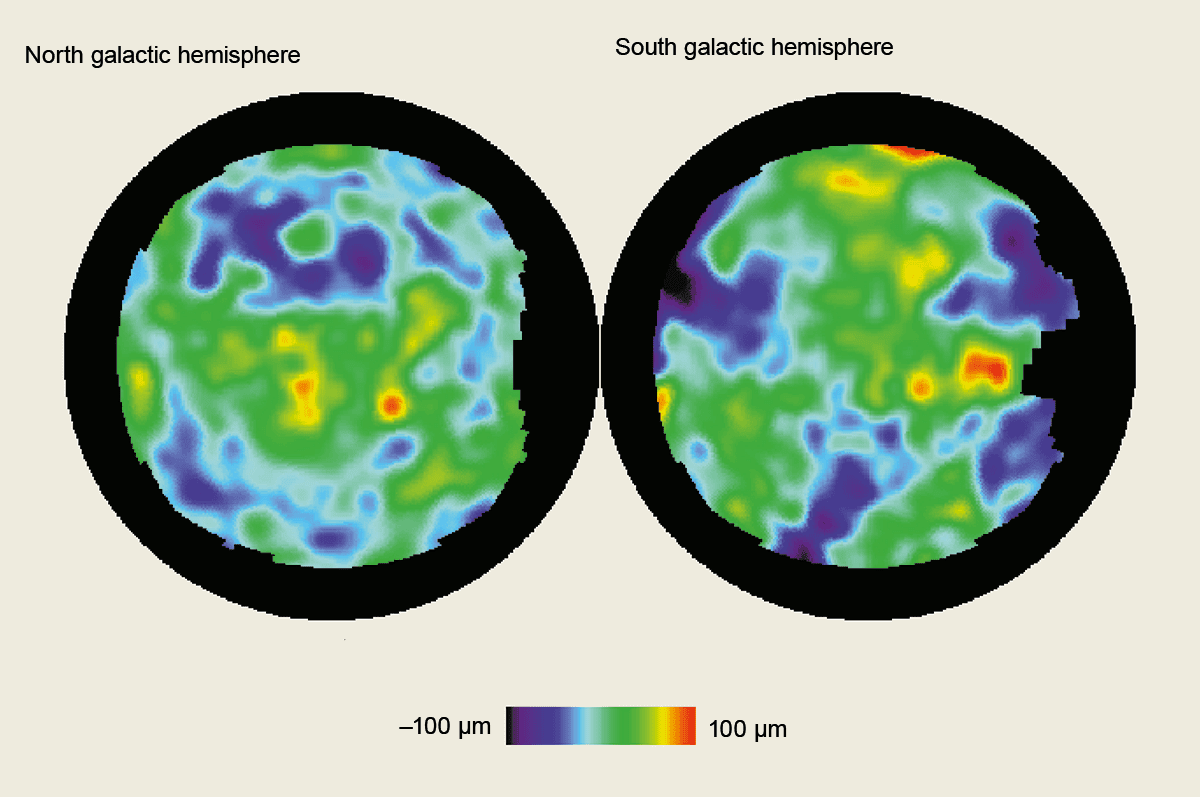

In April 1992 the team in charge of NASA’s Cosmic Background Explorer (COBE) satellite, led by George Smoot from Berkeley, announced the detection of temperature variations or anisotropies in the cosmic microwave background at the level of a few parts in $10^5$ (figure 3). The actual measurements were only about a factor of two higher than had been predicted in the simplest cold dark-matter models of the time. This discovery and the attendant theoretical predictions must count among the greatest achievements of science in the 20th century.

The COBE discovery confirmed the general picture of cosmic-structure formation by the gravitational amplification of small primordial fluctuations, and supported the cold dark-matter cosmogony, in particular its fundamental prediction that galaxies formed by hierarchical clustering of pre-galactic fragments.

Computer simulations of galaxy formation

The remarkable developments of the past 15 years – the idea of cosmic inflation, the development of the cold dark-matter model, the discovery of ripples in the microwave background, and the recent observations of galaxies at high redshift – have laid down very solid foundations for an understanding of galaxy formation. In particular, the “initial conditions” for the evolution of at least the dominant dark-matter component are fully specified.

But in spite of this, formulating an ab initio theory of galaxy formation and evolution over 10 to 15 billion years remains a tall order. The main stumbling block is our poor understanding of the behaviour of cosmic gas and the physics of star formation, and of the feedback between the two mediated by winds from massive stars and supernova explosions. To address these problems cosmologists must exit the cosy, elegant world of linear theory and pure gravity to enter the murky domain of gas dynamics and radiative astrophysics. The best way in is through extensive computer simulation and modelling.

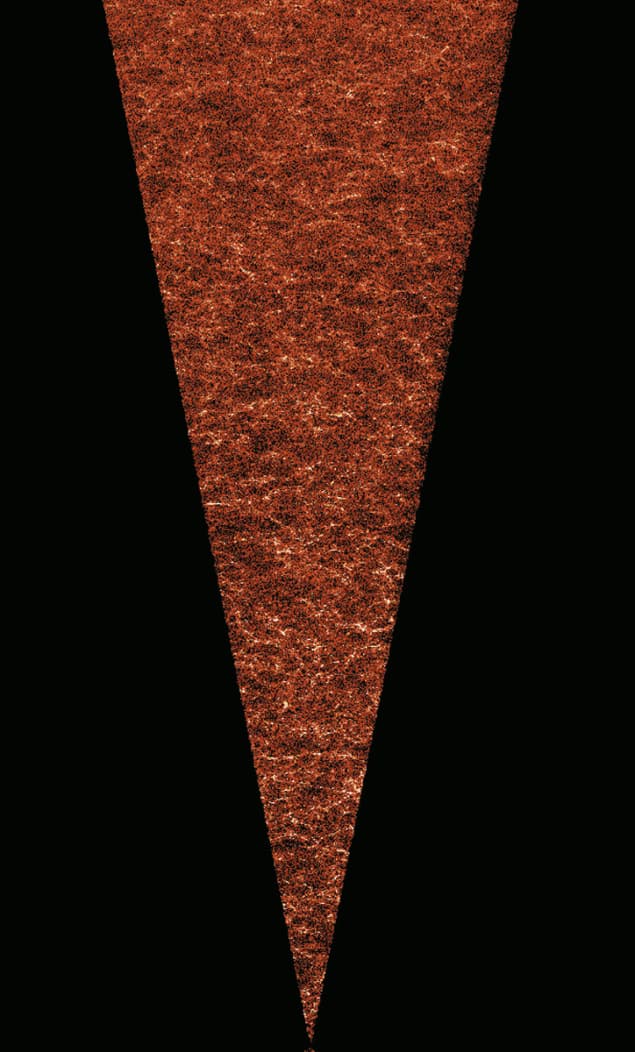

The basis for contemporary cosmological-simulation work is the N-body technique. Using various computationally efficient methods, the computer solves the coupled equations of motion of N particles in the expanding universe interacting only through gravity. (These “particles” are lumps of dark matter. The number of dark-matter particles in each lump depends on the type of dark matter included in the simulation, the computing resources available and the nature of the problem being addressed.) This is the approach that was used to rule out massive neutrinos as the dark matter and to develop the cold dark-matter cosmogony. To date, the largest simulation of the evolution of structure in a cold dark-matter universe was carried out by the Anglo-German Virgo consortium in 1998 (figure 4).
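At its core the N-body technique is a force calculation plus a time integrator. The Python sketch below uses the simplest choices: a direct O(N²) sum of Newtonian forces with Plummer softening (to avoid divergences in close encounters) and a kick-drift-kick leapfrog step, in units with G = 1 and in static rather than expanding space. Production cosmological codes instead work in comoving coordinates and replace the direct sum with much faster tree or mesh methods; all names here are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def accelerations(positions: List[Vector], masses: List[float],
                  softening: float) -> List[Vector]:
    """Direct O(N^2) sum of Plummer-softened Newtonian gravity (G = 1)."""
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = d[0] ** 2 + d[1] ** 2 + d[2] ** 2 + softening ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += masses[j] * d[k] * inv_r3  # pull of j on i
                acc[j][k] -= masses[i] * d[k] * inv_r3  # equal and opposite
    return acc


def leapfrog(positions: List[Vector], velocities: List[Vector], masses: List[float],
             dt: float, steps: int, softening: float = 0.01) -> None:
    """Advance particles in place with the kick-drift-kick leapfrog integrator."""
    acc = accelerations(positions, masses, softening)
    for _ in range(steps):
        for i in range(len(positions)):
            for k in range(3):
                velocities[i][k] += 0.5 * dt * acc[i][k]  # half kick
                positions[i][k] += dt * velocities[i][k]  # drift
        acc = accelerations(positions, masses, softening)
        for i in range(len(positions)):
            for k in range(3):
                velocities[i][k] += 0.5 * dt * acc[i][k]  # second half kick


def total_energy(positions: List[Vector], velocities: List[Vector],
                 masses: List[float], softening: float = 0.01) -> float:
    """Kinetic plus softened potential energy, consistent with the force law above."""
    kinetic = sum(0.5 * m * sum(v_k ** 2 for v_k in v)
                  for m, v in zip(masses, velocities))
    potential = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r2 = sum((positions[j][k] - positions[i][k]) ** 2 for k in range(3))
            potential -= masses[i] * masses[j] / math.sqrt(r2 + softening ** 2)
    return kinetic + potential


if __name__ == "__main__":
    # Two equal masses on a (nearly) circular orbit about their centre of mass.
    pos = [[-0.5, 0.0, 0.0], [0.5, 0.0, 0.0]]
    vel = [[0.0, -math.sqrt(0.5), 0.0], [0.0, math.sqrt(0.5), 0.0]]
    m = [1.0, 1.0]
    e0 = total_energy(pos, vel, m)
    leapfrog(pos, vel, m, dt=0.01, steps=1000)
    print("relative energy error:", abs((total_energy(pos, vel, m) - e0) / e0))
```

The leapfrog scheme is symplectic, so the energy of the pair stays close to its initial value over many orbits, which is one reason this family of integrators is popular in N-body work.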

The N-body technique can be augmented with numerical methods to model the hydrodynamic evolution of the cosmic gas. At present, most progress has been achieved in modelling the physics of primitive gas clouds at high redshift, the so-called “Lyman-α” clouds. The first simulations that can follow the evolution of gas from the time of decoupling to the present epoch with enough resolution to model the brightest galaxies are now being carried out by various groups around the world. The best simulations have a resolution approaching about 1 kiloparsec or about 3000 light-years. This is good enough to resolve galaxies, which are typically 10 kiloparsecs across, but is too coarse to resolve regions in which star formation is taking place, which are typically just 1 parsec across. (Most galaxies are thought to have black holes at their centres, but existing simulations do not have enough resolution to follow their formation.)

A complementary technique for simulating galaxy formation is known as semi-analytic modelling, and has been pioneered over the past five years by the authors, Shaun Cole, Cedric Lacey and co-workers at Durham, and by a group led by Guinevere Kauffmann of the Max Planck Institute for Astrophysics in Garching, near Munich. Recent work by our group shows that the N-body and semi-analytic approaches agree remarkably well.

In the semi-analytic approach one gives up the ideal of solving the equations of hydrodynamics directly. Instead, one uses a simple, spherically symmetric model in which the gas is assumed to have been fully shock-heated to the equilibrium temperature of each dark-matter halo. The cooling of the gas, and its accretion onto the halo, can then be calculated accurately. This simplification speeds up the calculations enormously and has the added advantage of bypassing resolution considerations, which are one of the main limiting factors in full hydrodynamic simulations.

Phenomenological models of star formation, feedback and metal enrichment by supernovae can be included in the semi-analytic programme. For instance, we know how much cold gas is needed to form a star, and it is relatively straightforward to apply this model to halos grown in an N-body simulation or derived from the Press-Schechter ansatz discussed above.
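The flavour of such phenomenological recipes can be conveyed by a toy bookkeeping model in Python. It tracks three reservoirs (hot halo gas, cold disk gas and stars) with hypothetical rules: hot gas cools on a timescale t_cool, cold gas forms stars on a timescale t_star, and supernova feedback returns β units of cold gas to the hot phase per unit mass of stars formed. This is not the Durham or Garching code; real semi-analytic models apply rules of this kind to every halo in a merger tree and compute cooling from the gas density and temperature.

```python
from dataclasses import dataclass


@dataclass
class GalaxyReservoirs:
    hot_gas: float   # shock-heated gas in the halo (arbitrary mass units)
    cold_gas: float  # gas that has cooled onto the disk
    stars: float     # mass locked up in stars


def evolve(gal: GalaxyReservoirs, dt: float, steps: int,
           t_cool: float = 2.0, t_star: float = 3.0,
           beta: float = 1.0) -> GalaxyReservoirs:
    """Forward-Euler integration of a toy hot -> cold -> stars cycle with feedback.

    All rates and parameters are hypothetical placeholders (times in Gyr):
      cooling rate        = hot_gas / t_cool
      star formation rate = cold_gas / t_star
      reheating rate      = beta * star formation rate (cold gas returned to hot phase)
    Total mass is conserved; recycling of gas from dying stars is ignored.
    """
    for _ in range(steps):
        cooling = gal.hot_gas / t_cool
        sfr = gal.cold_gas / t_star
        reheating = beta * sfr
        gal.hot_gas += dt * (reheating - cooling)
        gal.cold_gas += dt * (cooling - sfr - reheating)
        gal.stars += dt * sfr
    return gal


for beta in (0.0, 1.0, 3.0):
    g = evolve(GalaxyReservoirs(1.0, 0.0, 0.0), dt=0.01, steps=1000, beta=beta)
    print(f"beta = {beta}: stellar mass after 10 Gyr = {g.stars:.2f}")
```

Raising β delays the conversion of gas into stars, while the total mass in the three reservoirs stays fixed.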

The semi-analytic models reconstruct the entire star formation and merger history of the galaxy population. The models have surprisingly few free parameters and these can be fixed by, for example, matching the luminosity distribution predicted by the simulations to observed luminosities. This results in a fully specified model that provides an ideal tool for comparing the predictions of the cold dark-matter theory with observations of the high-redshift universe.

The state of play

The combination of direct N-body simulations and semi-analytic modelling has revealed in detail the manner in which galaxies are expected to form in the cold dark-matter model. The picture that emerges is one of gradual evolution punctuated by major merging events that are accompanied by intense bursts of star formation. These events also trigger the transformation of disks into spheroids.

The simulations at Durham show that galaxy formation stutters into action around a redshift of z = 5, and that only a tiny fraction of the stars present today would have formed prior to that time. By z = 3, the epoch of the Lyman-break galaxies, galaxy formation has started in earnest even though only 10% of the final population of stars has emerged. The midway point for star formation is not reached until about a redshift of z = 1-1.5, when the universe was approximately half its present age.

The agreement between the observations described above and our predictions – which were published two years earlier – is encouraging (figure 2). The major uncertainty comes from the possible obscuring effects of dust. But unless these effects turn out to be much larger than anticipated, we can be sure that we have tracked most of the star-formation activity and the associated production of chemical elements over the entire lifetime of the universe.

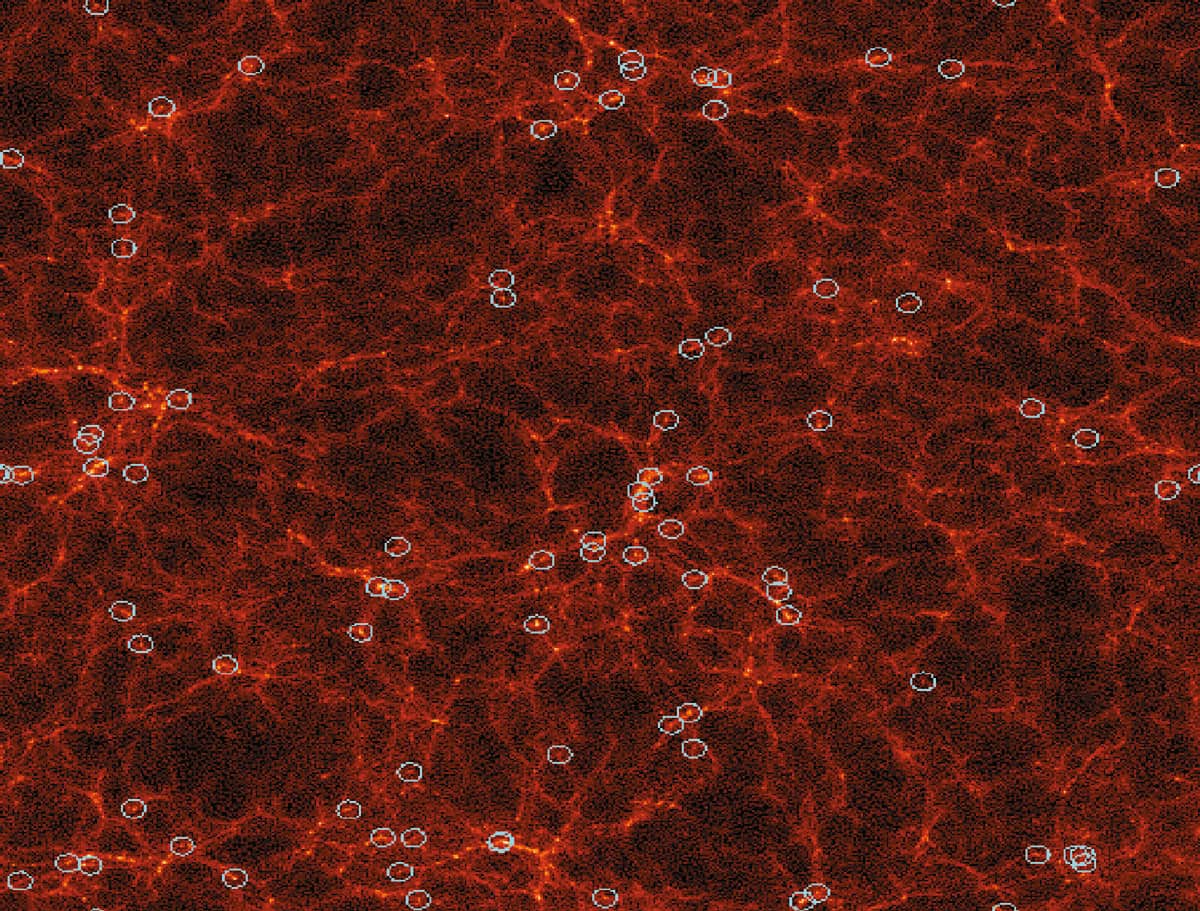

A second important prediction of the cold dark-matter theory that predated the observational measurement concerns the clustering properties of galaxies at high redshift. At the heart of the hierarchical clustering process lies the fact that galaxies tend to form first near high peaks of the density field because these are the first to collapse at any given time. This predilection for high-density regions is known as “biased galaxy formation” because the distribution of galaxies offers a biased view of the underlying distribution of mass.
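The strength of this bias can be estimated analytically. One standard result, the peak-background-split formula of Mo and White (1996), gives the linear bias of halos of peak height ν = δ_c/σ(M, z) as b = 1 + (ν² − 1)/δ_c, with δ_c ≈ 1.686 and σ the rms linear density fluctuation on the halo's mass scale. Rare, high peaks have ν well above 1 and hence b well above 1. This is offered as an illustration, not as the calculation made by the Durham group, and the Python function name is hypothetical.

```python
DELTA_C = 1.686  # critical linear overdensity for spherical collapse


def halo_bias(nu: float) -> float:
    """Linear bias of halos with peak height nu = DELTA_C / sigma(M, z).

    Peak-background-split result (Mo & White 1996): b = 1 + (nu**2 - 1) / DELTA_C.
    """
    return 1.0 + (nu ** 2 - 1.0) / DELTA_C


for nu in (1.0, 2.0, 3.0):
    b = halo_bias(nu)
    print(f"nu = {nu}: bias b = {b:.2f}, clustering boost b^2 = {b * b:.1f}")
```

For ν = 1, 2 and 3 the formula gives b ≈ 1.0, 2.8 and 5.7. Since the correlation function of the objects is roughly b² times that of the mass, halos on the rarest peaks are clustered tens of times more strongly than the mass.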

An important consequence of biased galaxy formation is that bright galaxies tend to be born in a highly clustered state and remain so for long periods of time. This signature was already recognized in the very first N-body simulations in the mid-1980s and was calculated for the case of Lyman-break galaxies by the Durham group in 1998 (figure 5). Remarkably, subsequent measurements of clustering among the Lyman-break galaxies by Steidel’s student Kurt Adelberger, at Caltech, and colleagues, agreed amazingly well with these theoretical predictions. (The hierarchical model also reproduces the clusters and superclusters of galaxies observed today, as well as other large-scale features such as voids and the “great wall”.)

The two areas of agreement between theory and data highlighted here – the cosmic history of star formation and the clustering of high-redshift galaxies – are particularly noteworthy because they concern fundamental aspects of the theory. There are also several other critical predictions of the theory that seem to be broadly consistent with the data.

To the authors, the broad agreement between models and data suggests that the main ingredients of a coherent picture of galaxy formation are now in place. These ingredients are as follows: a specific model for the form of the primordial density fluctuations; collisionless, non-baryonic dark matter; gravitational instability; and the growth of galaxies by hierarchical clustering. Galaxy formation is a protracted process that is still going on today. Simon White, now at the Max Planck Institute in Garching, recently summarized the situation rather neatly by saying that “Galaxy formation is a process, not an event.” There were no fireworks in the early universe, just a gradual build-up of the structure and stellar content.

Not everyone, however, agrees with our conclusions. Astronomers are a rather conservative bunch and many of them tend to view theorists with suspicion. A popular view held by many astronomers for a long time was that galaxies, particularly ellipticals, formed in what would have been a truly spectacular event: the monolithic collapse of a gigantic proto-galaxy at a redshift just beyond those accessible with large telescopes. This view – for which there has never been one shred of theoretical or empirical evidence – is rapidly becoming untenable and, in our opinion, it is just a matter of time before even the staunchest diehards finally relinquish this cherished belief. The paradigm shift will then be complete.

The next steps

There are justifiably high expectations for the next decade. The number of 10 metre class telescopes is proliferating: the first of the four “Very Large Telescopes” at the European Southern Observatory in Chile saw first light last year, and the Gemini telescopes on Hawaii and in Chile are scheduled to come into operation shortly. These will be followed in rapid succession by large telescopes being built by astronomers from Japan (the Subaru telescope on Hawaii), Spain (Gran TeCan on the Canary Islands) and the US (the Large Binocular Telescope on Mount Graham).

The middle of the next decade should also see the launch of NASA’s Next Generation Space Telescope that will search for galaxies at high redshift. Around the same time, two satellites that will map the microwave background radiation with unprecedented precision – NASA’s Microwave Anisotropy Probe (MAP) and the European Space Agency’s Planck satellite – will be launched. And towards the end of the decade the “large millimetre array” will be constructed in Chile to study galaxy formation at high redshift and star formation in nearby galaxies.

Ultimately, however, the cornerstone upon which much of modern cosmology rests is the idea of non-baryonic dark matter. Advanced experiments are already underway in Italy, the UK and the US, and they stand a good chance of detecting dark matter, if it really exists, within the next few years. That discovery, when it comes, will be the jewel in the crown of contemporary cosmology.