For more than 20 years, particle theory has left experiment far behind in its wake. Michael Riordan hopes that the Large Hadron Collider will help bring particle physics back to its experimental roots

With the Large Hadron Collider (LHC) springing to life at the CERN laboratory near Geneva, the great data drought in elementary particle physics is finally about to end. Not since the second phase of CERN’s Large Electron–Positron Collider (LEP) began operations in 1996 has the field been able to probe virgin territory and measure truly new and exotic phenomena. And that machine merely doubled the energy reach of electron–positron colliders into regions that had already been partially explored using the Tevatron at Fermilab in the US. Researchers at these colliders — the world’s most powerful for over a decade — could only chip away at the outer fringes of the unknown. But the LHC, built by installing thousands of superconducting magnets in the LEP tunnel, will permit physicists to strike deep into its dark heart. There they will almost certainly discover something distinctively different.

When it finally attains its design parameters, the LHC will have seven times the collision energy of the Tevatron. And the machine’s absolutely bruising proton–proton collision rate, what physicists call its “luminosity,” should eventually come in 100 times higher than that of the US facility. Taken together, these advances in accelerator technology will extend the experimental reach of high-energy physics almost as dramatically as did the early particle colliders ADONE in Italy, the CERN Intersecting Storage Rings (ISR) and SPEAR at the Stanford Linear Accelerator Center (SLAC) during the 1970s, a tumultuous decade that culminated in today’s dominant paradigm of particle physics, the Standard Model. Expectations are high that the particles soon to appear at the LHC will equal or perhaps even exceed in importance the discoveries that led to this achievement.

It has been a long, long wait. During the intervening decades, particle theory has leapt far beyond experiment, to unattainable energy levels and correspondingly tiny distances that humans can never hope to experience — at least not directly. First came supersymmetry during the 1970s, an outgrowth of the gauge theories that had just proved so successful in unifying the weak and electromagnetic forces. These SUSY theories, as they came to be known, extended the unification programme by incorporating the strong and the electroweak forces into a grand, all-encompassing whole. They also predicted a plethora of detectable new particles with masses tens to hundreds of times greater than the mass of the familiar proton.

What left experiment hopelessly in defeat and completely unable to respond meaningfully were the string theories of the 1980s. In the absence of any agreed-upon criterion of verifiability to limit their population, those early string theories began to multiply unfettered like rabbits in Australia. The only criteria that limited their numbers were subjective ones like mathematical consistency and elegance. Historians of science began to note a subtle but important shift in the manner of doing physics, in which observations no longer seemed to matter as much — at least not to string theorists — in the process of justifying theories. Particle physicists could only complain, and some did so loudly, that the field was reverting to metaphysics and philosophy.

Rags to riches

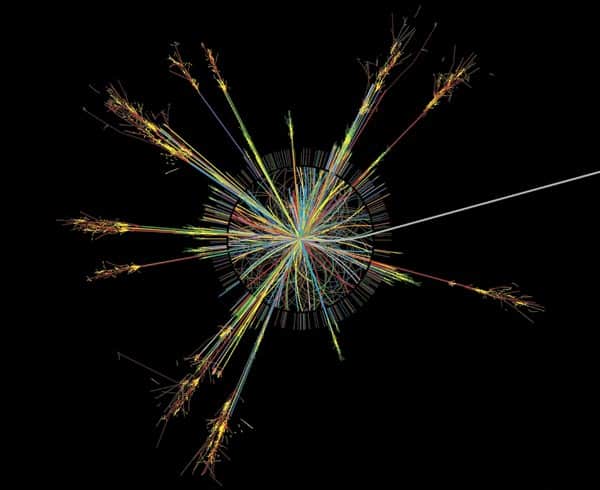

This sorry state of scientific affairs is happily coming to an end. The LHC’s two enormous detectors, ATLAS and CMS, which together sport enough metal to rebuild the Eiffel Tower, will soon be clobbered by vast numbers of energetic particles spewing from the violent proton–proton collisions in their midst. While the Tevatron gives physicists insights into the particles and processes occurring at energies up to a few hundred billion electron-volts and at distances down to almost 10⁻¹⁸ m, the LHC will permit them to observe what happens at several trillion electron-volts (TeV) and distances 10 times smaller.
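The link between energy and distance quoted here follows from the rule of thumb that a probe of energy E resolves lengths of order ħc/E. The short Python sketch below is only an order-of-magnitude illustration; the function names are hypothetical, and the constant is the standard value ħc ≈ 197.327 MeV fm expressed in GeV m.

```python
# Reduced Planck constant times the speed of light, in GeV * metres
# (the standard value of about 197.327 MeV * fm, converted).
HBAR_C_GEV_M = 1.97327e-16


def energy_scale_tev(length_m: float) -> float:
    """Rough energy, in TeV, needed to probe a distance of length_m metres."""
    return HBAR_C_GEV_M / length_m / 1000.0


def length_scale_m(energy_tev: float) -> float:
    """Rough distance, in metres, probed at an energy of energy_tev TeV."""
    return HBAR_C_GEV_M / (energy_tev * 1000.0)


# Illustrative inputs only: the two distances mentioned in the text.
for label, length in [("Tevatron-era reach", 1e-18), ("ten times smaller", 1e-19)]:
    print(f"{label}: {length:.0e} m ~ {energy_scale_tev(length):.2f} TeV")
```

On this estimate 10⁻¹⁸ m corresponds to roughly 0.2 TeV, and a distance ten times smaller to roughly 2 TeV, in line with the “few hundred billion” and “several trillion” electron-volts mentioned above.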

One of the early targets will be the long-sought and much-touted Higgs boson (or bosons), the capstone of the Standard Model thought to imbue most of its fundamental entities with their intrinsic masses. Experiments so far at LEP and the Tevatron have constrained the mass of a single, standard Higgs boson to lie between 0.114 and about 0.150 TeV, a low and very narrow range of energies, given existing capabilities. But the Tevatron has nowhere near the LHC’s potential luminosity, which should prove crucial in tracking down this rare, elusive ghost. Fermilab researchers may still catch a fleeting glimpse of the Higgs boson in their remaining experimental runs, but its bona fide, full-fledged discovery will almost certainly require the LHC.

But the curiously low mass of the Higgs boson poses another conundrum, known as the “mass hierarchy problem”. According to quantum field theory, huge quantum corrections should boost the mass of the Higgs boson (and those of the other fundamental particles) to near the Planck-mass level of 10¹⁶ TeV, orders of magnitude higher than that required by precision experiments. What keeps its mass so low?

Supersymmetry provides a clever, if cumbersome, answer to this problem by predicting the existence of supersymmetric partners for all Standard Model particles — a photino for the photon, a selectron for the electron, a squark for every quark, and so on. Such exotic new particles naturally cancel out these nasty corrections in the theory and keep the masses of the Standard Model particles relatively low. Although these superparticles can weigh in substantially higher than their Standard Model partners, they cannot have masses above a few TeV or the delicate cancellations get out of whack. If supersymmetry is indeed the ultimate resolution of the hierarchy problem, these particles must finally turn up at LHC energies.

Actually discovering superparticles will not be easy, however. For one thing, there is a bewildering variety of possible theories, leading to a vast array of predictions for observable particles with a broad range of potential masses and decays. As SLAC theorist James “BJ” Bjorken once cautioned me, “SUSY is an awfully slippery lady!”

Another problem is that most superparticles will decay into invisible particles that escape the detector unobserved. Such occurrences will thus become manifest as missing transverse energy — an imbalance in the distribution of visible energy perpendicular to the beam direction. However, similar deficits can also be caused by invisible Standard Model particles such as neutrinos or by gaps in a detector’s angular coverage. In order to establish that a new and unusual particle has indeed appeared inside their detector, therefore, experimenters must accurately calculate and subtract all such backgrounds, which can be substantially larger than the signal that they are trying to extract. This is a hugely difficult task, compounded by the fact that the expected signal will probably not appear as a sharp resonance peak but will be broadened instead by the motions of other decay particles. And in the LHC, signals are diluted by helter-skelter motions of the quarks and gluons within the colliding protons — a messy process that theorist Richard Feynman once likened to “colliding garbage cans with garbage cans” to see what is inside.
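To make the idea of missing transverse energy concrete, here is a minimal Python sketch. It assumes each visible particle is summarized by a transverse momentum in GeV and an azimuthal angle around the beam axis; the function name and the toy events are hypothetical, and a real analysis would add calibration, detector acceptance and the background subtraction described above.

```python
import math


def missing_transverse_energy(visible):
    """Return (met, phi_miss) for a list of (pt, phi) pairs.

    pt is the transverse momentum in GeV and phi the azimuthal angle in
    radians. The missing transverse momentum is minus the vector sum of
    the visible transverse momenta; met is its magnitude.
    """
    sum_px = sum(pt * math.cos(phi) for pt, phi in visible)
    sum_py = sum(pt * math.sin(phi) for pt, phi in visible)
    met = math.hypot(sum_px, sum_py)
    phi_miss = math.atan2(-sum_py, -sum_px)
    return met, phi_miss


# Toy events (invented numbers, for illustration only).
balanced = [(50.0, 0.0), (50.0, math.pi)]      # two back-to-back jets
unbalanced = [(100.0, 0.0), (40.0, math.pi)]   # something invisible escaped

print(missing_transverse_energy(balanced))
print(missing_transverse_energy(unbalanced))
```

The balanced event gives essentially zero missing energy, while the unbalanced one is short by about 60 GeV, pointing opposite to the harder jet. The same deficit would appear whether the escaping particle were a neutrino or a superparticle, which is why the backgrounds matter so much.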

In contrast, take the startling signals that led to the first evidence for quarks in the late 1960s and early 1970s. These were huge — orders of magnitude larger than the backgrounds that remained after simple cuts had been applied to the data to eliminate obvious junk. The first-generation ISR detectors, for example, were swamped by hordes of hadrons spewing out at large angles from hard collisions of the protons’ constituent quarks and gluons. And in November 1974, a narrow peak erupted in the hadron-production cross-section at the SPEAR electron–positron collider that was about 1000 times above baseline. These blockbusting signals were impossible to miss!

If they exist, superparticles will leave no such obvious footprints for the two industrial-strength LHC experimental collaborations, each involving over 1000 physicists. Tracking down such evanescent, elusive prey may well prove arduous work, fraught with ambiguity. Here is where subjectivity can creep into the experimental enterprise. Many physicists, theorists and experimenters alike, would dearly love to see supersymmetry proven true, for it solves so many nagging problems in a single stroke. Not only does it resolve the hierarchy problem, but it can also readily account for the mysterious dark matter in the universe, the indirect evidence for which has become overwhelming. And string theories absolutely require it. But the urge to be the first to make important discoveries can lead eager experimenters to tune data cuts, underestimate backgrounds, and thus unwittingly fabricate the very results they yearn to unearth.

Physicists who cried Higgs

This unfortunate process has occurred too often in the history of physics, and spurious Higgs-boson discoveries seem to be particularly recurrent examples. One false alarm occurred at LEP just before its scheduled shutdown in 2000. In this episode, which made front-page headlines in major newspapers such as the New York Times, three of the four LEP collaborations reported excess events — six or seven, all told — that were eagerly interpreted to be decays of a 0.115 TeV Higgs boson. The evidence was intriguing, but not conclusive enough to convince the CERN management to extend LEP’s life into 2001 and so delay construction of the LHC. When the dust began to settle months later, it turned out that certain experimenters had underestimated the possible errors in their measurements and thus exaggerated the statistical significance of the apparent signal. What they finally concluded was only that the mass of the Higgs boson must lie above 0.114 TeV, with a confidence level of 95%. There is still an outside chance that the apparent phenomenon was indeed real, but few physicists are betting on it.

More recently, there was a similar surge of rumours that a Higgs boson with a mass of 0.160 TeV had been glimpsed at Fermilab in early 2007 (see “The tale of the blogs’ boson”). The possibility that such a boson could readily be accounted for by a SUSY theory after minor tuning of its theoretical parameters added fuel to these rumours, which erupted after the results were tentatively revealed at an informal January workshop. It did not appear to matter that this was only a “2σ” effect, a fluctuation of two standard deviations above background levels, which can occur at random with a probability of 5%. As 2σ fluctuations happen all the time, sceptical experimenters usually do not take them very seriously. Three standard deviations is the absolute minimum, corresponding to only a 0.3% chance of a random fluctuation, and 5σ is the “gold standard” for particle physics. But the allure of finding evidence for supersymmetry, abetted by journalists eager for a scoop, must have proved too difficult to resist.
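The percentages attached to these σ levels can be checked with a few lines of Python. The sketch below assumes purely Gaussian fluctuations; the function names are hypothetical. The 5% and 0.3% figures correspond to the two-sided convention (a fluctuation in either direction), whereas particle physicists often quote the one-sided probability of an upward excess, which is half as large.

```python
import math


def two_sided_p(n_sigma: float) -> float:
    """Chance of a Gaussian fluctuation at least n_sigma away, in either direction."""
    return math.erfc(n_sigma / math.sqrt(2.0))


def one_sided_p(n_sigma: float) -> float:
    """Chance of a Gaussian fluctuation at least n_sigma above the mean."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


for n in (2, 3, 5):
    print(f"{n} sigma: two-sided {two_sided_p(n):.2e}, one-sided {one_sided_p(n):.2e}")
```

Two standard deviations come out at roughly 4.6% and three at roughly 0.27%, matching the rounded figures in the text, while 5σ corresponds to well under one chance in a million.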

While similar rushes to judgment may well occur at the LHC, I suspect the innate scepticism of the experimenters will restrain them and eventually win out, as it did in the above cases. Experimental particle physicists have developed truly powerful analysis techniques, such as neural-network analysis software that learns on the job and improves as the data roll in, to cope with the suffocating backgrounds encountered at hadron colliders. They have begun employing “blind” data analyses, in which the end results are deliberately not viewed until after enough data have come in, to limit the subjective tuning of experimental cuts. And the cautious vetting processes of these huge collaborations, in which tentative discoveries run a gauntlet of internal reviews before ever becoming official, further militate against releasing incorrect results. If any doubt remains, the LHC’s tremendous experimental reach should act as the final judge. For this collider can generate events with TeV missing energies, where most Standard Model backgrounds plummet. If a SUSY signal is indeed present, it should ultimately stand out rather distinctively in this domain.

Gravity’s rainbow

Another area of intense theoretical and experimental interest is the possibility, raised in the last decade or so, of observing gravity at work in particle collisions. All string theories involve extra spatial dimensions beyond the three familiar ones, but in certain classes of string theories that have been studied since the mid-1990s, some of these hidden dimensions are far larger than the usual 10⁻³⁵ m Planck scale. They can be curled up, or “compactified,” at length scales up to about 10⁻¹⁸ m and still not violate existing short-range tests of Newtonian gravity. These lengths correspond to the TeV energy scale that the LHC is about to explore. This is tantamount to dragging the Planck scale down to a much lower energy level, thus making gravity much stronger there than we experience at macroscopic distances. If these more recent string theories are valid at such lengths and energies, then exotic new phenomena should begin to appear at the LHC. For hardened sceptics like me, this is a truly fabulous turn of events. String theories, or at least some of them, may have made testable, falsifiable predictions!

A possible manifestation of large extra dimensions would be the appearance at the LHC of single jets — tightly collimated sprays — of hadrons recoiling against an invisible particle, leading to missing energy in the opposite direction. Such “monojet” events could be interpreted as production of a gluon plus a graviton (the hypothetical elementary particle that mediates the gravitational force), which occurs because gravity is so much stronger at this scale than previously imagined. But like SUSY events, these monojet events suffer from huge Standard Model backgrounds, and experimenters would have to distinguish any excess from the emergence of SUSY itself. About the only way to do so is by determining the spin of the invisible particles (gravitons have spin-2), which may be a tricky affair with the LHC.

Fortunately, there is a distinctive signal required by theories with large extra dimensions that should be observable if they indeed exist: Kaluza–Klein excitations. Predicted way back in the 1920s by Theodor Kaluza and Oskar Klein, who worked with 5D field theories, these are multi-TeV versions of the photon and Z-boson. They would decay to electron–positron or muon pairs that should stand out sharply from the LHC’s choking hadronic backgrounds, just like the famous J-particle did at the Brookhaven National Laboratory in the US in late 1974. In fact, there could be observable “towers” of these excitations, repeating series of them stretching to ever higher energies. But here again, even if such tantalizing excesses of these lepton pairs turn up, the LHC researchers will still have to find ways to distinguish them from other possibilities.

There are many other potential theories and phenomena under consideration by physicists as they await the great data flood expected at the LHC over the next few years. If gravity does indeed grow strong enough at the multi-TeV scale, for example, micro-black-holes may be created profusely. What will appear at the LHC is still any theorist’s guess. Most likely, something completely unexpected will eventually show up in ATLAS and CMS. Whenever the experimental reach is extended so far, history suggests, something usually does.

And there will, of course, be the usual blind alleys and wrong turns taken by too-eager experimenters trying to beat the competition and establish their scientific reputations. That is to be expected in the normal course of such a thrilling scientific endeavour. But with a healthy, active intercourse re-established once again between theory and experiment, I am confident that the truth will ultimately emerge from any such confusion — along with a strikingly new physics landscape that few could have anticipated.