Measurement is at the heart of physics. As physical quantities have been measured with greater and greater accuracy, our knowledge of the world has improved. This applies equally to measurements on the subatomic and intergalactic scales, although the relative error bars are very different. The Planck constant is known to an accuracy of one part in ten million, for instance, whereas the uncertainty in the Hubble constant is about 10%.

This year marks the 125th anniversary of the signing of the “metre convention” in Paris and the creation of the Bureau International des Poids et Mesures. It is also the 100th birthday of the National Physical Laboratory (NPL) in the UK. Other standards labs have similarly long histories: the Physikalisch-Technische Bundesanstalt (PTB) in Germany dates back to 1887, while the forerunner of the US National Institute of Standards and Technology was set up in 1901. Then, as now, the laboratories were responsible for maintaining the measurement standards that underpin national and international commerce.

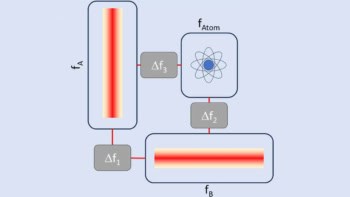

Back in those days the metre was based on the size of the Earth, as one ten-millionth of a quadrant of its meridian; today it is defined in terms of the speed of light. Redefining the seven SI base units in terms of the fundamental physical constants is a long-term goal in metrology. The body that recommends values for the physical constants, the Committee on Data for Science and Technology (CODATA), has just published the “1998 CODATA recommended values”, which are based on all the data available at the end of 1998.

As might be expected, the accuracy of most of the values has improved since the 1986 recommended values. There is, however, one exception: the gravitational constant, G. The recommended value of “big G” is now 6.673 × 10⁻¹¹ m³ kg⁻¹ s⁻² (metres cubed per kilogram per second squared), with an uncertainty of 1.5 parts in a thousand. This uncertainty is about ten times larger than that of the 1986 value. The reason for the increase is a puzzling measurement at the PTB that differed from the existing value by more than 40 standard deviations.
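The uncertainty figures quoted for big G can be turned into absolute numbers with a little arithmetic. The sketch below converts the 1998 relative uncertainty into an absolute one and shows how a discrepancy between two results is usually expressed in standard deviations, assuming independent errors combined in quadrature. The function names and the inputs to the second example are hypothetical, chosen only for illustration.

```python
import math

# 1998 CODATA recommended value of G quoted in the text, in m^3 kg^-1 s^-2.
G_1998 = 6.673e-11
REL_UNC_1998 = 1.5e-3  # "1.5 parts in a thousand"


def absolute_uncertainty(value: float, relative: float) -> float:
    """Convert a relative (fractional) standard uncertainty into an absolute one."""
    return value * relative


def discrepancy_in_sigma(a: float, sigma_a: float, b: float, sigma_b: float) -> float:
    """Difference between two results in units of their combined standard
    uncertainty, assuming independent errors added in quadrature."""
    return abs(a - b) / math.hypot(sigma_a, sigma_b)


sigma_1998 = absolute_uncertainty(G_1998, REL_UNC_1998)
print(f"G = ({G_1998:.3e} +/- {sigma_1998:.1e}) m^3 kg^-1 s^-2")

# Hypothetical pair of measurements, for illustration only: the gap of 5
# units equals one combined standard deviation, since sqrt(3^2 + 4^2) = 5.
print(discrepancy_in_sigma(10.0, 3.0, 15.0, 4.0))
```

The first line printed is `G = (6.673e-11 +/- 1.0e-13) m^3 kg^-1 s^-2`, the same value that is often written in shorthand as 6.673(10) × 10⁻¹¹. Under this convention, a result “more than 40 standard deviations” away is one whose gap from the accepted value exceeds 40 times the combined uncertainty.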

The same problem that confronts big G researchers, namely that gravity is weaker than the other three fundamental forces by many orders of magnitude, is also a major challenge for many astrophysicists. Detecting a gravitational wave must surely be the most difficult experiment in physics: it requires measuring tiny changes (about 1% of the size of a proton) in the separation of two masses that are several kilometres apart. There are even plans to place a gravitational-wave detector in space: the LISA mission will involve three satellites separated by five million kilometres.
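To put “about 1% of the size of a proton” in perspective, the displacement can be divided by the separation of the masses to give the fractional length change, or strain, that a ground-based detector must resolve. The sketch below is an order-of-magnitude estimate only; the proton diameter of roughly 1.7 × 10⁻¹⁵ m and the 4 km separation are assumptions chosen for illustration, not figures from the text.

```python
# Order-of-magnitude estimate of the strain a ground-based detector must resolve.
# ASSUMPTIONS (not from the text): proton diameter ~1.7e-15 m, arm length 4 km.
PROTON_DIAMETER_M = 1.7e-15  # assumed: roughly twice the proton charge radius
ARM_LENGTH_M = 4.0e3         # assumed: "several kilometres" taken as 4 km


def strain(displacement_m: float, baseline_m: float) -> float:
    """Fractional change in separation, h = delta_L / L."""
    return displacement_m / baseline_m


delta_L = 0.01 * PROTON_DIAMETER_M  # "about 1% of the size of a proton"
h = strain(delta_L, ARM_LENGTH_M)
print(f"delta_L ~ {delta_L:.1e} m, strain h ~ {h:.1e}")
```

With these assumptions the strain comes out at a few parts in 10²¹. Changing either assumed figure within reason shifts the answer by a factor of order unity rather than by orders of magnitude.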

Those who test the equivalence principle, the principle that the gravitational and inertial masses of bodies are the same, face similarly daunting challenges. However, as a workshop organized by the European Space Agency and CERN heard last month, the technology needed for such experiments, such as ultrastable lasers and drag-free satellites, now exists. We are on the verge of an exciting, yet immensely difficult, age of experimental gravitational physics and measurement science.