The publish-or-perish ethic too often favours a narrow and conservative approach to scientific innovation. Mark Buchanan asks whether we are pushing revolutionary ideas to the margins.

In 1890 an electricity company enticed the German physicist Max Planck to help it in its efforts to make more efficient light bulbs. Planck, as a theorist, naturally started with the fundamentals and soon became enmeshed in the thorny problem of explaining the spectrum of black-body radiation, which he eventually did by introducing the idea (a “purely formal” assumption, as he then considered it) that electromagnetic energy can only be emitted or absorbed in discrete quanta. The rest is history. Electric light bulbs and mathematical necessity led Planck to discover quantum theory and to kick-start the most significant scientific revolution of the 20th century.

Around the same time, Planck’s colleague Wilhelm Röntgen was experimenting with cathode rays when he noticed an odd glow coming from a fluorescent screen some distance away that was not part of the intended experiment; in so doing he discovered X-rays, and helped propel medicine into the modern era. Of course, it is not just German scientists who make world-changing discoveries by unexpected paths. In 1964 US physicists Arno Penzias and Robert Wilson famously detected the cosmic microwave background radiation in annoying noise that they could not eliminate from their cryogenic microwave receiver at Bell Labs.

This is how discovery works: returns on research investment do not arrive steadily and predictably, but erratically and unpredictably, in a manner akin to intellectual earthquakes. Indeed, this idea seems to be more than merely qualitative. Data on human innovation, whether in basic science or technology or business, show that developments emerge from an erratic process with wild unpredictability. For example, as physicist Didier Sornette of the ETH in Zurich and colleagues showed a few years ago, the statistics describing the gross revenues of Hollywood movies over the past 20 years do not follow normal statistics but a power-law curve, closely resembling the famous Gutenberg–Richter law for earthquakes, with a long tail for high-revenue films. A similar pattern describes the financial returns on new drugs produced by the bio-tech industry, on royalties on patents granted to universities, or stock-market returns from hi-tech start-ups.

What we know of processes with power-law dynamics is that the largest events are hugely disproportionate in their consequences. In the metaphor of Nassim Nicholas Taleb’s 2007 best seller The Black Swan, it is not the normal events, the mundane and expected “white swans” that matter the most, but the outliers, the completely unexpected “black swans”. In the context of history, think 11 September 2001 or the invention of the Web. Similarly, scientific history seems to pivot on the rare seismic shifts that no-one predicts or even has a chance of predicting, and on those utterly profound discoveries that transform worlds. They do not flow out of what the philosopher of science Thomas Kuhn called “normal science” — the paradigm-supporting and largely mechanical working out of established ideas — but from “revolutionary”, disruptive and risky science.

Squeezing life out of innovation

All of which, as Sornette has been arguing for several years, has important implications for how we think about and judge research investments. If the path to discovery is full of surprises, and if most of the gains come in just a handful of rare but exceptional events, then even judging whether a research programme is well conceived is deeply problematic. “Almost any attempt to assess research impact over a finite time”, says Sornette, “will include only a few major discoveries and hence be highly unreliable, even if there is a true long-term positive trend.”
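Sornette's point about finite assessment windows can be illustrated with a toy simulation. The sketch below is not his analysis: it assumes, purely for illustration, that research pay-offs follow a Pareto distribution with tail exponent 1.5, and compares them with a thin-tailed (Gaussian) process that has the same long-run average of 3. The names `window_means` and `relative_spread`, the window size and the exponent are all hypothetical choices.

```python
import random
import statistics

def window_means(draw, n_windows, window_size):
    """Average pay-off seen in each of n_windows independent assessment windows."""
    means = []
    for _ in range(n_windows):
        sample = [draw() for _ in range(window_size)]
        means.append(sum(sample) / len(sample))
    return means

def relative_spread(values):
    """Standard deviation over mean: how strongly the assessments disagree."""
    return statistics.pstdev(values) / statistics.mean(values)

rng = random.Random(0)   # fixed seed so the run is repeatable
ALPHA = 1.5              # assumed tail exponent; true mean is ALPHA / (ALPHA - 1) = 3

# Heavy-tailed pay-offs (power law) versus thin-tailed pay-offs with the same mean.
heavy = window_means(lambda: rng.paretovariate(ALPHA), 2000, 50)
thin = window_means(lambda: rng.gauss(3.0, 1.0), 2000, 50)

heavy_spread = relative_spread(heavy)
thin_spread = relative_spread(thin)
print(f"relative spread of window averages, heavy-tailed: {heavy_spread:.2f}")
print(f"relative spread of window averages, thin-tailed:  {thin_spread:.2f}")
```

Under these assumptions both processes have the same underlying long-term return, yet assessments of the heavy-tailed one vary far more from window to window, because each window's average hinges on whether it happened to catch one of the rare large events.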

This raises an important question: does today’s scientific culture respect this reality? Are we doing our best to let the most important and most disruptive discoveries emerge? Or are we becoming too conservative and constrained by social pressure and the demands of rapid and easily measured returns? The latter possibility, it seems, is of growing concern to many scientists, who suggest that modern science is in danger of losing its creativity unless we can find a systematic way to build a more risk-embracing culture.

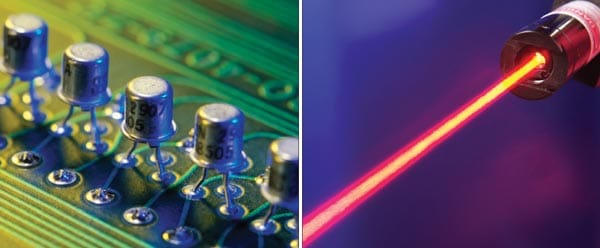

The voices making this argument vary widely. For example, the physicist Geoffrey West, who is currently president of the Santa Fe Institute (SFI) in New Mexico, US, points out that in the years following the Second World War, US industry created a steady stream of paradigm-changing innovations, including the transistor and the laser, and it happened because places such as Bell Labs fostered a culture of enormously free innovation. “They brought together serious scientists — physicists, engineers and mathematicians — from across disciplines”, says West, “and created a culture of free thinking without which it’s hard to imagine how these ideas could have come about.”

Unfortunately, today’s academic and corporate cultures seem to be moving in the opposite direction, with practices that stifle risk-taking mavericks who have a broad view of science. At universities and funding agencies, for example, tenure and grant committees take decisions based on narrow criteria (focusing on publication lists, citations and impact factors) or on specific plans for near-term results, all of which inherently favour those working in established fields with well-accepted paradigms. In recent years, tightening business practices and efforts to improve efficiency have also driven corporations in a similar direction. “That may be fine in the accounting department,” says West, “but it’s squeezing the life out of innovation.”

The black swans of science

A key problem, suggests mathematical physicist Eric Weinstein of the Natron Group, a hedge fund in New York, is that it is too easy for scientists in the “establishment” of any field to cut down new ideas, and to do so without really putting anything at risk, thereby leading to a culture that is systematically biased toward caution. “High-risk science is much more associated with figures from the past,” he says.

The result, he suggests, is that science is becoming less a “bottom-up” enterprise of free-wheeling exploration — energized by the kind of thinking that led Einstein to relativity — and more a “top-down” process strongly constrained by social conformity, with scientific funding following along fashionable lines. The publish-or-perish ethic, in particular, strongly rewards those scientists doing more or less routine technical work in established fields, and punishes more risky work exploring unproven ideas that may take a considerable period of time to reach maturity.

This is especially damaging given the disproportionate benefits that come from the most important discoveries, which seem to be inherently unpredictable in both timing and nature. As Taleb argues persuasively in The Black Swan, any sensible long-term strategy in a world dominated by extreme and unpredictable events has to accept, and even embrace, that unpredictability. He illustrates the idea in the financial context. People investing in venture-capital start-ups, for example, have to expect continual losses in the short term, and bank on the fact that they will ultimately make up for those losses by hitting on a few really big winners in the long run.

More generally, Taleb’s basic investing strategy, which could easily be translated into research terms, is to put a fair fraction of funds into very conservative processes that will not lose their value, even if they have little chance of producing big gains; and to put a small but reasonable fraction into high-risk, high-reward settings, thereby gaining exposure to the potentially enormous gains from these investments. These may be unpredictable in detail, but the statistics make the expected long-term pay-off very high.
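The arithmetic behind this strategy can be sketched in a few lines. The numbers below are invented for illustration and are not Taleb's: each year 90% of a budget sits in something safe that simply keeps its value, while 10% goes on a single long shot that is assumed to succeed 2% of the time and to return 100 times the stake when it does. The function names are hypothetical.

```python
import random

def expected_return(risky_fraction, win_probability, payoff_multiple):
    """Expected yearly gain per unit of budget; the safe part neither gains nor loses."""
    return risky_fraction * (win_probability * payoff_multiple - 1.0)

def simulate_years(n_years, risky_fraction, win_probability, payoff_multiple, seed=0):
    """Yearly gain or loss when a fresh unit of budget is invested every year."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_years):
        if rng.random() < win_probability:
            # Rare success: the risky stake comes back multiplied.
            results.append(risky_fraction * (payoff_multiple - 1.0))
        else:
            # Usual outcome: the risky stake is lost, the safe part is untouched.
            results.append(-risky_fraction)
    return results

yearly = simulate_years(10_000, risky_fraction=0.1, win_probability=0.02, payoff_multiple=100)
losing_share = sum(1 for r in yearly if r < 0) / len(yearly)
average_gain = sum(yearly) / len(yearly)

print(f"share of losing years: {losing_share:.1%}")
print(f"average yearly gain:   {average_gain:+.3f}")
print(f"expected yearly gain:  {expected_return(0.1, 0.02, 100):+.3f}")
```

With these assumed odds almost every individual year shows a loss, yet the long-run average is positive, because the rare wins more than repay the steady stream of small losses. Whether real research pay-offs have odds this favourable is exactly what cannot be known in advance.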

Even so, it takes discipline and fortitude to stick with this strategy. As Taleb points out, if everyone around you believes in the dominance of normal statistics, then they will think that you are foolish, and the short-term evidence will probably back them up. You will be losing money in the short run, seeing no returns, and this may go on for a considerable time. The same goes for high-risk science relative to research pursuing more short-term goals. In the short run, what the mavericks do will almost always seem less successful, perhaps even a waste of time, and it is easy to conclude that this is the kind of research we should not pursue, however mistaken that conclusion may be.

This is a trap, West suggests, into which modern science planning has fallen. “My fear”, he says, “is that by eliminating the mavericks we end up hobbling our ability to discover the big, new ideas — the next transistor. That’s a serious and tragic error.”

Hill climbers and valley crossers

What is to be done? Some funding agencies, of course, have long recognized the need to fund “blue sky” research — work that may be high risk but may also be high reward. In the US, for example, the National Science Foundation has high-risk programmes in areas ranging from basic physics through to anthropology. Similarly, the European Commission, even in the decidedly practical area of information and communications technology, has a programme in future and emerging technologies that only funds research identified as having the potential to overturn existing paradigms. Perhaps the most famous centre that supports high-risk science demanding long-term commitment and transdisciplinary involvement is the SFI, which is privately funded. In the past few years, the SFI has been joined by a host of new centres, such as the Perimeter Institute for Theoretical Physics in Waterloo, Canada, a public–private initiative strongly aided by the Canadian government and founded in 1999 by Mike Lazaridis, chief executive of Research in Motion, which created the BlackBerry.

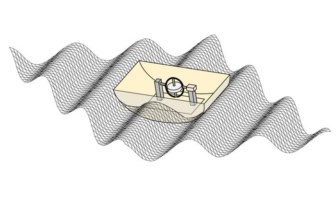

But physicist Lee Smolin, currently at the Perimeter Institute, suggests that science overall requires a much broader and more coherent approach to risky science. To see the kinds of policies needed, he suggests, it is useful to note that scientists, at least in some rough approximation, follow working styles of two very different kinds, which mirror Kuhn’s distinction between normal and revolutionary science.

Some scientists, he suggests, are what we might call “hill climbers”. They tend to be highly skilled in technical terms and their work mostly takes established lines of insight and pushes them further; they climb upward into the hills in some abstract space of scientific fitness, always taking small steps to improve the agreement of theory and observation. These scientists do “normal” science. In contrast, other scientists are more radical and adventurous in spirit, and they can be seen as “valley crossers”. They may be less skilled technically, but they tend to have strong scientific intuition: the ability to spot hidden assumptions and to look at familiar topics in totally new ways.

To be most effective, Smolin argues, science needs a mix of hill climbers and valley crossers. Too many hill climbers doing normal science, and you end up sooner or later with lots of them stuck on the tops of local hills, each defending their own territory. Science then suffers from a lack of enough valley crossers able to strike out from those intellectually tidy positions to explore further away and find higher peaks.
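Smolin's picture maps onto a familiar idea from optimization, and a toy version makes the trap concrete. The sketch below is only a caricature under stated assumptions: the one-dimensional `LANDSCAPE` of “scientific fitness” is invented, and a searcher's willingness to cross valleys is crudely modelled as a `reach`, the distance it is prepared to look beyond its current position. A hill climber has a reach of one step; a valley crosser has a longer one.

```python
# Invented fitness landscape: a low peak at index 3, a valley, a higher peak at index 11.
LANDSCAPE = [0, 1, 2, 3, 2, 1, 0, 1, 2, 4, 6, 8, 6, 3]

def climb(landscape, start, reach):
    """Move repeatedly to the highest point within `reach` steps; stop when none is higher."""
    position = start
    while True:
        lo = max(0, position - reach)
        hi = min(len(landscape) - 1, position + reach)
        best = max(range(lo, hi + 1), key=lambda i: landscape[i])
        if landscape[best] <= landscape[position]:
            return position  # nothing better in sight: the searcher stays put
        position = best

hill_climber = climb(LANDSCAPE, start=0, reach=1)    # small steps only
valley_crosser = climb(LANDSCAPE, start=0, reach=6)  # willing to leap across low ground

print("hill climber stops at index", hill_climber, "with fitness", LANDSCAPE[hill_climber])
print("valley crosser stops at index", valley_crosser, "with fitness", LANDSCAPE[valley_crosser])
```

In this toy landscape the small-step searcher settles on the nearer, lower peak and has no move that improves its position, while the long-reach searcher finds the higher peak beyond the valley. Real research landscapes are of course neither one-dimensional nor known in advance.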

“This is the situation I believe we are in,” says Smolin, “and we are in it because science has become professionalized in a way that takes the characteristics of a good hill climber as representative of what is a good, or promising, scientist. The valley crossers we need have been excluded or pushed to the margins.”

Smolin suggests that we need to shift the balance to include more valley crossers, and that this should not really be too hard to do if we take a determined approach. What we need, in general, is to put policies in place that will judge young scientists not on whether they are linked into programmes established decades ago by now-senior scientists, but solely on the basis of their individual ability, creativity and independence. Some specific steps that might be taken, he suggests, include ensuring that departments strong in any established field also include scientists with diverging views. Similarly, conferences focusing on one research programme should be encouraged to include participants from rival programmes.

In addition, funding agencies should develop a means of penalizing scientists for ignoring the really “hard” problems, and of rewarding those who attack long-standing open issues. Perhaps, Smolin suggests, an agency or foundation could create some really long-term fellowships to fund young researchers for, say, 10 years, thus allowing them the space to pursue ideas deeply without the pressure for rapid results.

The wisdom of crowds

Weinstein suggests another idea — that we should borrow some ideas from financial engineering and make scientists back up their criticisms by taking real financial risks. You think that some new theory is utterly worthless and deserving of ridicule? In the world Weinstein envisions, you could not trash the research in an anonymous review, but would buy some sort of option giving you a financial stake in its scientific future, an instrument that would pay off if, as you expect, the work slides noiselessly into obscurity. The money would come from the theory’s proponents, who would similarly benefit if it pans out into the next big thing.

Weinstein’s point is that markets, in theory at least, work efficiently and — putting the current financial meltdown to one side — lead to the accurate valuation of products. They exploit the “wisdom of crowds”, as a popular book of the same title recently put it. Take the famous electronic prediction markets at the University of Iowa, which pool the views of thousands of diverse individuals and consistently seem to give better predictions than any expert. For example, they predicted last year’s US presidential election correctly to within half a percentage point.

Could the same not be done for weighing up the likely value of scientific ideas? Those ideas, Weinstein argues, do not get weighed fairly today. As he points out, mavericks get their papers routinely rejected for what they feel are unfair reasons, and they often feel suppressed by the mainstream community, while mainstream scientists think it is perfectly obvious that the ideas in question are ludicrous and should not waste the community’s time. Current research practice lacks any mechanism that would arrange a fruitful meeting between the two — letting the maverick’s ideas gain free expression while at the same time letting the critics take a real stake based on their own knowledge.

“What do you do when you’re confronted with some maverick with a crazy idea?” he asks. “You’ve tried it, your students have tried it, and you know it’s almost certain to fail. Why can’t you use this knowledge to your own advantage? At the moment, you just can’t express your view in the market efficiently.”

The situation is directly akin to a trader on the stock market who has sound knowledge, for example, that a certain asset is currently undervalued, but, for whatever reason, cannot buy it and so benefit from that knowledge. In financial theory, a market of this kind is called “incomplete”, and its incompleteness leads to inefficiency, because not all relevant knowledge gets expressed in the market.

To counter the analogous inefficiency in the case of science, Weinstein suggests, it should be possible for the critic to take a position on the idea. “It would be more efficient,” he says, “if the maverick could demand of the critic, if my theory is so obviously wrong, why don’t you quantify that by writing me an options contract based on future citations in the top 20 leading journals secured by your home, furniture, holiday home and pension?”

That may be going a little over the top, but it makes the point. Bringing such possibilities into play, Weinstein suggests, would move research practice closer to the “efficient frontier” — the place where ideas get judged fairly based on all available knowledge, and risk takers, rather than being suppressed by social conformity, get encouraged by those taking a financial stake in the potentially enormous consequences of their success. Such mechanisms, Weinstein argues, would help avoid the effective censorship that often afflicts peer review, and that currently keeps research on the cautious side of the efficient frontier.

As one specific idea, Weinstein envisions something he calls synthetic tenure, which resonates with Smolin’s call for long-term fellowships. Today, he suggests, young researchers can easily be deterred from tackling really hard problems because they fear for their careers if they work on an issue for a decade and do not make significant progress. To give exceptional researchers the confidence to tackle hard problems, he suggests that an agency or foundation might make an agreement by which they would guarantee that person a good position in the future in some stimulating field, if their project does not work out.

The new Einsteins

It is precisely this kind of thing, Smolin argues, that could be helpful. If more scientists started pushing for a return to independent, curiosity-driven science, then this might also encourage the big funding agencies and the other new sources of private funds such as the Perimeter Institute or the Howard Hughes and Gates Foundations. Indeed, Weinstein suggests, these new structures may resemble recent developments in financial engineering, emerging as “intellectual hedge funds” in response to perceived inefficiencies of more traditional agents, which play the role of more risk-averse mutual funds.

The price to pay for not moving to re-establish such independence will lie in a failure to realize the huge and unpredictable discoveries that move science forward most in the long term — discoveries made possible only when individuals leap out of what is comfortable and accepted, and wander out into spaces unknown. It is the true enormity of the potential gains that makes this goal of reaching the “efficient frontier” so important. If today we seem to have a dearth of new Einsteins, Smolin suggests, this may just reflect that we have become a little too risk averse.

New Einsteins, he points out, will not be working in areas that have been well established for decades. They may not even work in an area linked to the name of any established, senior scientist. New Einsteins may be slipping out of view and out of science altogether just because our scientific culture currently simply has no way of encouraging them.