Physics World editor Matin Durrani thinks he’s not biased. But in an eye-opening journey of self-discovery, he finds that the truth is very different for him – and for all of us

Like you, I’m not biased. No way, not me. How could I be? I’m the editor of a magazine that’s devoting a whole issue to diversity. I did my PhD with Athene Donald – one of the first female professors of physics in the UK. The Physics World editorial team has more women than men. My father’s from Pakistan and my mother’s from Germany so that makes me ethnically and culturally diverse. I grew up in Birmingham in the English Midlands, which means there’s no way I have a north/south bias. I’m tolerant and fair-minded. I’m not rich or poor. And I’m sure I give everyone an equal chance. If I were a coin and you spun me, you couldn’t predict if I’d land heads or tails. So that’s me: definitely not biased.

But hang on a minute. Here’s the rub. We all like to think we’re not biased but, whatever our background, we all have in-built prejudices. This hidden, or “unconscious”, bias means we naturally prefer the company of certain people, namely those who look and sound like ourselves. It’s not about us being bad or nasty people, although it can mean we end up stereotyping others. Our unconscious bias is just the natural outcome of how we’ve been brought up, where we went to school, who we mixed with, what subject we studied and where we’ve lived. That preference for certain kinds of people can be acceptable socially, but when it comes to positions of power – hiring staff, sitting on funding panels or promoting people – that bias can lead to decisions that are irrational, unfair and possibly even illegal.

Once you’re aware of unconscious bias, it becomes easier to spot. I was recently talking to my colleague Louise Mayor, who’s Physics World’s features editor, after she’d returned from a visit to the European Southern Observatory in Chile. She happened to mention two astronomers she’d spoken to who’d both been using the Atacama Large Millimeter/submillimeter Array to study various cosmic phenomena. Based on the many scientists I’ve met over the years, I automatically pictured the researchers as two white, middle-aged men. I think they had beards too. Wrong! Both astronomers were young women. I’d had a stereotyped picture in my head of the “average” scientist.

I could brush that incident under the carpet as an inconsequential one-off. No harm was done; it was a mere thought that popped into my head. What did it matter that I’d imagined those two astronomers as men? But my unconscious bias can have consequences. It could make me more likely to pick bearded white men to write features or book reviews for Physics World, subtly perpetuating the myth that physics is only for bearded white men. I might pay slightly less attention when speaking to a female physicist on the phone; after all, there have only ever been two female Nobel laureates in physics so it’s surely just efficient to focus more on the thoughts of male physicists?

There is, of course, nothing wrong with publishing articles by bearded white men or listening carefully to the thoughts of male physicists on the phone. It’s just a case of giving everyone a fair chance. Unfortunately, our unconscious bias trickles into all areas of our lives. It can lead to schoolteachers treating boys and girls subtly differently in science classes and to recruiters judging women’s CVs as less striking than those of men. It might also explain why many people organizing scientific conferences end up with all-male panels even though women make up a sizeable proportion of the relevant community (see “Reflecting reality”, below).

Uncovering my bias

To find out how biased I really am, I decided to take several Implicit Association Tests (IATs), which are designed to tease out your subconscious attitudes to everything from race and gender to disability and sexual orientation. Originally developed in the 1990s by US-based social psychologists including Mahzarin Banaji and Anthony Greenwald, these tests form part of Harvard University’s Project Implicit and can be done by anyone online (http://ow.ly/WF9J9). In the case of race, you’re asked to cross-link white and black faces to positive- and negative-sounding words by striking the correct keys on your computer keyboard. There are no wrong answers; the test measures only how fast and accurately you respond.

Before doing the test, I started reading Banaji and Greenwald’s book Blindspot: Hidden Biases of Good People (2013 Delacorte Press). It warned me that many people who take IATs turn out to have discriminatory attitudes, even though they genuinely believe they hold egalitarian views. And so it proved for me. According to the test, which takes about five minutes to complete, I have a “strong automatic preference for white people compared to black people”. Essentially, I responded faster when faces of white people were paired with good words than when faces of black people were paired with good words. I took the test again just to make sure it hadn’t miscalculated my score, but no, once again it decreed I strongly prefer white people.

That was my ego punctured. There was some comfort, though, in finding that I’m not alone. More than 20,000 people take the online IATs each week, with about 70% of respondents to the race test having a “slight”, “moderate” or “strong” automatic preference for white people. Some 17% have no preference and the rest prefer black people. So despite my best intentions, I’m unconsciously racist – or, as Banaji and Greenwald put it, I’m an “uncomfortable egalitarian”.

Still, surely my attitudes to women in science were going to be beyond reproach? Gender equality is something we take seriously at Physics World so I was banking on a better score on the IAT for that. This time I was asked to link male and female words with arts- and science-related words. Another disaster. The test suggested I have “a strong association of male with science and female with liberal arts compared to female with science and male with liberal arts”. Again, I’m not alone. Overall, 72% of respondents have a “slight”, “moderate” or “strong” association between men and science, with 18% having no association and the rest identifying women more with science.

The impact of bias

Chastened by the results, I continued reading Blindspot. I’d supposed that having an automatic attitude doesn’t necessarily mean that you endorse it. The test, in other words, might have shown me to be biased, but surely I don’t act that way in real life. Yet as Banaji and Greenwald point out, we are so prone to stereotyping certain groups that even people in those groups can hold such stereotyped views to some extent. A female physicist on, say, a recruitment or grant-allocation panel can unconsciously favour a male applicant because she assumes men are better scientists and that it’ll help her to be part of a powerful “in-group” of male colleagues.

So how can we change our unconscious bias? The bad news is we can’t as it’s ingrained into our automatic thinking. However, being aware of the issue – as I now am – at least means we can recognize our bias and seek to address it. Finding solutions can be tricky but they don’t have to be hard to put into practice. Banaji and Greenwald cite a great example from the US symphony-orchestra scene back in the 1970s, which was then – like physics – dominated by men. Several orchestras changed their auditions by simply adding a screen between a musician and the judging committee. These “blind” auditions led to the proportion of women hired doubling from 20% to 40%. Ironically, the procedure wasn’t adopted to improve the gender ratio, but to ensure judges didn’t pick musicians who’d been trained by a small band of famous teachers.

One organization tackling unconscious bias is Research Councils UK, which oversees the activities of the UK’s seven research councils. To help ensure that the £3bn it hands out each year in research grants is distributed fairly, it has just launched a new programme that will see more than 1300 people – including peer-reviewers, policy-makers and research-council staff – having access to online training on unconscious bias over the next three years. The training will be based on a series of workshops that will, according to an RCUK spokesperson, “openly explore bias, allowing participants to recognize their own biases and the impact these could have on their decision-making”. With such big sums at stake, even a small shift in behaviour could reap big dividends.

Reflecting reality

One consequence of unconscious bias is that people organizing conferences, who are often men, inadvertently pick people who are just like them. That can lead to situations where every invited speaker or every member of a panel debate is a man, which does not reflect the gender ratio of the relevant community. Faced with a backlash, conference organizers will defend themselves by claiming there just aren’t enough suitably qualified or appropriate women to invite onto the panel – it’s “just one of those things”. There’s even a blog (http://allmalepanels.tumblr.com) devoted to mocking all-male line-ups, in which a picture of David Hasselhoff giving the thumbs up appears on a seemingly endless stream of men-only panels.

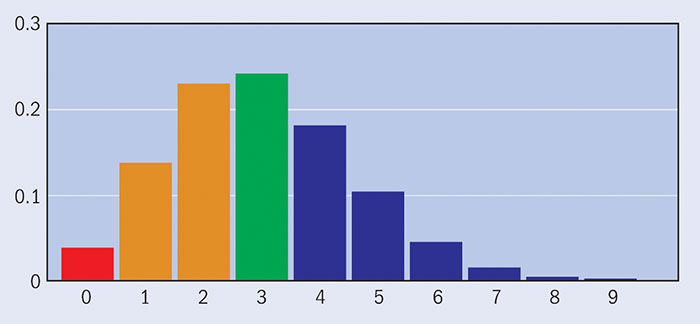

To illustrate just how statistically unlikely all-male panels or invited-speaker lists are for a given community, Aanand Prasad – a London-based Web developer – has created the Conference Diversity Distribution Calculator (http://ow.ly/WO3px). It tells you how many women you would expect to find in a random selection of x people assuming they make up y% of available speakers. In the case of physics, women account for about 15% of research-active staff according to 2013 data from the Institute of Physics, which publishes Physics World. This means there’s a 44% chance that, at random, a five-member group would have no women at all. With 10 people, the likelihood falls to 20%, while with 20 people the chance is less than 4%.
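The percentages quoted above can be checked with a few lines of Python. This is only a minimal sketch, not the calculator's own code: it assumes each speaker is picked independently at random from a large pool in which women make up a fixed share, so the number of women on a panel follows a binomial distribution. The function names `prob_exactly_k_women` and `prob_no_women` are made up for this illustration.

```python
from math import comb


def prob_exactly_k_women(panel_size, share_of_women, k):
    """Binomial probability of exactly k women on a randomly chosen panel.

    Assumption: every member is drawn independently from a large pool
    in which women make up `share_of_women` (a fraction between 0 and 1).
    """
    share_of_men = 1 - share_of_women
    return comb(panel_size, k) * share_of_women**k * share_of_men**(panel_size - k)


def prob_no_women(panel_size, share_of_women):
    """Chance that a random panel of the given size is all male."""
    return prob_exactly_k_women(panel_size, share_of_women, 0)


if __name__ == "__main__":
    # 15% is the share of women among research-active physics staff quoted above.
    for size in (5, 10, 20):
        chance = prob_no_women(size, 0.15)
        print(f"{size:>2} people: {chance:.1%} chance of no women")
```

With a 15% share, this gives roughly 44%, 20% and just under 4% for panels of 5, 10 and 20 people, in line with the figures in the text. The chance of an all-male line-up is simply 0.85 multiplied by itself once per panel member, which is why it shrinks so quickly as the panel grows.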

I can see many physicists rolling their eyes at the prospect of being forced to sit through training sessions on unconscious bias when they could be doing something more useful, like proper work. As they will rightly point out, our unconscious brains are wired up to process and sift vast amounts of information looking for patterns. If you only ever come across, say, male IT staff and female receptionists, then surely it’s just an efficient short cut to assume who’s who if faced with a room full of IT staff and receptionists.

The problem is that when we are in positions of power, our unconscious bias can lead to us holding back others professionally. As the Royal Society put it in a briefing note issued last year to those who decide who should win its grants, awards and fellowships: “We perceive a pleasant fluency of action when we experience familiarity, and this makes us feel confident and in control of our decisions. With unfamiliar members of other groups we are on less sure ground.” As we feel it’s risky to pick a candidate from such a group, scientists “redefine merit to justify discrimination”.

The Royal Society’s note also has five tips for those seeking to avoid unconscious bias (see “Five tips to avoid bias”, below). But another simple solution I came across several times while researching this article is that if you have, say, an unconscious bias against female scientists, then simply put lots of photos of female scientists on your pinboard or screensaver. The idea is that by surrounding yourself with such images, the group you’re biased against will feel less different and more normal to you. With time, you’ll become less prone to making negative, snap judgements about people from that group and more likely to keep your bias under control.

As I finished my exploration of unconscious bias, I came across a fascinating study published last year by a team of psychologists in the US, which showed that some of us have a bias against research that shows a bias (Proc. Natl Acad. Sci. 112 13201). Led by Ian Handley from Montana State University, the researchers asked more than 200 university staff to read the abstract of a paper reporting a bias against women in science, technology, engineering and mathematics (STEM) and then to rate the quality of the research. While both men and women rated the findings positively, men rated them less favourably, agreeing with the results less, finding the study less important and judging it more poorly written. Male STEM staff showed a particular bias against the findings.

Perhaps this article is destined for the same fate.

Five tips to avoid bias

The Royal Society last year issued a briefing note for members of its panels and committees who decide which scientists should get grants, awards or fellowships from the society. In seeking to ensure decisions adhere to the society’s ethos – and so are made “purely on the basis of the quality of the proposed science and merit of the individual” – it offered these five tips:

1. When preparing for a committee meeting or interview, try to slow down the speed of your decision making.

2. Reconsider the reasons for your decision, recognizing that they may be post-hoc justifications.

3. Question cultural stereotypes that seem truthful. Be open to seeing what is new and unfamiliar and increase your knowledge of other groups.

4. Remember you are unlikely to be more fair and less prejudiced than the average person.

5. You can detect unconscious bias more easily in others than in yourself so be prepared to call out bias when you see it.