People who raise “unknown unknowns” to promote a particular course of action – such as closing particle colliders – are doing a disservice to science, says Robert P Crease

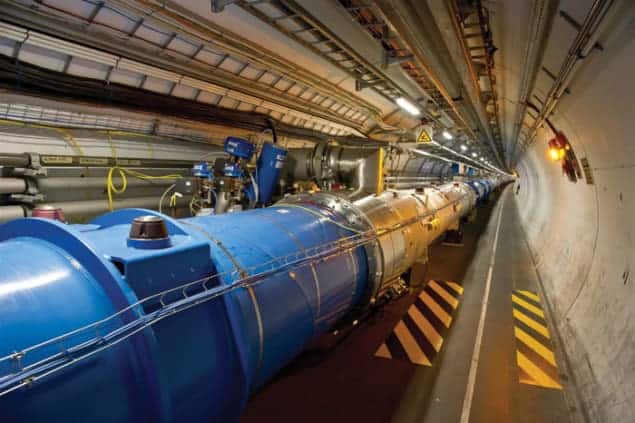

Last May the Huffington Post ran a piece entitled “Why physics experiments at the subatomic level may cause ‘unknown unknowns’ to destroy the world”. It was written by Peter Reynosa, a poet and painter from San Francisco, who’s also the author of a dystopian novel about the dangers of rationalism. As an enthusiastic newcomer to the old debate over whether the Large Hadron Collider (LHC) will destroy the universe, Reynosa reckoned the LHC is a monster that is “nightmaring itself into our world”. Though vague on specifics, Reynosa claimed the collider is staging “very dangerous experiments that may cause the world’s destruction”.

To support his claim, Reynosa cited the Bush Administration defence secretary Donald Rumsfeld’s famous phrase concerning “unknown unknowns”, which he originally used in 2002 to defend the invasion of Iraq. The LHC is designed to investigate matter at its fundamental levels. Although we may not think this poses any dangers, there could be, Reynosa claimed, things we don’t know that may cause its operation to trigger “catastrophic repercussions” that will create an “unthinkable horror”.

Bush league

Frightening! But arguments using “unknown unknowns” were dishonest when Rumsfeld first made them to defend the Iraq invasion, and are equally dishonest when made against the LHC. Iraq, after all, had nothing to do with the terrorist attacks of 11 September 2001, but in their aftermath the Bush administration prepared to invade the country anyway. As a shameless pretext, it cited the possibility that Iraq might give terrorists weapons of mass destruction.

At a news conference on 12 February 2002, a reporter asked Rumsfeld what evidence he had. Rumsfeld replied evasively. “There are,” he said, “things we know we don’t know.” Then he added: “But there are also unknown unknowns – the ones we don’t know we don’t know.” Those, he implied, were sufficient to justify invasion. As it turned out, there was no evidence of weapons of mass destruction, and an inspection team that hunted for them came up empty-handed.

Rumsfeld’s use of “unknown unknowns” to justify the decision to invade Iraq is like justifying pulling out a gun and shooting a suspect who shows no sign of ill intent by saying “You never know!” It was a smokescreen to rationalize the already-taken decision. The appeal to “unknown unknowns” uses naked fear-mongering to try to turn a lack of evidence for an action, such as invading Iraq or shutting down the LHC, into a positive reason for proceeding as if there were evidence.

We might call this a Chicken Little argument, as it’s like the children’s fable in which Chicken Little, after getting hit on the head with an acorn, tells the other animals to run because “the sky is falling”. But this new version is a “could-have” Chicken Little argument, for the chicken is claiming the sky is falling because she “could have” been hit by an acorn. The “unknown unknowns” argument is a fallacy.

Two kinds of probabilities

Theoretical physicists who’ve looked into whether heavy-ion collisions can produce “strangelets” or black holes that will consume other forms of matter have concluded that the probability of such events is non-zero. So isn’t there at least minuscule evidence that the LHC is a threat? No, because there are two kinds of probabilities. One – established probability – is based on sound principles and empirical data on the frequencies of actual occurrences. This is the probability of coin tosses, winning the lottery, human mortality, the molecular behaviour of gases and so on. We’re sure of the principles and data, and no magical thinking is involved. Established probabilities are reliable guides to the world.

The other kind of probability is fictive or subjective. This is when you assume certain principles and initial conditions and use them to estimate possible outcomes. You don’t know whether all the principles are sound, and you have no data on outcomes from relevant similar experiments. You have no reason to suppose the probabilities reflect the real world, and it would be folly to use them as a guide to action.

We can show this by turning the argument on Reynosa’s own profession. I’m sure someone can concoct a theory of insanity that yields a non-zero probability that poetry and painting can drive individuals to commit mass murder. If there were no empirical data to back up this finding, wouldn’t it be insane and unjust to use it as a reason to ban poets and painters from practising?

The safe working of our scientific, technological and medical infrastructure is highly vulnerable to dishonest “unknown unknowns” arguments. If you use that argument, then it’s easy to claim that cell phones may cause cancer, vaccines autism, and genetically modified organisms disease, and so they should all be banned. After all, you never know!

The problem is that those who advance such arguments often have no real interest in public safety but only in self-advancement or special pleading. Politicians and activists exploit such arguments to counter policies they do not want to support. Others use such arguments to sell books or get attention. Media sources lap up such incendiary messages. In my May 2007 column, I called people who seek to advance themselves by sowing unwarranted suspicion “social Iagos”. Iago, the villain in Shakespeare’s Othello, used a handkerchief to make his boss, Othello, doubt his own wife; today’s scoundrels use “unknown unknowns”.

The critical point

Arguments that use “unknown unknowns” to promote a particular course of action are harmful in several ways. They can lead to destructive courses of action, such as shutting down accelerators or stopping medications. They can be used to create distrust of legitimate and valuable institutions, such as review committees. Finally, they seek to curtail investigation of the dynamics of the world, even though investigating the world is far safer than not investigating it at all.