A commercial artificial intelligence (AI) system matched the accuracy of over 28,000 interpretations of breast cancer screening mammograms by 101 radiologists. Although the most accurate mammographers outperformed the AI system, it achieved a higher performance than the majority of radiologists (JNCI: J. Natl. Cancer Inst. 10.1093/jnci/djy222).

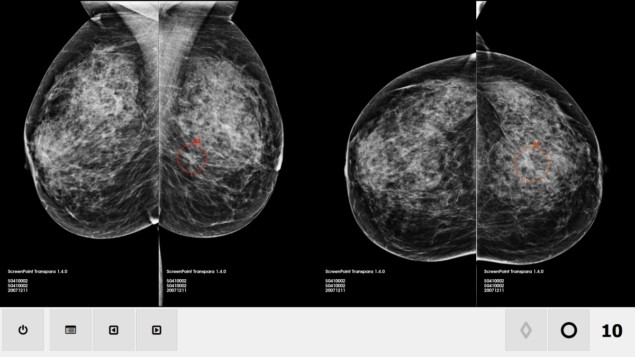

With the addition of deep-learning convolutional neural networks, new AI systems for breast cancer screening improve upon the computer-aided detection (CAD) systems that radiologists have used since the 1990s. The AI system evaluated in this study — conducted by radiologists and medical physicists at Radboud University Medical Centre — has a feature classifier and image analysis algorithms to detect soft-tissue lesions and calcifications, and generates a “cancer suspicion” ranking of 1 to 10.

The researchers examined unrelated datasets of images from nine previous clinical studies. The images were acquired from women living in seven countries using four different vendors’ digital mammography systems. Every dataset included diagnostic images, radiologists’ scores of each exam and the actual patient diagnosis.

The 2652 cases, of which 653 were malignant, incorporated a total of 28,296 individual single-reading interpretations by 101 radiologists who had participated in previous multi-reader, multi-case observer studies. The readers included 53 radiologists from the United States, who represented an equal mix of breast imagers and general radiologists, plus 48 European radiologists who were all breast specialists.

Principal investigator Ioannis Sechopoulos and colleagues reported that the performance of the AI tool (ScreenPoint Medical’s Transpara) was statistically non-inferior to that of the radiologists, with an AUC (area under the ROC curve) of 0.840, compared with 0.814 for the radiologists. The AI system had a higher AUC than 62 of the radiologists and higher sensitivity than 55 radiologists.
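For readers unfamiliar with the metric, the AUC can be read as the probability that a randomly chosen malignant case receives a higher suspicion score than a randomly chosen non-malignant one, with ties counted as half. The Python sketch below illustrates that pairwise definition only; it is not the statistical method used in the study, the function name `auc_from_scores` is hypothetical, and the eight scores and labels are made-up toy values, not study data.

```python
def auc_from_scores(scores, labels):
    """Pairwise (Mann-Whitney) estimate of the area under the ROC curve.

    scores: suspicion scores, higher meaning more suspicious (e.g. 1 to 10)
    labels: 1 for a malignant case, 0 for a non-malignant case
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one malignant and one non-malignant case")

    correctly_ordered = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correctly_ordered += 1.0   # malignant case ranked higher
            elif p == n:
                correctly_ordered += 0.5   # a tie counts as half
    return correctly_ordered / (len(positives) * len(negatives))


# Toy illustration with invented values (NOT data from the study)
toy_scores = [9, 7, 4, 8, 3, 2, 6, 1]
toy_labels = [1, 1, 1, 0, 0, 0, 0, 0]
print(auc_from_scores(toy_scores, toy_labels))  # 12 of 15 pairs ordered correctly: 0.8
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of malignant from non-malignant cases, which gives a sense of scale for the 0.840 and 0.814 figures reported above.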

The performance of the AI system was, however, consistently lower than that of the best-performing radiologists in all datasets. The authors suggested that this may be because the radiologists had more information available for assessment, such as prior mammograms, for the majority of cases. The team did not have access to the experience levels of the 101 radiologists, however, and therefore could not determine whether the radiologists who outperformed the AI system were also the most experienced.

The researchers suggest that there may be several ways that an AI system designed to detect breast cancer could be used. One possibility is its use as an independent first or second reader in regions with a shortage of radiologists to interpret screening mammograms. It also could be employed in the same manner as CAD systems, as a clinical decision support tool to aid an interpreting radiologist.

Sechopoulos also thinks that AI will be useful for identifying normal mammograms that do not need to be read by a screening radiologist. “With the right developments, it could also be used to identify cases that can be read by only one radiologist to confirm that recalling the patient is necessary,” he tells Physics World. “These strategies could give radiologists more time to focus on more complex cases, and eventually could be part of the solution needed to implement digital tomosynthesis in screening programs. This is important because tomosynthesis takes considerably longer to read than mammography.”

When asked about future research, Sechopoulos suggests that a really interesting next step will be to compare the performance of AI against human performance in real screening conditions with the actual prevalence, or percentage of cases that are positive. “We need to gather that data first, and then do the comparison overall and also broken down by case characteristics, including lesion type, lesion location, and tumour characteristics,” he says.