Maria Schuld describes how researchers are enhancing machine learning – an approach that enables computers to learn and make predictions – by combining it with quantum computation

The blue room is dense with concentration. At a table in the centre sit two opponents staring at a board of black and white marbles that are moved in silent turns. Finally, the player on the right resigns. It is 9-dan Go master Lee Sedol. On the left sits software developer Aja Huang who gets his instructions from AlphaGo, a computer program developed by Google’s DeepMind project. It is March 2016 and AlphaGo has just beaten one of the world’s best players in four out of five games of the popular board game Go.

The success of AlphaGo has been widely perceived as a milestone in artificial intelligence research. Go is a much more complex game than chess, at which a computer first won against a world champion in 1997. In Go, exploring all strategies by brute force – in which all possible moves are evaluated to decide the best move to make – is not an option; there are more possible marble positions than there are atoms in the universe, and the 2200 computer processors delivering the power for the game are lightweight compared with today’s supercomputers. The secret of AlphaGo’s success lies much more in a strict training regime with a special sparring partner, namely the software itself. To become a worthy training partner, AlphaGo’s “deep neural networks” – computer algorithms inspired by our brain’s architecture – initially learnt how to master the game by consulting a database of around 30 million professional moves.

Machine learning can be understood as the data side of artificial intelligence, where one often deals with large amounts of information, or “big data”. Similarly to human learning, machine learning involves feeding very many instances of a problem into a computer that has been programmed to use patterns in the data to solve a previously unseen instance. For example, a computer could be fed a lot of images of a particular person, and then given a new image before being asked whether it is the same person as before. The crux is that we do not know how we link the visual stimulus to the concept of recognizing a person in the image. In other words, there is no simple correlation between the pixel at, say, position (1334, 192) being red and the picture containing our friend Sivu that we could programme the computer to exploit. Machine-learning research therefore has to come up with generic ways to find complicated patterns in data, and as Facebook’s automatic tagging function shows, this is done with ever-increasing success.

What does this have to do with physics, and more precisely, with quantum physics? The computer that executed the AlphaGo software is based on classical physics. Information is processed by microelectronic circuits that manipulate signals of zeroes and ones, and these circuits follow the laws of classical electrodynamics. But for two decades, physicists have been rethinking the concept of a computer right from scratch. What if we built a computer based on the laws of quantum theory? Would such a device fundamentally change the limits of what is computable? The answer, it turns out, is not so easy to find, although we seem to understand the question a lot better today. Despite the fact that we haven’t yet been able to build quantum computers large enough to solve realistic problems, several powerful languages have been developed to formulate and study “quantum algorithms”, the software for quantum computers, from a theoretical perspective. This research effort has now left the borders of purely academic interest and is pursued in the labs of large IT companies such as Google and IBM. As the realization of quantum computers seems more and more certain, the pressure to find “killer apps” for quantum computing grows. This is where machine learning comes in.

Since we know the language quantum computers will speak one day, we can already start thinking about what impact they will have on the frontiers of machine learning. This approach is called quantum-enhanced machine learning and is part of the larger research field of quantum machine learning (which also investigates the opposite approach of using traditional machine learning to analyse data from quantum experiments). To get an idea of quantum-enhanced machine learning, one first has to understand how machine learning works, and the “black art” involved in using it to greatest advantage.

Machine learning

A quick way to access the concept of machine learning is through data fitting, an exercise that most scientists have come across during their undergraduate studies and that forms one of many methods used to recover patterns or trends in data. Imagine you run an experiment that generates data points (x, y) for setting a control parameter x and measuring the result y. As a physicist you would like to obtain a model that can explain these measurement results. In other words, you want to find a relationship y = f(x) that, up to some degree of noise, produced the data. This can be done by feeding the experimental data into a computer and using numerical software to find the best fit of a parameter-dependent function f(x) (figure 1). Mathematically speaking, this is an optimization problem.
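To make the optimization picture concrete, here is a minimal sketch in Python. It assumes a straight-line model f(x) = a·x + b and made-up data; the function name `fit_line`, the learning rate and the data values are illustrative choices, not taken from any particular experiment. The two parameters are adjusted step by step so that the mean squared distance between prediction and measurement shrinks.

```python
def fit_line(xs, ys, learning_rate=0.5, steps=5000):
    """Fit f(x) = a*x + b by gradient descent on the mean squared error."""
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Difference between the model's prediction and each measurement.
        residuals = [a * x + b - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error with respect to a and b.
        grad_a = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Take a small step downhill in the optimization landscape.
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
    return a, b


# Hypothetical "measurements": a line y = 3x + 1 plus a small perturbation
# that stands in for experimental noise.
xs = [i / 10 for i in range(11)]
ys = [3.0 * x + 1.0 + 0.05 * (-1) ** i for i, x in enumerate(xs)]

a, b = fit_line(xs, ys)
print(f"best fit: f(x) = {a:.3f} * x + {b:.3f}")
```

For a model this simple the landscape has a single valley, so walking downhill is enough. The difficulties described later arise when the landscape has many valleys.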

Solving the optimization problem is already half the job for machine learning, where one can now use the best model function to predict the measurement outcomes for new control parameters without performing the actual experiment. Of course, in most machine-learning applications one is less interested in physical experiments than in tasks that traditionally require human experience. For example, x could represent a set of macroeconomic variables and y stand for the oil price development in the next week. If we derive a model y = f(x) from the data, we can use it to predict next week’s oil price. Alternatively, the inputs could be the pixels of images and the output a yes-or-no answer to whether your friend Sivu is in the picture, in which case the machine-learning software is used for image recognition. One thing most applications have in common is that they allow us to answer questions about complex relationships where the answers are worth a lot of money.

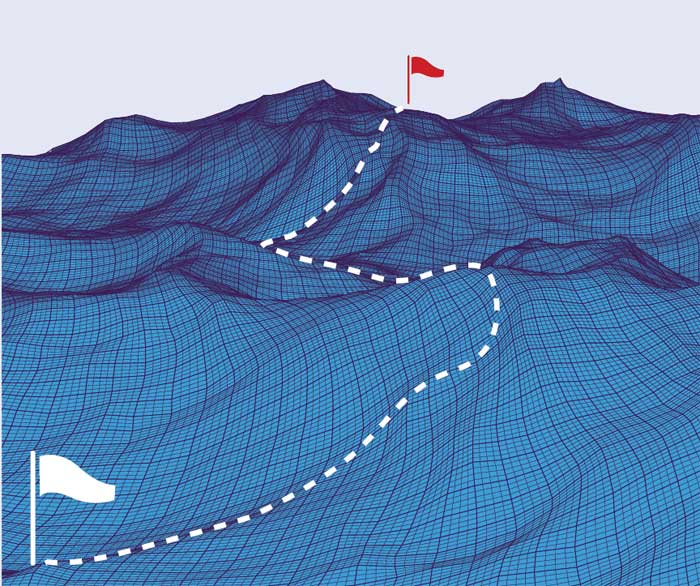

So far this sounds pretty straightforward. All you have to do is solve an optimization problem to find the best predictive model. But machine learning usually deals with very difficult types of optimization problems that are avoided by even the more adventurous mathematicians. Think, for example, of an optimization landscape like the Himalayan mountain range, where you want to find the deepest valley on foot and without a map (figure 2). The real “black art” lies in the subtleties of formulating the optimization problem. In the data-fitting case of figure 1, for example, if we define the best model to be the one where the prediction f(x) is closest to the real value y for all data points, the more flexible model function (blue) would win, because the model function goes through all data points. But when we introduce a new data point, it is clear that the “rougher fit” (red) gives a much better prediction. For our hiker, the optimization landscape that corresponds to the more flexible model is not very helpful, because even if they find the deepest valley it does not necessarily lead to a good model. A useful optimization landscape leads to optimal models that generalize from the underlying pattern to unseen data, even if they do not predict the seen data perfectly well. Formulating effective optimization problems requires a lot of intuition and hands-on experience, which are key to harnessing the power of machine learning.
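The trade-off between the flexible and the rougher fit can be reproduced in a few lines of Python. The sketch below is an illustration under stated assumptions rather than a reconstruction of figure 1. The data are invented, with a fixed alternating perturbation standing in for noise so that the result is reproducible. The "flexible" model is taken to be the polynomial that passes through every data point, and the "rough" model is a least-squares straight line.

```python
def flexible_model(xs, ys):
    """Polynomial through every data point (Lagrange interpolation)."""
    def f(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            term = yj
            for k, xk in enumerate(xs):
                if k != j:
                    term *= (x - xk) / (xj - xk)
            total += term
        return total
    return f


def rough_model(xs, ys):
    """Least-squares straight line through the data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def mean_squared_error(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: the underlying pattern is y = 2x, plus a perturbation.
xs = [i / 7 for i in range(8)]
ys = [2.0 * x + 0.1 * (-1) ** i for i, x in enumerate(xs)]

flexible = flexible_model(xs, ys)
rough = rough_model(xs, ys)

# Error on the data the models have seen.
seen_flexible = mean_squared_error(flexible, xs, ys)
seen_rough = mean_squared_error(rough, xs, ys)

# Error on a new, unseen point that follows the underlying pattern.
x_new, y_new = 1 / 14, 2.0 * (1 / 14)
unseen_flexible = abs(flexible(x_new) - y_new)
unseen_rough = abs(rough(x_new) - y_new)

print("error on seen data:  flexible", seen_flexible, " rough", seen_rough)
print("error on new point:  flexible", unseen_flexible, " rough", unseen_rough)
```

The flexible model wins on the seen data because it reproduces every point, yet the straight line gives the better prediction for the new point, which is the behaviour the hiker analogy warns about.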

A quantum boost

The most common approach to enhancing machine learning with quantum computing is to outsource the hard optimization problems to a quantum computer, either the small-scale devices available in the labs today, or the full-blown versions that we hope to have access to in the future. An entire toolbox of algorithms for this purpose has been developed by the quantum-information-processing community. The continuing challenge is to combine, adapt and extend these tools with the aim of improving number crunching on conventional computers. Three different approaches to solving optimization problems using quantum computers are explained in more detail in the box below. Although we know by now that most hard computational problems tend to remain hard even if quantum effects are exploited, modest speed-ups can still prove crucial for today’s big-data applications.

There is one important caveat in outsourcing, however. For this approach to work, one needs to encode the data that shape the optimization landscape into the quantum system. One way to do this is to represent a black-and-white image with a lattice of spins pointing up (white pixel) or down (black pixel), as in figure 3. Using quantum superposition – where a physical system is in two or more quantum states at the same time – allows us to store many images in a single quantum system. Other encoding strategies are more involved, but all of them require in practical terms that we prepare the initial state of the quantum system (or, in some cases, the interactions) to represent the values of the dataset. For an experimental physicist, preparing a microscopic physical system so that it encodes billions of pixels from an image dataset, to a high precision, must sound like a nightmare. Data encoding is therefore a crucial bottleneck of quantum algorithms for machine learning and a challenge with no direct equivalent in classical computing.
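The spin-lattice encoding can be sketched on a conventional computer, with the caveat that this is only a bookkeeping illustration and says nothing about how such a state would be prepared in the lab. The Python sketch below assumes tiny four-pixel images, with 1 for a white pixel (spin up) and 0 for a black pixel (spin down); the function names and the example images are hypothetical. Each image corresponds to one basis state of the spins, and an equal superposition gives every stored image the same amplitude.

```python
import math


def image_to_basis_index(pixels):
    """Read the pixel pattern as a binary number labelling one basis state."""
    index = 0
    for pixel in pixels:
        index = (index << 1) | pixel
    return index


def superpose_images(images):
    """Amplitudes of an equal superposition of distinct images."""
    n_spins = len(images[0])
    amplitudes = [0.0] * (2 ** n_spins)  # one entry per spin configuration
    weight = 1.0 / math.sqrt(len(images))
    for pixels in images:
        amplitudes[image_to_basis_index(pixels)] = weight
    return amplitudes


# Three hypothetical 2x2 images, flattened row by row (assumed distinct).
images = [
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
]

state = superpose_images(images)
probabilities = [amp ** 2 for amp in state]
print("probability of finding the first image:",
      probabilities[image_to_basis_index(images[0])])
```

Note the asymmetry: the classical list needs 2^n entries to describe n spins, whereas the quantum system itself needs only the n spins. Getting the right amplitudes into those spins is the bottleneck discussed above.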

Towards a quantum AlphaGo?

Without question, there is a long road to walk before future generations of AlphaGo and its companions can run on quantum hardware. First we need robust large-scale quantum computers to run the software developed. We need to design an interface between classical data and quantum systems in order to encode the problems in these devices. We also need better quantum tools for optimization, especially when the landscapes are complex.

More than anything, we need to learn the “black art” of machine learning from those who have been practising it for decades. Instead of merely outsourcing optimization tasks formulated for classical computers, we should begin to formulate problems for quantum computing right from the start. An early generation of quantum devices is waiting in the labs for practical implementations. The question is, what type of optimization problems do these devices allow us to solve, and can the answer to this question be used to define new machine-learning methods? Could there be specific physics-research problems that quantum-enhanced machine learning could tackle? Can we use genuine “quantum models” for these tasks? And can the way we think in quantum computing give rise to innovation for conventional strategies in machine learning?

In summary, the emerging discipline of quantum-enhanced machine learning has to be relocated from the playgrounds of quantum computing and must become a truly interdisciplinary project. This requires a fair bit of communication and translation effort. However, the languages might be less far apart than we expect: both quantum theory and machine learning deal with the statistics of observations. Maybe we do not need to take the detour via digital bits of zeros and ones in the first place. All in all, we do not know yet if some decades into the future, a quantum computer will calculate AlphaGo’s decisions. But asking the question gives us a lot to think about.

Approaches to quantum-enhanced machine learning

Quantum search

In the mid-1990s, computer scientist Lov Grover showed that a future quantum computer can search an unsorted database – such as telephone numbers in a phone directory – faster than classical computers can. This method can be adapted to find the k “closest” entries, or the k phone numbers that have the most digits in common with a given number. Finding the closest data points to a new input is an important task in machine learning, for example in a method called “k-nearest neighbour” that chooses the new y-value according to the neighbours’ y-values. Maybe the most straightforward approach to machine learning with quantum computers is therefore to reformulate search problems in the language of quantum computing and apply Grover’s well-studied algorithm.
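For a feel of how Grover’s search behaves, its amplitudes can be tracked on a classical computer for a very small database. The Python sketch below is such a simulation under simple assumptions: eight entries, one “marked” entry we are looking for, and the textbook number of iterations of roughly (π/4)·√N. The function name and the choice of marked entry are illustrative. Because the simulation stores every amplitude explicitly, it gains no speed-up itself; it only shows the probability piling up on the marked entry.

```python
import math


def grover_probabilities(n_items, marked):
    """Simulate Grover's search and return the final measurement probabilities."""
    # Start in an equal superposition over all database entries.
    amplitudes = [1.0 / math.sqrt(n_items)] * n_items
    iterations = int(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked entry's amplitude.
        amplitudes[marked] = -amplitudes[marked]
        # Diffusion step: reflect every amplitude about the mean.
        mean = sum(amplitudes) / n_items
        amplitudes = [2.0 * mean - amp for amp in amplitudes]
    return [amp ** 2 for amp in amplitudes]


probabilities = grover_probabilities(n_items=8, marked=5)
print("probability of measuring the marked entry:", round(probabilities[5], 3))
```

With eight entries, two iterations suffice to make the marked entry the overwhelmingly likely measurement outcome, whereas a classical search would on average have to inspect about half of the entries.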

Linear algebra

A small quantum system can have a large number, N, of different configurations or measurement outcomes. Quantum theory describes the probability that one of the possible outcomes from all of these configurations is measured, and it is largely based on the mathematical language of linear algebra. In 2009 Aram Harrow, Avinatan Hassidim and Seth Lloyd from the Massachusetts Institute of Technology proposed a quantum algorithm that uses these properties in a clever way to solve systems of linear equations, which can under very specific circumstances be done incredibly fast. Likewise, many machine-learning optimization problems can be mathematically formulated as a linear system of equations where the number of unknowns depends on the size of the dataset. For big-data applications, numerical solutions can take a lot of computational resources, and such problems are therefore excellent candidates for the application of the quantum linear systems algorithm.
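One example of a learning problem that reduces to a linear system with one unknown per data point is kernel ridge regression, used here purely as an illustration; the article does not single out a method. The classical Python sketch below makes several assumptions: a Gaussian kernel of width 0.2, a small regularization constant, five invented data points, and plain Gaussian elimination as the solver. It is this solving step that a quantum linear systems algorithm would aim to replace.

```python
import math


def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


def kernel(x1, x2, width=0.2):
    """Gaussian similarity between two inputs (width is an assumed value)."""
    return math.exp(-(x1 - x2) ** 2 / (2 * width ** 2))


# Hypothetical training data: five samples of one period of a sine curve.
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [math.sin(2 * math.pi * x) for x in xs]
ridge = 1e-3  # assumed regularization strength

# One equation and one unknown per data point: (K + ridge * I) alpha = y.
system = [[kernel(xi, xj) + (ridge if i == j else 0.0)
           for j, xj in enumerate(xs)]
          for i, xi in enumerate(xs)]
alpha = solve_linear_system(system, ys)


def predict(x):
    """Model prediction: a weighted sum of similarities to the training inputs."""
    return sum(a * kernel(x, xi) for a, xi in zip(alpha, xs))


print("prediction at x = 0.25:", round(predict(0.25), 3))
```

Doubling the dataset doubles the number of unknowns, and the cost of elimination grows roughly with the cube of that number, which is why large datasets make this step expensive.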

Finding the ground state

A third type of optimization problem minimizes an overall energy function to find an optimal sequence of bits. A popular numerical method to carry out such “combinatorial optimization” is “simulated annealing”. Such an approach simulates the process in thermodynamics in which a system cools down until it reaches its ground state. In the quantum equivalent of the process, “quantum annealing”, an energy landscape is similarly minimized; however, the algorithm can in addition use quantum-mechanical tunnelling to travel through the energy peaks – rather than having to “climb” over them – meaning it may find the lowest valley more quickly. Quantum annealing devices already exist, such as that announced in 2010 by the Canadian firm D-Wave as the world’s first commercial quantum computer. These devices have been shown to solve (admittedly, rather exotic) problems where they find a global minimum 100 million times faster than a conventional computer running a simulated annealing algorithm.
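The classical half of this story, simulated annealing, fits in a short Python sketch. The toy problem is an assumption chosen for illustration: split a list of weights into two groups of equal total, where each bit says which group a weight belongs to and the “energy” is the difference between the two totals. The weights, the cooling schedule and the function names are all hypothetical, and nothing here is quantum.

```python
import math
import random


def energy(bits, weights):
    """Absolute difference between the totals of the two groups."""
    return abs(sum(w if b else -w for b, w in zip(bits, weights)))


def simulated_annealing(weights, steps=2000, t_start=5.0, t_end=0.05, seed=0):
    rng = random.Random(seed)
    bits = [rng.randint(0, 1) for _ in weights]
    current = energy(bits, weights)
    best_bits, best = bits[:], current
    for step in range(steps):
        # Cool down geometrically from t_start to t_end.
        temperature = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(len(bits))
        bits[i] ^= 1  # propose flipping one bit
        candidate = energy(bits, weights)
        # Always accept downhill moves; accept uphill moves with a
        # probability that shrinks as the system cools.
        uphill_ok = rng.random() < math.exp((current - candidate) / temperature)
        if candidate <= current or uphill_ok:
            current = candidate
            if current < best:
                best_bits, best = bits[:], current
        else:
            bits[i] ^= 1  # reject the move and restore the bit
    return best_bits, best


weights = [3, 1, 1, 2, 2, 1]
best_bits, best_energy = simulated_annealing(weights)
print("grouping:", best_bits, "energy:", best_energy)
```

The occasional uphill move is what lets the algorithm “climb” out of a shallow valley while the temperature is still high. Quantum annealing replaces that thermal climbing with tunnelling through the barrier.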

- Enjoy the rest of the March 2017 issue of Physics World in our digital magazine or via the Physics World app for any iOS or Android smartphone or tablet. Membership of the Institute of Physics required