With software development becoming ever more important in physics research, Arfon Smith argues that we need to adopt better ways of recognizing those who contribute to this largely unrewarded activity.

What would you say are the core “products” of academic research? Most people, when asked this question, talk about research papers, trained scientists, books and perhaps even data. But this list misses a critical component of much of the research being done today: software.

We all know that much of modern physics research relies on specialist software, whether it’s for experiments such as the Large Hadron Collider that generate huge amounts of data, or for supercomputer simulations modelling the distribution of dark matter in the early universe. More than 90% of UK academics use software, according to a survey of Russell Group universities (Hettrick et al. 2014 UK Research Software Survey doi:10.7488/ds/253). About 70% say their research would be impractical without it, and more than half develop their own. Why, then, is software in physics not as visible as it arguably deserves to be?

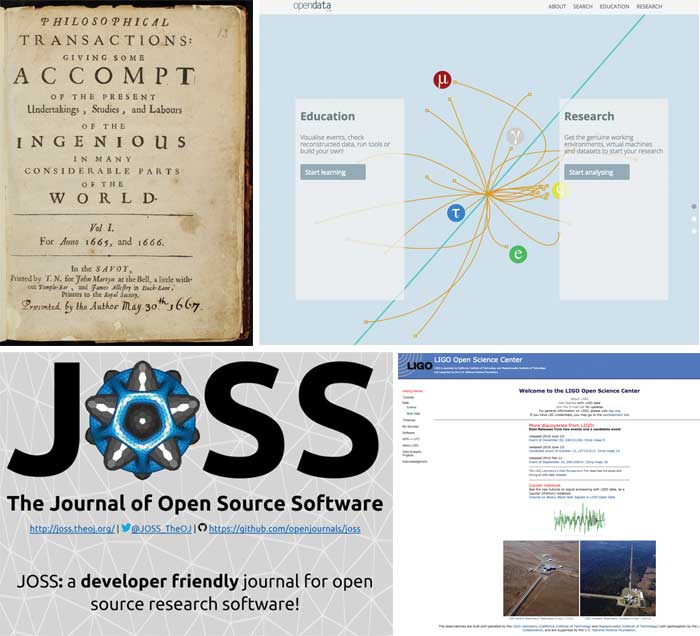

Part of the problem is that the research paper is an increasingly unsatisfactory way of describing modern, data-intensive research. Academic publishing hasn’t changed substantially since the first communications appeared in the journal Philosophical Transactions in 1665. Academics writing down their thoughts and sharing results with their peers through journals (printed or electronic) is the same solution we’ve had for more than 350 years.

Yet the full spectrum of activities in modern physics (and many other disciplines) simply can’t be captured with text, a few equations and the occasional plot or figure. To fully describe the origin of any individual result, researchers need to share not only their ideas and results (perhaps in the form of a paper) but also the data they collected and the analyses they carried out to reach their conclusions.

This idea of sharing more than just a paper isn’t new. In 1995 statisticians Jonathan Buckheit and David Donoho wrote “An article about a computational result is advertising, not scholarship. The actual scholarship is the full software environment, code and data, that produced the result.” Buckheit and Donoho argued that papers about “computational science” (the same argument also holds for physics) aren’t sufficiently complete descriptions of the work. They’re simply “adverts” for the research that we place in journals. For a third party to properly understand the research, they would need to be able to see all of the components that resulted in the paper (Wavelets and Statistics, New York: Springer, pp55–81).

The publishing problem

With dependencies on software woven into the fabric of modern research, finding ways for researchers to share this work seems like it should be a high priority.

On the face of it, asking researchers to share a more complete description of their research is hard to argue against. In reality, though, a number of factors limit progress. Probably the biggest impediment is that for many researchers, especially those early in their careers, the pressure to publish as many papers as possible trumps almost every other activity. Publishing anything in addition to a peer-reviewed paper requires extra time and effort that most researchers simply cannot afford.

But as we move towards a future where a growing fraction of research output is described by data and software, it becomes increasingly urgent to find ways of at least capturing references to software in papers and, ideally, of establishing community norms for publishing these tools.

There are a number of challenges to actually doing this. First, it’s not obvious how a researcher should cite software in a paper. Unlike a paper, which is a static “snapshot” of a research idea, a popular software package often has a lifetime of many years and is released many times under different version numbers, often with subtle changes in behaviour between versions. As a result, many consider the minimal useful citation to be one that records the software’s name, its location (i.e. where to find it) and the version used. Another obstacle is that even if an author wants to cite a piece of software, many journals don’t let them cite anything other than papers in their bibliographies. Finally, most academic fields lack cultural norms, such as dedicated journals, for publishing research software, which in turn means that time spent doing so generally isn’t recognized as a creditable research output. Put bluntly, why would anyone spend time publishing anything other than papers if it doesn’t contribute substantially to their career?
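To make the idea of a “minimal useful citation” concrete, here is a minimal sketch in Python, assuming that such a citation needs only the three fields named above: name, location and version. The class `SoftwareCitation`, the helper `is_minimally_useful` and the one-line output layout are hypothetical illustrations, not any journal’s or community’s standard format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoftwareCitation:
    """Hypothetical record holding the three minimal citation fields."""
    name: str       # what the software is called
    location: str   # where to find it, e.g. a repository URL or a DOI
    version: str    # the exact release used to produce the result

    def as_text(self) -> str:
        # Render a one-line, human-readable reference. The layout is an
        # illustrative choice, not any journal's house style.
        return f"{self.name} (version {self.version}), {self.location}"


def is_minimally_useful(citation: SoftwareCitation) -> bool:
    # A citation counts as "minimally useful" here only if all three
    # fields are non-empty once surrounding whitespace is stripped.
    return all(
        field.strip()
        for field in (citation.name, citation.location, citation.version)
    )
```

For example, `SoftwareCitation("ExampleFitter", "https://example.org/examplefitter", "1.2.3").as_text()` gives `ExampleFitter (version 1.2.3), https://example.org/examplefitter`, where the package name and URL are placeholders. Dropping the version makes `is_minimally_useful` return `False`, reflecting the point that a name and location alone do not pin down which behaviour of the software produced a result.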

Large physics collaborations are one area where all research outputs, including software and data, are shared well. This is probably down to several factors. First, the collaborations are so large that some individuals devote most of their time to writing software for data analysis and reduction, and so they “go the extra mile” in publishing their code. Second, the results from these experiments have such a high impact in science that community expectations for publishing all the research products (code, data, papers) are higher. Third, the community interested in reproducing these big results is large, so it’s more efficient for the wider community if the project releases software tools that enable others to check the data analyses.

A good recent example of a large collaboration publishing its research products well came in February 2016, when members of the Laser Interferometer Gravitational-Wave Observatory (LIGO) collaboration announced the first direct detection of gravitational waves. Alongside the paper describing the detection, they published all of the software used to analyse the data. The collaboration also created the “LIGO Open Science Center”, a complete online analysis environment in which this software could be used interactively. Publishing all of the constituent parts of their work meant that anyone with the time and interest could dive into the analysis carried out by the LIGO team, increasing the community’s confidence in this groundbreaking result.

Looking outside of academia

Over the past few decades, there has been a major shift in the cultural norms of developing and sharing software, affecting individuals, businesses and parts of academia. Businesses’ reliance on closed-source, proprietary software has given way to open-source development, with even the biggest stalwarts of proprietary software, such as Microsoft, embracing open source as the future of technology development.

The term “open source” is often used to describe more than one thing. Strictly speaking, open-source software is software that has been shared publicly together with one of a number of licences approved by the Open Source Initiative, which set out the conditions under which the code can be modified, reused and shared with others. What can be done with the code varies from licence to licence, but all of them permit the software to be used for any purpose. This is in contrast to, for example, some image-usage licences, which can specify that an image may not be used for commercial purposes.

In addition to being a collection of licences and legalese, the term “open source” is often used to describe the culture of open-source projects, in which there is an emphasis on working in an open and collaborative way, a focus on transparency and an effort to engage the community. As such, many of the principles of open source are well aligned with the core tenets of the open-science movement and academia more generally.

The success of open source relies not only on the goodwill of software developers and businesses to share their work free of charge, but also on an organically developed “ecosystem” built on a variety of factors (see box below). If open-source software is to flourish in the field of physics, physicists should consider adopting some of these key ingredients of success.

Data-science brain drain

Many of the problems we solve in academia, especially in data- and computer-intensive sciences, are, at least functionally, very similar to those in data-rich industries. This has led to a growing overlap in the skills required to be successful in both sectors. Often described with the catch-all term of “data scientist”, an individual capable of collecting, analysing and creating knowledge from data is highly employable in any large, data-driven organization. They might also be a good physicist.

In his 2013 blog post “The big data brain drain: why science is in trouble”, University of Washington data-science researcher Jake VanderPlas captures the essence of the problem facing academia. “The skills required to be a successful scientific researcher,” he writes, “are increasingly indistinguishable from the skills required to be successful in industry.”

VanderPlas is an astronomer and a prolific contributor to open-source tools that are used both in academia, for his research, and in industry, by data scientists. In his blog post, he describes a number of factors that should worry anyone who cares about the long-term health of our universities. First, the individuals most likely to suffer a career penalty for spending time on (open-source) software are some of the most employable people outside academia. Second, the work these individuals contribute to open source is highly visible and discoverable, because these tools matter so much in industry. Third, with jobs in industry often paying two or three times as much as postdoctoral salaries, many of the best and brightest young academics are leaving academia for industry.

One could argue that this “brain drain” is the university system working well for our economy by training a skilled workforce for industry. Unfortunately, though, much of modern research is highly data-driven and needs people with exactly these skills to make the best use of the voluminous data streams from modern experiments.

An imbalance of incentives

As things currently stand, most academic fields rely on a one-dimensional credit model in which the academic paper is the dominant factor. Incentives to publish other parts of the research cycle, such as software and data, do exist, but they operate at the level of the research community rather than that of the individual researcher.

Papers that are accompanied by well-described data and analysis routines should be easier to understand and reproduce, which in turn should lead to greater confidence in any new result. Physics has so far been left relatively unscathed compared with some other disciplines, but without this increased transparency many fields run the risk of placing too much trust in “black box” methods, in which data are fed into analysis routines and results are published with little critical review. In recent years a number of high-profile retractions of novel results, especially in the biosciences, have prompted some to describe a reproducibility “crisis”, and have led some scientific and medical publishers, such as PLOS, to require submitting authors to make software and data available when publishing a paper.

In physics and astronomy, publishers have so far been slower to adopt such requirements. But change is afoot. The American Astronomical Society journals The Astronomical Journal and The Astrophysical Journal, for example, which are published by IOP Publishing (also the publisher of Physics World), now accept software papers describing research software with an astrophysics application. The Elsevier-published journal Astronomy and Computing, meanwhile, is dedicated to topics spanning astronomy, computer science and information technology.

In addition, there is a growing list of journals designed specifically for publishing software papers, such as the Journal of Open Research Software, SoftwareX and The Journal of Open Source Software, whose development I led and where I continue to serve as editor-in-chief. While these solutions are not the same as an academic ecosystem that rewards all of the constituent parts of the research output, they are a step in the right direction.

Physics experiments are only getting bigger, and their data sets more complex. As a result, much of modern research depends on the availability of high-quality software and data products for community use. If we are to continue making the best use of these experiments, we will need to train – and retain – a workforce with a broad range of skills, including data analysis, visualization and theory. Achieving this will require us to rethink what “counts” as an academic contribution.

Authorship and reputation in open source

Authorship is an important potential signal of trust in open source. When choosing an open-source project to solve a problem, knowing who the main authors of a project are is critical for evaluating the potential quality of a package. With platforms such as GitHub, Bitbucket and GitLab, contributions of individuals to the open-source ecosystem are placed front-and-centre on user profiles and easily discovered when viewing a project.

Another core tenet of open source is the reuse of existing tools. There are tens of millions of open-source packages hosted on a variety of platforms, and most of these packages “depend” on other open-source tools. Services such as Libraries.io aggregate these packages and provide rich metrics tracking their usage and the dependencies between projects throughout the ecosystem. A project’s “rank” (that is, how heavily it is reused by other projects) is analogous to citation count in the academic literature and is a strong reputation signal for the community.
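The simplest version of such a reuse-based “rank” can be sketched as a count of reverse dependencies, much as a citation count tallies the papers that cite a given paper. The Python below is a minimal sketch assuming a hypothetical dependency map from each package to the packages it directly requires; it counts only direct reuse and is not the scoring method of any real aggregation service. The function names and package names are illustrative.

```python
from collections import Counter


def reverse_dependency_counts(dependencies: dict[str, list[str]]) -> Counter:
    """Count how many distinct packages directly depend on each package."""
    counts: Counter = Counter()
    for package, deps in dependencies.items():
        # set() so a package listing the same dependency twice counts once
        for dep in set(deps):
            if dep != package:  # ignore a package "depending" on itself
                counts[dep] += 1
    return counts


def rank_by_reuse(dependencies: dict[str, list[str]]) -> list[tuple[str, int]]:
    """Order packages by direct reuse, most-depended-upon first.

    Packages that nothing depends on are included with a count of zero;
    ties are broken alphabetically so the ordering is deterministic.
    """
    counts = reverse_dependency_counts(dependencies)
    all_packages = set(dependencies) | set(counts)
    return sorted(
        ((package, counts[package]) for package in all_packages),
        key=lambda item: (-item[1], item[0]),
    )
```

With the made-up graph `{"analysis": ["numlib", "plotlib"], "plotlib": ["numlib"], "fitter": ["numlib", "plotlib"], "numlib": []}`, `rank_by_reuse` puts `numlib` first (three dependents) and `plotlib` second (two), followed by `analysis` and `fitter` with none. Counting transitive dependents, or weighting each dependent by its own reuse, would be natural refinements of this direct count.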

- This article is published under the terms of the Creative Commons Attribution 3.0 licence.

- Enjoy the rest of the March 2017 issue of Physics World in our digital magazine or via the Physics World app for any iOS or Android smartphone or tablet. Membership of the Institute of Physics required