A technique known as a “weak measurement”, which allows physicists to measure certain properties of a quantum system without disturbing it, is being called into question by two physicists based in Canada and the US. The researchers argue that such measurements, and their counterparts known as “weak values”, might not be inherently quantum mechanical and do not provide any original insights into the quantum world. Indeed, they say that the results from such measurements can be replicated classically and are therefore not properties of a quantum system.

More than 25 years ago, Yakir Aharonov, Lev Vaidman and colleagues at Tel Aviv University in Israel came up with a unique way of measuring a quantum system without disturbing it to the point where decoherence occurs and some information is lost. This is unlike conventional “strong measurements” in quantum mechanics, in which the system “collapses” into a definite value of the property being measured – its position, for example. Instead, the researchers suggested that it is possible to gently or “weakly” measure a quantum system, and to gain some information about one property (such as its position) without disturbing a complementary property (momentum) and therefore the future evolution of the system. Although each measurement only provides a tiny amount of information, by carrying out multiple measurements and then looking at the average, one can accurately measure the intended property without distorting its final value.

Screening unwanted measurements

The process involves making a “pre-selection” by preparing a group of particles in some initial state, followed by weakly measuring each of the particles at some point in time. Then a second set of measurements, the “post-selection”, is made at a slightly later time. The results of the weak measurements will, on average, imply certain results for the post-selection measurements, but they do not determine them. Ultimately, by screening out unwanted measurements, one is left with a “weak value”.

In the original theoretical paper published in 1988, Aharonov and colleagues consider measuring the spin of a spin-½ particle. First, an ensemble of particles all in one particular state, say spin up, is created – this is the pre-selection. Next, one would make weak measurements of the spin of the particles many times, but as “gently” as possible. A final measurement would be made, and particles that are not in the desired state are discarded in the post-selection process. Then, by combining all three steps, one would be able to measure the state of the system, according to Aharonov and colleagues.

The paper, however, identifies a very strange property of weak values. If the weak measurement is done in a certain way, it is possible for the weak value of the spin to be 100 rather than one-half, which would be the outcome of a strong measurement. Aharonov and colleagues call this an “anomalous weak value” and the paper remains controversial.
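To see where such a number can come from, here is a minimal sketch in Python. It uses the standard textbook expression for a weak value, ⟨φ|A|ψ⟩/⟨φ|ψ⟩, where |ψ⟩ is the pre-selected state and |φ⟩ the post-selected one. The particular pair of states, the function name `weak_value_sz` and the choice of units (ħ = 1) are illustrative assumptions and are not taken from the 1988 paper.

```python
import math

def weak_value_sz(pre, post):
    """Weak value of S_z (in units of hbar) for real two-component spin states.

    pre and post are (up, down) amplitudes in the z basis.
    Uses the standard formula <post|S_z|pre> / <post|pre>.
    """
    overlap = post[0] * pre[0] + post[1] * pre[1]
    if overlap == 0:
        raise ValueError("orthogonal states: post-selection never succeeds")
    # S_z = (1/2) * diag(+1, -1) in the z basis
    numerator = 0.5 * (post[0] * pre[0] - post[1] * pre[1])
    return numerator / overlap

# Illustrative choice (an assumption): amplitudes (cos a, +sin a) and
# (cos a, -sin a), with a tuned so that the overlap cos(2a) is only 1/200.
a = 0.5 * math.acos(1 / 200)
pre = (math.cos(a), math.sin(a))
post = (math.cos(a), -math.sin(a))

anomalous = weak_value_sz(pre, post)               # close to 100
ordinary = weak_value_sz((1.0, 0.0), (1.0, 0.0))   # exactly 0.5
```

In this sketch the large value arises because the two states are almost orthogonal: the post-selection rarely succeeds, so the denominator of the formula is small.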

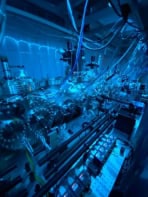

In 2011 Aephraim Steinberg and colleagues at the University of Toronto demonstrated the technique by tracking the average paths of single photons passing through a Young’s double-slit experiment. In recent years, this method of weak measurement has gained momentum and has been used in some quantum-information technologies, including quantum feedback control and quantum communications.

Weak understanding

Now, Christopher Ferrie of the University of New Mexico and Joshua Combes of the Perimeter Institute for Theoretical Physics in Waterloo, Canada, are questioning the concept of weak measurement. Indeed, they are highly sceptical of the whole field, saying that, at the very least, the information gleaned from a weak measurement is currently not understood.

“Weak values do not seem to be a property of the system in any way,” says Ferrie. He and Combes claim that while the idea of weakly measuring a system is fine, making pre- and post-selections is akin to having a set of data and just favouring a subset of it – meaning that any measurement made is a consequence of classical statistics, rather than a physical property of the system. “So long as there is some correlation between the second [weak measurement] and third [post-selection] steps, you will have an anomalous weak value,” says Ferrie. But such a correlation would mean that the original quantum system being measured is no longer sound.

To illustrate their point, the researchers have come up with a classical analogue of a weak value presented in the Aharonov paper by adopting the world’s simplest random system: a coin flip.

It can be imagined as a coin-flipping game in which one player, called Alice, flips a coin and only passes the coin on to the other player, Bob, if it is “heads”, which is pre-selection. Bob has no prior knowledge of the state of the coin and can only glance at it quickly to try to determine its state, which is the weak measurement. Bob then fumbles the coin so there is a small chance that it flips, which is the disturbance. Finally, he hands the coin back to Alice, who looks at its state and discards all coins that come back heads – which is post-selection.

Very occasionally, Alice will receive a coin that is tails. Because all the coins were pre-selected heads, she assumes that Bob had measured heads and then flipped the coin during the disturbance process.

If heads is given the value +1 and tails –1, and if Alice concludes that Bob flips one in every 100 coins, the mathematical operations outlined by Aharonov and colleagues suggest that the weak value for Bob’s weak measurements is 100. Like Aharonov’s “spin 100” weak value, this is an anomalous result, because the values assigned to heads and tails are +1 and –1, and one would expect the weak value to be somewhere between the two.
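The arithmetic behind such a result can be sketched in a few lines of Python. The model below is a toy version of the game and an illustrative assumption, not necessarily the exact one in Ferrie and Combes’s paper: Bob’s glance reports the correct face with probability (1 + λ)/2, and his fumble flips the coin with probability δ + sλ, where s = ±1 is what he reported. The symbols λ and δ, the function name `classical_weak_value` and the numerical values are all hypothetical.

```python
from fractions import Fraction

def classical_weak_value(lam, delta, correlated=True):
    """Average of Bob's reports, rescaled by 1/lam, over coins that come back tails.

    Every coin starts as heads (+1), which is the pre-selection.
    lam   : strength of Bob's glance (small means weak)
    delta : baseline chance that Bob's fumble flips the coin
    Hypothetical toy model; the probabilities are illustrative assumptions.
    """
    weighted_sum = Fraction(0)
    p_tails = Fraction(0)
    for s in (+1, -1):                      # Bob's report: +1 heads, -1 tails
        p_report = (1 + s * lam) / 2        # noisy reading of a heads coin
        # The fumble depends on the report only in the correlated case.
        p_flip = delta + s * lam if correlated else delta
        assert 0 <= p_flip <= 1, "need lam <= delta for valid probabilities"
        joint = p_report * p_flip           # report s AND coin returns tails
        weighted_sum += s * joint
        p_tails += joint
    return weighted_sum / p_tails / lam     # conditional mean, rescaled by 1/lam

lam = Fraction(1, 1000)
delta = Fraction(1, 99)
anomalous = classical_weak_value(lam, delta)                        # just under 100
uncorrelated = classical_weak_value(lam, delta, correlated=False)   # exactly 1
```

With these assumed numbers the coin comes back tails roughly once in 100 tries. When the fumble does not depend on what Bob reported, the same conditional average is 1, inside the range of values assigned to the coin; the anomalous number appears only when the two steps are correlated.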

Ferrie and Combes say that their example shows that weak measurements are merely an artefact of classical statistics and classical disturbances, and they argue that when a classical explanation suffices, there is no need to invoke a quantum explanation. “Statistics can fool you,” says Combes. “We think this particular weak-value puzzle is a statistical question, not a fundamentally quantum question. There might be something genuinely quantum about weak values, but to my eye that’s not clear yet.”

Rainer Kaltenbaek of the Quantum Foundations and Quantum Information group at the University of Vienna found the general idea underlying Ferrie and Combes’s analysis very interesting. “In particular, it shows that there’s often still confusion about what to make of weak values,” he says. He points out other research, carried out by Franco Nori of the University of Michigan and colleagues, which Combes and Ferrie refer to in their current work. Nori’s team has interpreted Steinberg’s experiment in completely classical terms.

Referring to Steinberg’s experiment with the photon trajectories, Kaltenbaek says that a weak value can be calculated if one averages over many photons. “It only becomes complicated if you try to infer something from that for a single photon – in my opinion, it [a weak value] does not tell you anything useful at all for a single photon,” he says, adding that Combes and Ferrie’s recent research illustrates this quite well.

Combes and Ferrie’s analysis is published in Physical Review Letters.