Did you know that as you get older, the flexible crystalline lenses in your eyes become stiffer? This stiffness makes it harder for each lens to change shape to suit the distance you are trying to focus on. This reduction in the eye’s range of accommodation is known as presbyopia and affects more than a billion people, particularly older people.

Recent technological advances have produced several types of glasses and contact lenses that correct presbyopia. Yet these forms of correction all have shortcomings. For instance, “progressives” (which split far and near focus vertically in each lens) perform poorly in tasks requiring side-to-side head movement, as they cannot adjust for the changes in focus during such movements. Others, like “monovision” lenses (which split far and near focus between the two eyes), fall short on visual acuity and near-distance performance.

Smart glasses focus automatically

The main reason for these flaws is that all of these methods employ fixed focal elements and can therefore only approximate the correction that the eye needs. A more natural remedy, such as attaching a flexible lens to the eye’s ciliary muscles, would require invasive surgery, either to restore the flexibility of the crystalline lens or to implement some form of focus-tunable lens element.

To overcome these shortcomings and adjust the corrective lens optimally, a research team led by Gordon Wetzstein at Stanford University designed focus-tunable eyeglasses called autofocals. The researchers used two infrared-sensitive cameras to determine the relative angle between the eyes, known as vergence. This angle is directly related to the depth at which the viewer is focusing (Science Advances 10.1126/sciadv.aav6187).

In other words, one camera records the location of everything in the outside world and the other camera faces the eyes to track where they are looking. Using binocular disparity (the difference in an object’s location as seen by the right and left eyes) and the horizontal distance between objects in the images, the autofocals combine the information from the two cameras to calculate the distance to the object that the viewer is looking at.
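To make the link between vergence and viewing distance concrete, here is a minimal Python sketch of the underlying geometry. It is not the researchers’ algorithm: it assumes the target sits straight ahead, midway between the eyes, and it uses an illustrative interpupillary distance of 63 mm. The function names and the constant are hypothetical, chosen for this example only.

```python
import math

# Assumed interpupillary distance in metres (illustrative value, not from the study).
DEFAULT_IPD_M = 0.063


def vergence_to_distance_m(vergence_deg, ipd_m=DEFAULT_IPD_M):
    """Distance from the midpoint between the eyes to the fixation point.

    Assumes symmetric fixation: the target lies straight ahead, so each
    eye rotates inward by half of the vergence angle.
    """
    if not 0.0 <= vergence_deg < 180.0:
        raise ValueError("vergence angle must be in [0, 180) degrees")
    if vergence_deg == 0.0:
        return math.inf  # parallel lines of sight: target at infinity
    half_angle = math.radians(vergence_deg) / 2.0
    return (ipd_m / 2.0) / math.tan(half_angle)


def distance_to_vergence_deg(distance_m, ipd_m=DEFAULT_IPD_M):
    """Inverse relation: vergence angle for a target at a given distance."""
    if distance_m <= 0.0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))
```

Under these assumptions, a nearby object produces a larger vergence angle than a distant one, which is why measuring the angle between the eyes gives an estimate of the depth at which the viewer is focusing.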

Adjusting the lens to object depth

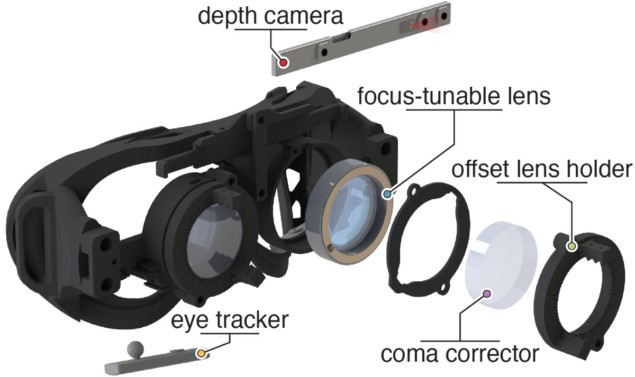

The research team built a wearable prototype that combines electronically controlled liquid lenses, a wide field-of-view depth camera and binocular eye tracking. Each liquid lens is enclosed in glass and metal and has two chambers separated by a membrane. The membrane is flexed by pushing liquid into or out of the two chambers.

The team compared their autofocal design against progressive and monovision lenses using metrics including visual acuity (sharpness), contrast sensitivity and refocusing rate. They found, for instance, that their design helped people to maintain 20/20 visual acuity at tested distances of 0.167, 1.25 and 2.5 D (dioptres, the reciprocal of a distance in metres, so roughly 6, 0.8 and 0.4 m). To account for near- and far-sightedness or astigmatism, the team used an offset lens, which allowed them to test a wide variety of conditions in a wide variety of people.
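Because dioptres are simply reciprocal metres, the tested distances are easy to convert. The short Python sketch below shows the arithmetic; the function names are hypothetical and exist only for this illustration.

```python
import math


def dioptres_to_metres(dioptres):
    """Convert an optical distance in dioptres to metres (d = 1 / D)."""
    if dioptres < 0.0:
        raise ValueError("dioptres must be non-negative")
    # 0 D corresponds to an object at infinity.
    return math.inf if dioptres == 0.0 else 1.0 / dioptres


def metres_to_dioptres(distance_m):
    """Convert a distance in metres to dioptres (D = 1 / d)."""
    if distance_m <= 0.0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


if __name__ == "__main__":
    # The three test distances quoted in the article.
    for d in (0.167, 1.25, 2.5):
        print(f"{d} D -> {dioptres_to_metres(d):.2f} m")
```

Run as a script, this prints distances of about 5.99 m, 0.80 m and 0.40 m, which correspond to far, intermediate and near viewing.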

The researchers concluded that focus-tunable eyeglasses perform better than (or at least comparably to) progressive and monovision lenses in terms of visual acuity and contrast sensitivity, and enable faster and more accurate adjustments in focus.

“Despite power requirements and remaining engineering challenges, our study demonstrates that a paradigm shift toward digital eyeglasses is valuable, with the benefits extending beyond presbyopia correction. What seems at first like a disadvantage, the need for a battery, actually opens the door to more capabilities,” the authors wrote.