With fully fledged quantum computers still potentially decades off, several groups of physicists around the world have found an alternative way of exploiting the processing power of quantum mechanics. They have built a relatively simple photonic device to carry out one specific calculation that is very difficult to perform using classical computers and which, they say, might demonstrate the greater inherent speed of quantum-based devices within the next 10 years.

Quantum computers process quantum bits – or qubits – which can exist in two states at the same time. This could, in principle, lead to an exponential increase in the processing speed of a quantum computer compared with classical devices. This quantum processing could be used, among other things, to rapidly factorize large numbers into their constituent primes and so break codes that are, in practice, uncrackable using conventional computers. However, many technical challenges remain for those trying to develop quantum computers and today the best that a quantum computer can do is to factor small numbers such as 15 and 21.

Some physicists believe that an intermediate quantum computer called a “boson sampling” machine could offer a shortcut to achieving the greater speed of quantum computing. Such a machine is not a universal quantum computer; instead, it carries out one fixed task. The device consists of a network of beam splitters that converts one set of photons arriving at a number of parallel input ports into a second set leaving via a number of parallel outputs. Its task is to work out the probability that a certain input configuration will lead to a certain output.

Bosonic properties

In 2011 Scott Aaronson and Alex Arkhipov of the Massachusetts Institute of Technology showed that calculating that probability becomes exponentially more difficult using a classical computer as the number of input photons and the number of input and output ports increase. That difficulty is due to the unusual behaviour of photons, which belong to a class of fundamental particles known as bosons, any number of which can occupy a given quantum state. When two identical photons reach a beam splitter at exactly the same time, they will always follow the same path afterwards – both going either left or right – and it is that behaviour that is so hard to model classically. The MIT researchers found that predicting the machine’s output in fact requires the calculation of a series of “permanents” – single numbers associated with specific matrices that are similar to determinants but which are much harder to work out.
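
To make the idea of a permanent concrete, here is a minimal Python sketch. It is an illustration only, not the code used by any of the groups: the function names `permanent` and `output_probability` are hypothetical, the brute-force expansion is practical only for a handful of photons, and the 50:50 beam-splitter matrix is one common textbook convention chosen as an assumption. The probability formula used is the standard one for ideal, perfectly identical photons.

```python
from collections import Counter
from itertools import permutations
from math import factorial, prod, sqrt


def permanent(matrix):
    """Permanent by direct expansion: the determinant's sum over
    permutations, but with every term added (no minus signs).
    The cost grows as n!, so this is only usable for small matrices."""
    n = len(matrix)
    return sum(
        prod(matrix[row][cols[row]] for row in range(n))
        for cols in permutations(range(n))
    )


def output_probability(unitary, inputs, outputs):
    """Probability that ideal identical photons entering the listed input
    ports leave via the listed output ports (ports may repeat).
    unitary[out][in] is the amplitude for going from port `in` to `out`."""
    sub = [[unitary[o][i] for i in inputs] for o in outputs]
    # Divide by the factorials of how many photons share each port.
    norm = prod(factorial(c) for c in Counter(inputs).values())
    norm *= prod(factorial(c) for c in Counter(outputs).values())
    return abs(permanent(sub)) ** 2 / norm


# Assumed convention for a single 50:50 beam splitter.
s = 1 / sqrt(2)
beam_splitter = [[s, s], [s, -s]]

# One photon in each input port.
one_each = output_probability(beam_splitter, [0, 1], [0, 1])
both_port_0 = output_probability(beam_splitter, [0, 1], [0, 0])
both_port_1 = output_probability(beam_splitter, [0, 1], [1, 1])
print(one_each, both_port_0, both_port_1)
```

In this two-photon case the permanent for "one photon at each output" vanishes, so under these assumptions the photons always leave together, each output port being chosen half of the time. For larger networks the number of terms in the expansion grows factorially, which gives a feel for why the calculation quickly becomes impractical.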

“Having to work out these permanents means that even the best desktop computers would struggle to get above about 30 photons,” says Ian Walmsley of the University of Oxford in the UK. “But a boson sampling machine instead is a kind of analogue computer that uses the physical properties of bosons themselves to work out the answer.”

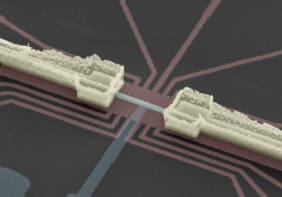

Four independent groups of researchers have now backed up Aaronson and Arkhipov’s theoretical work with experimental results. One is led by Walmsley and includes researchers from Oxford and Southampton universities. Another is an Australian/US group led by Andrew White of the University of Queensland that includes Aaronson. Both of these groups have had their results published in Science, while the other two groups – based in Austria/Germany and Italy/Brazil – have released their results on the arXiv preprint server. All groups used similar experimental set-ups based on either custom compact optical chips or commercial photonics with five or six inputs and outputs.

Injecting photons

The tests involved injecting up to three photons, or four in the case of the Oxford group, into specific individual inputs and then registering at which outputs photons emerged. Repeating this process over and over again, the researchers were able to work out what fraction of the time specific output configurations appeared, and therefore the probability that those configurations would occur.
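
The counting step can be sketched in a few lines of Python. This is a hedged illustration, not the groups' analysis code: the function name `estimate_probabilities` is hypothetical, the list of recorded outcomes is made up purely for the example, and the uncertainty shown is the simple binomial standard error, which ignores the experimental imperfections a real analysis would have to handle.

```python
from collections import Counter
from math import sqrt


def estimate_probabilities(recorded_outcomes):
    """Turn a list of recorded output configurations into estimated
    probabilities, each paired with a binomial standard error."""
    counts = Counter(recorded_outcomes)
    total = len(recorded_outcomes)
    estimates = {}
    for outcome, count in counts.items():
        p = count / total
        estimates[outcome] = (p, sqrt(p * (1 - p) / total))
    return estimates


# Made-up data: each tuple lists the output ports at which photons were
# detected in one run of a hypothetical two-photon experiment.
runs = [(0, 1)] * 30 + [(0, 2)] * 50 + [(1, 2)] * 20

estimates = estimate_probabilities(runs)
for outcome, (p, err) in sorted(estimates.items()):
    print(outcome, round(p, 3), "+/-", round(err, 3))
```

Because the standard error shrinks only as the square root of the number of runs, halving the uncertainty in this simple model requires four times as many repetitions.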

Comparing these results with the probabilities calculated using matrix permanents, the groups found experiment and theory to be in very good agreement once the theory had been corrected for two sources of experimental error: photons that were sometimes not perfectly indistinguishable (as they must be), and photons that sometimes entered the system as pairs rather than individually. The finite time over which data were collected also limited the accuracy of the results.

“The bottom line is that we have built boson sampling machines, that they work and that the errors are not fatal,” says Walmsley.

Direction is clear

Being able to account for these errors suggests that the devices can be scaled up to the point where they overtake classical computers, according to Walmsley. The aim, he explains, is to improve the technology in order to process around 30 photons, which is generally considered to be about the limit for a present-day desktop classical computer, and then up towards 100, which would put the boson samplers in a league of their own. “To do that you need to make sure that the photons are all exactly the same, that they arrive at the beam splitters at exactly the same time, and that the detector efficiency allows you to sample enough data,” he says. “There are challenges in overcoming all of these things and that will mean a lot of work, but I think the direction we need to take is clear.”

White agrees. “I think that within a decade we can get there,” he says.

Walmsley goes further, saying that boson sampling machines “might not simply be a test exercise” for universal quantum computers and that they may be used to compute additional, useful algorithms, although he points out that no such algorithms have been discovered yet.

However, Raymond Laflamme of the University of Waterloo in Canada is a little more cautious. He says that he read about the latest results “with enthusiasm” and believes that boson sampling “is teaching us something important about quantum information processing – that we might not need a fully operational quantum computer to have an interesting speed up from quantum mechanics”. But he is not sure the current devices really can be scaled up. “It is not clear to me that the experimental results will not be swamped by the imperfections when trying to reach 20 or 30 photons,” he says. “But on the other hand I can’t prove it, and that is the challenge for the experimentalists.”