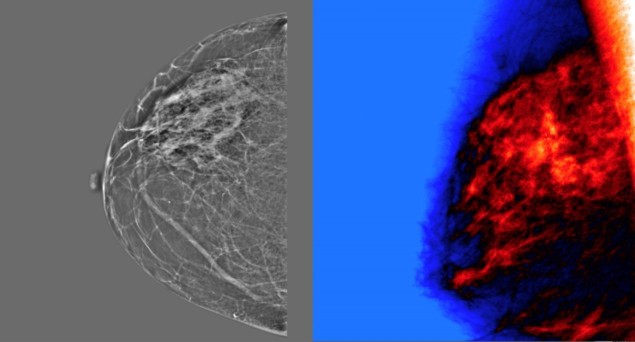

Mammography screening is widely employed for early detection of breast cancer. But reading mammograms currently relies on subjective human interpretation and, as such, the screening process is imperfect. In the USA, for example, screening has an estimated false-positive rate of about 10%, which increases patient anxiety and can result in unnecessary interventions or treatments.

Advances in deep learning and increased computational power have recently renewed interest in the use of artificial intelligence (AI) to increase screening accuracy. With this aim, the Digital Mammography (DM) DREAM Challenge used a crowdsourced approach to develop and validate AI algorithms that may improve breast cancer detection. The goal: to assess whether such algorithms can match or improve interpretations of mammograms by radiologists (JAMA Netw. Open 10.1001/jamanetworkopen.2020.0265).

The DM DREAM Challenge – directed by IBM Research, Sage Bionetworks and the Kaiser Permanente Washington Research Institute – is the largest objective study of deep learning performance for automated mammography interpretation to date. “This DREAM Challenge allowed for the rigorous and appropriate assessment of tens of advanced deep learning algorithms in two independent databases,” explains Justin Guinney, president of the DREAM Challenges.

The challenge required participants to develop algorithms that take screening mammography data as input and output a score representing the likelihood that a woman will be diagnosed with breast cancer within the next 12 months. In a sub-challenge, the algorithms could also access images from previous screening examinations, as well as clinical and demographic risk factor information.

The data for the challenge were provided by Kaiser Permanente Washington (KPW) in the USA and Karolinska Institute (KI) in Sweden. The KPW data set, which included 144,231 screening exams from 85,580 women, of whom 1.1% were cancer positive, was split for use in algorithm training (70%) and evaluation (30%). The KI data set, used only for algorithm validation, comprised 166,578 exams from 68,008 women, of whom 1.1% were cancer positive.

To ensure the privacy of these data, both data sets were securely protected behind a firewall and not accessible to challenge participants. Instead, participants sent their algorithms to the organisers for automated training and testing behind the firewall.

Crowdsourced competition

The challenge was taken up by more than 1100 participants, making up 126 teams from 44 countries. In the first stage, the algorithms were trained and evaluated on the KPW data, with the area under the receiver operating characteristic curve (AUC), a measure of how well the algorithm's continuous score separates positive from negative breast cancer status, used to evaluate and rank algorithm performance.
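To make the AUC concrete: it equals the probability that a randomly chosen cancer-positive exam receives a higher score than a randomly chosen cancer-negative one. The sketch below computes it directly from that definition using toy scores, not challenge data. It is an illustration of the metric only, not the challenge's scoring code.

```python
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the fraction of (positive, negative) exam pairs ranked correctly.

    labels[i] is 1 for a cancer-positive exam and 0 otherwise; ties count as half.
    This pairwise version is O(n_pos * n_neg); large data sets would use a
    rank-based formula instead.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative exam")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))


# Toy example: 2 positives, 3 negatives; 5 of the 6 pairs are ranked correctly
print(auc([0.9, 0.8, 0.35, 0.3, 0.1], [1, 0, 1, 0, 0]))  # 0.8333333333333334
```

An AUC of 1.0 means every positive exam outscores every negative one; 0.5 is no better than chance.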

Interestingly, including clinical data and prior mammograms did not improve the algorithms’ performance. The DM DREAM team suggest that perhaps participants did not fully exploit this information and recommend that future algorithm development should focus on the use of a patient’s prior images.

The eight top-performing teams were invited to collaborate on refining their AI algorithms further, to evaluate whether an ensemble approach could improve overall performance. The output of this "community phase" was the challenge ensemble method (CEM), a weighted aggregation of algorithm predictions. The CEM was also combined with the radiologists' assessments in a second ensemble model, called CEM+R.
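As a rough illustration of what "a weighted aggregation of algorithm predictions" can mean, the sketch below takes a normalised weighted average of several models' per-exam scores. The weights, the team scores and the 50/50 CEM+R mixing are all hypothetical; the article does not say how the challenge chose its weights or exactly how the radiologists' assessments were folded in, so this is not the CEM itself.

```python
from typing import List, Sequence


def weighted_ensemble(predictions: Sequence[Sequence[float]],
                      weights: Sequence[float]) -> List[float]:
    """Weighted average of per-exam scores from several models.

    predictions[m][i] is model m's score for exam i and weights[m] is the
    (hypothetical) weight given to model m; weights are normalised to sum to 1.
    """
    if not predictions or len(predictions) != len(weights):
        raise ValueError("need at least one model and one weight per model")
    total = float(sum(weights))
    n_exams = len(predictions[0])
    return [
        sum(w * model[i] for w, model in zip(weights, predictions)) / total
        for i in range(n_exams)
    ]


# Three hypothetical team models scoring two exams (toy numbers, not challenge data)
team_scores = [[0.2, 0.9], [0.4, 0.7], [0.1, 0.8]]
cem = weighted_ensemble(team_scores, [0.5, 0.3, 0.2])  # about [0.24, 0.82]

# CEM+R-style combination (assumption): treat the radiologist's recall
# decision (0 or 1) as one more input, with an arbitrary 50/50 weighting
radiologist_recall = [0, 1]
cem_r = weighted_ensemble([cem, radiologist_recall], [0.5, 0.5])  # about [0.12, 0.91]
print(cem, cem_r)
```

In practice the weights would be fitted on training data rather than set by hand.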

Because radiologists give a binary interpretation (recall/no recall) rather than a continuous score, the team compared the two by calculating the CEM's specificity at an operating point set to the radiologists' sensitivity at each institution. For the KPW data set (with a radiologist sensitivity of 85.9%), the top-performing AI model, the CEM and the radiologists achieved specificities of 66.3%, 76.1% and 90.5%, respectively. While the CEM remained inferior to the radiologists, CEM+R increased the specificity to 92%.
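The matched-sensitivity comparison can be sketched as follows: pick the score threshold at which the algorithm flags at least the same fraction of cancer-positive exams as the radiologists did, then measure how many cancer-negative exams fall below that threshold. This assumes a simple "highest threshold that reaches the target sensitivity" rule on toy data; the challenge's exact procedure may differ.

```python
import math
from typing import Sequence, Tuple


def specificity_at_sensitivity(scores: Sequence[float], labels: Sequence[int],
                               target_sensitivity: float) -> Tuple[float, float]:
    """Return (threshold, specificity) with sensitivity >= target_sensitivity.

    Exams scoring >= threshold are treated as recalled. The threshold is the
    highest score that still recalls enough cancer-positive exams.
    """
    if not 0.0 < target_sensitivity <= 1.0:
        raise ValueError("target sensitivity must be in (0, 1]")
    pos = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative exam")
    # Number of positives that must be recalled to reach the target sensitivity
    k = max(1, math.ceil(target_sensitivity * len(pos)))
    threshold = pos[k - 1]
    # Specificity: fraction of negatives correctly left un-recalled
    specificity = sum(1 for s in neg if s < threshold) / len(neg)
    return threshold, specificity


# Toy data: 4 positives, 6 negatives
scores = [0.95, 0.9, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
labels = [1, 0, 1, 0, 1, 0, 1, 0, 0, 0]
print(specificity_at_sensitivity(scores, labels, 0.75))  # (0.5, 0.6666666666666666)
```

Raising the target sensitivity lowers the threshold, which recalls more negatives and so reduces specificity; this trade-off is why the comparison is only fair at a fixed sensitivity.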

The challenge team repeated the assessment using the KI data. For these exams, each mammogram underwent double reading by two radiologists, so the first reader's interpretation was used to mirror the single-reader setting of the KPW data set. At the first readers' sensitivity (77.1%), the specificities of the top model, the CEM, the radiologists and the CEM+R were 88%, 92.5%, 96.7% and 98.5%, respectively. Again, CEM+R provided the highest specificity. The team also compared the ensemble method with the double-reading results and found that, in this case, CEM+R did not improve upon the consensus interpretations.

Artificial intelligence versus 101 radiologists

The results show promise for deep learning to enhance the accuracy of mammography screening. While no single AI algorithm outperformed the radiologist benchmarks, the CEM+R model improved performance over single-radiologist interpretation, as used in the USA. In a double-reading and consensus environment, as in Sweden, adding AI may have less effect. However, it is likely that training an ensemble of AI algorithms together with radiologists' consensus assessments would improve accuracy.

The challenge team conclude that combining AI algorithms with radiologist interpretation could reduce mammography recall rates by 1.5%. With some 40 million women screened for breast cancer in the USA each year, this means more than half a million women annually would not have to undergo unnecessary diagnostic work-up.
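The half-million figure follows from simple arithmetic if the 1.5% is read as an absolute reduction in the share of screened women who are recalled. That reading is an assumption, though it matches the 1.5-point specificity gain reported for CEM+R on the KPW data (90.5% to 92%):

$$
40 \times 10^{6}\ \text{women per year} \times 0.015 = 6 \times 10^{5}\ \text{fewer recalls per year}
$$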