Quantum theory is often portrayed as a disruptive force, complicating everything that classical physics seemed to have figured out. Now, however, physicists at Lawrence Berkeley National Laboratory (LBNL) in the US have demonstrated that the two can work side by side, in a proof-of-principle study that shows how a quantum computer can complement a classical method of modelling high-energy particle collisions.

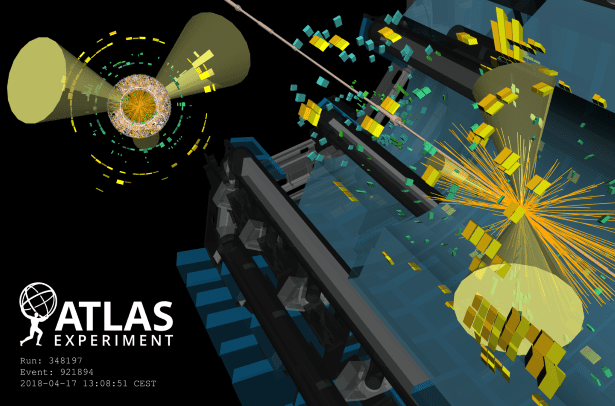

Machines such as CERN’s Large Hadron Collider (LHC) smash protons together at energies of more than 1 TeV, producing showers of thousands of particles. Physicists use computer models to predict what happens to those particles by the time they reach a detector. In one such modelling technique, known as a parton shower, the particles that reach the detector are assumed to be the last step in a long cascade of particles and radiation that converted into one another after the initial collision.

For this parton shower to include quantum features of particle interactions, however, the model needs to simultaneously consider all possible intermediate particles that could form between the initial and final particles – something that cannot be done by a classical computer algorithm, says Christian Bauer, a theoretical physicist at LBNL and co-author of the paper. “What a classical [parton] shower does is that it sort of goes through and produces a particular event, one at a time, with a particular intermediate particle,” Bauer explains. “The quantum version of the shower sort of does all possibilities in one shot.”
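The distinction Bauer draws can be illustrated with a toy calculation. The sketch below is not the LBNL algorithm: the two intermediate particle types and their amplitudes are hypothetical values chosen only to show the arithmetic. A classical shower that generates one intermediate particle per event effectively adds probabilities, whereas a quantum treatment adds amplitudes first and squares afterwards, which lets the alternatives interfere.

```python
# Toy illustration only: the particle labels and amplitude values below are
# hypothetical and are not taken from the study.
amplitudes = {
    "intermediate_a": complex(0.6, 0.0),
    "intermediate_b": complex(-0.4, 0.0),
}


def classical_probability(amps):
    """Treat each intermediate particle as a separate event history.

    Probabilities |A_i|^2 are added, so no interference can appear.
    """
    return sum(abs(a) ** 2 for a in amps.values())


def quantum_probability(amps):
    """Consider all intermediate particles at once.

    Amplitudes are added before squaring, |sum_i A_i|^2, so the
    cross terms (interference) are included.
    """
    return abs(sum(amps.values())) ** 2


def interference_term(amps):
    """Difference between the quantum and classical results."""
    return quantum_probability(amps) - classical_probability(amps)


if __name__ == "__main__":
    print("classical:", classical_probability(amplitudes))
    print("quantum:  ", quantum_probability(amplitudes))
    print("interference:", interference_term(amplitudes))
```

With these made-up numbers the classical sum is about 0.52 while the quantum result is about 0.04, because the two amplitudes have opposite signs and largely cancel. An event-by-event classical sampler has no way to produce that cancellation.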

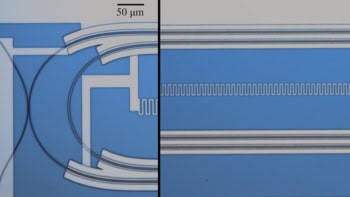

In their study, which appears in Physical Review Letters, Bauer and colleagues created a quantum algorithm for the parton shower. To do this, they developed a simplified version of the Standard Model of particle physics that shares some of the full model’s features but is simple enough for present-day quantum computers to execute. They then used the IBM Q Johannesburg chip to calculate details of particle processes that can occur within this simplified model. This IBM chip has 20 superconducting quantum bits (qubits), and the LBNL scientists used cloud access to program it to run their quantum parton shower algorithm on 5 qubits, using 48 quantum gate operations. When they compared the real chip’s output to a prediction made by IBM’s quantum computer simulator, they found excellent agreement – indicating that the computer fully captured quantum effects in their particle model.
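One common way to quantify agreement between a real chip and a simulator is to compare the two measured bit-string distributions. The sketch below uses the total variation distance for this. Both the choice of metric and the counts are assumptions made for illustration; the article does not say how the LBNL team scored the agreement, and the numbers are placeholders rather than data from the study.

```python
def to_distribution(counts):
    """Convert raw measurement counts into probabilities that sum to 1."""
    total = sum(counts.values())
    return {bits: n / total for bits, n in counts.items()}


def total_variation_distance(p, q):
    """Half the summed absolute difference between two distributions.

    Returns 0 for identical distributions and 1 for non-overlapping ones.
    """
    outcomes = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in outcomes)


# Placeholder counts over 5-qubit bit strings (NOT results from the paper).
hardware_counts = {"00000": 480, "00001": 270, "00010": 250}
simulator_counts = {"00000": 500, "00001": 250, "00010": 250}

tvd = total_variation_distance(
    to_distribution(hardware_counts),
    to_distribution(simulator_counts),
)

if __name__ == "__main__":
    print("total variation distance:", tvd)
```

A value close to zero would indicate that hardware noise has not washed out the distribution the simulator predicts. A five-qubit register has only 2^5 = 32 possible bit strings, so a comparison of this kind is cheap at the scale described here.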

A quantum problem for a quantum machine

The idea that quantum effects are hard or impossible to model on non-quantum devices is an old one, dating back to lectures given by the physicist Richard Feynman in the early 1980s. Features of parton showers that are formulated in the language of quantum mechanics from the get-go certainly fall into this category, says Jesse Thaler, a physicist at the Massachusetts Institute of Technology who was not involved in the study. “While some aspects of particle scattering can be described in a classical language, nature is fundamentally quantum mechanical,” Thaler says. The current study, he suggests, could be a stepping-stone towards a future in which theorists use the outputs of both classical and quantum computers to piece together more complex models of what happens inside particle colliders.

The speed at which that future arrives, however, will depend on overcoming certain hardware challenges within quantum computing. “While I would be surprised if these challenges could be overcome before the end of the LHC era, it is plausible that these kinds of hybrid classical and quantum algorithms could be useful for interpreting data from a future collider,” Thaler predicts.

Two-way collaboration

Even though quantum computers are not yet advanced enough to outperform classical machines completely, Bauer thinks there are benefits in making them work together. While classical computational techniques produce excellent results in some areas of particle physics, he and his colleagues aim to concentrate on inherently quantum effects that classical machines could never properly handle. “We should only ask quantum computers to do the things that are hard to do on classical computers,” he says.

Benjamin Nachman, a physicist at LBNL and lead author of the study, adds that collaboration between high-energy physicists and quantum information scientists is a two-way street. “There are techniques for how to do [high energy] physics that we could apply to improve error mitigation on quantum computers,” he says. He and his collaborators have begun exploring some of these techniques, aided by a US Department of Energy programme that provides funding for this type of interdisciplinary partnership.

In the meantime, the LBNL team is focusing on making their current “toy” version of Standard Model physics, and the accompanying quantum algorithm, more sophisticated. “If a better [quantum] computer comes tomorrow, we can run the model that we developed here with more precision,” Bauer says. “But in order to really go to the Standard Model, there’s more theoretical work needed as well – which is not unsurmountable at all, it just needs to be done.”