Two new codes that, for the first time, apply Einstein’s complete general theory of relativity to simulate how our universe evolved have been developed independently by two international teams of physicists. They pave the way for cosmologists to confirm whether our interpretations of observations of large-scale structure and cosmic expansion are telling us the true story.

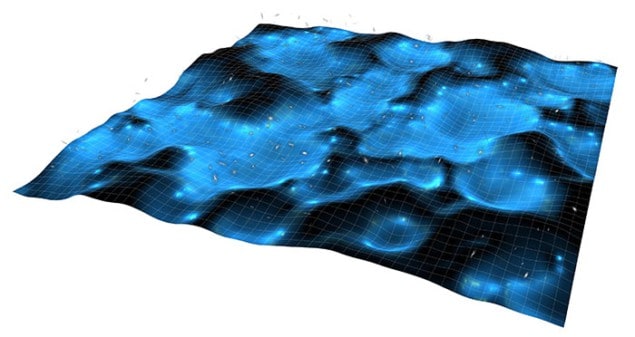

The impetus to develop codes that apply general relativity to cosmology stems from the limitations of traditional numerical simulations of the universe. For reasons of simplicity and computing power, such models currently use Newtonian gravity and assume a homogeneous universe when describing cosmic expansion. On the largest scales the universe is indeed homogeneous and isotropic: averaged over large enough volumes, matter is distributed evenly and looks the same in every direction. On smaller scales, however, the universe is clearly inhomogeneous, with matter clumped into chains of galaxies and filaments of dark matter assembled around vast voids.

Uneven expansion?

However, the expansion of the universe could be proceeding at different rates in different regions, depending on the local density of matter. Where matter is densely clumped together, its gravity slows the expansion, whereas in the relatively empty voids the universe can expand unhindered. This could affect how light propagates through such regions, showing up in the relationship between the distance to objects of known intrinsic luminosity and their cosmological redshift. Astronomers call such objects standard candles, because their distance can be inferred from how bright they appear to us.
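The standard-candle method rests on the inverse-square law: a source of luminosity L observed with flux F lies at a distance d = sqrt(L / (4πF)). In cosmology, the distance defined this way is called the luminosity distance. The minimal Python sketch below assumes SI units and a source whose luminosity is already known; the function name is illustrative only.

```python
import math


def distance_from_flux(luminosity_w: float, flux_w_per_m2: float) -> float:
    """Distance (m) to a standard candle from its luminosity (W) and observed flux (W/m^2).

    Inverts the inverse-square law F = L / (4 * pi * d^2).
    """
    if luminosity_w <= 0 or flux_w_per_m2 <= 0:
        raise ValueError("luminosity and flux must be positive")
    return math.sqrt(luminosity_w / (4.0 * math.pi * flux_w_per_m2))


# The same candle seen four times fainter must be twice as far away.
near = distance_from_flux(3.8e26, 1.0e-8)
far = distance_from_flux(3.8e26, 0.25e-8)
print(far / near)
```

Because the candle's luminosity is fixed, only the ratio of fluxes matters for relative distances, which is why a population of identical candles can map out distance against redshift.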

Now, James Mertens and Glenn Starkman of Case Western Reserve University in Ohio, together with John T Giblin of Kenyon College, have written one such code, while Eloisa Bentivegna of the University of Catania in Italy and Marco Bruni of the Institute of Cosmology and Gravitation at the University of Portsmouth have independently developed a second, similar code.

Fast voids and slow clumps

In a homogeneous universe, the distances to supernovae and their cosmological redshifts are related to one another in a specific way, but the question, according to Starkman, is: “Are they related in the same way in a lumpy universe?” The answer has direct repercussions for measurements of the universe’s expansion rate and of the strength of dark energy, both of which are inferred from standard candles such as supernovae.
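In a homogeneous universe, that “specific way” is the luminosity distance-redshift relation. For a spatially flat universe containing matter and a cosmological constant, d_L(z) = (1 + z)(c/H0) ∫₀^z dz′/E(z′), with E(z) = sqrt(Ω_m(1 + z)³ + Ω_Λ) and Ω_Λ = 1 − Ω_m. The Python sketch below evaluates this with Simpson’s rule. It is an illustration under stated assumptions: radiation is neglected, Ω_m = 0.3 is an assumed illustrative value, and H0 defaults to the 73 km/s/Mpc quoted in this article. Whether a lumpy universe obeys this same relation is exactly what the new codes can test.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def hubble_ratio(z: float, omega_m: float) -> float:
    """E(z) = H(z)/H0 for a flat universe of matter plus a cosmological constant (assumed)."""
    omega_lambda = 1.0 - omega_m
    return math.sqrt(omega_m * (1.0 + z) ** 3 + omega_lambda)


def luminosity_distance(z: float, h0: float = 73.0, omega_m: float = 0.3, steps: int = 1000) -> float:
    """Luminosity distance in Mpc: d_L = (1 + z) (c / H0) * integral_0^z dz' / E(z')."""
    if steps % 2:
        steps += 1  # Simpson's rule needs an even number of intervals
    dz = z / steps
    total = 0.0
    for i in range(steps + 1):
        # Simpson weights: 1, 4, 2, 4, ..., 2, 4, 1
        weight = 1.0 if i in (0, steps) else (4.0 if i % 2 else 2.0)
        total += weight / hubble_ratio(i * dz, omega_m)
    comoving_mpc = (C_KM_S / h0) * total * dz / 3.0
    return (1.0 + z) * comoving_mpc


for z in (0.01, 0.1, 0.5, 1.0):
    print(f"z = {z:4.2f}: d_L = {luminosity_distance(z):8.1f} Mpc")
```

At low redshift the result approaches the Hubble law d ≈ cz/H0; at higher redshift it depends on the assumed matter and dark-energy content, which is why this relation is used to measure dark energy.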

The rate of expansion of our universe is described by the “Hubble parameter”. Its current value of 73 km/s/Mpc is calculated assuming a homogeneous universe. However, Bruni and Bentivegna showed that on local scales there are wide variations, with voids expanding up to 28% faster than the average value of the Hubble parameter. This is offset by slower expansion in dense galaxy clusters. Bruni cautions, however, that they must “be careful, as this value depends on the specific coordinate system that we have used”. The US team used the same system, so agreement between the two codes does not exclude the possibility that this choice introduces an observer bias, or that a different coordinate system would lead to a different interpretation of the variation.
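To put the 28% figure in numbers: if the average Hubble parameter is 73 km/s/Mpc, a void expanding 28% faster has a local rate of about 93 km/s/Mpc. The Python sketch below applies Hubble’s law, v = Hd, to a hypothetical galaxy 10 Mpc away. It treats the fraction as a simple multiplier on the average rate, and, as Bruni warns, the underlying figure depends on the coordinate system used.

```python
H0_AVERAGE = 73.0   # km/s/Mpc, the average value quoted in the article
VOID_EXCESS = 0.28  # "up to 28% faster" in voids; coordinate-dependent


def local_hubble_rate(h_average: float, fractional_excess: float) -> float:
    """Local expansion rate for a region expanding faster (or slower, if negative) than average."""
    return h_average * (1.0 + fractional_excess)


def recession_velocity(h: float, distance_mpc: float) -> float:
    """Hubble's law v = H d, in km/s for H in km/s/Mpc and d in Mpc."""
    return h * distance_mpc


h_void = local_hubble_rate(H0_AVERAGE, VOID_EXCESS)  # about 93.4 km/s/Mpc
for label, h in (("average", H0_AVERAGE), ("void", h_void)):
    # Hypothetical galaxy at 10 Mpc, chosen only for illustration.
    print(f"{label}: H = {h:.1f} km/s/Mpc, v(10 Mpc) = {recession_velocity(h, 10.0):.0f} km/s")
```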

The codes have also been used to test a phenomenon known as “back reaction”, the idea that large-scale structure can affect the expansion of the universe around it in such a way as to masquerade as dark energy. Running their codes, both teams found, within the limitations of the simulations, that back reaction is too small to account for dark energy.
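One way to see how inhomogeneity could in principle feed back on the average expansion is that averaging does not commute with nonlinear operations. For regions with different local expansion rates H, the volume-weighted average of H² exceeds the square of the average H by the variance of H. In averaging formalisms such as Buchert’s, a variance term of this kind is one contribution to back reaction. The toy Python sketch below, with made-up region values, illustrates only that gap; it is not what either team computed.

```python
from typing import Sequence


def averaging_gap(local_rates: Sequence[float], volumes: Sequence[float]) -> float:
    """Return <H^2> - <H>^2 for regions weighted by volume.

    This equals the volume-weighted variance of the local rates, so it is
    zero only when every region expands at the same rate.
    """
    if len(local_rates) != len(volumes) or sum(volumes) <= 0:
        raise ValueError("need one volume per region and a positive total volume")
    total = sum(volumes)
    mean_h = sum(h * v for h, v in zip(local_rates, volumes)) / total
    mean_h2 = sum(h * h * v for h, v in zip(local_rates, volumes)) / total
    return mean_h2 - mean_h ** 2


# Hypothetical example: a fast void and a slow cluster of equal volume.
print(averaging_gap([90.0, 60.0], [1.0, 1.0]))  # 225.0
# A perfectly homogeneous universe has no gap.
print(averaging_gap([73.0, 73.0], [1.0, 1.0]))  # 0.0
```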

Einstein’s toolkit

Although the US team’s code has not yet been publicly released, the code developed by Bentivegna is available. It makes use of the Einstein Toolkit, a free software collection that includes a framework called Cactus. In Cactus, applications are built from modules called “thorns”, each of which performs a specific task, such as solving Einstein’s field equations or calculating gravitational waves. These modules are downloaded and then integrated into the Cactus infrastructure to create new applications.
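The thorn approach is a plug-in architecture: a small core knows nothing about the physics and simply calls routines that modules register with it. The Python sketch below is a hypothetical, heavily simplified illustration of that pattern, loosely mimicking how Cactus schedules routines in bins such as CCTK_INITIAL and CCTK_EVOL. It is not the Cactus API; real thorns are written in languages such as C, C++ or Fortran and are described to Cactus through configuration files. All class, function and module names here are invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, float]
Routine = Callable[[State], None]


class Framework:
    """Hypothetical core: runs registered routines in named schedule bins."""

    BINS = ("initial", "evolve")

    def __init__(self) -> None:
        self._schedule: Dict[str, List[Tuple[str, Routine]]] = {b: [] for b in self.BINS}

    def register(self, module_name: str, bin_name: str, routine: Routine) -> None:
        """Let a module plug a routine into one schedule bin."""
        if bin_name not in self._schedule:
            raise ValueError(f"unknown schedule bin: {bin_name}")
        self._schedule[bin_name].append((module_name, routine))

    def run(self, n_steps: int) -> State:
        """Run initial-data routines once, then evolution routines every step."""
        state: State = {}
        for _, routine in self._schedule["initial"]:
            routine(state)
        for _ in range(n_steps):
            for _, routine in self._schedule["evolve"]:
                routine(state)
        return state


# Two toy "thorns": one sets initial data, one advances a scale factor.
def toy_initial_data(state: State) -> None:
    state["a"] = 1.0  # scale factor
    state["t"] = 0.0  # step counter


def toy_expansion(state: State) -> None:
    state["a"] *= 1.01  # grow by 1% per step (illustrative, not a physical model)
    state["t"] += 1.0


core = Framework()
core.register("ToyInitialData", "initial", toy_initial_data)
core.register("ToyExpansion", "evolve", toy_expansion)
print(core.run(3))
```

Swapping in a different initial-data module changes what is simulated without touching the core or the evolution module.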

“Cactus was already able to integrate Einstein’s equations before I started working on my modifications in 2010,” says Bentivegna. “What I had to supply was a module to prepare the initial conditions for a cosmological model where space is filled with matter that is inhomogeneous on smaller scales but homogeneous on larger ones.”
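As a toy illustration of matter that is “inhomogeneous on smaller scales but homogeneous on larger ones”, the Python sketch below places a sinusoidal density perturbation on a periodic one-dimensional grid: the density varies from point to point, yet its average over any full wavelength equals the background value. The sinusoidal profile, the 1D grid and all function and parameter names are assumptions made for illustration. Real general-relativistic initial data must also satisfy Einstein’s constraint equations (the Hamiltonian and momentum constraints), which this sketch ignores entirely.

```python
import math
from typing import List


def lumpy_density(n_points: int, mean_density: float, amplitude: float, n_waves: int) -> List[float]:
    """Density rho_i = rho_bar * [1 + A sin(2 pi n_waves i / N)] on a periodic grid of N points."""
    if not 0.0 <= amplitude < 1.0:
        raise ValueError("amplitude must lie in [0, 1) so the density stays positive")
    return [
        mean_density * (1.0 + amplitude * math.sin(2.0 * math.pi * n_waves * i / n_points))
        for i in range(n_points)
    ]


def block_averages(values: List[float], block: int) -> List[float]:
    """Average over consecutive blocks of `block` points (a crude coarse-graining)."""
    if block <= 0 or len(values) % block:
        raise ValueError("block must be positive and divide the number of points")
    return [sum(values[j:j + block]) / block for j in range(0, len(values), block)]


rho = lumpy_density(n_points=64, mean_density=1.0, amplitude=0.5, n_waves=4)
print(min(rho), max(rho))       # lumpy on the grid scale
print(block_averages(rho, 16))  # each 16-point block spans exactly one wavelength
```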

Looking ahead

The US team says it will be releasing its code to the scientific community soon and reports that it performs even better than the Cactus code. However, Giblin believes that both codes are likely to be used equally in the future, since they can provide independent verification for each other. “This is important since we’re starting to be able to make predictions about actual measurements that will be made in the future and having two independent groups working with different tools is an important check,” he says.

So are the days of numerical simulations with Newtonian gravity numbered? Not necessarily, says Bruni. Although the general relativity codes are highly accurate, they require immense computing resources, so matching the level of detail of Newtonian simulations will require a lot of further code development.

“However, these general relativity simulations should provide a benchmark for Newtonian simulations,” says Bruni, “which we can then use to determine to what point the Newtonian method is accurate. They’re a huge step forward in modelling the universe as a whole.”

The teams’ work is published in Physical Review Letters (116 251301; 116 251302) and Physical Review D.